The enduring appeal of LEGO comes not from the complexity of the sets, nor the adorable minifigure versions of pop culture icons, but from the build process itself: turning a box of seemingly random pieces into a completed model. It’s a satisfying experience, and one that robots might take away from you one day, thanks to researchers at Stanford University.

LEGO’s instruction manuals are a masterclass in visually conveying an assembly process to a builder, regardless of their background, experience level, or the language they speak. Pay close attention to the required pieces and the differences between one image of the partly assembled model and the next, and you can work out where every piece needs to go before moving on to the next step. LEGO has refined and polished the design of its manuals over the years, but as easy as they are for humans to follow, machines are only just learning how to interpret these step-by-step guides.

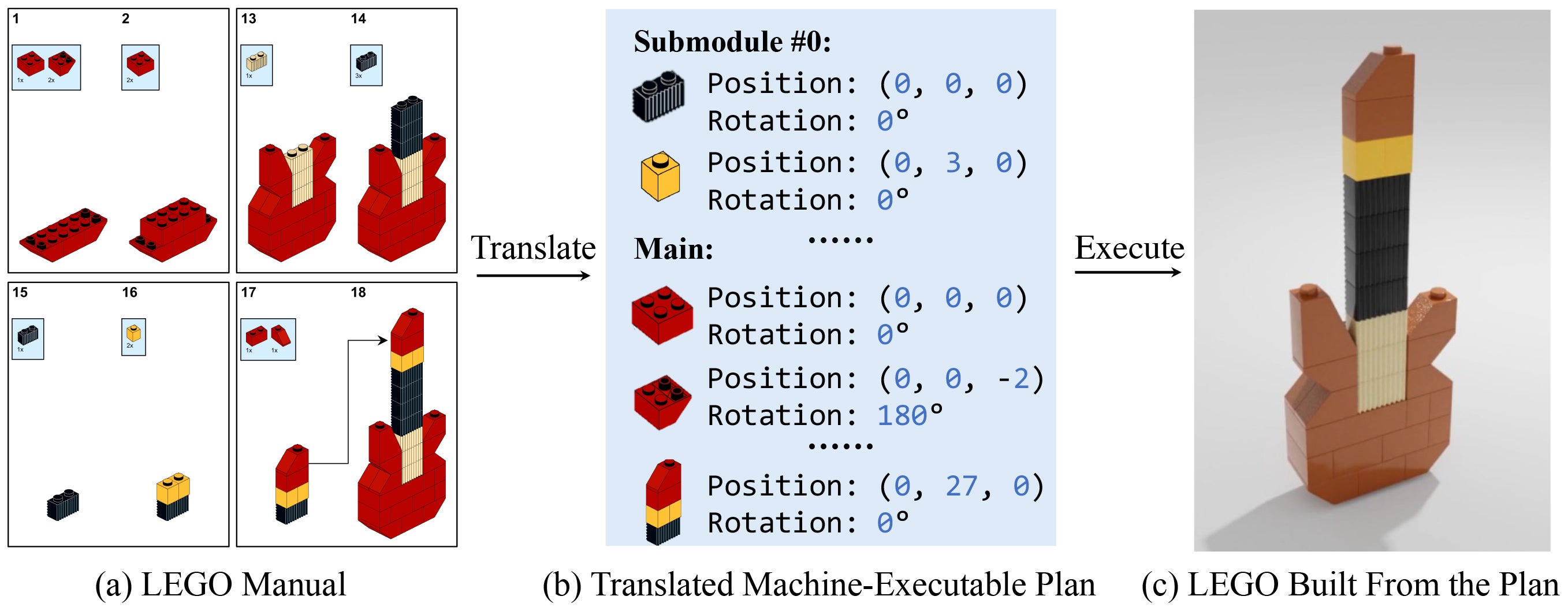

One of the biggest challenges for machines learning to build with LEGO is interpreting the two-dimensional images of 3D models in traditional printed instruction manuals (although several LEGO models can now be assembled through the company’s mobile app, which provides full 3D models of each step that can be rotated and examined from any angle). Humans can look at a picture of a LEGO brick and instantly infer its 3D structure in order to find it in a pile of bricks, but to get robots to do the same, the researchers at Stanford University had to develop a new learning-based framework they call the Manual-to-Executable-Plan Network, or MEPNet for short, as detailed in a recently published paper.

Not only does the neural network have to infer the 3D shape and structure of the individual pieces shown for each step in the manual, it also needs to interpret the overall shape of the semi-assembled model featured in every step, regardless of its orientation. Depending on where a piece needs to be added, LEGO manuals will often show the semi-assembled model from a completely different perspective than the previous step did. MEPNet has to decipher what it’s seeing and how that view corresponds to the 3D model it has built up from the previous steps.

The framework then needs to determine where each step’s new pieces fit into the previously generated 3D model by comparing each image of the semi-assembled model with the ones before it. LEGO manuals don’t use arrows to indicate part placement, and at most will use a slightly different colour to highlight where new pieces go, which may be too subtle to detect in a scanned image of a printed page. MEPNet has to figure this out on its own, but one feature unique to LEGO bricks makes the task slightly easier: the studs on top and the anti-studs on the underside that let bricks securely attach to each other. MEPNet understands the constraints on how LEGO bricks can actually be stacked and connected based on where a piece’s studs are, which helps narrow down where on the semi-assembled model a new piece can be attached.
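To make the stud constraint concrete, here is a toy Python sketch of how connection rules shrink the search space for a new piece. It is not MEPNet’s method; the `Brick` class and `candidate_placements` function are hypothetical, and the sketch assumes every brick is one layer tall, axis-aligned on a stud grid, and attached only by stacking on top of existing bricks. Under those assumptions, a placement is legal only if the new brick overlaps nothing and at least one of its anti-studs lands on a stud directly below.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Brick:
    x: int  # stud column of the brick's corner
    y: int  # stud row of the brick's corner
    z: int  # layer index; every brick is assumed to be one layer tall
    w: int  # width in studs (along x)
    d: int  # depth in studs (along y)

    def cells(self):
        """Grid cells covered by this brick; each cell carries one stud on top."""
        return {(self.x + i, self.y + j, self.z)
                for i in range(self.w) for j in range(self.d)}


def occupied(model):
    """Union of all cells covered by bricks already in the model."""
    cells = set()
    for brick in model:
        cells |= brick.cells()
    return cells


def candidate_placements(model, w, d):
    """Enumerate legal spots for a new w x d brick stacked onto the model.

    Hypothetical helper: a placement is kept only if the new brick overlaps no
    existing brick and at least one of its anti-studs sits on a stud directly
    below it. Both orientations (w x d and d x w) are tried. Attaching a brick
    underneath the model is ignored here for simplicity.
    """
    filled = occupied(model)
    if not filled:
        return []
    reach = max(w, d) - 1  # how far a brick may overhang past the model's edge
    min_x = min(c[0] for c in filled) - reach
    max_x = max(c[0] for c in filled)
    min_y = min(c[1] for c in filled) - reach
    max_y = max(c[1] for c in filled)
    max_z = max(c[2] for c in filled) + 1
    results = set()
    for bw, bd in {(w, d), (d, w)}:
        for z in range(1, max_z + 1):
            for x in range(min_x, max_x + 1):
                for y in range(min_y, max_y + 1):
                    brick = Brick(x, y, z, bw, bd)
                    cells = brick.cells()
                    if cells & filled:
                        continue  # would collide with an existing brick
                    below = {(cx, cy, cz - 1) for cx, cy, cz in cells}
                    if below & filled:  # at least one stud/anti-stud connection
                        results.add(brick)
    return sorted(results, key=lambda b: (b.z, b.x, b.y, b.w))
```

For a model made of a single 2x4 brick, `candidate_placements([Brick(0, 0, 0, 2, 4)], 2, 2)` returns 15 positions: every 2x2 spot on top, including overhanging ones that still grip at least one stud. A learned model would then only need to choose among these discrete options rather than search continuous 3D space, which is the kind of narrowing the paragraph above describes.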

So can you drop a pile of plastic bricks and a manual in front of a robot arm and expect to come back to a completed model in a few hours? Not quite yet. The goal of this research was simply to translate the 2D images of a LEGO manual into assembly steps a machine can functionally understand. Teaching a robot to physically manipulate and assemble LEGO bricks is a whole other challenge; this is just the first step. That said, we’re not sure many LEGO fans would want to hand off the actual building process to a machine.

A more interesting application of this research could be automatically converting older LEGO instruction manuals into the kind of interactive 3D build guides now offered in the LEGO mobile app. And with a better understanding of how to translate 2D images into three-dimensional brick-built structures, the framework could potentially be used to develop software that turns images of any object into instructions for building it as a LEGO model.