I was recently asked to speak at the SMX London conference on ‘Identifying Core Algorithm Impact and Key Actions to Recover From Its Search Visibility Loss’. My presentation got me thinking about the myriad potential causes of a drop in organic visibility and the juxtaposition between two constants: the ever-changing landscape of SEO and the basic principles behind how we identify and address the reasons for poor SEO performance.

At Re:signal, whenever we’re approached by clients with an organic visibility drop, we follow the same process, irrespective of the industry the client works in or the product/service they offer. Even if we have a strong suspicion about what the issue may be (core algorithm update, lost links, technical SEO issues, etc.), we go through this process to ensure that our efforts are addressing the issue at its root cause. In essence, it’s a process of elimination, analysing the available data to confirm what’s not an issue, before we embark on a strategy to address the real problem.

How do you identify the cause behind an SEO visibility drop?

To diagnose and respond to a drop in organic visibility comprehensively, I’ve broken our process down into a ‘Five-Step Problem-Solving Cycle’.

This problem-solving model is fairly common. The purpose of this article is to illustrate how it can be used to address the issue of visibility loss in an SEO context.

The five steps are:

- Identify exactly what the problem is.

- Determine the root causes of the problem.

- Find the most appropriate and speedy solution, classified by overall impact and value.

- Implement the changes.

- Re-examine / Re-evaluate and continuously improve.

So let’s get going with Step 1. Whatever you think the problem may be, the first step is defining what it actually is!

Step 1: Identify the problem

The first step in solving the problem is acknowledging there is one. While there are a whole host of problems that can arise as a consequence of a poorly conceived SEO strategy, this article will focus on organic visibility loss, an issue that sits at the heart of everything we do. As such, let’s look at the most common indicators:

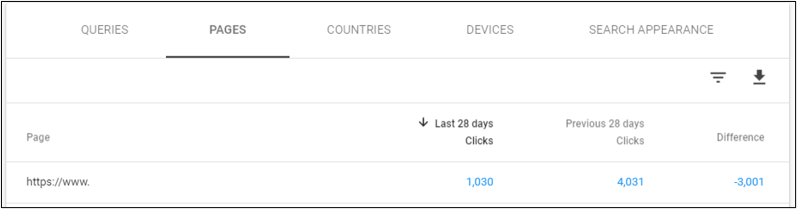

Organic traffic/conversions going down, with impressions, clicks and click-through rate showing similar trends

While further investigation to establish context and causes is worthwhile, you should at least have answers to these questions:

- Is the size of the drop an anomaly?

- Is the decline consistent?

- How do traffic and clicks over a similar duration compare pre- and post-drop?

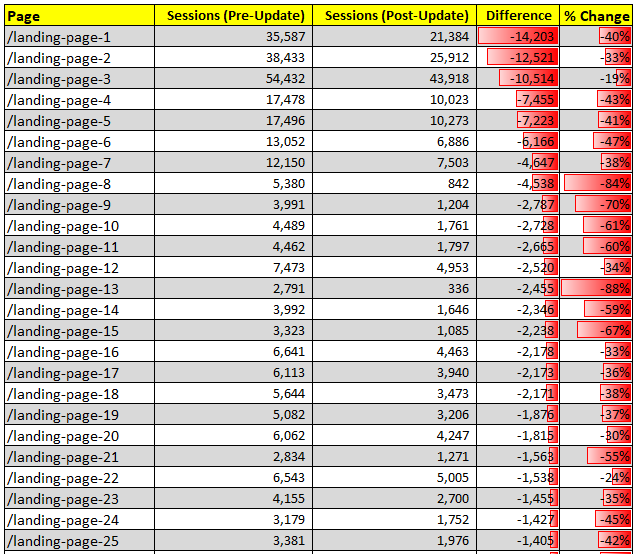

As a business, you should be aware of your core, money-driving landing pages, and it’s important to evaluate their pre- and post-drop performance. Whether you suspect the drop results from internal technical issues or a change outside the business, benchmark your traffic/revenue for pre-drop and post-drop dates. You can then compare these figures with similar timeframes to ensure the drop is not part of a seasonal change or a wider trend. Make sure you factor in enough data, i.e. not just a two- or three-day comparison. If the window covers a week or more, compare the same weekdays in an earlier period to get a more accurate sense of what’s going on.
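As a minimal sketch of that benchmark, the Python below assumes you have exported daily organic sessions (or clicks) into a `{date: value}` dictionary; the function names are hypothetical. It compares whole-week windows before and after the drop so the same weekdays line up, then repeats the comparison 364 days earlier (exactly 52 weeks, so weekdays still match) as a rough seasonality check.

```python
from datetime import date, timedelta

def window_total(daily, start, days):
    """Sum a daily metric over `days` consecutive days from `start` (missing days count as 0)."""
    return sum(daily.get(start + timedelta(days=i), 0) for i in range(days))

def pct_change(before, after):
    """Percentage change from `before` to `after`; NaN if there is no baseline."""
    return (after - before) * 100 / before if before else float("nan")

def drop_vs_last_year(daily, drop_start, weeks=2):
    """Compare the `weeks` after `drop_start` with the `weeks` before it,
    this year and 52 weeks earlier, using whole weeks so weekdays align."""
    days = 7 * weeks
    pre_start = drop_start - timedelta(days=days)
    year = timedelta(days=364)  # 52 weeks keeps Monday aligned with Monday
    this_year = pct_change(window_total(daily, pre_start, days),
                           window_total(daily, drop_start, days))
    last_year = pct_change(window_total(daily, pre_start - year, days),
                           window_total(daily, drop_start - year, days))
    return this_year, last_year
```

If `this_year` shows a steep fall while `last_year` is roughly flat, seasonality is an unlikely explanation; if both fall by a similar amount, you may simply be looking at a seasonal dip.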

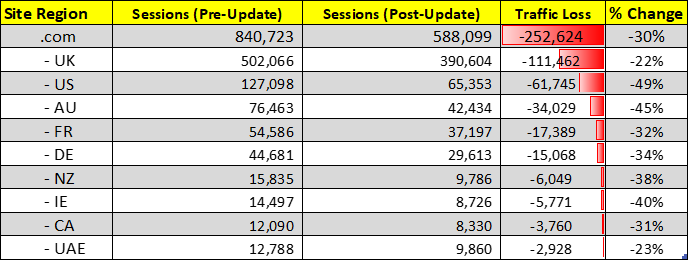

If your business operates in multiple markets, split the data by market/region: the more granular you go, the easier it is to see where the impact has landed.

Pro tip: Want to save time? Read Aleyda Solis’s ‘Analyzing A Recent Google Update Impact using Google Data Studio + Search Console’.
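Splitting the same pre/post comparison by market can be sketched as follows. It assumes rows of `(market, period, sessions)` where `period` is `"pre"` or `"post"`; this row shape and the function name are illustrative and not tied to any particular analytics export.

```python
from collections import defaultdict

def change_by_market(rows):
    """Aggregate sessions per market and period, then return
    {market: (pre, post, pct_change)} ordered by largest absolute loss first."""
    totals = defaultdict(lambda: {"pre": 0, "post": 0})
    for market, period, sessions in rows:
        totals[market][period] += sessions
    result = {}
    for market, t in totals.items():
        pct = (t["post"] - t["pre"]) * 100 / t["pre"] if t["pre"] else float("nan")
        result[market] = (t["pre"], t["post"], pct)
    # Sort ascending by (post - pre), so the biggest loss in sessions comes first
    return dict(sorted(result.items(), key=lambda kv: kv[1][1] - kv[1][0]))
```

Sorting by absolute sessions lost, rather than by percentage, stops a small market with a dramatic percentage swing from drowning out the market where most of the traffic actually disappeared.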

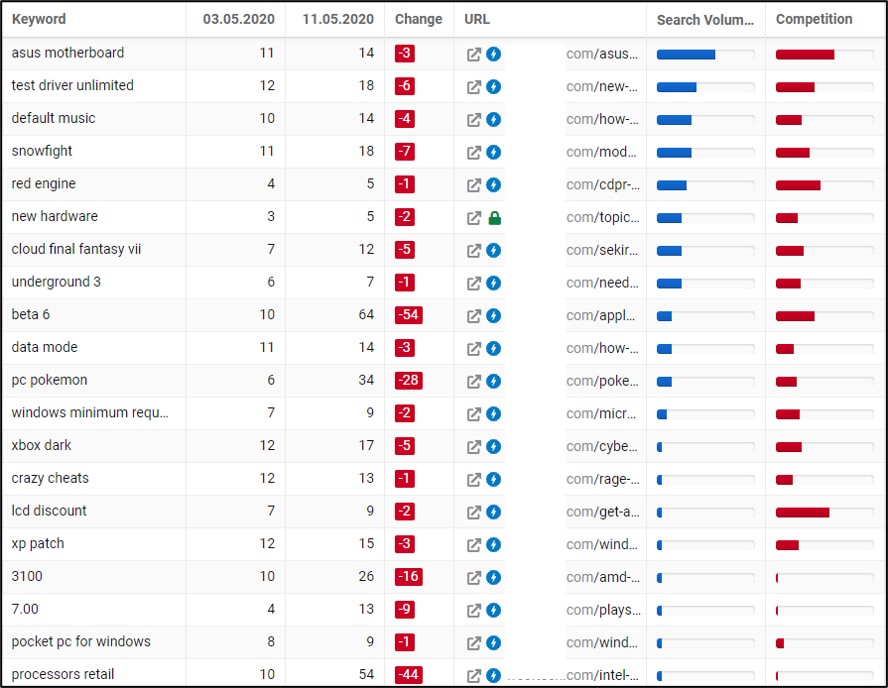

Important keywords/head terms are dropping in ranking

If your money terms/head terms are dropping, then you’ve got a problem. Compare your keyword data pre- and post-drop with a similar earlier period to measure the overall rankings impact and to see whether the drop is an anomaly or part of a wider trend. An anomaly suggests the cause is either an internal change to your site or a change in the external landscape, e.g. a Google core algorithm update.
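A minimal sketch of the keyword comparison, assuming you can export positions for your head terms from your rank tracker into `{keyword: position}` dictionaries for the two periods. Treating a keyword that has fallen out of the tracked results as position 101 is an arbitrary assumption you may want to change.

```python
def rank_changes(pre, post, not_ranking=101):
    """Return {keyword: position change}, biggest drop first.
    A positive value means the keyword moved further down the SERP.
    Keywords absent from one period are treated as position `not_ranking`."""
    changes = {}
    for keyword in set(pre) | set(post):
        before = pre.get(keyword, not_ranking)
        after = post.get(keyword, not_ranking)
        changes[keyword] = after - before
    return dict(sorted(changes.items(), key=lambda kv: kv[1], reverse=True))
```

Running the same function over an earlier pair of periods gives you a baseline: if your head terms routinely move by a couple of positions, a six-position fall across most of them is the kind of anomaly worth investigating.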

Step 2: Determine the cause

If Step 1 is about identifying a pattern of negative changes, then Step 2 is about identifying the causes behind that impact. To do this, we need to carefully rule out the potential issues that could have caused the negative changes and see what we are left with. The issues you might face can be broadly broken down into two types: internal factors within your control and external factors beyond it.

Factors that are in your control

Internal actions and changes that result in a drop are easier to respond to than external factors. It’s within your power to fix the issue and you should aim to do so as quickly as possible. Broadly, internal issues fall into the categories below:

Technical/structural/URL changes to the site

Let’s start with the most obvious. I can’t stress this enough: small changes implemented by mistake, development issues you’re not aware of and similar slips can have a major impact.

Some common issues can stem from:

- URL structure changes

- Faulty canonicals (e.g. staging URLs as canonicals in production)

- Incorrectly set up hreflang tags

- Misuse of the GSC removals tool

- Blocked crawlers in robots.txt

- New settings forced by the CMS

- Pages/site set to noindex

- HTML structure changes

- Hosting issues

- Faulty redirects or loops

This list is by no means exhaustive but it’s a good place to start.
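Several items on this list can be spot-checked programmatically. The sketch below uses only Python’s standard library (`urllib.robotparser` and `html.parser`) and assumes you have already fetched a page’s HTML and the site’s robots.txt as strings; `audit_page` and its `expected_host` parameter are hypothetical names, and canonicals are assumed to be absolute URLs. It covers just three checks: robots.txt blocking, a `noindex` meta robots tag, and a canonical pointing at a different host (such as a staging domain).

```python
from html.parser import HTMLParser
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

class HeadSignals(HTMLParser):
    """Collects meta robots directives and the rel=canonical href from HTML."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots.append((attrs.get("content") or "").lower())
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")

def audit_page(url, html, robots_txt, expected_host, user_agent="Googlebot"):
    """Return a list of human-readable issues found for one URL."""
    issues = []
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        issues.append("blocked by robots.txt")
    signals = HeadSignals()
    signals.feed(html)
    if any("noindex" in directives for directives in signals.robots):
        issues.append("meta robots noindex")
    if signals.canonical:
        canonical_host = urlparse(signals.canonical).hostname
        if canonical_host != expected_host:
            issues.append(f"canonical points to {canonical_host}")
    return issues
```

Python’s robots.txt parser does simple prefix matching and does not implement Google’s `*` and `$` wildcard extensions, and this sketch ignores `X-Robots-Tag` headers, so treat its verdict as a first pass rather than a full audit.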

If you identify changes that you think have caused a drop, reverse them, then follow your best-practice guide when reimplementing them so they don’t harm site performance.

Website/CMS Migration gone wrong

Sometimes a CMS migration, website migration or platform change damages the code or alters the redirects because a proper migration strategy wasn’t followed. This can affect a section of the site or the site in its entirety.

If this is the case, take a step back and fix the issue as quickly as possible. In most cases, a quick fix will almost guarantee a return to your normal visibility; if the fix takes longer, it could take a while to see positive results.
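During or after a migration, a quick sanity check of the redirect map can catch loops, chains and redirects that land on dead pages. This sketch assumes you have the redirect rules as a `{source: target}` dictionary (for example, parsed from your server config or a crawl) and a set of URLs known to return 200; both inputs, the function name and the `max_hops` limit are assumptions.

```python
def check_redirects(redirects, live_urls, max_hops=5):
    """Follow each redirect in `redirects` ({source: target}) and report
    loops, chains of more than one hop, and chains ending on a non-live URL."""
    report = {}
    for start in redirects:
        path = [start]
        url = start
        while url in redirects:
            url = redirects[url]
            if url in path:
                report[start] = "loop"
                break
            path.append(url)
            if len(path) - 1 > max_hops:
                report[start] = "too many hops"
                break
        else:
            # Loop ended normally: `url` is the final destination
            hops = len(path) - 1
            if url not in live_urls:
                report[start] = "ends on non-live URL"
            elif hops > 1:
                report[start] = f"chain of {hops} hops"
    return report
```

Old URLs that appear neither in the redirect map nor in the live set can be found with a simple set difference, e.g. `old_urls - set(redirects) - live_urls`.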

Pro tip: Read Aleyda Solis’s ‘Recovering from a faulty web site migration’ (it’s an old read but still valid).

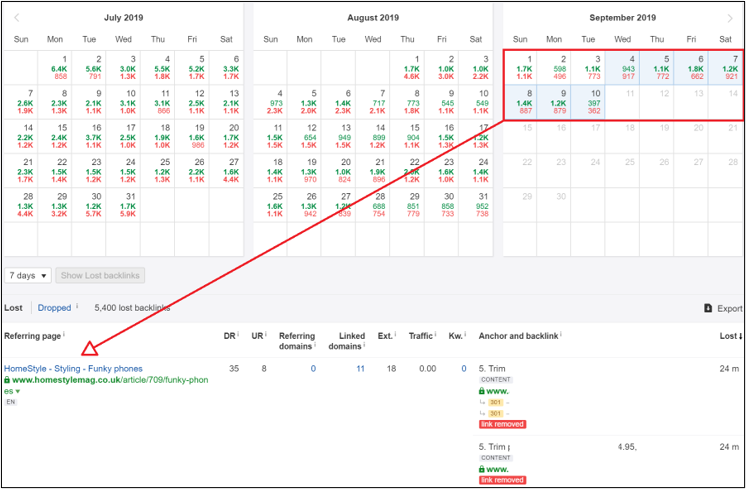

Loss of backlinks

Losing good backlinks in large numbers can hurt rankings, and that impact can be felt as soon as Google recrawls the linking pages, so if you lose a lot of links, it needs to be addressed. You need to:

- Identify the links lost

- Attempt to regain the links if possible

- Build replacement links

While lost links can result from changes on the linking site, keep in mind that they could also be caused by technical issues in your own site structure, such as a URL or folder change with no redirect in place.
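To separate those two causes, you can cross-reference backlink exports against your own live URLs. The sketch assumes two exports from your backlink tool, normalised into sets of `(source_url, target_url)` pairs, plus a set of URLs on your site that currently resolve; the function name and data shapes are hypothetical.

```python
def classify_lost_links(before, after, live_targets):
    """Split previously seen backlinks into two groups:
    broken_target: the target URL on your site no longer resolves, so the fix
    is on your side (e.g. a 301 redirect from the old URL);
    removed: the target is fine but the link has disappeared, which points to
    a change on the linking site (a candidate for outreach)."""
    broken_target, removed = set(), set()
    for source, target in before:
        if target not in live_targets:
            broken_target.add((source, target))
        elif (source, target) not in after:
            removed.add((source, target))
    return broken_target, removed
```

The first group can usually be recovered quickly with redirects; only the second needs the "attempt to regain" outreach step.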

Factors that are beyond your control

On the other side of the coin are issues that your business can certainly address but whose root cause is external, e.g. changes handed down by Google or a shift in the competitor landscape. The most common external causes of a drop in organic visibility are:

Are the competitors upping their overall game?

Firstly, it’s worth considering whether your drop can be attributed to proactivity on your competitors’ part. They may well have upped their game, putting them in serious contention with you for the same market share.

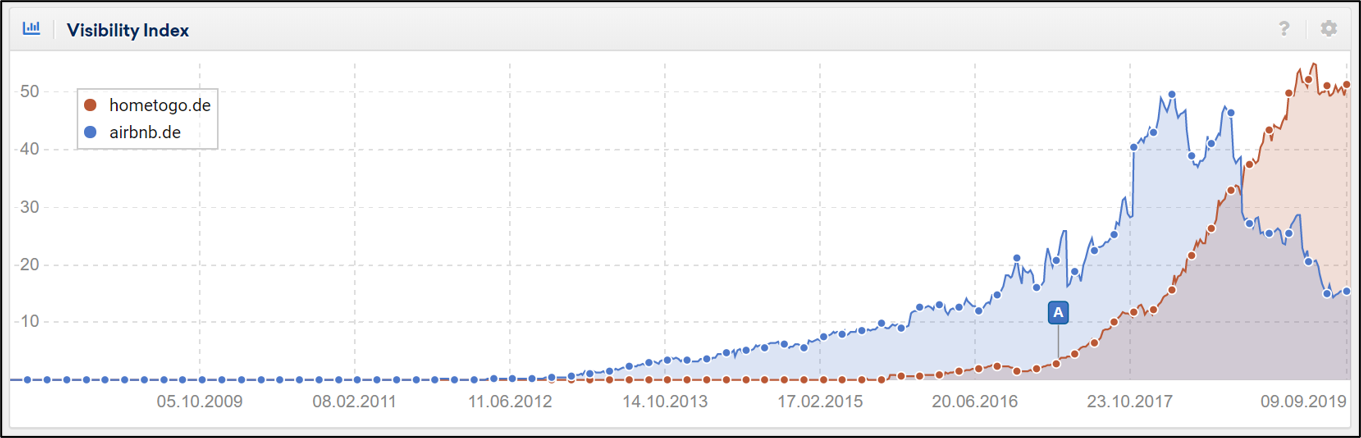

While this issue depends too heavily on the context of your business to address here, it’s important to realise these are situations we can learn and evolve from. This case study of how Hometogo ATE Airbnb’s German market share with EAT is a great example of how companies can respond to this problem.

Manual Link Penalties

Although Google has become pretty good at identifying, devaluing or ignoring low-quality backlinks, and tends not to send out many manual action notifications these days, you may have been issued a Manual Link Penalty. Check the Manual actions report in Google Search Console to see whether you’ve received one.

If so, you’ve got a serious problem, but do not despair. To recover from a link penalty you will need to implement a well-researched cleanup strategy, which merits a blog post in itself! The good news is that it’s doable; we’ve had clients recover from penalties through a dedicated, strategic effort to address the punishable offence.

If there is no Manual Link Penalty, you’re safe, but it’s still worth cleaning out your link profile and disavowing links that are a potential risk/threat. You will need a tool like Link Research Tools, Kerboo, or SEMRush Link Analyzer to aid in this activity, so make sure you have access.

There are studies that show disavowing links has zero impact and studies that show it’s very effective. I’d always propose playing it safe: it’s better to be risk-averse and use Google’s disavow links tool, which offers an additional level of support (it hasn’t been discontinued yet!).

The key actions for cleaning up your links are:

- Comprehensive Link Audit

Utilise all data sources, e.g. Search Console, Majestic, Ahrefs, Moz, etc.

- Link Spam Identification

Use link research tools like Kerboo or SEMRush. (While these tools help identify spammy links based on predefined metrics, it’s important to review the links manually to ensure you don’t end up disavowing a valid link.)

- Disavowing the link spam

Do this with extreme caution to ensure that only links/domains that you are absolutely sure are spam/irrelevant or go against Google’s guidelines are disavowed.
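Google’s disavow file is a plain-text file with one entry per line: either a full URL or a `domain:` prefix followed by a domain, with lines starting `#` treated as comments. The sketch below only formats lists you have already reviewed manually; it does not decide what is spam, and `build_disavow` is a hypothetical helper.

```python
from urllib.parse import urlparse

def build_disavow(domains, urls, note=None):
    """Format manually reviewed domains and URLs as disavow-file text.
    URLs whose exact hostname is already disavowed in full are skipped;
    URLs on other hosts (including subdomains) are kept as separate lines."""
    domains = set(domains)
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{d}" for d in sorted(domains)]
    for url in sorted(set(urls)):
        if urlparse(url).hostname in domains:
            continue  # already covered by a domain: entry
        lines.append(url)
    return "\n".join(lines) + "\n"
```

Keeping the output sorted and commented makes it easier to diff successive versions of the file and see exactly what was added at each review.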

Google Algorithm Core Update

While Google rolls out unannounced, relatively minor algorithmic updates nearly every day, it also releases broader core algorithm updates that it officially confirms. Over the last two years, these core updates have increased in frequency and are usually pre-announced on the Google Search Liaison Twitter feed. You can follow Google’s @searchliaison Twitter account, managed by Danny Sullivan himself, to keep up to date with the latest on these updates.

The impact of these updates is felt across a considerable portion of the web. However, certain types of site, specifically those in the health, finance, publishing and retail verticals, are held to a much higher standard and often see a drop or gain in visibility once an update has rolled out. This can be a very challenging situation for marketing and SEO teams, who need to be on top of their game to ensure these updates bring rewards rather than a visibility drop.

If you’ve dropped in organic visibility and you’ve ruled out technical changes, a CMS migration, a manual penalty and lost links, then you can begin to question whether the cause is an algorithmic update.

How can you be sure it’s algorithmic? Well, are you seeing changes that cause you to suspect Google is interpreting the overall content quality and structure of your site differently? Do the queries you ranked for now seem to serve a different search intent and you’ve dropped down the SERP?

If so, make sure you’ve carried out the actions in Step 1 so that the data confirms the suspicion that a core algorithm update is behind the drop. You’re looking for an anomalous fall in organic visibility without an obvious internal cause or loss of links.
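One simple way to quantify ‘anomalous’ is a z-score: how many standard deviations the post-drop value sits below the baseline mean. This is a sketch under assumptions: weekly visibility (or clicks) values from a reasonably stable, non-seasonal baseline period, and a cut-off such as -3 that you would tune to your own data.

```python
from statistics import mean, stdev

def drop_z_score(baseline, latest):
    """How many standard deviations `latest` is from the baseline mean.
    Strongly negative values suggest an anomalous drop rather than normal noise."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline values")
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; use a longer window")
    return (latest - mean(baseline)) / sigma
```

A score of around -1 sits within normal week-to-week noise, whereas one below -3 is hard to explain without a genuine change, provided the baseline really is stable.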

In addition to the actions in Step 1, you will also need to establish two things:

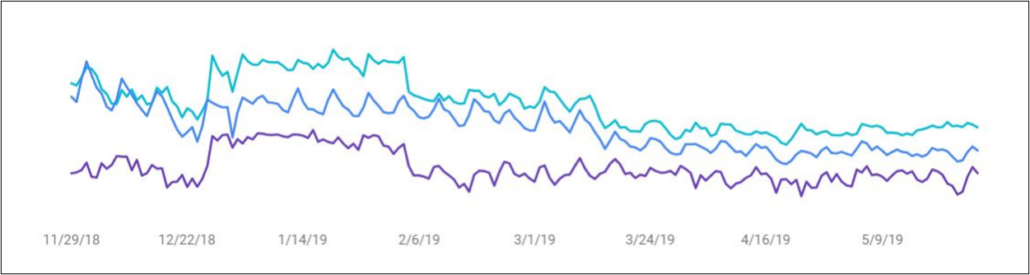

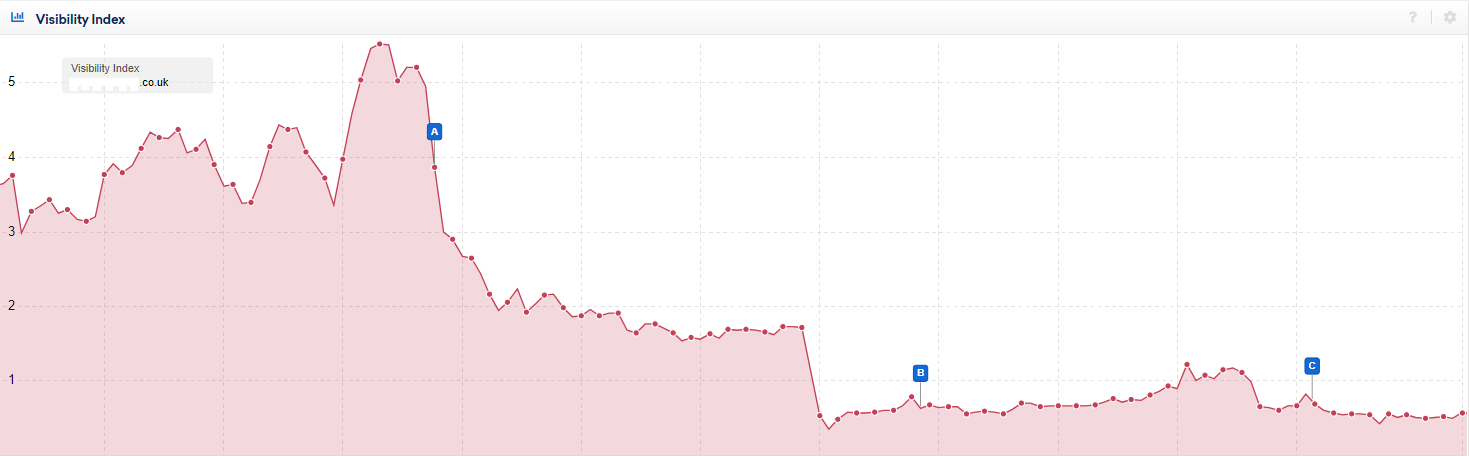

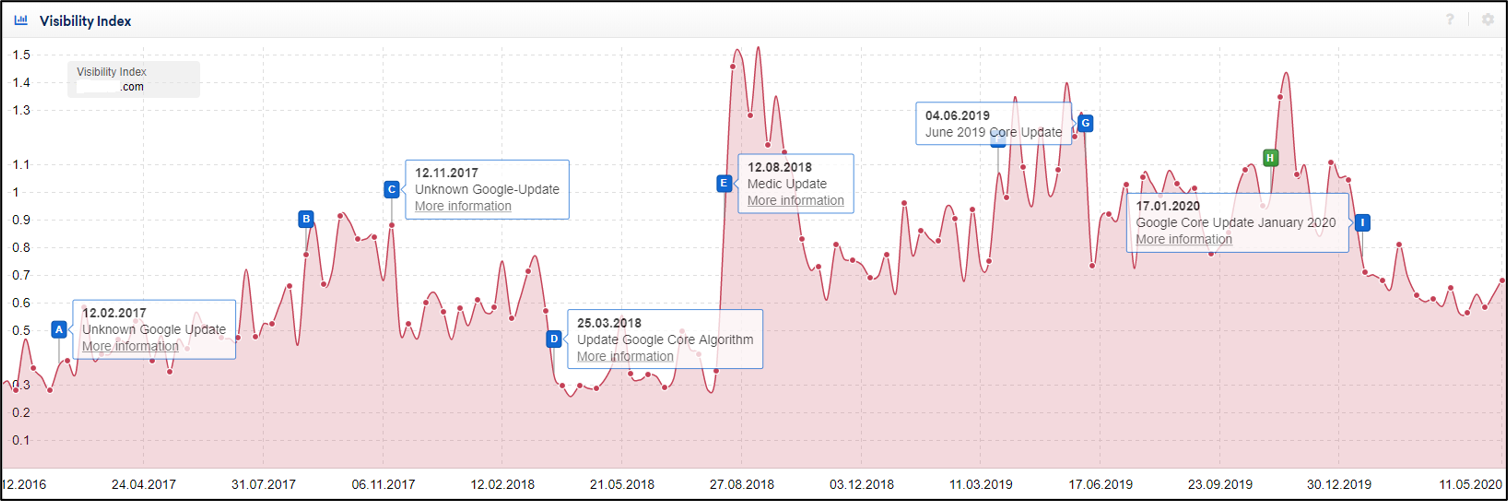

- Did search visibility drop around the algorithm update?

I normally use Sistrix to analyse daily/weekly (Smartphone/Desktop) visibility, for an overarching view of the domain, host or directory. You can do the same for your target money pages/keywords to help establish a trend of decline or growth.

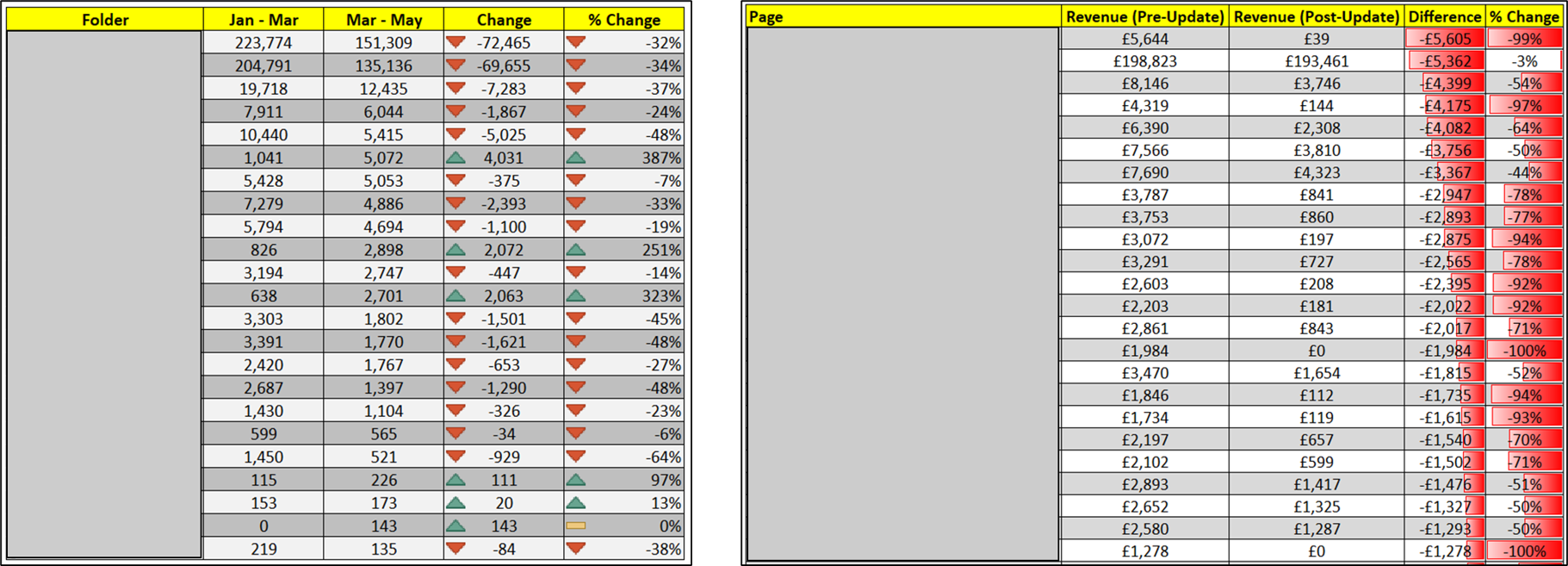

- Was there any impact on top-performing content?

As discussed in Step 1, you should check the impact on your content/site after a drop that coincides with an update. Do this for sessions/revenue across key markets and product categories. Assess the various content types for traffic/revenue/engagement impact, whether it’s the informational content produced for awareness or commercial/branded content aimed at consideration and conversion stages of the user journey.
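To check whether a drop lines up with a confirmed update, you can locate the day with the sharpest step down in daily visibility and compare it with the update dates Google has announced. The sketch assumes a list of `(date, visibility)` pairs sorted by date, for example exported from your visibility tool; the function names, the 7-day window and the 3-day tolerance are assumptions, and you would supply the update dates yourself from Google’s announcements.

```python
from datetime import date, timedelta

def biggest_drop_day(series, window=7):
    """series: list of (datetime.date, value) sorted by day. Returns (day, delta)
    for the day where the mean of the next `window` values falls furthest below
    the mean of the previous `window` values; (None, 0.0) if nothing falls."""
    values = [value for _, value in series]
    best_day, best_delta = None, 0.0
    for i in range(window, len(series) - window + 1):
        before = sum(values[i - window:i]) / window
        after = sum(values[i:i + window]) / window
        if after - before < best_delta:
            best_day, best_delta = series[i][0], after - before
    return best_day, best_delta

def nearest_update(day, update_dates, tolerance_days=3):
    """Return the update date closest to `day` if within the tolerance, else None."""
    closest = min(update_dates, key=lambda u: abs((u - day).days))
    return closest if abs((closest - day).days) <= tolerance_days else None
```

Running `biggest_drop_day` separately on each content type or directory shows whether the step down happened everywhere at once (consistent with an update) or only in one section (more likely an internal change).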

Pro tip: Informational content complements commercial content and vice versa, so ensure the two work in symbiosis. Here is a great case study by Eoghan Henn that discusses this in detail.

Step 3: Finding the best solution

Finding the best solution to recover from visibility loss should be carefully thought out to ensure it positively impacts all the fundamental areas of the site. In essence, any solution should be grounded in good SEO practices and be viewed as an opportunity to improve overall performance, as opposed to a limited algorithmic maintenance and recovery exercise or a knee-jerk response to an internal issue. This could also be your opportunity to solve multiple, long-lasting issues in the pipeline, so take your time and get it right.

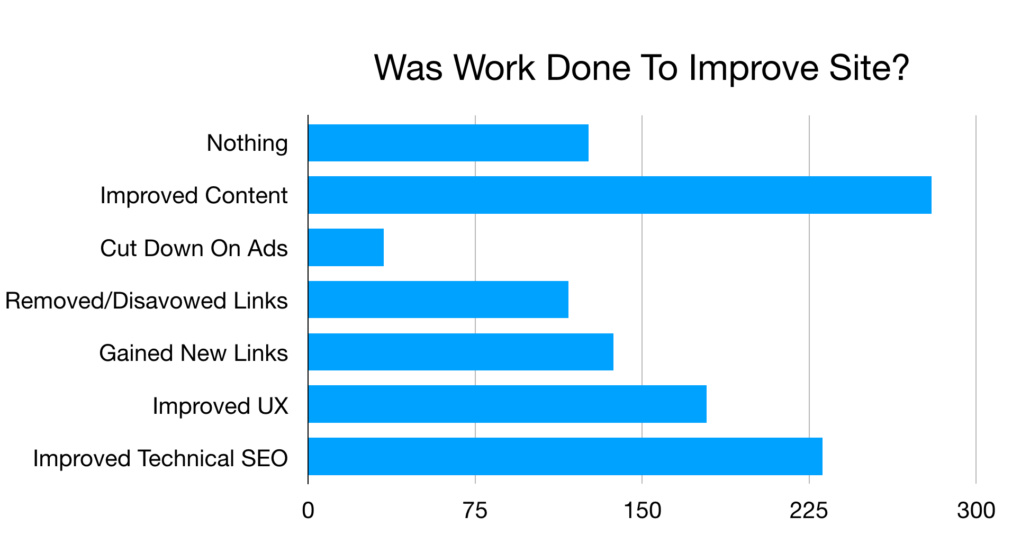

A survey by SERoundtable was carried out after the March 2019 Core Algorithm Update to identify what work sites had done to recover from previous algorithm updates.

It doesn’t come as a surprise that ‘Improved Content’, ‘Technical SEO’ and ‘User Experience’ were the top 3 actions. This just shows that whether the issue is internal or external, the core response to visibility loss focuses on good SEO practice.

Another survey carried out by SparkToro identified 26 Google Ranking Factors with opinions from 1500+ search marketers in August 2019.

This survey reinforces the importance of content relevance, quality links and keywords. It also shows that Google’s Expertise, Authoritativeness, Trustworthiness (E-A-T) model is a driving factor at the heart of any strategic response to a visibility drop. Perhaps the best way to understand E-A-T is by looking at the Quality Raters Guidelines (QRG).

Quality Raters Guidelines and E-A-T

Google has published its Quality Raters Guidelines (QRG). These are used to train the team of Search Evaluators who manually visit websites and assess their quality. The evaluators then feed back their data, which Google uses to improve its algorithm and, in turn, the quality of search results.

Expertise, Authoritativeness, Trustworthiness (E-A-T) is at the heart of the QRG. Indeed, the document mentions E-A-T elements a total of 160 times. E-A-T is a crucial factor for the ranking of all websites, but especially for ‘Your Money Your Life’ (YMYL), news and retail sites.

It’s important to recognise that the QRG only provides a framework used by humans for improving algorithms. In no way can it be classified as a bible of ranking factors authored by Google. However, the QRG offers some great insights on how Google’s Search Evaluators gauge the overall quality of a site, and by applying the QRG to your own site it’s possible to identify and address issues.

Create a value-driven strategy to address the visibility drop

By following the first three steps of the process, whether the issue is internal or external, you should be in a position to classify any issues across content, technical structure, rankings and links by their impact. You should also try to put a value on those issues, as this can be used to make a business case and acquire the necessary resources for implementing the proposed changes (as well as allowing you to prioritise the changes you’d like to make based on ROI).

As a general rule, you should prioritise actions that impact the ‘money pages’ and lead to the highest conversions, followed by pages that assist with conversions, etc.
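One way to turn that rule into a ranked backlog is to sort by page tier first, then by estimated value per day of effort. Everything here is an assumption for illustration: the `Issue` fields, the three tiers and their weights, and the value and effort estimates, which would come from your own revenue and resourcing figures.

```python
from dataclasses import dataclass

# Hypothetical tiers: money pages first, then pages that assist conversions
TIER_WEIGHT = {"money": 3, "assist": 2, "other": 1}

@dataclass
class Issue:
    name: str
    page_tier: str            # one of TIER_WEIGHT's keys
    est_monthly_value: float  # your own estimate of revenue at stake
    effort_days: float        # estimated implementation effort

def prioritise(issues):
    """Order issues by page tier, then by estimated value per day of effort."""
    return sorted(
        issues,
        key=lambda i: (TIER_WEIGHT[i.page_tier], i.est_monthly_value / i.effort_days),
        reverse=True,
    )
```

Sorting by tier first means a cheap fix on a low-value page never jumps ahead of the money pages; if you prefer a single blended score, you could multiply the tier weight into the value-per-effort ratio instead.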

Pro tip: Lily Ray has put together a great list of 10 Tips for Recovering from Google Core Algorithm Updates that is a must-read.

Step 4: Implement the changes…quickly!

In over eight years of working in SEO, the most common obstacle I’ve encountered is that recommendations either never get off the ground or are implemented a bit too late.

You can only win if you implement your recommendations quickly. All good results come from working collectively with the wider team to achieve timely execution of the recommendations. Whether it’s fixing an issue or implementing a new strategy, the race with your search competitors isn’t just about product, price or market share; it’s about speed.

This quote by Kevin Indig says it best: “SEO is an execution problem, not a knowledge problem… [The] Best SEOs have the resources and back-up to get things done. They don’t know something others don’t.”

The good news is, whether it’s an internal structure issue, a loss of links or an external core algorithm update, losses can be recovered (even if Google states otherwise). It just takes time and effort, but crucially, those efforts have to be targeted in the right place.

While the specific issue might be a focus, it’s also important that you direct your activities across all the elements of SEO in a sustained fashion, be it technical, content, links, UX, etc. Don’t fall into the trap of doing just “a little SEO”.

John-Henry Scherck says: “It’s an all or nothing commitment. Doing “a little SEO” is the same as doing none at all. It’s now an operational commitment and discipline, no longer a growth hack.”

Step 5: Re-examine and re-evaluate

Re-examining and re-evaluating is a test-and-learn process, because what works well for others may not be the best solution for you. If you treat SEO as a long-term marketing commitment, your ability to identify issues and address them will evolve alongside your wider organic search strategy. With time and effort, you’ll find the sweet spot and see great results.

This five-step cycle is a continuous process that should lie at the heart of any SEO strategy. Whether it’s used to respond to a specific issue like a visibility drop, or just to ensure good practice and effective implementation, it’s important to keep going. The aim should be continuous improvement rather than a destination, with a strong emphasis on monitoring the repeat offenders that caused the drop in the first place. One comment I received on this piece was: "…And if you have successfully found the cause for the drop you should find a way to monitor this cause / the solution. Nothing is more frustrating than getting work "destroyed" by the next deployment"
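Picking up that comment, one lightweight way to monitor a fix is to snapshot the SEO-critical attributes of key URLs and diff each new crawl (for example, after every deployment) against a known-good baseline. The snapshot format, field names and function name below are hypothetical; in practice the snapshots might come from your crawler’s export.

```python
def seo_regressions(baseline, current, fields=("status", "canonical", "robots", "title")):
    """baseline/current: {url: {field: value}} snapshots from two crawls.
    Returns a list of (url, field, old_value, new_value) for every change,
    plus a "missing" entry for URLs that dropped out of the new crawl."""
    changes = []
    for url, before in baseline.items():
        after = current.get(url)
        if after is None:
            changes.append((url, "missing", "crawled", "not crawled"))
            continue
        for field in fields:
            if before.get(field) != after.get(field):
                changes.append((url, field, before.get(field), after.get(field)))
    return changes
```

Wired into a deployment pipeline, a non-empty result for your money pages could block the release or alert the SEO team before the change has time to affect visibility.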

Part of that improvement also includes being prepared for visibility drops when they happen, not just responding to them retrospectively. For a broad overview on how to future-proof your site against a drop caused by a core algorithm update, I’d highly recommend you check out Lily Ray’s blog.

Overwhelmed? Don’t be!

While the entirety of this process may seem a little overwhelming at first, the principles are quite straightforward. The important thing is to avoid a knee-jerk reaction to a drop in organic visibility. Any such action might have no impact whatsoever or, worse, negatively affect your visibility or your offering.

For the most part, the questions I’ve outlined above should be relatively easy to answer for your site, and the reason for the drop will become apparent. Take your time to identify the issue, take your time strategising a solution, then when the time comes to implement the suggestions, you can go full steam ahead.

Note from the author:

Thanks for taking the time to read this post. I have the wider SEO community to thank for a lot of the insight it contains. As I mentioned at the start of this article, the SEO landscape is constantly evolving and there’s no unified approach. With that in mind, I’d love to hear your thoughts and comments. Please get in touch via Twitter @khushal