We find that most testers run only the most basic tests on Lambdas. One technique that has helped us discover more bugs when testing Lambdas at Qxf2 is to send batch messages to the SQS queue that triggers the Lambda. Often, when you (the tester) send batch messages and “something goes wrong”, you need to be able to triage the cause of the error. In this post, we will outline an investigation we recently undertook.

Note: Not all the quick and dirty triaging tricks here will be applicable to you. So pick and choose as it suits you.

Background:

Testers can sometimes see Lambdas as black boxes. The supposedly “serverless” technology runs on micro VMs. You can read more about it here. When you do go through the architecture, you will realize that there are many ways in which the infrastructure itself can produce errors. For example, a Firecracker microVM stays around for about 15 minutes. With concurrency, you can have multiple instances of the Lambda running at the same time. These lead to many interesting possibilities that need to be tested well.

While working with a client, one of our colleagues wanted to test whether the AWS Lambda function processes all the messages when they are sent in batches. She used the AWS SDK to test the Lambda by sending batch messages to the SQS queue that triggers the Lambda function. She noticed that some events got triggered multiple times and that the order in which the messages were sent was not preserved.

Since we cannot reveal any information about our client’s application, we decided to simulate a similar scenario on one of our own internal applications, the “Qxf2 Newsletter Automation” tool.

Why testing with Batch Messages is important:

Batch messaging is a common technique used to process multiple messages simultaneously. Testing your Lambda function with batch messages could help uncover vital issues in your workflow.

The order of the messages might not be preserved when batch messages are sent. Therefore, it is important to test whether this can cause any issues in the workflow. There can also be issues like message duplication that arise from the retry policy of the Lambda. Duplicates can increase processing time, waste resources, and produce inaccurate results, so it is essential to test for them. Furthermore, it is also necessary to ensure that all the messages in the batch are processed by the Lambda by testing it with a large number of messages in the batch. This could help identify potential issues where some important messages or records are missed by your Lambda function.
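The three concerns above (missing messages, duplicates, and ordering) can be turned into a single check. The sketch below is a hypothetical helper, not code from our test suite: `summarize_delivery` and its arguments are names we made up for illustration, and it assumes every sent message is unique and that you can collect the list of messages the Lambda actually processed (for example, from the database).

```python
from collections import Counter


def summarize_delivery(sent, processed):
    """Compare what was sent with what the Lambda actually processed."""
    sent_set = set(sent)
    counts = Counter(processed)
    # Messages that never showed up downstream
    missing = [item for item in sent if item not in counts]
    # Messages that were processed more than once (for example, after a retry)
    duplicates = sorted(item for item, seen in counts.items() if seen > 1)
    # First occurrence of each known message, in the order it was processed
    first_seen = list(dict.fromkeys(item for item in processed if item in sent_set))
    # The send order restricted to messages that did arrive
    expected = [item for item in sent if item in counts]
    return {
        "missing": missing,
        "duplicates": duplicates,
        "order_preserved": first_seen == expected,
    }
```

A test can then assert on each key separately, which makes it obvious whether a failure is a lost message, a duplicate, or only a reordering.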

Testing with Batch Messages:

As we mentioned earlier, we decided to test our Newsletter Automation application with batch messages.

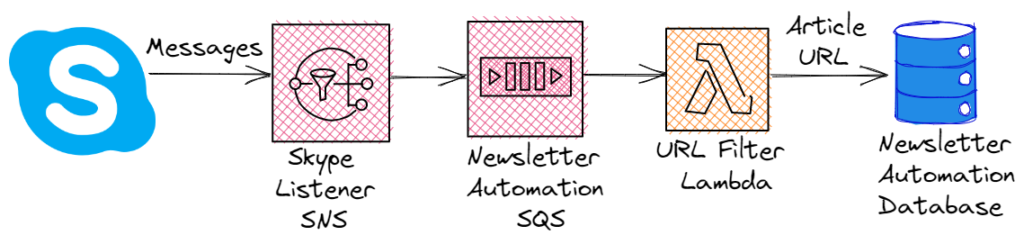

1. The Newsletter Application has a Lambda function that is triggered by an SQS queue. The queue is subscribed to an SNS topic, “Skype Listener”, which actively listens to our official Skype group and broadcasts the messages sent in the group.

2. Every time the SQS gets a message, the Lambda is triggered. The Lambda checks if the message contains a URL in it. If it does, then the URL is extracted from the message and stored in the Newsletter Automation database.

3. We wrote a test in Python, using the aws-sqs-batchlib library, to send batch messages to the SQS queue that triggers the Lambda function.

```python
import json

import aws_sqs_batchlib

# queue_url is assumed to be defined elsewhere in the test module
articles_sent = []
messages = []


def send_batch_messages():
    "Send batch messages to the SQS and verify if all messages are sent"
    # Create a list of messages to send
    message_count = 10
    for message in range(message_count):
        url = f"https://stage-newsletter-lambda-test-{message}.com"
        articles_sent.append(url)
        message_body = {"Message" : "{\"user_id\": \".cid.f9000d4f3453e385\", \"chat_id\": \"19:[email protected]\", \"msg\": \"" + url + "\"}"}
        messages.append({"Id": f'{message}', "MessageBody": json.dumps(message_body)})
    # Send the messages to the queue
    response = aws_sqs_batchlib.send_message_batch(
        QueueUrl=queue_url,
        Entries=messages,
    )
    return len(response['Successful']) == message_count
```

As you can see in the above code snippet, we use the send_message_batch() method from the aws_sqs_batchlib library to send the batch messages to the SQS queue. It requires two mandatory arguments, QueueUrl and Entries. Entries is a list of the messages that you wish to send to the queue. Each message in the list should have an Id and a MessageBody.

4. We initially tested the Lambda by passing about 50 messages in the batch. Upon running this test, we noticed that not all messages sent in the batch were processed by the Lambda.

5. We then reduced the number of messages to 10 to find a batch size that could be processed successfully. However, the Lambda still failed to process all 10 messages.

6. Hoping to find traces of this error, we went through the AWS CloudWatch logs to see if we could find the cause for the missing articles in the database. However, we couldn’t find any failures or errors recorded in the logs.
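The entry format described in step 3 (a unique Id plus a MessageBody per message) can be sketched without touching AWS at all. This is a minimal illustration under our own assumptions: `build_entries` and `chunk` are hypothetical helper names, and the `user_id` and `chat_id` values are placeholders. The SQS SendMessageBatch API accepts at most 10 entries per request, so longer lists have to be split into groups of 10; as far as we understand, aws-sqs-batchlib takes care of that splitting for you.

```python
import json


def build_entries(urls):
    """Build SQS batch entries, each with a unique Id and a JSON MessageBody."""
    entries = []
    for index, url in enumerate(urls):
        # Inner payload mimics the chat message; the field values are placeholders
        inner = json.dumps({"user_id": "test-user", "chat_id": "test-chat", "msg": url})
        # Outer envelope mimics an SNS notification delivered to the queue
        entries.append({"Id": str(index), "MessageBody": json.dumps({"Message": inner})})
    return entries


def chunk(entries, size=10):
    """Split entries into groups of at most `size` (10 is the SQS batch limit)."""
    return [entries[i:i + size] for i in range(0, len(entries), size)]
```

Building the nested JSON with `json.dumps` instead of hand-escaped strings makes it harder to get the quoting wrong when the payload changes.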

Load Testing the API endpoint with Locust:

On examining the Lambda metrics, we noticed spikes in the invocation count around the time the messages were sent. This indicated that the Lambda was getting invoked when the messages were sent, but most of these messages were not being processed. This made us suspect that it might be an issue with the API endpoint used to post the URLs to the database. So, we decided to load test the API endpoint to check whether it can handle a large number of simultaneous requests.

To do this, we used Locust, a load testing tool that is easy to set up and get started with, to write a simple load test for the endpoint.

1. We first tested the endpoint with a load of about 15 requests per second. The endpoint was able to process all the requests without any failures.

2. By this point we knew that the API was not the source of the problem. However, we decided to continue testing it by increasing the load to about 56 requests per second, because this question was going to come up eventually.

3. Interestingly enough, the API endpoint was able to handle all the requests without a single failure.
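The idea behind the load test can be shown with the standard library alone. To be clear, this is not Locust and not the test we ran: it is a stand-in sketch in which `run_load` and `send_request` are hypothetical names. It fires requests from a thread pool and counts failures, but unlike Locust it does not control the request rate per second.

```python
import concurrent.futures


def run_load(send_request, total_requests, workers=20):
    """Fire `total_requests` calls concurrently and tally the failures."""
    def attempt(index):
        try:
            # send_request returns an HTTP status code; any 2xx is a success
            return 200 <= send_request(index) < 300
        except Exception:
            # Network errors and timeouts count as failures
            return False

    with concurrent.futures.ThreadPoolExecutor(max_workers=workers) as executor:
        results = list(executor.map(attempt, range(total_requests)))
    return {"sent": total_requests, "failed": results.count(False)}
```

In a real run, `send_request` would wrap an HTTP POST to the endpoint under test and return its status code. Passing it in as an argument keeps the tallying logic testable without a network.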

Now that we had eliminated the API endpoint as a suspect, we half-heartedly decided to test if it was an issue with the Lambda concurrency. Well, not really. We were fairly certain that Lambda concurrency would not be a problem for such a simple case. But for the sake of completeness of this blog post, we are going to pretend that we suspected Lambda concurrency.

Testing Lambda Concurrency:

To verify whether it was an issue with the Lambda concurrency, we had to invoke the Lambda directly by passing the payload to it. This invocation had to happen multiple times simultaneously.

1. Therefore, we decided to use threads in Python to invoke the Lambda function concurrently.

2. To achieve this, we first wrote a function to invoke the Lambda using the boto3 library in Python.

```python
import boto3

response_data = []

# Create a Boto3 client for AWS Lambda
lambda_client = boto3.client('lambda')


# Define a function to invoke the Lambda function with a given payload
def invoke_lambda(payload):
    "Method to invoke the lambda function"
    # Define the Lambda function name
    function_name = 'staging-newsletter-url-filter'
    # Invoke the Lambda function asynchronously with the payload data
    response = lambda_client.invoke(
        FunctionName=function_name,
        Payload=payload,
        InvocationType='Event'
    )
    response_data.append(response)
```

3. We then added another test method that invokes the above function concurrently based on the payload data using threads.

```python
import concurrent.futures
import json

# invoke_lambda and response_data come from the previous snippet
articles_sent = []
payload_data = []


def test_invoke_lambda_concurrently():
    "Method to execute the function to invoke the lambda multiple times concurrently"
    for url_count in range(140, 150):
        url = f"https://lambda-batch-test{url_count}.com"
        articles_sent.append(url)
        payload_data.append(json.dumps({
            "Records": [
                {
                    "body": "{\"Message\":\"{\\\"msg\\\": \\\"" + url + "\\\", \\\"chat_id\\\": \\\"19:[email protected]\\\", \\\"user_id\\\":\\\".cid.f9000d4f3453e567\\\"}\"}"
                }
            ]
        }))
    # Use a ThreadPoolExecutor to invoke the Lambda function concurrently with multiple payloads
    with concurrent.futures.ThreadPoolExecutor() as executor:
        # Invoke the Lambda function with each payload in the payload data list
        executor.map(invoke_lambda, payload_data)
    # Every invocation should have recorded a response
    assert len(response_data) == len(payload_data), "Not all payloads were invoked"
```

4. On running this test, as expected, the Lambda was able to process all the payloads that were sent.

5. This helped us eliminate Lambda concurrency as the cause for the issue.
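One detail worth keeping in mind with `InvocationType='Event'` is that the call is asynchronous: a `StatusCode` of 202 only means Lambda accepted the event, not that the function finished processing it. The sketch below is a variation on the steps above, not the test we ran. It assumes a boto3-style client is passed in (`invoke_concurrently` is a hypothetical name) and returns the status codes instead of appending to a shared list, so the results can be asserted on directly.

```python
import concurrent.futures


def invoke_concurrently(lambda_client, function_name, payloads):
    """Invoke the function once per payload from a thread pool; return status codes."""
    def invoke(payload):
        response = lambda_client.invoke(
            FunctionName=function_name,
            Payload=payload,
            InvocationType="Event",  # asynchronous: Lambda queues the event
        )
        return response["StatusCode"]

    with concurrent.futures.ThreadPoolExecutor() as executor:
        # Consuming map() keeps payload order and re-raises any worker exception
        return list(executor.map(invoke, payloads))
```

Whether the payloads were actually processed still has to be verified downstream, for example by checking the database for the URLs that were sent.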

Now that we knew that neither the API endpoint nor Lambda concurrency was responsible for the issue, we wanted to make sure it wasn’t a problem with the SQS queue itself.

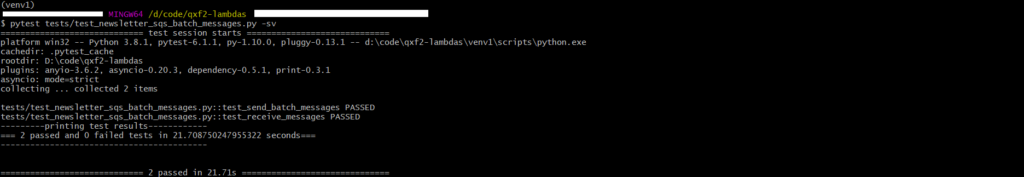

Testing the SQS:

1. In order to test the SQS, we created a dummy queue with the same configuration as the actual queue but without a Lambda trigger.

2. We then sent the batch messages to this dummy queue and polled for messages to check if all the sent messages were present in the queue.

```python
import json

import aws_sqs_batchlib

# queue_url and articles_sent are assumed to be defined elsewhere in the test module
received_articles = []


def test_receive_messages():
    "Retrieve messages from the SQS and verify if all the messages sent are retrieved"
    # Receive the messages from the queue
    res = aws_sqs_batchlib.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=100,
        WaitTimeSeconds=20,
    )
    # Extract the message body from the received messages
    received_messages = {msg['Body'] for msg in res['Messages']}
    for message in received_messages:
        json_message = json.loads(message)
        received_msg_body = json.loads(json_message['Message'])
        # Append the message content to a list
        received_articles.append(received_msg_body['msg'])
    # Check if all the messages sent are present in the received messages
    assert all(item in received_articles for item in articles_sent), "Not all articles sent were received from the SQS"
```

3. You can see in the above code snippet that we are using the receive_message() method from the aws_sqs_batchlib library to poll for the messages from the queue. Once we get the messages, we extract the message body from each one and store it in a list. We then compare this list to the list of articles that were sent to the queue.

4. The above test passed, indicating that all the articles sent were received from the dummy queue.

This test helped us confirm that SQS was not the source of the problem as well.
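If you prefer plain boto3 for this check, keep in mind that a single SQS ReceiveMessage call returns at most 10 messages, so the queue has to be polled in a loop. The following is a rough sketch under that assumption, not the code we used: `drain_queue` and its parameters are hypothetical names, and the client is passed in so the loop can be exercised with a stub.

```python
def drain_queue(sqs_client, queue_url, max_empty_polls=2, wait_seconds=5):
    """Poll until the queue looks empty and return every message body seen."""
    bodies = []
    empty_polls = 0
    while empty_polls < max_empty_polls:
        response = sqs_client.receive_message(
            QueueUrl=queue_url,
            MaxNumberOfMessages=10,        # SQS returns at most 10 per call
            WaitTimeSeconds=wait_seconds,  # long polling
        )
        messages = response.get("Messages", [])
        if not messages:
            # An empty poll is not proof the queue is empty, so allow a retry
            empty_polls += 1
            continue
        empty_polls = 0
        for message in messages:
            bodies.append(message["Body"])
            # Delete so the message is not delivered again after the visibility timeout
            sqs_client.delete_message(
                QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"]
            )
    return bodies
```

The returned bodies can then be parsed and compared against the list of sent articles, the same way the test above does.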

Finding the cause for the issue:

Having eliminated all other suspects, we were now sure the problem was with our code. So we started digging through StackOverflow for any possible hints and, yay, finally found one thread that helped us get to the core of the issue.

1. Our investigation had narrowed down to the way the SQS events were being handled by the Lambda function. The StackOverflow thread that we stumbled upon helped us confirm our suspicion.

2. The problem was that our Lambda assumed there would only ever be a single record in an event. Therefore, it was fetching only the first record from the event and ignoring the rest of the records.

```python
def get_message_contents(event):
    "Retrieve the message contents from the SQS event"
    record = event.get('Records')[0]
    message = record.get('body')
    message = json.loads(message)['Message']
    message = json.loads(message)
```

3. As you can see from the line record = event.get('Records')[0] in the above code snippet, we are only getting the first record from the event.

4. However, when a batch of messages is sent, there can be multiple records in a single event. Since the Lambda fetched only the first record, the rest of the records were not processed at all.
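A fix along these lines is to loop over every record in the event instead of indexing the first one. The sketch below is an illustration of that idea, not our production code: `get_all_message_contents` and `make_event` are hypothetical names, and it assumes each record body is an SNS envelope whose `Message` field is itself a JSON string, as in the snippet above.

```python
import json


def get_all_message_contents(event):
    """Return the parsed message contents of every record in an SQS event."""
    contents = []
    for record in event.get("Records", []):
        # Each record body is an SNS envelope whose "Message" field is JSON
        envelope = json.loads(record["body"])
        contents.append(json.loads(envelope["Message"]))
    return contents


def make_event(urls):
    """Build a fake SQS event with one record per URL (test helper)."""
    return {
        "Records": [
            {"body": json.dumps({"Message": json.dumps({"msg": url})})}
            for url in urls
        ]
    }
```

Feeding `make_event` with several URLs reproduces the batched case locally, so a unit test can catch the "first record only" bug without sending anything to SQS.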

Finally, after a long investigation we were able to debug the issue and get to the root cause of the problem!

Hire technical testers from Qxf2

Qxf2 employs highly technical testers. Our approach is to go well beyond standard ‘test automation’. From this post you must have picked up the fact that we know about the underlying architecture of AWS Lambdas. Our testers are versatile and enable teams to test better. You can reach out to us here.
