Projects need a story for local development. With some projects, the development environment is cobbled together and evolves organically. With others, a dedicated team designs and manages the dev environments.

I've noticed a common theme in the ones I like. Good development environments make me confident that my code will work in prod while giving me fast feedback. These sorts of environments are fun to code in, since I can easily get into the flow — I don't have to stop coding to wait for my code to deploy, or wait until it's merged to do a "proper" test.

Recently, I've been thinking of test usefulness and test speed as the fundamental tradeoff in development environments. It's easy to build a robust testing environment that's slow to use, or an environment that gives fast feedback but forces you to do your real testing in a higher environment, such as staging. The challenge is finding the right balance between the two.

In this post I'll explore why it's hard to have both, and what some companies have done about it.

How deployment pipelines help

Before focusing on development environments, let's take a look at deployment pipelines through the lens of test usefulness and test speed.

Most companies test code in a series of environments before deploying to production. In a well-designed deployment pipeline, you have high confidence that changes will work in prod once they reach the end of the pipeline.

In the ideal world, you'd be able to instantly tell, with 100% certainty, whether changes will work in production.

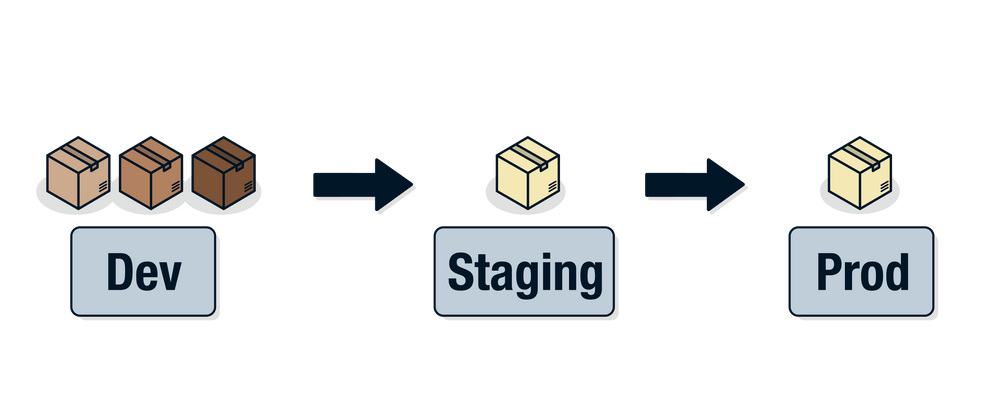

However, this is impossible to build. Instead, we create deployment pipelines where development is quick to test in but isn't as similar to production. Once things work in development, developers deploy them to staging, which is as similar to production as possible, for final testing.

Note: Some companies use different names for these environments, or have more of them, but the concept generally applies.

Staging environments: high confidence, slow feedback

The most common reason bugs slip through to production is that they're tested in environments that aren't similar to production. If you don't test in an environment that's similar to production, then you can't really know how your code will behave once it gets deployed.

Staging environments are the last place changes are tested before going live in production. They mimic production as closely as possible so that you can be confident that a change will work in production if it works in staging. The similarities between staging and prod should go deeper than just what code is running: VM configuration, load balancing, test data, etc., should be similar too.

Staging environments live in the upper left of our "test usefulness vs speed" spectrum. They give you high confidence that your code will work in prod, but they're too difficult to do active development in.

However, they hold a nugget of wisdom: the key to getting useful test results in development environments is to make them similar to production. For the rest of this post, I'll focus on dev-prod parity as a proxy for test usefulness since the former is easier to evaluate.

Let's dig a bit deeper into staging environments to see what we do (and don't) want to replicate in development environments.

How is staging similar to production?

Here are some common ways that staging environments match production.

- They run the same deployment artifacts (e.g. Docker images) for services.

- They run with the same constellation of service versions. If you test with a dependency at v2.0 in staging, it better not be v1.0 in production.

- They run on the same type of infrastructure (e.g. on a Kubernetes cluster running in AWS, where the worker VMs have the same sysctls).

- They have realistic data in databases.

- They're tested with realistic load.

- They run services at scale (e.g. with multiple replicas behind load balancers).

- If the application depends on third-party services (like Amazon S3, AWS Lambda, Stripe, or Twilio), they make calls to real instances of these dependencies rather than mocked versions.

The relative importance of these factors varies depending on the application and its architecture. But it's useful to keep in mind the factors that you deem important, because you may want your development environment to mimic production in the same way.
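One of these factors, running the same constellation of service versions, is easy to check mechanically. Below is a minimal Python sketch, assuming you can export a snapshot of each environment as a mapping from service name to image reference. The snapshot format, the `parity_report` function, and the image names are all hypothetical.

```python
from typing import Dict, List

# Hypothetical snapshot format: service name -> image reference it runs.
Snapshot = Dict[str, str]


def parity_report(staging: Snapshot, production: Snapshot) -> List[str]:
    """Describe every way staging's service versions differ from production's."""
    problems: List[str] = []
    # Union of service names, sorted so the report is stable between runs.
    for service in sorted(staging.keys() | production.keys()):
        staging_image = staging.get(service)
        production_image = production.get(service)
        if staging_image is None:
            problems.append(f"{service}: missing from staging")
        elif production_image is None:
            problems.append(f"{service}: missing from production")
        elif staging_image != production_image:
            problems.append(
                f"{service}: staging runs {staging_image}, "
                f"production runs {production_image}"
            )
    return problems


staging = {"api": "example/api:2.0", "billing": "example/billing:1.4"}
production = {
    "api": "example/api:1.0",
    "billing": "example/billing:1.4",
    "search": "example/search:3.1",
}
for problem in parity_report(staging, production):
    print(problem)
```

Here the report would flag the `api` version mismatch and the `search` service that only exists in production. The same comparison could be pointed at a development environment to see how far it has drifted.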

Why not just use staging for development?

Developing directly in the staging environment ruins the principle of having a final checkpoint before deploying to production, since it would be dirtied by in-progress code that's not ready to be released.

But putting that aside, developing via a staging environment is slow:

- The environment is shared by all developers, so testing is blocked for all developers if any broken code is deployed.

- Deploying is slow because it requires going through the full build process, even if you're just making a small change.

- Debugging is difficult since the code is running on infrastructure that developers aren't familiar with.

Development environments: a sea of tradeoffs

Development environments don't need to be perfect replicas of production to be useful. Parity with production roughly follows the Pareto principle: 20% of the differences account for 80% of the errors. Plus, deployment pipelines provide a "safety net": even if a bug slips through development, it should get caught in staging.

This lets us cut some of the features of staging that decrease productivity during development. But what should we cut?

The sweet spot for development environments is the shaded area around "ideal".

We want our development environments to be much faster to test in than staging, and we're willing to sacrifice a bit of "test usefulness" to get that.

Here are some common compromises teams make, allowing them to operate in the ideal area.

Problem: Slow preview time

Nothing breaks your flow like having to wait 10 minutes to see if your change worked. By the time you're able to poke around and see that your change didn't work, you've already forgotten what you were going to try next.

Solution: Hot reload code changes

Docker containers are great since they let you deploy the exact same image that you tested with into production. However, they're slow to build since they don't handle incremental changes very well. Doing a full image build to test code changes wastes a lot of time.

Docker volumes let you sync files into containers without restarting them. This, combined with hot reloading code, can get preview times for code changes down to seconds.
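To make the hot-reload half concrete, here's a minimal sketch of what a file watcher inside the container does once the volume has synced your edits: snapshot file modification times, poll, and trigger a reload when something changes. It's a polling approach written in Python for illustration; the function names are hypothetical, and many real tools use OS change notifications where they're available instead of polling.

```python
import os
import time
from typing import Callable, Dict, List, Optional, Set


def snapshot_mtimes(root: str) -> Dict[str, float]:
    """Map every file under root to its last-modified time."""
    mtimes: Dict[str, float] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtimes[path] = os.stat(path).st_mtime
            except FileNotFoundError:
                # Deleted between listing and stat; the next poll will notice.
                continue
    return mtimes


def changed_files(before: Dict[str, float], after: Dict[str, float]) -> Set[str]:
    """Paths that were added, modified, or removed between two snapshots."""
    added_or_modified = {p for p, mtime in after.items() if before.get(p) != mtime}
    removed = set(before) - set(after)
    return added_or_modified | removed


def watch(
    root: str,
    on_change: Callable[[List[str]], None],
    interval: float = 0.5,
    max_polls: Optional[int] = None,
) -> None:
    """Poll root and call on_change(paths) whenever files change.

    In a real setup on_change would restart the dev server or reload modules.
    max_polls=None means watch forever.
    """
    previous = snapshot_mtimes(root)
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(interval)
        current = snapshot_mtimes(root)
        changes = changed_files(previous, current)
        if changes:
            on_change(sorted(changes))
        previous = current
        polls += 1
```

For example, `watch("/app/src", lambda paths: print("reloading after", paths))` would poll a (hypothetical) synced source directory twice a second. Because nothing is rebuilt, the time from saving a file to seeing the change is roughly the poll interval plus the reload itself.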

The downside is that this workflow doesn't let you test other changes to your service. For example, if you change your package.json, your image won't get rebuilt to install the new dependencies.
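One way to live with that limitation is to decide, per change, whether syncing the file is enough or whether the image has to be rebuilt. A minimal sketch, assuming a Node-style service where only the dependency manifests and the Dockerfile require a rebuild. The `REBUILD_TRIGGERS` set and the helper names are hypothetical.

```python
from pathlib import PurePosixPath
from typing import Iterable

# Hypothetical rule set for a Node service: these files only take effect when
# the image is rebuilt (new dependencies, new build steps), so syncing them
# into a running container isn't enough.
REBUILD_TRIGGERS = {"package.json", "package-lock.json", "Dockerfile"}


def needs_rebuild(changed_paths: Iterable[str]) -> bool:
    """True if any changed file only takes effect after a full image build."""
    return any(PurePosixPath(path).name in REBUILD_TRIGGERS for path in changed_paths)


def plan(changed_paths: Iterable[str]) -> str:
    """Pick the cheapest action that still tests the change."""
    paths = list(changed_paths)
    return "rebuild image" if needs_rebuild(paths) else "sync files and hot reload"
```

A dev tool could run `plan` on each batch of changes and fall back to a full image build only when a trigger file changed, keeping the fast path for ordinary code edits.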

Solution: Have a separate development environment per developer

It's tempting to share resources in development so that there's less to maintain, and less drift between developers. But the potential for developers to step on each other's toes and block each other outweighs the conveniences, in my opinion.

The most common thing that drifts when using isolated environments is service versions. If your development environment boots dependencies via a floating tag, images can get stale without developers realizing it. One solution is to use shared versions of services that don't change often (e.g. a login service).
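A cheap guard against stale images is to flag dependency references that can move underneath you. The sketch below classifies an image reference as digest-pinned, floating (no tag, or `latest`), or tagged. It's a simplified parser for illustration, not a full implementation of Docker's image reference grammar, and the function names are hypothetical.

```python
from typing import Dict, List


def classify_image(ref: str) -> str:
    """Classify an image reference as 'digest', 'floating', or 'tag'.

    Simplified parsing for illustration; not Docker's full reference grammar.
    """
    if "@" in ref:
        # Pinned by content digest, e.g. postgres@sha256:<hex>; cannot change.
        return "digest"
    # Only look at the last path component so a registry port
    # (registry.example.com:5000/...) isn't mistaken for a tag.
    last_component = ref.rsplit("/", 1)[-1]
    if ":" not in last_component:
        return "floating"  # No tag: Docker falls back to "latest".
    tag = last_component.split(":", 1)[1]
    if tag == "latest":
        return "floating"
    # Explicit tags are better, but can still be re-pushed by the publisher.
    return "tag"


def floating_dependencies(images: Dict[str, str]) -> List[str]:
    """Names of services whose image can silently change between pulls."""
    return sorted(
        name for name, ref in images.items() if classify_image(ref) == "floating"
    )
```

Running `floating_dependencies` over the images your development environment boots gives a short list of services that are most likely to differ from one developer's machine to the next.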

Problem: Cumbersome debugging

Previewing code changes is only one part of the core development loop. If the changes don't work, you debug by getting logs, starting a debugger, and generally poking around. Too many layers of abstraction between the developer and their code make this difficult.

Solution: Use simpler tools to run services

Even if you use Kubernetes in production, you don't have to use Kubernetes in development. Docker Compose is a common alternative that's more developer-friendly since it just starts the containers on the local Docker daemon. Developers boot their dependencies with docker-compose up and get debugging information through commands like docker logs.
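The commands involved are simple enough to wrap in a small team script. This sketch only builds the argument lists for `docker compose up -d` and `docker compose logs -f`; it assumes Compose v2 (older setups use the standalone `docker-compose` binary), and the service names in the usage note are placeholders.

```python
import subprocess
from typing import List, Sequence

# Assumes Compose v2, invoked as `docker compose`. Older setups use the
# standalone `docker-compose` binary; swap this prefix if that's what you have.
COMPOSE = ["docker", "compose"]


def up_command(services: Sequence[str]) -> List[str]:
    """Boot the named dependencies in the background (-d = detached)."""
    return COMPOSE + ["up", "-d", *services]


def logs_command(service: str, follow: bool = True) -> List[str]:
    """Show one service's logs; -f keeps streaming new lines."""
    return COMPOSE + ["logs"] + (["-f"] if follow else []) + [service]


def run(argv: List[str]) -> None:
    """Run a command, raising CalledProcessError if it exits non-zero."""
    subprocess.run(argv, check=True)
```

For example, `run(up_command(["postgres", "redis"]))` would boot two hypothetical dependencies in the background, and `run(logs_command("postgres"))` would tail one of them. Everything runs on the local Docker daemon, so there's no cluster to reason about while debugging.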

However, this may not work for applications that make assumptions about the infrastructure setup. For example, applications that rely on Kubernetes operators or a service mesh may require those services to run in development as well.

Solution: Run code directly in IDE

In traditional monolithic development, many developers run their code directly from their integrated development environment (IDE). This is nice because IDEs have integrations with tools such as step-by-step debuggers and version control.

Even if you're working with containers, you can run your dependencies in containers and run just the code you're working on via an IDE. You can then point your service at your dependencies by tweaking environment variables.
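A minimal sketch of the environment-variable approach, assuming a Python service and two hypothetical dependencies (Postgres and Redis) whose containers publish their default ports on localhost. The variable names `DATABASE_URL` and `REDIS_URL` are common conventions, not something your stack necessarily uses.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    redis_url: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read dependency locations from environment variables.

    The defaults are hypothetical: they assume Postgres and Redis containers
    publishing their default ports on localhost, so the service can run
    straight from the IDE with no extra setup.
    """
    return Settings(
        database_url=env.get("DATABASE_URL", "postgresql://dev:dev@localhost:5432/app"),
        redis_url=env.get("REDIS_URL", "redis://localhost:6379/0"),
    )
```

If the same service later runs inside a container on the Compose network, only the environment changes: `DATABASE_URL` would point at the Compose service name instead of `localhost`, and the code stays the same.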

With Docker Desktop, containers can even make requests back to the host via host.docker.internal.

The downside of this approach is that your service is running in a substantially different environment, the networking is complicated, and versions of dependencies like shared libraries tend to drift.

Implementation challenges

Sometimes, you're forced to make compromises because it's just too hard to build the perfect development environment. Unfortunately, most companies need to invest in building custom tooling to solve the following problems.

- Working with non-containerized dependencies, like serverless: Some teams just point at a shared version of serverless functions, which quickly gets complicated if they write to a database.

- Too many services to run them all during development: Applications get so complex that the hardware on laptops isn't sufficient. Some companies run just a subset of services or move their development environment to the cloud.

- Development data isn't realistic: Because production data contains sensitive customer information, many development environments just use a small set of mock data for testing. Some teams set up automated jobs that back up and sanitize production data. Others point their development environments at databases in staging, which tend to be more similar to production.
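For the "too many services" problem, one way to pick a subset is to boot only the service you're working on plus everything it transitively depends on. A minimal sketch, assuming you maintain a dependency graph like the hypothetical `DEPENDENCIES` map below; the service names and the `services_to_boot` helper are illustrative.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical dependency graph: service -> services it calls directly.
DEPENDENCIES: Dict[str, List[str]] = {
    "web": ["api"],
    "api": ["auth", "postgres", "search"],
    "auth": ["postgres"],
    "search": ["elasticsearch"],
    "billing": ["postgres", "ledger"],
    "ledger": [],
    "postgres": [],
    "elasticsearch": [],
}


def services_to_boot(target: str, graph: Dict[str, List[str]]) -> Set[str]:
    """Return target plus everything it transitively depends on (breadth-first)."""
    needed = {target}
    queue = deque([target])
    while queue:
        service = queue.popleft()
        for dependency in graph.get(service, []):
            if dependency not in needed:
                needed.add(dependency)
                queue.append(dependency)
    return needed
```

In this toy graph, working on `api` means booting five services instead of eight. Compose performs a similar traversal for services declared with `depends_on` when you pass a service name to `docker compose up`.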
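For the data problem, a sanitization job typically rewrites sensitive columns before the data reaches development. A minimal sketch, assuming rows arrive as dictionaries and that `email`, `name`, and `phone` are the sensitive fields; both assumptions, along with the helper names, are hypothetical.

```python
import hashlib
from typing import Dict, Iterable, List

# Hypothetical list of columns holding customer PII.
SENSITIVE_FIELDS = {"email", "name", "phone"}


def mask(field: str, value: str, salt: str) -> str:
    """Replace a value with a deterministic, salted token.

    Deterministic means the same input always maps to the same token, so
    joins and uniqueness constraints still hold in the sanitized copy.
    """
    token = hashlib.sha256(f"{salt}:{field}:{value}".encode("utf-8")).hexdigest()[:12]
    if field == "email":
        # Keep the shape of an email so validation code still accepts it.
        return f"user-{token}@example.com"
    return f"{field}-{token}"


def sanitize_rows(rows: Iterable[Dict[str, str]], salt: str) -> List[Dict[str, str]]:
    """Return copies of rows with sensitive fields masked; others untouched."""
    return [
        {
            key: mask(key, value, salt) if key in SENSITIVE_FIELDS else value
            for key, value in row.items()
        }
        for row in rows
    ]
```

Hashing with a secret salt is pseudonymization, not full anonymization: low-entropy values like phone numbers can be brute-forced if the salt leaks, so the salt should be treated like a credential.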

Conclusion

Development environments are usually an afterthought compared to staging and production. They evolve haphazardly based on band-aid fixes. But developers spend the bulk of their time in development, so I think they should be designed consciously by making tradeoffs between test usefulness and test speed.

Unnecessary differences between development and production cause bugs to slip through to staging and production. Therefore, differences between development and production should be intentional, and designed to speed up development.

What does your ideal development environment look like? What tradeoffs does it make?

