As artificial intelligence becomes increasingly sophisticated, there are growing concerns that robots could become a threat. This danger can be avoided, according to Stuart Russell, a computer science professor at the University of California, Berkeley, if we figure out how to turn human values into programmable code.

Russell told The California Report that in the field of robotics, “moral philosophy will be a key industry sector.” He argues that as robots take on more complicated tasks, it’s imperative that we translate our morals into AI language.

For example, if a robot does chores around the house (as is expected in the coming decades), you wouldn’t want it to put the pet cat in the oven to make dinner for the hungry children. “You would want that robot preloaded with a pretty good set of values,” said Russell.

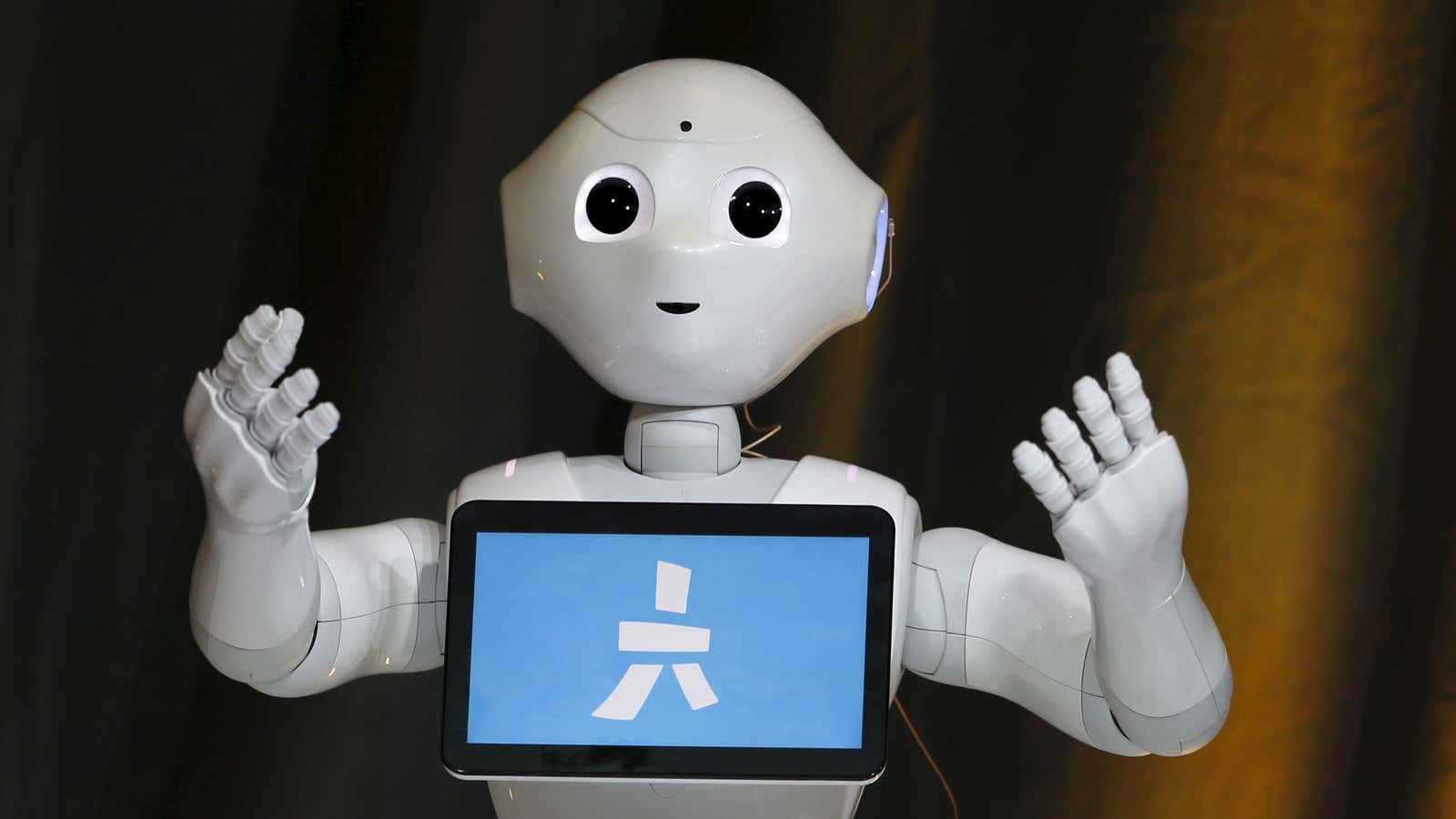

Some robots are already programmed with basic human values. Peter McOwan, computer science professor at Queen Mary University of London, tells Quartz that mobile robots have been programmed to keep a comfortable distance from humans.

“That’s a very simple example of robot behavior that could be considered a value. Obviously there are cultural differences, but if you were talking to another person and they came up close in your personal space, you would think that that’s not the kind of thing a properly brought up person would do,” he says.
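A distance-keeping rule of the kind McOwan mentions might look something like the following Python sketch. It is only an illustration: the 1.2-metre comfort distance and the function names are assumptions, not details from McOwan or from any real robot.

```python
import math

# Hypothetical comfort distance in metres. The article gives no figure,
# and McOwan notes that the appropriate value differs between cultures.
COMFORT_DISTANCE_M = 1.2


def distance(robot_xy, person_xy):
    """Straight-line distance between two (x, y) positions in metres."""
    return math.hypot(robot_xy[0] - person_xy[0], robot_xy[1] - person_xy[1])


def may_advance(robot_xy, person_xy, comfort=COMFORT_DISTANCE_M):
    """Return True if the robot is still outside the person's comfort zone."""
    return distance(robot_xy, person_xy) > comfort


# A robot five metres away may keep approaching; one under a metre away may not.
print(may_advance((0.0, 0.0), (3.0, 4.0)))
print(may_advance((0.0, 0.0), (0.5, 0.5)))
```

Making the comfort distance a parameter rather than a fixed constant is one way to allow for the cultural differences McOwan points to.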

It will be possible to create more sophisticated moral machines, if only we can find a way to set out human values as clear rules.

“That sort of value engineering would involve experts in human values, including philosophers, psychologists, ethnographers, and lay people as well,” says McOwan. “Just as you can take expertise and turn it into a rule set, you can take information from that focus group and turn it into a series of rules.”

Robots could also learn values by extracting patterns from large sets of data on human behavior.

McOwan says that robots are only dangerous if programmers are careless.

“The biggest concern with robots going against human values is if human beings don’t program them correctly because they haven’t done sufficient testing, they don’t have sufficient safeguards and they’ve produced a system that will break some kind of taboo,” he says.

One simple safeguard would be to program a robot to confirm the correct course of action with a human whenever it encounters an unusual situation.

“If the robot is unsure whether an animal is suitable for the microwave, it has the opportunity to stop, send out beeps, and ask for direction from a human. If you think about it, we do that anyway—if humans aren’t quite sure about a decision, they can go and ask somebody else,” says McOwan.
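The stop-and-ask check McOwan describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how any real robot is programmed: the `decide` function, the 0.9 confidence threshold, and the `ask_human` callback are all hypothetical.

```python
from typing import Callable

# Hypothetical threshold: below this confidence the robot defers to a person.
CONFIDENCE_THRESHOLD = 0.9


def decide(action: str, confidence: float,
           ask_human: Callable[[str], bool]) -> str:
    """Return "proceed" or "stop" for a proposed action.

    confidence is the robot's own estimate (0.0 to 1.0) that the action
    is acceptable; how that estimate is produced is outside this sketch.
    """
    if confidence >= CONFIDENCE_THRESHOLD:
        return "proceed"
    # Unusual situation: pause and ask a person for direction.
    approved = ask_human(f"Is it OK to {action}?")
    return "proceed" if approved else "stop"


# A stand-in for the human, who rejects anything involving the cat.
def cautious_owner(question: str) -> bool:
    return "cat" not in question


print(decide("put the vegetables in the oven", 0.97, cautious_owner))
print(decide("put the cat in the oven", 0.40, cautious_owner))
```

The sketch only asks for help when the robot itself is unsure, so it depends on the robot judging its own uncertainty reasonably well, which is where the testing and safeguards McOwan mentions would come in.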

The most difficult step in programming values will be deciding exactly what we believe is moral, and how to create a set of ethical rules. But if we come up with an answer, robots could be good for humanity.

“Perhaps it’ll help make people better,” said Russell.