By Bill Bice, CEO of nQ Zebraworks

· We’ve gone from peak hype to severe backlash on generative AI in record time, neither of which captures the reality.

· The best way to understand the utility and limitations of generative AI is to understand how it works.

· Generative AI is a sophisticated auto complete that is valuable for certain tasks today and will become more useful in the future.

Now, suddenly, everyone is talking about ChatGPT, thanks to OpenAI running the world’s largest beta (I might argue alpha) test of software. OpenAI got what they needed – a $10 billion investment from Microsoft.

In legal, we’ve had a lot of discussion about what this really means, but without much context on what it actually is and how it works.

What is ChatGPT?

AI is full of lovely acronyms that need some definition:

· Artificial Neural Network (ANN): mathematical model inspired by the structure of the human brain, where neurons and parameters are analogous to brain cells and synapses.

· Natural Language Processing (NLP): AI focused on “understanding” language – rather important in legal. NLP breaks words down into numbers so they can be fed into the ANN.

· Large language model (LLM): a specific kind of ANN, using deep learning to train on lots and lots of data.
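Here is a toy Python sketch that makes “breaking words down into numbers” concrete. It assumes a simple word-level vocabulary built by splitting on spaces. GPT itself uses a subword tokenizer (byte-pair encoding) with tens of thousands of tokens, so the helper names `build_vocab`, `encode` and `decode` and the sample sentences are hypothetical and purely illustrative.

```python
def build_vocab(texts):
    """Give each distinct lowercase word an integer ID, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Turn a sentence into the list of IDs a neural network can consume.

    Unknown words raise KeyError in this toy version; subword tokenizers
    avoid that by splitting unfamiliar words into smaller known pieces.
    """
    return [vocab[word] for word in text.lower().split()]


def decode(ids, vocab):
    """Turn a list of IDs back into words."""
    id_to_word = {i: w for w, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)


corpus = ["The court granted the motion", "The court denied the appeal"]
vocab = build_vocab(corpus)
ids = encode("The court denied the motion", vocab)
print(ids)                 # [0, 1, 4, 0, 3]
print(decode(ids, vocab))  # the court denied the motion
```

In a real model, each ID is then looked up in a learned table of vectors before it enters the network.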

ChatGPT is built on these core AI technologies. The “Chat” part is simply a way to interact with the GPT engine; GPT stands for Generative Pre-trained Transformer:

· Generative – it can create text.

· Pre-trained – it’s been trained on a bunch of data, in this case 570GB of stuff on the Internet as of September 2021.

· Transformer – an advancement in ANNs introduced by Google in 2017 that made them so much more useful, and is the foundation for this new generative AI.

Auto complete in Word is an earlier version of the same technology. The easiest reasonably accurate way to think of ChatGPT is as an absolutely amazing auto complete on steroids that can write entire essays instead of just suggesting the next word or phrase in your document. But it gets there in essentially the same way.

How Does ChatGPT Work?

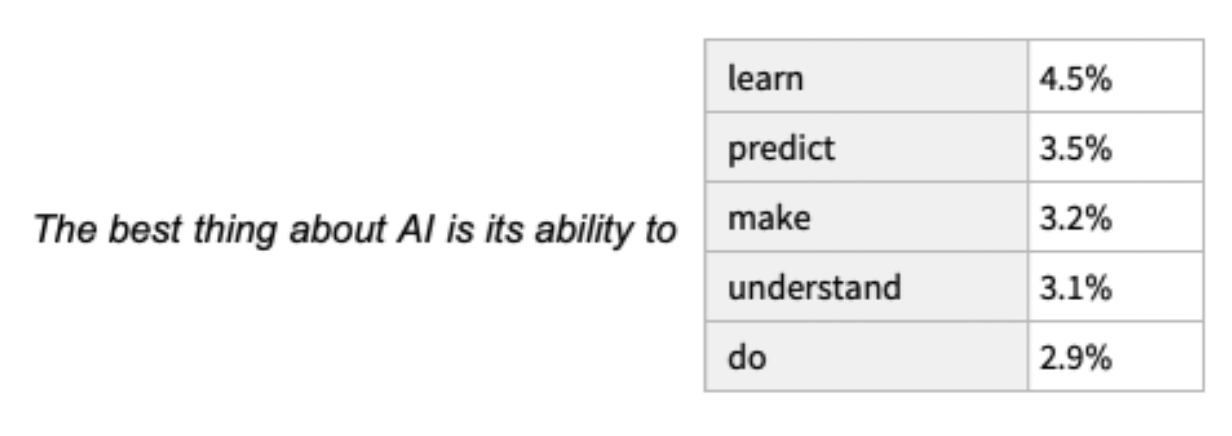

I’ll use Stephen Wolfram’s example of how GPT works. Assume you have the text “The best thing about AI is its ability to” and you want to finish the sentence. You also have the advantage of having read text that amounts to hundreds of billions of words, so you have lots of examples with which to work, and from that, you can make a list of probabilities of the most likely next word:
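Wolfram’s ranked list comes from a neural network that looks at all of the preceding text. A much cruder way to get the same kind of list, shown here only as an illustration, is to count which words follow a given word in a small body of text. The corpus and the function name `next_word_probabilities` are hypothetical, and this bigram counting is a stand-in technique, not how GPT actually computes its probabilities.

```python
from collections import Counter, defaultdict


def next_word_probabilities(corpus, context_word):
    """Rank the words that follow `context_word` in `corpus` by estimated probability.

    This is a bigram model: it looks only at the single preceding word and
    estimates P(next | previous) as count(previous, next) / count(previous, *).
    """
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            follow_counts[current][following] += 1

    counts = follow_counts[context_word]
    total = sum(counts.values())
    if total == 0:
        return []  # the context word never appears with a successor
    # most_common() lists the most frequent followers first (a ranked list).
    return [(word, count / total) for word, count in counts.most_common()]


# A tiny, made-up corpus; GPT learned from hundreds of billions of words.
corpus = [
    "The best thing about AI is its ability to learn",
    "its ability to learn from data",
    "its ability to understand language",
    "its ability to predict text",
]
ranked = next_word_probabilities(corpus, "to")
print(ranked)
```

With only four example sentences, “learn” follows “to” half the time. GPT replaces these raw counts with a model that generalizes to word sequences it has never seen.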

GPT does this repeatedly, looking at all of the text so far (your prompt plus everything it has already written) each time it predicts the next word. You’ve probably noticed, however, that ChatGPT produces different results when asked the same prompt multiple times. That’s because it doesn’t just pick the word with the highest probability: that turns out to produce very mundane and not-very-useful text. Instead, some randomness is added to each pick, controlled by a setting called “temperature”, to introduce “creativity”.

There is no creativity. There is randomness.
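A minimal sketch of that randomness, assuming the model has already produced a probability for each candidate word. The numbers in `probs` are made up, and `sample_next_word` is a hypothetical helper. At temperature 0 it always takes the top word (the “mundane” option); otherwise each probability p is reweighted to p^(1/temperature) and a word is drawn at random, so higher temperatures give less likely words more of a chance.

```python
import random


def sample_next_word(probabilities, temperature, rng):
    """Pick the next word from a {word: probability} mapping.

    temperature == 0: always return the most likely word (greedy decoding).
    temperature > 0: reweight each probability p to p ** (1 / temperature)
    and draw at random; values below 1 sharpen the distribution toward the
    top word, values above 1 flatten it so unlikely words appear more often.
    """
    if temperature == 0:
        return max(probabilities, key=probabilities.get)
    words = list(probabilities)
    weights = [probabilities[w] ** (1 / temperature) for w in words]
    # random.choices normalizes the weights itself, so they need not sum to 1.
    return rng.choices(words, weights=weights, k=1)[0]


# Made-up probabilities for the word after "...its ability to".
probs = {"learn": 0.4, "predict": 0.3, "make": 0.2, "understand": 0.1}
rng = random.Random(42)  # seeded so the run is repeatable

greedy = [sample_next_word(probs, 0, rng) for _ in range(5)]
sampled = [sample_next_word(probs, 0.8, rng) for _ in range(1000)]
print(greedy)
print({w: sampled.count(w) for w in probs})
```

The greedy calls return “learn” every time, while the sampled calls mostly return “learn” but regularly pick the other words too. That is the whole source of the variety you see when you resubmit the same prompt.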

Once you understand this, it also becomes clear why ChatGPT just makes things up (what the AI field calls “hallucinations”). Multiple sources report a GPT-3.5 hallucination rate of 15%-20%, and OpenAI says GPT-4 scores 40% higher than GPT-3.5 on its internal factuality evaluations.

The question then becomes: why is GPT so much better than previous LLMs? It’s built on the “Transformer” breakthrough made by Google in 2017, and it puts the “large” in LLM. It was trained on 570GB of data, or about 300 billion tokens (words and pieces of words), and has 175 billion parameters, which are easiest to think of as synapses. That is large for an ANN, but roughly 570 times fewer than the 100 trillion synapses in the human brain (GPT-4 was rumored to have 100 trillion parameters, but OpenAI’s Sam Altman said that’s “complete BS”).

Generative AI is “Narrow AI”

When people talk about AI replacing lawyers or Skynet destroying the world (same thing for law firms), they’re referring to “broad AI” or “artificial general intelligence”. But LLMs like GPT are not going to get us there:

“There are claims that by scaling out those [LLM] systems we will reach human level intelligence. My opinion on this is that it is completely false.”

– Yann LeCun, NYU professor and Meta’s chief AI scientist

Because ChatGPT is quite good at mimicking the output of humans, it’s easy to think there is something deeper going on:

“Anyone who attributes understanding or consciousness to LLMs is a victim of the Eliza effect named after the 1960s chatbot created by Joseph Weizenbaum that, simple as it was, still fooled people into believing it understood them.”

– Melanie Mitchell and David C. Krakauer, Santa Fe Institute

Although we don’t fully understand why GPT works so well, it’s a huge leap of faith to say that it’s something so magical that we’re on the verge of human-level intelligence. It’s much more likely we just don’t fully understand how pattern-based human language is – the one thing this technology is really good at is identifying patterns.

“There’s no consciousness, sentience or mind. Just numbers in and numbers out.”

– Uwais Iqbal, AI data scientist focused on legal at Simplexico

In my mind, knowing how ChatGPT works – one word at a time – makes the results even more astonishing. But with that come some real challenges.

Challenges in Legal

There are some unique challenges with generative AI in legal:

· We’re in a precedent-based profession, talking about a tool that, at least today, can’t cite how it got to its answers. The recently adopted ABA Resolution 604 calls for “traceability” when using AI.

· The answers themselves can be problematic. In addition to just making things up, ChatGPT is based on a wide swath of what humans have written, which can be either just wrong or difficult to interpret. The TruthfulQA benchmark is a set of 817 questions designed to be a little tricky around common misconceptions, yet humans get 94% right. GPT-3.5 scores 48% while GPT-4 improves to almost 60%. Being right 60% of the time on slightly difficult questions is not great. Note: legal can be more than just slightly difficult.

· The privacy concerns around proprietary information in ChatGPT are well known, and even with OpenAI’s new option to turn off chat history, OpenAI still retains your conversations for 30 days.

· Precision is a cornerstone of law. Many existing AI tools, like contract review, aid that precision; generative AI can easily obscure it.

· Many legal issues around the development and use of generative AI are yet to be resolved. “LLM” could end up standing for “Large Libel Models”.

What’s Next?

“We are in 1L right now for legal AI. But don’t forget that 1Ls being useless in actual practice doesn’t mean they will stay that way with training.”

– David Wang, Chief Innovation Officer at Wilson Sonsini

These tools are going to keep getting better. In the immediate future:

· OpenAI and Microsoft are both working on business versions of ChatGPT that should do a better job addressing client confidentiality. Legal tech vendor tools that leverage GPT, properly implemented, don’t have the same client confidentiality issues.

· Better data management will result in better answers. ChatGPT has the same problem as any database: garbage in, garbage out.

Down the road, the guardrails around generative AI will improve dramatically:

· Generative AI will fact-check itself to reduce hallucinations. GPT makes up quotes, for example, which are easy to verify against their sources. It also makes up citations to non-existent websites, and that can be programmed out.

· Governance will create rules and guidelines around the use of generative AI, implementing security and change management while protecting privacy and IP.

“LLMs simply aren’t sufficiently accurate for use in organizations without a strong governance system that includes a precision data management and disciplined data provenance.”

– Mark Montgomery, CEO of KYield

· Integrating other tools with GPT: for example, GPT is not very good at math or spatial relationships, or really anything that requires understanding the world. But integrated with Wolfram Alpha, a platform designed for those kinds of problems, it suddenly becomes much “smarter”.
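A minimal sketch of the tool-integration idea, under loud assumptions: the router simply tries to parse the query as plain arithmetic and, if that works, computes the answer deterministically; otherwise it hands the query to `call_language_model`, a hypothetical stub standing in for a real LLM API. Real integrations such as the Wolfram plugin for ChatGPT work differently (the model itself decides when to call the tool), so treat the routing rule and all names here as illustrative only.

```python
import ast
import operator

# Operators the hypothetical calculator tool is allowed to evaluate.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def _evaluate(node):
    """Evaluate a parsed arithmetic expression, rejecting any other syntax."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
        return _OPERATORS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPERATORS:
        return _OPERATORS[type(node.op)](_evaluate(node.operand))
    raise ValueError("not plain arithmetic")


def calculator_tool(expression):
    """Deterministic tool: computes the answer instead of predicting words."""
    return _evaluate(ast.parse(expression, mode="eval"))


def call_language_model(prompt):
    """Hypothetical stand-in for a real LLM API call."""
    return f"[model-generated text for: {prompt}]"


def answer(query):
    """Route plain arithmetic to the calculator and everything else to the model."""
    try:
        return calculator_tool(query)
    except (SyntaxError, ValueError):
        return call_language_model(query)


print(answer("(250 - 70) * 3"))
print(answer("Summarize the indemnification clause"))
```

Walking a whitelist of syntax-tree nodes, rather than calling Python’s `eval`, keeps the calculator from executing arbitrary code that happens to be typed into a query.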

“I think we’re at the end of the era where it’s going to be these, like, giant, giant models. We’ll make them better in other ways.”

– Sam Altman, OpenAI CEO

GPT will become an ingredient in more complete solutions that are used by attorneys.

The hype surrounding generative AI overplays the impact today, but paying too much attention to the backlash risks ignoring a core technology that has transformational potential. It reminds me of the famous quote from Bill Gates that has played out repeatedly:

“We always overestimate the change that will occur in the next two years and underestimate the change that will occur in the next ten. Don’t let yourself be lulled into inaction.”

– Bill Gates

Bill Bice is the CEO at nQ Zebraworks, which is tackling the challenges created by Work From Anywhere. nQzw Queues is the workflow engine that integrates with the core systems in law firms, powering more than a third of the 250 largest law firms in the United States and 5 of the top 10 firms globally.

We never charge for guest posts – they appear here purely on merit. To submit a guest post for consideration please contact caroline@legaltechnology.com