One of the most critical areas for the health of any test automation strategy is the long-term maintenance of your testing code. Like any other development code used throughout your application, building maintainable tests will keep your test suite humming along smoothly while helping developers and testers correct any future issues quickly.

Unfortunately, maintenance is an often-forgotten part of test automation, at least in my experience. It's not very common to see startups and small companies with a well-maintained test suite. Typically, their tests are cobbled together by less-experienced developers and testers in their spare time—which is already scarce enough as it is. Regardless of the root cause, I've found that when a company has issues with the effectiveness of their test automation, it's often due to the lack of a maintainable test suite.

The good news is that most automated test suites in this condition don't need sweeping changes. In many cases, an accumulation of minor issues is what makes the test suite difficult to maintain. These issues look insignificant on the surface but have profound ramifications for long-term maintenance.

This article discusses a few of these minor issues I encountered recently and the slight adjustments I recommended to the team to improve the maintainability of their test suite. Although most of these changes look inconsequential, they can significantly improve a test suite's maintainability as the codebase and the team grow.

Issue: Writing unclear or ineffective test case descriptions

Something often overlooked with writing automated test cases is the description of the test. Most testing libraries and frameworks require a test description, which helps teams understand the test and the underlying implementation. These descriptions also appear when running the test suite since testing tools use them as part of the reports for the test run results. I'm a strong advocate of writing clear test descriptions, even if they're long or require a bit of extra thought to get right.

The words you use to label a test can help in many ways. They serve as documentation—an area that small teams and startups tend to skip. When a new team member joins your team or a developer has to dig into a section of the code they haven't explored, the test suite can guide them on how specific functions work. Without clear descriptions, you're missing a valuable opportunity to expand the knowledge of the underlying codebase to current and future team members.

Clear test descriptions also assist with debugging. When you get an error during a test run, the report generated by your testing tool of choice will print out which tests failed. This information helps isolate the test scenarios that failed. More importantly, it gives the developer or tester responsible for fixing the problem additional context on whether the underlying code has an issue or the automated test is to blame.

Unclear test descriptions are one of the most common offenders I've seen in automated test suites. They show up as descriptions that lack the information developers or testers need to understand what the function or test does. As an example, here's part of a commit I saw recently at one of the companies I work with. This test uses Jest, an excellent JavaScript testing library, to validate a helper function called daysBetweenDates.

```javascript
import { daysBetweenDates } from "./date-helpers";

test("daysBetweenDates returns 31", () => {
  const days = daysBetweenDates("2022-01-01", "2022-02-01");
  expect(days).toEqual(31);
});

test("daysBetweenDates returns 5", () => {
  const days = daysBetweenDates("2022-06-15", "2022-06-20");
  expect(days).toEqual(5);
});

test("daysBetweenDates returns 0", () => {
  const days = daysBetweenDates("2022-09-01", "2022-09-01");
  expect(days).toEqual(0);
});
```

By looking at the tests in isolation, you wouldn't have any clear idea about what the underlying function does. Their descriptions only state the expected result of each test, not what the function does. Based on the usage of the function and the test assertions, you might guess that the daysBetweenDates function takes two dates and returns the number of days between them. But if you weren't familiar with the codebase, you would still need to peek at the function's code to make sure, and even that doesn't guarantee you will fully grasp its inner workings.
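To make the discussion concrete, here's a minimal sketch of what a function like this could look like. The team's actual implementation isn't shown in this article, so treat the body below (and the MS_PER_DAY constant) as an assumption for illustration only. It assumes both arguments are ISO 8601 date-only strings, which Date.parse treats as midnight UTC.

```javascript
// Hypothetical sketch only: the real daysBetweenDates implementation
// is not shown in this article.
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Assumes ISO 8601 date-only strings such as "2022-01-01".
// Date.parse interprets that form as midnight UTC, so daylight
// saving changes don't affect the difference.
function daysBetweenDates(start, end) {
  const startMs = Date.parse(start);
  const endMs = Date.parse(end);
  return Math.round((endMs - startMs) / MS_PER_DAY);
}

console.log(daysBetweenDates("2022-01-01", "2022-02-01")); // 31
```

Nothing in a description like "daysBetweenDates returns 31" tells the reader about the string format or the unit of the result, which is exactly the gap a better description can close.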

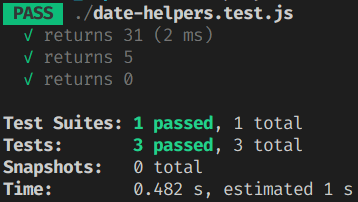

Besides adding extra effort to make others understand what the underlying code does, the other issue with these descriptions is that the test run results aren't beneficial to anyone who needs to view these results. Here's what Jest prints out when running these specific tests:

These descriptions wouldn't help anyone debug the tests if they failed. For instance, let's say the daysBetweenDates function changes to accept Date objects instead of strings, and the change causes these tests to fail. Seeing a test description that says "daysBetweenDates returns 31" won't provide any context to help a developer or tester figure out what the function used to do and why the test fails now. They can go into the implementation of daysBetweenDates but wouldn't know what changed, requiring them to waste more time hunting down the offending commit in their source code repository.

Fix: Use test case descriptions to describe what the function under test does

The simple fix here is to ensure that the team responsible for creating and maintaining the codebase and test suite clearly describes how the underlying function should work. To refactor the example above, I recommended that the team rewrite the description to explain how the daysBetweenDates function works. Also, since all three tests perform similar actions, I suggested they either group all the assertions under a single test case or keep only one assertion.

```javascript
test("daysBetweenDates returns the number of days between two date strings", () => {
  expect(daysBetweenDates("2022-01-01", "2022-02-01")).toEqual(31);
  expect(daysBetweenDates("2022-06-15", "2022-06-20")).toEqual(5);
  expect(daysBetweenDates("2022-09-01", "2022-09-01")).toEqual(0);
});
```

This new description clearly tells me at a glance what the daysBetweenDates function does without having to dig into its implementation. Anyone looking at the test can see first-hand how it works, helping further my understanding provided the tests pass.

Another benefit is that it now helps me understand what changed if the test fails without spending time digging through the source code history. Again, let's imagine the daysBetweenDates function changes to use Date objects. When the tests fail, the description tells me the function used to accept date strings, so I can quickly see that the implementation now expects Date objects instead. Understanding why a test fails is a requirement for knowing how to fix the problem.
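As a rough illustration of that scenario, here's a sketch of what the changed function might look like. The signature change and the TypeError are my assumptions, not the team's actual code. The point is that a call written for the old string-based contract now fails, and a description mentioning "date strings" points straight at the mismatch.

```javascript
// Hypothetical sketch of the changed function. The Date-object
// signature and the TypeError are assumptions for illustration.
const MS_PER_DAY = 24 * 60 * 60 * 1000;

function daysBetweenDates(start, end) {
  if (!(start instanceof Date) || !(end instanceof Date)) {
    throw new TypeError("daysBetweenDates expects two Date objects");
  }
  return Math.round((end.getTime() - start.getTime()) / MS_PER_DAY);
}

// The new call style works.
console.log(daysBetweenDates(new Date("2022-01-01"), new Date("2022-02-01"))); // 31

// The old call style, still used by the existing test, now throws.
try {
  daysBetweenDates("2022-01-01", "2022-02-01");
} catch (error) {
  console.log(error.message); // daysBetweenDates expects two Date objects
}
```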

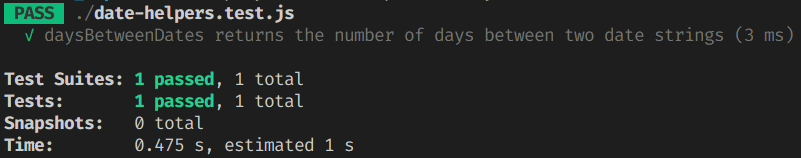

Finally, the reduction in test cases with a clear description makes reporting much easier to read and understand:

In the long haul, having a test suite with unhelpful descriptions significantly decreases the maintainability of the test suite. The time it takes to write clear descriptions for your automated test cases is brief, but hopefully, you can see the impact it can have on a larger scale. Test suites containing hundreds or thousands of tests will become easier to maintain if issues occur, and your team will better understand the codebase when they run across these tests.

Issue: Inefficient test case grouping strategy

Another overlooked area with automated test suites is a lack of clarity around how tests get grouped in the codebase. Almost every automated testing tool allows developers and testers to organize their tests by grouping them into discrete categories. Some examples are markers in pytest, collections and folders in Postman, and fixtures in TestCafe.

Using this type of grouping functionality in your preferred testing library or framework has some powerful benefits for long-term maintenance. Test groups greatly increase the readability of the automated test suite by letting developers and testers see each category of their tests, whether by function, feature, or test type. Grouping also provides speed and flexibility for test runs, as you can run only a specific set of tests based on a group. Finally, it helps with reporting since most tools organize test results by groups.

When I see a test suite that doesn't classify or organize its test scenarios in the codebase, it's usually difficult to follow which tests belong to a specific function or feature. The lack of clear grouping also permits teams to develop bad habits by scattering tests in different areas of the test suite. Reports also become less valuable since everything gets lumped together in a single list.

Let's look again at the original example tests that I ran into recently, with their improved descriptions:

```javascript
test("daysBetweenDates returns the number of days between two date strings", () => {
  expect(daysBetweenDates("2022-01-01", "2022-02-01")).toEqual(31);
  expect(daysBetweenDates("2022-06-15", "2022-06-20")).toEqual(5);
  expect(daysBetweenDates("2022-09-01", "2022-09-01")).toEqual(0);
});
```

This example might not show how much this affects maintainability in isolation since it's only a single test case with a few assertions. But imagine you have over fifty unit tests in this same file, covering ten different functions, and this test is in the middle of the file. How easily can you spot which tests belong to the daysBetweenDates function without resorting to your text editor's Find functionality? You might also have other tests for daysBetweenDates in the same file that aren't nearby or even have some tests for the function in a separate file.

These tests will also become difficult to spot in your test tool's reporter. Again, the results for this example are easy to follow since it's a single test case. But imagine a test suite containing thousands of test cases. Your tests will appear in one long list, and some testing tools run tests in random order by default, so you can't guarantee that test cases for similar functionality will appear near each other in these reports.

Fix: Come up with a strategy to use your testing tool's grouping functionality

Developers and testers should think about how to group their automated tests as early in the process as possible. It's easier to figure out the grouping strategy when there are few test cases to organize. There isn't a "one-size-fits-all" approach for choosing a strategy, as it relies on the team working on the tests and the testing tools in use, among other factors. The key is establishing a strategy early and following it as best as possible while building your test suite.

In the examples we've been using for this article, we're writing unit tests for a JavaScript function. A simple yet effective way to group unit tests is by function or method in your codebase. The testing tool used, Jest, has a method called describe that allows us to group related tests. We can group our existing tests in the following way to continue improving on the tests for the daysBetweenDates function:

```javascript
describe("daysBetweenDates", () => {
  test("returns the number of days between two date strings", () => {
    expect(daysBetweenDates("2022-01-01", "2022-02-01")).toEqual(31);
    expect(daysBetweenDates("2022-06-15", "2022-06-20")).toEqual(5);
    expect(daysBetweenDates("2022-09-01", "2022-09-01")).toEqual(0);
  });
});
```

While adding describe seems like an insignificant change, grouping tests goes a long way in boosting the maintainability of automated tests. When reading the code for these tests, the describe call and the indentation between the group and the test case make it much easier to see that these tests belong to the daysBetweenDates function. Your code editor can also help with color-coded keywords or code folding to better view how the tests are organized.

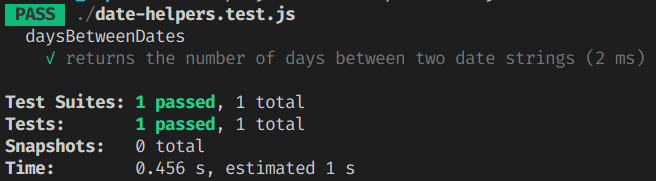

Grouping tests will also increase the readability of test run results. The reporters for most testing tools that support this kind of grouping will delineate each group separately:

Besides code readability and reporting improvements, the primary benefit of grouping your tests is to keep all your tests together under a unifying section of your test suite. When you have multiple scenarios to cover a specific function or segment of your application, having all test cases in a single place becomes more manageable. To put this in perspective, let's say we now want to verify that the daysBetweenDates function handles leap years correctly. We can add this test under our group:

describe("daysBetweenDates", () => {

test("returns the number of days between two date strings", () => {

expect(daysBetweenDates("2022-01-01", "2022-02-01")).toEqual(31);

expect(daysBetweenDates("2022-06-15", "2022-06-20")).toEqual(5);

expect(daysBetweenDates("2022-09-01", "2022-09-01")).toEqual(0);

});

test("handles leap years", () => {

expect(daysBetweenDates("2020-02-01", "2020-03-01")).toEqual(29);

expect(daysBetweenDates("2021-02-01", "2021-03-01")).toEqual(28);

});

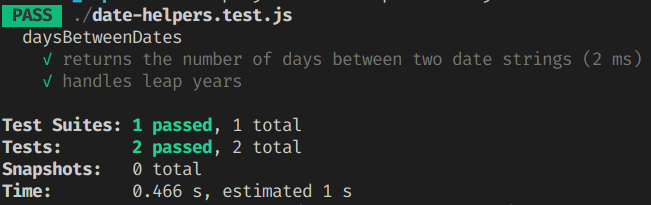

});In the future, if the daysBetweenDates function changes, we can easily find all unit tests related to it and update them if needed. Test run reporting also becomes more valuable since anyone can clearly see what the function does based on passing tests:

Using built-in functionality like describe for Jest isn't the only way to group tests. You can also place your tests in separate files and gather your test cases where relevant. Another way to group tests is by using tags. While teams often use tags for running specific test cases, tags also serve as a form of grouping. For instance, they can help teams know which groups of tests are higher priority than others.

One rule of thumb I use is to group differently by the type of test automation you're performing. The examples above for unit testing group them by function. For API testing, one route to take is to group by endpoint. In end-to-end testing, an excellent way to group tests is by the feature they cover.

How you decide to group your tests is up to you and your team, but the important thing is to have a strategy and follow it. A little bit of up-front work will save you countless hours in the future.

Issue: Forgetting to cover the "sad" paths

An issue that frequently occurs, especially with developers new to test automation, is that they only cover the "happy" path of their work. This issue occurs when a developer or tester writes tests solely to check off some basic acceptance criteria. In a rush to add test coverage, they cover just a few paths for how the functionality works under normal circumstances. While it's certainly better than nothing, neglecting the "sad" paths can wreak havoc in the future.

When developers are coding up a new function, they focus on getting it to work as specified. Unfortunately, this leads to some easy-to-spot bugs slipping through the cracks. At best, a bug will interrupt the functionality of your application. At worst, bugs can expose massive security issues or destroy valuable data. Sometimes, testing what can go wrong is equally or more important than testing what goes right. This line of thinking is especially true when you have public-facing functionality. You'll be surprised at all the ingenious ways the users of your product find to break your code—unintentionally or not.

It's also easier to fix bugs as early as possible. Ideally, a developer will fix bugs before getting their code merged into the product's main branch. If they're not focused on potential bugs while coding, those bugs will likely get discovered days, weeks, or even months later. In a fast-paced startup where speed of development and shipping matters, these disruptions can feel catastrophic. It's challenging for developers to return to a piece of code they haven't touched in a while. If you're a developer at a startup and need to go back to a piece of code you wrote just a few months ago, there's a good chance you won't even remember writing any of it.

Let's go back to the example code used in this article. Notice that there aren't any test cases to verify how the daysBetweenDates function would handle a parameter that isn't a valid date string. Without looking at the implementation, would we know that the developer who wrote the function remembered to address that potential scenario? We'll add a test to find out:

describe("daysBetweenDates", () => {

test("returns the number of days between two date strings", () => {

expect(daysBetweenDates("2022-01-01", "2022-02-01")).toEqual(31);

expect(daysBetweenDates("2022-06-15", "2022-06-20")).toEqual(5);

expect(daysBetweenDates("2022-09-01", "2022-09-01")).toEqual(0);

});

test("handles leap years", () => {

expect(daysBetweenDates("2020-02-01", "2020-03-01")).toEqual(29);

expect(daysBetweenDates("2021-02-01", "2021-03-01")).toEqual(28);

});

test("returns undefined if one of the dates is not a valid date string", () => {

expect(daysBetweenDates("2022-06-15", "invalid date")).toEqual(undefined);

});

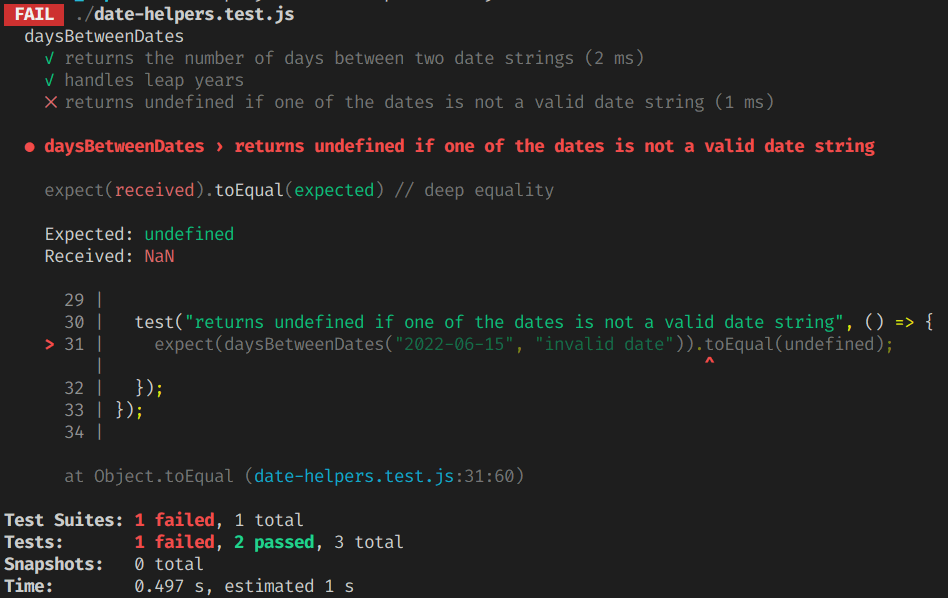

});In our case, we can see the test doesn't handle that well:

For this example, the damage caused by this oversight is limited since it's an internal function. Still, it could cause some annoying extra work for future developers if they use the function and it blows up on them. If this function were exposed to the public, this error would undoubtedly surface at the worst time for a user, leading to a negative impression of your product.
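One way the function could satisfy the new test is to validate its inputs before doing any math. The sketch below is an assumption on my part, not the team's actual fix, and the parseDateString helper is a hypothetical name. It returns undefined whenever either argument isn't a string that Date.parse can read.

```javascript
// Hypothetical sketch: one possible way to make the "sad" path test pass.
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Hypothetical helper: returns a timestamp in milliseconds, or
// undefined when the value is not a parseable date string.
function parseDateString(value) {
  if (typeof value !== "string") return undefined;
  const ms = Date.parse(value);
  return Number.isNaN(ms) ? undefined : ms;
}

function daysBetweenDates(start, end) {
  const startMs = parseDateString(start);
  const endMs = parseDateString(end);
  if (startMs === undefined || endMs === undefined) return undefined;
  return Math.round((endMs - startMs) / MS_PER_DAY);
}

console.log(daysBetweenDates("2022-06-15", "2022-06-20")); // 5
console.log(daysBetweenDates("2022-06-15", "invalid date")); // undefined
```

Keep in mind that Date.parse accepts some non-ISO formats depending on the JavaScript engine, so a stricter format check may be worth adding if the function should only accept one date format.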

Fix: Write tests for the "sad" paths—but only when it makes sense

The obvious solution to this problem is to think about both the "happy" and "sad" paths while writing test cases. As a developer or tester, it forces you to fully understand essential parts of your application and the ramifications if something goes wrong. Sometimes we're so focused on getting something to work that we don't grasp how things can go wrong outside our solution.

Of course, thinking about what "sad" paths to cover is easier said than done. As developers and testers, we need to know where to place our attention when testing. In the example above, there are many other permutations of how a developer can improperly use the daysBetweenDates function—using different data types like booleans or numbers, reversing the dates to test, only using one parameter, and so on. You can't expect to cover all of those cases through automation. Spending some time thinking about these points of potential breakage will help create a more robust function even if there's no explicit automated test covering them.
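To get a feel for a couple of those permutations, it can help to run them against a quick sketch before deciding which ones deserve an automated test. The naive implementation below is hypothetical and only stands in for a function with no input validation.

```javascript
// Hypothetical naive implementation with no input validation,
// used only to explore how misuse might behave.
const MS_PER_DAY = 24 * 60 * 60 * 1000;

function daysBetweenDates(start, end) {
  return Math.round((Date.parse(end) - Date.parse(start)) / MS_PER_DAY);
}

// Reversed dates silently produce a negative number.
console.log(daysBetweenDates("2022-02-01", "2022-01-01")); // -31

// A missing second parameter silently produces NaN.
console.log(daysBetweenDates("2022-01-01")); // NaN
```

Neither call throws, which is arguably worse than an error because the bad value can travel further into the application before anyone notices. Whether a negative result is a bug or a feature is a decision for the team, and a test description is a good place to record it.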

It also helps to consider when and where to cover the "sad" paths in your test automation. Depending on the areas that need more coverage around where things can go wrong, you should focus more on expanding your testing scope for these scenarios. Typically, it's easier to handle them through unit testing since developers and testers can cover different paths quickly. You can also do them through more complex forms of testing, such as integration or end-to-end tests. However, these kinds of tests require more maintenance—something we're trying to improve.

One skill to explore when building automated test suites—particularly for developers—is to practice test-driven or behavior-driven development, where you write your test cases before writing any development code. While these practices aren't for everyone, writing tests before implementation forces you to think about how to test your code. It yields a better understanding of the ways your code can blow up.

You need to choose the right amount of coverage for you when adding tests to validate against breakage. This skill doesn't come immediately and will build over time with practice. The more time spent improving your long-term automation strategy, the more you'll know what to cover and where. When in doubt, it's probably best to add some coverage for the "sad" paths instead of leaving them exposed.

Summary

Building your automated test suite with maintainability in mind will ensure you continue receiving value from it throughout the lifespan of your applications. A well-maintained test suite makes it easy for teams to quickly add new tests and fix issues. Unfortunately, maintainability often takes a back seat as deployment timelines get shortened or when organizations give these tasks to their less-experienced developers or testers with little to no guidance.

Most test suites with poor maintainability typically have a buildup of minor issues that go unnoticed by the team, either through unfamiliarity or indifference. Test cases with unclear descriptions, poorly organized test suites, and untested "sad" paths look harmless at first glance. Left ignored over time, these areas can hinder a team's ability to move quickly and effectively with their test automation strategy.

The great news is that improving these issues doesn't require a massive effort. Your team can improve maintainability by writing better descriptions for test cases, organizing them better, and covering areas where the code under test can run into unexpected use. These steps are minor improvements but will massively impact the long-term health of your automated test suite.

What steps do you take to improve the maintainability of your automated test suite? Leave a comment below and share your ideas to help other developers and testers build resilient and robust test suites!