Parents and teachers across the world are rejoicing now that students have returned to classrooms. But unbeknownst to them, an unexpected and insidious academic threat is on the scene: a revolution in artificial intelligence has created powerful new automatic writing tools. These are machines optimised for cheating on school and university papers, a potential siren song for students that is difficult, if not outright impossible, to catch.

Of course, cheats have always existed, and there is an eternal and familiar cat-and-mouse dynamic between students and teachers. But where once the cheat had to pay someone to write an essay for them, or download one from the web that plagiarism software could easily detect, new AI language-generation technologies make it easy to produce high-quality essays.

The breakthrough technology is a new kind of machine learning system called a large language model. Give the model a prompt, hit return, and you get back full paragraphs of unique text. These models are capable of producing all kinds of outputs: essays, blog posts, poetry, op-eds, lyrics and even computer code.

When AI researchers first developed these models just a few years ago, they treated them with caution and concern. OpenAI, one of the first companies to develop such models, restricted their external use and did not release the source code of its most recent model because it was so worried about potential abuse. OpenAI now has a comprehensive policy focused on permissible uses and content moderation.

But as the race to commercialise the technology has kicked off, those responsible precautions have not been adopted across the industry. In the past six months, easy-to-use commercial versions of these powerful AI tools have proliferated, many of them without even the most basic limits or restrictions.

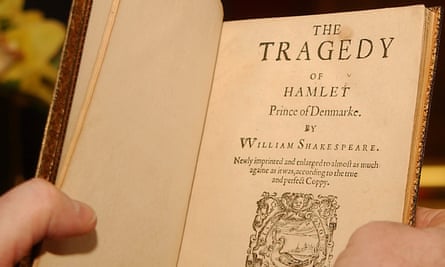

One company’s stated mission is to employ cutting-edge AI technology in order to make writing painless. Another released an app for smartphones with an eyebrow-raising sample prompt for a high schooler: “Write an article about the themes of Macbeth.” We won’t name any of those companies here (no need to make it easier for cheaters), but they are easy to find, and they often cost nothing to use, at least for now. For a high school pupil, a well-written and unique English essay on Hamlet or a short argument about the causes of the first world war is now just a few clicks away.

While it’s important that parents and teachers know about these new tools for cheating, there’s not much they can do about it. It’s almost impossible to prevent kids from accessing these new technologies, and schools will be outmatched when it comes to detecting their use. This also isn’t a problem that lends itself to government regulation. Governments are already intervening, albeit slowly, to address the potential misuse of AI in various domains, such as hiring and facial recognition, but there is much less understanding of language models and how their potential harms can be addressed.

In this situation, the solution lies in getting technology companies and the community of AI developers to embrace an ethic of responsibility. Unlike in law or medicine, there are no widely accepted standards in technology for what counts as responsible behaviour, and there are scant legal requirements for beneficial uses of technology. In law and medicine, standards were the product of deliberate decisions by leading practitioners to adopt a form of self-regulation. In this case, that would mean companies establishing a shared framework for the responsible development, deployment or release of language models to mitigate their harmful effects, especially in the hands of adversarial users.

What could companies do that would promote the socially beneficial uses and deter or prevent the obviously negative uses, such as using a text generator to cheat in school?

There are a number of obvious possibilities. First, all text generated by commercially available language models could be placed in an independent repository to allow for plagiarism detection. Second, companies could introduce age restrictions and age-verification systems to make clear that pupils should not access the software. Finally, and more ambitiously, leading AI developers could establish an independent review board that would decide whether and how to release language models, prioritising access for independent researchers who can help assess risks and suggest mitigation strategies, rather than speeding toward commercialisation.

After all, because language models can be adapted to so many downstream applications, no single company could foresee all the potential risks (or benefits). Years ago, software companies realised that it was necessary to thoroughly test their products for technical problems before they were released – a process now known in the industry as quality assurance. It’s high time tech companies realised that their products need to go through a social assurance process before being released, to anticipate and mitigate the societal problems that may result.

In an environment in which technology outpaces democracy, we need to develop an ethic of responsibility on the technological frontier. Powerful tech companies cannot treat the ethical and social implications of their products as an afterthought. If they simply rush to occupy the marketplace, and then apologise later if necessary – a story we’ve become all too familiar with in recent years – society pays the price for others’ lack of foresight.

Rob Reich is a professor of political science at Stanford University. His colleagues, Mehran Sahami and Jeremy Weinstein, co-authored this piece. Together they are the authors of System Error: Where Big Tech Went Wrong and How We Can Reboot.