Looking to Listen: Building Augmented Reality For Good

Augmented reality could change the lives of people with hearing impairments.

With SXSW cancelled due to the coronavirus, we wanted to spotlight two tech entrepreneurs who had hoped to introduce attendees of the massive tech, movie, and music festival to a whole new realm of accessibility. At this year’s festival, Cochlear.ai’s Dr. Yoonchang Han and COO Subin Lee were set to present a panel on Looking to Listen, their new augmented reality-based project, which aims to make small but groundbreaking improvements in the lives of people with hearing impairments.

In the wake of the festival’s cancellation, we’re sharing this interview, originally slated for our SXSW special edition print magazine, in the hope of highlighting the pair’s work and the innovations coming out of their company.

Founded in Seoul in 2017, Cochlear.ai has an intriguing mission statement: “To create a machine listening system that can understand any kind of sound.” The Looking to Listen project puts that idea into practice, using the company’s technology to build an app that visually alerts hearing-impaired people to the sounds around them.

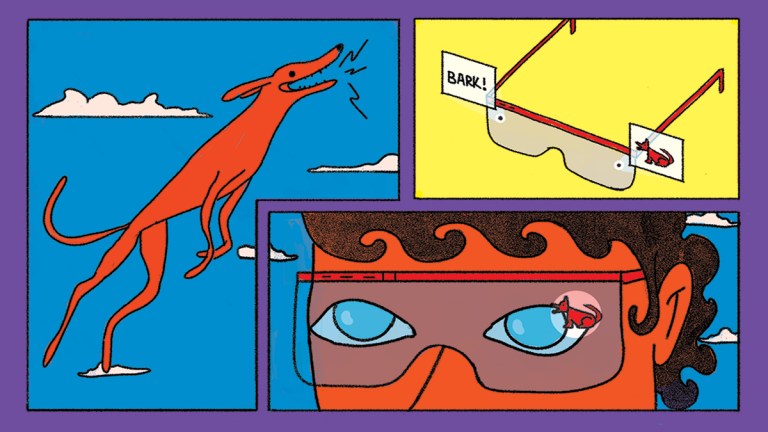

“There are lots of speech recognition systems out there,” Dr. Han says. “But the thing is, we believe that there is so much other information contained in other audio, like environmental sounds. Most modern AI systems focus too much on voice recognition, so we’re making a system that can understand other kinds of sounds. For example: dogs barking, glass breaking, laughing, or screaming.” Cochlear.ai aims to build a wearable prototype that gives people who can’t hear visual prompts describing the sounds Looking to Listen detects around them.

For Han, the path from studying music analysis as a student at King’s College London to founding a machine listening startup was a logical one. “After doing music analysis we realized that other sounds can be analyzed in a similar way. Then we have to think about what application will be most useful for people and, at Cochlear.ai, our company slogan is ‘Creating ears for artificial intelligence.’ So, of course, the most useful application of that will be for someone who cannot hear very well.”

If you’re a gamer, you’ve probably used augmented reality (AR) when playing games like Pokémon Go, Ingress, or Niantic’s more recent Harry Potter: Wizards Unite. AR builds on the world we see around us, hence the term “augmented.” With Looking to Listen, Cochlear.ai hopes to take the technology beyond games and apply it to the everyday lives of people with hearing impairments.

The current version of the program can recognize 34 distinct environmental sounds, ranging from human interactions to home appliances and emergency alerts. That work has shaped the focus of the first release: basic interpersonal connections and vital emergency warnings. “When we talk about emergency sounds, we’re thinking about screaming, sirens, or fire and smoke alarms. For human interactions, we’re talking about noises like finger snapping, whistling, or knocking at a door,” Dr. Han explains.

Early conversations and consulting sessions with people with hearing impairments about how the technology could help quickly made one thing clear: “They didn’t think that this was possible. Most of the technology around hearing impairments is more conventional and focused on helping someone’s listening ability, so what we’re doing as a startup is very new.”

As for what those users want from the tool, the Cochlear.ai team soon realized that it comes down to simple, practical adjustments to everyday life that can make a huge difference. “If they can ‘hear’ or be aware of a simple thing like a laundry machine finishing its cycle, a dog barking, or a baby crying, it can really change someone’s daily life.”

Whether in games, films, or real life, our visions of the future are full of technology that could transform the lives of people who live with disabilities, yet that potential is often overlooked. Dr. Han wants to change that with Looking to Listen by taking technology that already exists and shaping it into something new. “These days so many speakers and these platforms are using speech recognition, that’s why there are so many microphones out there. When I think about how everyone uses smart speakers, wears Apple earpods 24/7 and forgets that they’re wearing them, these microphones are really everywhere. We have the infrastructure ready for us.”

Though the infrastructure is in place, the company is still developing a prototype of the wearable device that would run Looking to Listen and turn its audio analysis into visual prompts. The team started with the idea of simple text, but is now considering images or even animation.

The end goal for Dr. Han and Cochlear.ai is to create something that goes far beyond the simple “off and on” capabilities of current voice recognition tech.

“To provide a suitable customized experience for each person, that context-aware system is a really important goal,” he says. “That’s the ideal use of this audio analysis technology.”