7 Major 2023 Tech Failures Based On Human Factors Science Mistakes

It was a bumper-crop year for major tech flameouts stemming from a failure to consider basic human factors science. The following is our team's take on seven tech craters for 2023. Some have significant implications; others are simply frustrating. One incinerated 500 million dollars solving the wrong problem, and, finally, there is a surprising example from pickleball!

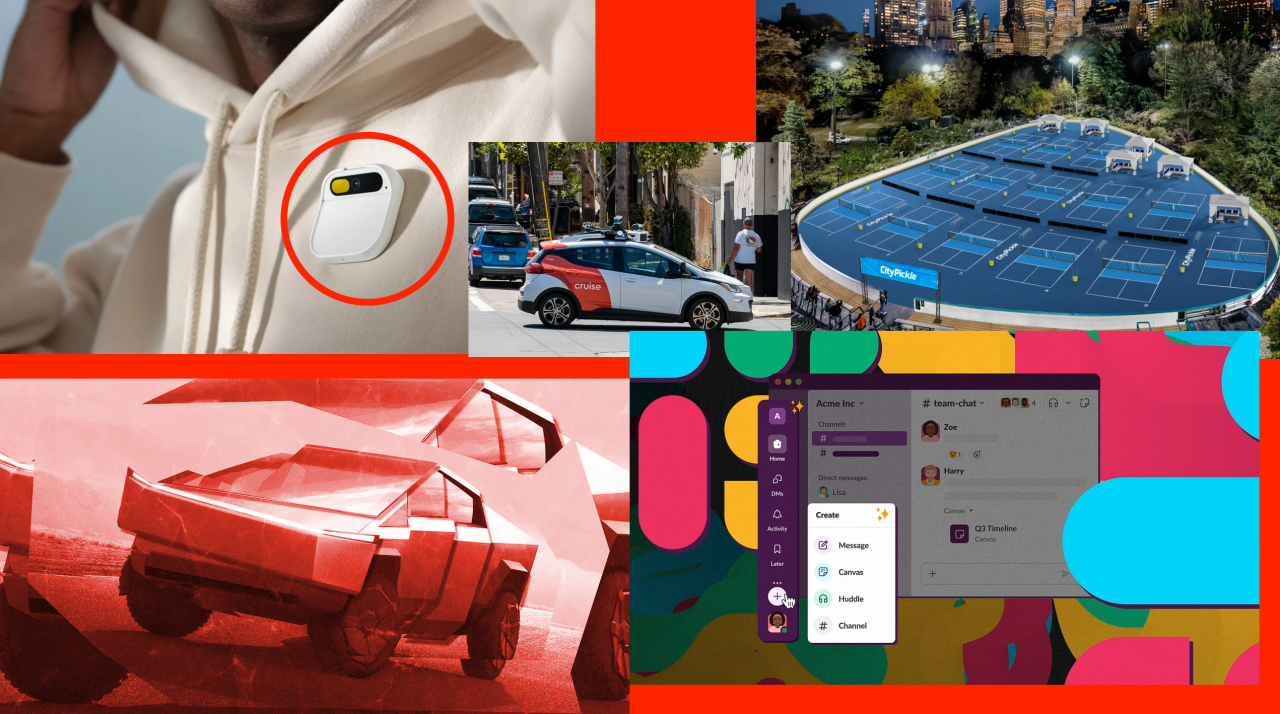

Number 1: Humane AI Pin

Well, the big release arrived in December. I am speaking about The Humane Co. launch of its AI Wearable Pin. By all indications, it is a wildly misdirected example of ignoring fundamental human factors science. I will make this simple: Imran Chaudhri (a former Apple engineer) set out to remove the screen from the modern smartphone interface. Chaudhri and his team did so by designing an AI voice UI combined with a crude UI image projected onto one's hand. What the Humane development team should have understood is that at the core of human information processing and decision-making is the VISUAL capture of one's environment, including data on your smartphone's screen. The actual human operating system is optimized for visual data capture...full stop. By removing a high-resolution display, Chaudhri made the visually salient information we seek vastly more complex to obtain, moving core functions from visual delivery to voice/touch/audio data streams. Who at Humane with a background in neuroscience and human factors thought that was a good idea? The Humane AI Pin forces complex information down cognitive processing channels that are less robust and powerful than the human visual system. Thinking that a voice AI can replace visual processing ignores fundamental human factors science. How a startup raises hundreds of millions of dollars solving the wrong problem is beyond staggering, and it is another example of how being successful at Apple does not mean one understands human factors science and how it impacts new product development. After a billion dollars in VC funding, let's see what Jony Ive and Sam Altman come up with. Will they also solve the wrong problem?

Number 2: GM Cruise RoboTaxi

After a disastrous year of failures and embarrassments for GM Cruise, California finally banned all GM Cruise RoboTaxis from San Francisco. Behind the scenes at Cruise, there was apparently an ongoing deception regarding how much human performance the system required. New data shows that GM Cruise autonomous taxis require the full-time attention of 1-1.5 humans in a massive control center! Based on an investigation by The New York Times, we now know that Cruise delivered operator-directed remote-controlled taxis, nothing like actual baseline autonomy. Cruise remote operators had to interact with the cars every 2.5 miles to keep them in operation. As mentioned in our previous posts on Cruise, the service was based on an impoverished understanding of the human factors science concept of function allocation. We now see that Cruise did have a function allocation model present, but in a truly "old-world" way. Operators remotely piloted their vehicles like the kid down the street with their toy RC car. GM is now stuck with a total investment cost of several billion dollars and nothing to show for Cruise except brand damage. The overall failure of Cruise is being attributed to the CEO, who lived by the "Zuckerberg Rule: Move Fast and Break Things"...That he did!

Number 3: Tesla Cybertruck

The Exterior Design Manager for the Tesla Cybertruck, Ian Kettle, recently posted a significant thanks to his team for designing the Cybertruck. However, there is a reasonable alternative view based on a more objective analysis of the problem that Tesla solved with the design of the Cybertruck. Did Tesla waste massive design and engineering talent on the wrong problem?

A CONTRA-VIEW: Imagine, if you will, having utilized the extraordinary Tesla design and engineering TEAM to solve a meaningful problem instead of pushing 7,000 pounds of steel and rubber into the already stressed urban environment. Did Tesla solve a meaningful problem, given its extraordinary available resources? The Cybertruck is brimming with innovations, but they have been cleverly directed toward creating one of the most aggressive automotive products in history. The neuroaesthetics of the visual design alone tell the story. In the worst case, one can imagine styling studios the world over echoing the visual design of the Cybertruck in new offerings, thus turning the roadways into real-life versions of Blade Runner. Given the automotive industry's penchant for copying styling trends, this is more plausible than one might assume.

As one moves through a development career, it becomes clear that creative development teams often solve the wrong problem. I suggest such is the case for the Cybertruck. For sure, Tesla will sell a prodigious number of Cybertrucks, but to what creative end? Did all of Tesla's staggering talent pool solve a meaningful problem?

Number 4: Slack Redesign

In 2023, Fast Company wrote about the disastrous UX redesign of Slack, but the article failed entirely to note the critical human factors science problem with the new system that is causing millions of users to beg for a return of the prior UI design. The clear oversight comes from human factors science and is known technically as "Negative Learning Transfer" or NLT: skills and habits learned on the old interface actively interfere with using the new one. Accounting for NLT is a fundamental requirement in all screen-based UX redesign programs, and it is routinely overlooked by UX design/research teams. The new Slack interface is a massive rat's nest of negative learning transfer, where new functions have no relationship to prior visual or cognitive scan patterns. Previous features and functions are combined with new functions to create even more NLT. It is always a big surprise to UX designers/researchers that a new design may appear to be an improvement based on the UX designer's intuition or graphic layout, but causes havoc for users...This is the difference between UX design/research and human factors science. Had Slack executed professional independent usability testing, the NLT problems would have stopped the redesign dead in its tracks. In a later post by the Slack UX Design team, we learned that the redesign was mainly based on the UX group's opinions and no formal human factors science. At the time of this writing, we see that a new CEO has been appointed. Will she fix the UI? Hard to say.

Number 6: Pickleball Courts

In what seems like a trivial problem, pickleball is being banned from courts and communities around the globe because of the sounds it generates. However, behind this fact is a lesson in human factors science. It turns out that pickleball gameplay generates a psychoacoustic profile guaranteed to be highly disruptive to those within earshot. Two aspects of the sound production result in instant distraction of the human attention mechanism. They are 1) Random, loud, and rapid acoustic events (i.e., ball strikes) and 2) Human speech, especially excited human speech. (It turns out that pickleball players are verbally very active, far more so than tennis players). It is this cocktail of acoustic events that is so disruptive, but in a very special way.

IMPORTANT: One would be hard-pressed to design a series of psychoacoustic stimulus events that would be more distracting than the rapid and random snap of the pickleball strike and the verbal exchanges of the players. It's just a simple fact of human factors science. Equipment companies are developing "quiet" equipment, but that still leaves the problem of human verbal expression...much less easy to restrain!

Number 7: Sam Altman - The Executive with the Most Disregard for Human Factors Science in 2023

It has been a complex year for AI, and specifically for the large language model (LLM) ChatGPT, which was dropped into the everyday lives of millions of users by the self-appointed captain of BIG AI, Sam Altman. So what human factors science did Mr. Altman fail to consider that one can objectively quantify as a failure? The answer is simply that human factors science includes a vast research literature on the impact of TRUST on technology adoption and utilization. We know from this research that various factors impact one's perception of trust. As in the pickleball example, some behaviors impact trust and truth-telling more than others. First is creating a technology solution that is transparent in terms of its operational underpinnings. Second is following the accepted rules of business and personal behavior. Third is removing, to the greatest extent possible, the risks associated with a given new technological product. Mr. Altman ignores all three in ways unseen in modern tech.

Specifically, Mr. Altman and OpenAI "Hoovered-up" the entire internet and a massive amount of printed and illustrated literature, with virtually no attempt at transparency or approval. He did this quietly over several years on a massive scale to build the LLM ChatGPT. This falls squarely into the time-honored Silicon Valley strategy of "Proceed now and beg forgiveness later". His testimony before Congress was a thinly veiled version of this maxim, which said under the covers, "I have already copied every piece of content I can get my hands on, so regulate AI now that the truly damaging behavior is behind us." Sam Altman may have fooled Congress, but he has not fooled a massive legal industry that is coming after OpenAI and its behavior. This is a much bigger problem than Sam Altman realizes.

The framers of the US Constitution wrote intellectual property rights for all into law. IP is not a passing fad but a bedrock attribute of how we behave in terms of transparency and respect for others' ideas and protected subject matter. In technology, TRUST is acquired, not demanded.

Product Manager, autonomous driving and smart mobility enthusiast

Could you please refer to the investigation that reveals Cruise “remotely piloted their vehicles like the kid down the street with their toy RC car”? I believe Cruise, as well as all other major AV players, never admitted in public that they do any sort of remote driving, but rather that they use the operators to make some critical non-realtime decisions that the vehicle is struggling to make autonomously. I mean, that doesn’t undermine that Cruise is in deep trouble. And that very incident, which caused them to halt operations, could very well have been caused by human factors indeed. But that’s not what is said in your article.

Founder and President of Mauro Usability Science / Neuroscience-based Design Research / IP Expert

Roman Senger, thanks for your comment. We appreciate your challenging our findings with regard to the AI Pin and Cybertruck. Here is our response. In the case of the Humane AI Pin, our HF Heuristics Analysis shows that the product will have massive human factors challenges. We conduct HF Heuristics Analysis frequently for leading corporations and VC. We very rarely have the production product to work with but proceed with what is available at the time of analysis. The HF Heuristics methodology is a validated process utilized for decades by human factors science experts. Any product that removes 80% of the human information processing capabilities by design is going nowhere in the marketplace. This one was easy. In terms of the Cybertruck, we make clear that Tesla will sell a ton of trucks. However, when taken from a broader human factors science perspective, there is no reasonable basis on which such a vehicle makes sense in the current urban environment. Our POV is that Tesla wasted wonderful sets of expertise to no larger benefit. The failure is, therefore, more nuanced than market sales. The entire purpose of producing an analysis is to help colleagues look beyond the obvious technological innovations into human impact.

Success through digitalization in service • Open to partnerships in service, knowledge management, knowledge graphs, chatbots • Empolis Knowledge Management • OKR Champion

I might not be familiar with all seven products discussed in the article, but I find the categorization of two of them, the Humane AI Pin and the Tesla Cybertruck, as 'Tech Failures' quite challenging to accept. Regarding the Humane AI Pin, it's important to note that the product isn't even available yet. Therefore, evaluating its success is only possible on raised VC funding, which is significant. As for the Tesla Cybertruck, despite the initial debates and polarizing opinions, it's noteworthy that the vehicle reportedly sold out until 2027. Considering this overwhelming demand, branding it as a failure seems contrary to the evident market response. Labeling these two innovations as 'Tech Failures' might require a more nuanced analysis, taking into account their respective stages in the market and the substantial interest they've generated. It might be premature to assess their success solely through traditional measures at this point.

HCD Strategist, Senior Writer and Author, Instructor

The idea for the article is great, but I think the following line is a completely false and sloppy generalization: "It is always a big surprise to UX designers/researchers that a new design may appear to be an improvement based on the UX designer's intuition or graphic layout, but causes havoc for users..." That's certainly not true for all UX designers or researchers and, speaking for myself and some esteemed colleagues, "always" is an utterly false overstatement. I think Human Centered Design encompasses many people with a variety of skill sets and experiences. There has been a long, ongoing trend to hire junior "UX" designers primarily based on significantly lower salary expectations, too much focus on graphic design and specific UX tools, and the pliability to simply rubber stamp decisions by people with incentives that are not always aligned with the organization or their users. I think people who are serious about HCD and genuinely care about it can learn from each other and try to strengthen the community overall, especially as it is being eroded on many fronts. I am personally seeing a marked decline in usability and good user experience in my own recent online interactions and hope this somehow reverses.

I help product leaders and startups brief products that delight while removing investment risk.

Insightful list, thank you. A few simple thoughts on the Cybertruck. Viewing through the lens of Desirability, Feasibility, and Viability, this new truck seems to tick one box (marginally): Desirability. Yes, the queue is long and they may sell many to true believers. Yet, if your main user problems are amphibious driving, sniper-proof driving, and 10-micron tolerances... you will be disappointed. That said, I seriously doubt that those are serious user problems to solve in the first place. 🤔