Ask Me Anything: Exploratory Testing

Back in April 2021 I contributed to the Ministry of Testing Ask Me Anything on the topic of exploratory testing (recording here). I then followed that up by answering some additional questions in their forums, and this post recaptures the answers I provided there.

How do you convince people that exploratory testing is valuable?

Whenever I start at a new organisation I set out a contract between myself and the team to bring them on the journey of “let’s use exploratory testing”. I’ve discussed this in my blog post Why I’m talking to developers about exploratory testing. Explaining what exploratory testing is (and isn’t) helps convince people of its use and usefulness.

I also look to answer the following points:

Why should we do this?

- Show the value and explain what’s in it for them (useful information earlier & the ability to collaborate and own design).

- Set expectations and show that you’ll be working with the team to pull in the same direction (not against them).

- Talk about how it’s a plannable style of testing and not just ad hoc or unplanned.

We don’t need testing

- Talk about working together and how this is not test vs. dev. We’re not trying to prove them wrong, we’re trying to support them and help in their design thinking.

- Point out that we’re providing useful information that can help them and we’ll tailor that information to be useful to them.

- Mention that unit and confirmatory testing can only tell us about what we already know. What about what we don’t know?

Won’t it slow us down?

- Talk about how exploratory testing can be planned and timeboxed.

- Talk about using questioning and critical thinking to shift testing earlier (left) and save time by building the right thing initially.

- We’re still going to do automated regression; that’ll speed us up!

What do we devs get out of it?

- INFORMATION!

- We’re here to help: we can give you product knowledge or help debug issues with our skills and knowledge.

- Talk about how having us about means they can cognitively offload some analysis onto us, leaving them freer to think about implementation.

How do you teach new people to do exploratory testing?

I always start by showing people that exploration is something they already do. I ask “When you get a new phone, game or app, what do you do? Read the manual first, or play with it to see what it does?” If you use the product to learn about it, that’s exploratory testing!

I’ve run a number of practical workshops in the past where I work with people to show the basics of exploratory testing:

- What is exploratory testing (finding and sharing information).

- How to come up with test ideas by breaking an item into components, risks and tests.

- Writing test charters using the Explore… With… to Discover syntax.

- Running test sessions and taking notes (I do this practically as an ensemble so we can all work together).

- Working together to understand what a debrief is and how we can use our test notes to share information (good, bad and questions).
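The Explore… With… to Discover charter syntax is essentially a fill-in-the-blanks template. As a minimal sketch (a hypothetical `Charter` class with made-up example values, not a tool I’m prescribing), it could be captured like this:

```python
from dataclasses import dataclass


@dataclass
class Charter:
    """A test charter in Explore… With… to Discover form (hypothetical structure)."""
    target: str       # what we're exploring: a feature, component, design or idea
    resources: str    # what we're exploring it with: tools, data, techniques, heuristics
    information: str  # what we hope to discover

    def __str__(self) -> str:
        return f"Explore {self.target} with {self.resources} to discover {self.information}"


# Illustrative values only.
charter = Charter(
    target="the sign-up form",
    resources="invalid and unusual email addresses",
    information="how validation errors are reported to the user",
)
print(charter)
```

Keeping the three parts separate makes it easy to spot a charter that’s missing its “to discover” purpose, which is usually the part that keeps a session on track.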

I also pair with people one on one to teach skills, show how to keep on track and share any heuristics (rules of thumb) that I use.

How do you know how much time to have for exploring when you add a new feature?

The simple answer is that, a lot of the time, I don’t know until I’ve started looking. Because testing aims to find useful information for a team, it could go on for as long as the information it turns up is still useful.

In the real world we have deadlines and have to support our teams getting things “done”, so I timebox my testing. I will write test charters based on the risks I think are related to the feature (based on breaking it into components and then risks and tests) and will work with the team to prioritise and size these tests. Based on the priority and sizing I can say “Okay I plan to take this long for this feature, unless anything major comes up that we need to look into.”

So really it comes down to working with the team to work out what’s interesting and useful for us to test and feed back any information that might require us to change these timings.
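To make the arithmetic behind “I plan to take this long” concrete, here’s a minimal sketch. It assumes each charter has been given a priority (1 = most important) and a timebox in minutes during the sizing conversation; the charters, numbers and the `plan_time` helper are all hypothetical.

```python
# Hypothetical charters for one feature: (charter, priority, timebox in minutes).
# Priority 1 is the most important; the values would come from sizing with the team.
charters = [
    ("Explore checkout with expired cards to discover error handling", 1, 60),
    ("Explore checkout with slow networks to discover timeout behaviour", 2, 45),
    ("Explore checkout with screen readers to discover accessibility issues", 1, 90),
    ("Explore checkout with unusual addresses to discover validation gaps", 3, 30),
]


def plan_time(charters, max_priority):
    """Return the charters at or above the priority cut-off (most important first)
    and the total timebox they add up to, in minutes."""
    selected = sorted(
        (c for c in charters if c[1] <= max_priority),
        key=lambda c: c[1],
    )
    total = sum(minutes for _, _, minutes in selected)
    return selected, total


selected, total = plan_time(charters, max_priority=2)
print(f"Planning {total} minutes ({total / 60:.2f} hours) across {len(selected)} charters")
```

The cut-off is the lever: if something major comes up, re-prioritising or re-sizing the charters changes the plan, and that’s the conversation to have with the team.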

I find it difficult to explore when multiple systems are involved in a larger business process, maybe due to the longer feedback cycles. Do you have any tips on how to make it feel less like executing test cases?

Yes I do! Break it down, just like you would for a scenario or a test case. When we think about a system that’s too big, we get bogged down in the end-to-end flow and can’t see what to test, so let’s break it down to make it easier.

Product > Feature > Components > Risks > Test ideas

How would you test a chair?

This is a standard question that we testers ask each other and our teams, and we tend to find that we get about five answers before the ideas run out:

- I sit on it

- I’d tip it over

- I’d stand on it

- I’d kick it

It can be hard to design tests without techniques and skills to help us to come up with lots of ideas quickly. One way we can do this is by thinking about the components of a product, rather than the whole product as one complete entity.

Break it down

Instead of thinking about the whole thing, we can break the product or feature into its component parts and concepts to help us come up with test ideas. Now you’re not testing a “chair” you’re testing “legs”, “colour”, “design”, “arms”, “seat”, “material”, etc…

From there we can look at the risks for each component and how they might not work.

Risks then lead to test ideas as we look to see how the product (or component) works in relation to that risk.

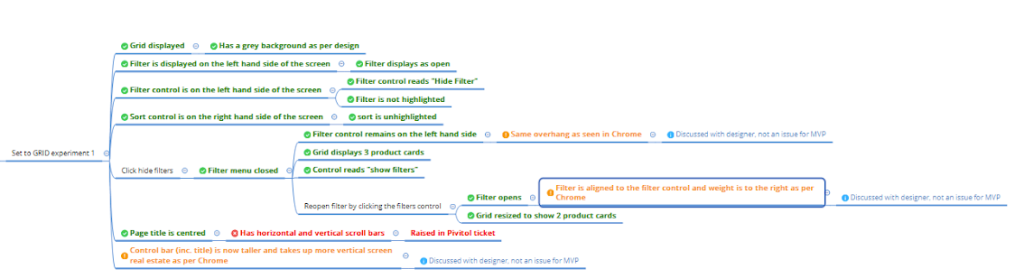

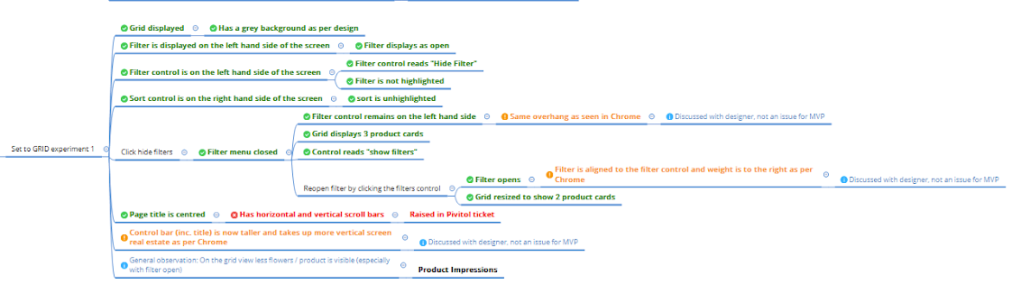

(Those images come from a blog post that I wrote for Bloom & Wild in 2020.)

In which phase does exploratory testing fit? Who is responsible for it? What tooling is required to implement it?

Exploratory testing is using critical analysis and thinking to uncover and share information about a product, design or idea. That means that, unlike scripted testing, we don’t need a product to be able to start testing. We can start exploring ideas or designs as soon as we hear about them, questioning them to find out information that’ll shape how they’re thought about, designed and implemented.

Responsibility ultimately lies with the team: the team wants better-quality products, so it’s in charge of making sure that testing happens. As a tester in the team you might end up doing the testing (because you have the best skills for it) and you’ll certainly champion quality, but the whole team is accountable for quality.

Note: That doesn’t mean only testers should be doing exploratory testing. I try to teach my team mates how to do it through pairing so that everyone can start finding more information about quality.

Tooling will depend upon what you’re testing but fundamentally you need anything that’ll help you explore and find information about the product and a way of taking test notes.

- Test notes: Google Docs, Excel, XMind, screen recorders, Audacity (audio), pen & paper, PicPick (screenshots).

- Networking: Charles Proxy, browser dev tools, Clumsy (network throttling).

- APIs: Dev tools, Postman, SoapUI.

- UI: Colour marketing psychology, accessibility guidelines and tooling (WCAG 2.1, Axe, WAVE, Lighthouse, A11y).

- Back end: SQL readers, IDEs, GitHub.

How would you characterise Exploratory Testing? And how does it differ from “other testing”?

Exploratory testing is a testing technique that we use to interact with and learn about a product, design or idea with the express intention of sharing information about it. It’s different from other testing techniques in that:

- We are looking to learn new things about the item under test, not just confirm what we already know.

- It is flexible because we can use our own intuition to investigate opportunities that come up within a defined ringfence, rather than sticking to a script.

- Our reporting focuses on sharing knowledge rather than stats and figures (what does “75.765% of my tests have been run” really mean in terms of how good something is anyway?)

In my notes I tend to capture:

- The test charter (what I’m testing)

- Who’s done the testing and when (for traceability)

- What I’ve done: a narrative of what I’ve tried and what I’ve seen

- Any issues I’ve found

- Any questions I come up with during my testing

- New testing ideas

The idea of the notes is to have something that:

- I can use to share information about what I’ve looked at with people

- can be used to learn how the product works, for future training.
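If you want a consistent shape for those notes, the list of things I capture maps neatly onto a simple record. This is a minimal sketch, assuming a hypothetical `SessionNotes` class and made-up session content; in practice the same headings work just as well in a doc or on paper.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class SessionNotes:
    """Notes from one exploratory session (hypothetical structure)."""
    charter: str          # what I'm testing
    tester: str           # who did the testing (traceability)
    session_date: date    # when it was done (traceability)
    narrative: list[str] = field(default_factory=list)  # what I tried and saw
    issues: list[str] = field(default_factory=list)     # problems found
    questions: list[str] = field(default_factory=list)  # questions raised
    new_ideas: list[str] = field(default_factory=list)  # ideas for future charters

    def debrief_summary(self) -> str:
        """A short plain-text summary to bring to a debrief."""
        lines = [
            f"Charter: {self.charter}",
            f"Tested by {self.tester} on {self.session_date.isoformat()}",
            f"Issues ({len(self.issues)}): " + "; ".join(self.issues or ["none"]),
            f"Questions ({len(self.questions)}): " + "; ".join(self.questions or ["none"]),
            f"New ideas ({len(self.new_ideas)}): " + "; ".join(self.new_ideas or ["none"]),
        ]
        return "\n".join(lines)


# Illustrative session only.
notes = SessionNotes(
    charter="Explore the sign-up form with unusual email addresses to discover validation gaps",
    tester="Alex",
    session_date=date(2021, 4, 20),
    narrative=["Tried plus-addressing: accepted", "Tried a 300-character address: page froze"],
    issues=["Page freezes on very long email addresses"],
    questions=["Should plus-addressing be allowed?"],
)
print(notes.debrief_summary())
```

The narrative stays in the full notes for training and traceability, while the summary pulls out the good, the bad and the questions for the debrief.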

Considering the tendency for minimal documentation when exploratory testing, how do you transfer your test knowledge of your product to a new team member?

I’d say that exploratory testing creates more documentation and test notes than other forms of testing. If you have a specific need and know you’ll be handing over a project to others then make your test notes more descriptive and verbose.

I also run debriefs and demos to share what I’ve learned about the product.

Is there a possibility to be a good tester, but not do exploratory testing at all? My feeling is that exploration is a kind of essential virtue of a tester.

Of course you can be! Different people have different skills in testing based on the needs of their organisations. Just because your organisation isn’t in a place where exploratory testing is needed (or can be utilised), that doesn’t make you a bad tester at all.

The skills we use in testing (critical analysis, learning, inquisitiveness, asking questions, coming up with ideas, fearlessness, note taking, sharing information) are present across so many types of testing. Those are the things that make you a good tester, not the specifics of how you implement a technique.

(However knowing exploratory testing will certainly help your information finding massively and will make you more marketable in the job market.)

In our project, test cases are necessary as the automation engineer needs them to refer to when writing automation scripts. How can we implement exploratory testing in a culture like this?

Exploratory testing helps us find out information about what we don’t know. Once we’ve run our exploration we have information that can be shared back to our automation capability to create scripted tests.

- Run exploratory charters to learn information about the product.

- Debrief the team to agree which behaviours we need to keep.

- Share that agreement & the test notes with automation engineers (or write those scripted tests yourself).

And remember that we’re not automating everything! Be selective about what needs to be automated from your exploratory testing results.

What are the main outcomes after exploratory testing and debriefing? Does it only result in notes to do (bug or fix) on the task board? Do you store and go back to your charters later? Do they become regression tests? Do you create any test matrix or statistics from your testing? If so, how?

The output of exploratory testing is information that I share back to the team via my test notes and debriefs. From our debrief we may create fixes, bugs or new tests on the Jira board; this is based on an agreement with the person I’m debriefing.

I store charters against Jira tickets for traceability, and you can use something like TestRail or Zephyr to help with that. Just write your charter as the scenario and attach your test notes to the scenario (which is more than good enough to meet ISO standards of auditability).

Anything we learn from testing via exploratory testing can become a regression test in the same way any other test would.

- We do the testing to find information

- Through a debrief we agree which behaviours we’d like to persist (and which need a regression test)

- We create an automated test script based on what we know about the system (either directly or by sharing it with the SDET who’s writing the automation)

I don’t create matrices or reports / stats for my exploratory testing because in my organisation there’s no call for it. Instead I provide demos and debriefs about the quality of the system and also share information at stand ups about how good what we’re building is.

“I’ve looked at this endpoint, looking at all of the CRUD behaviours with different valid and invalid data types, including some naughty strings and 4-byte character inputs. It’s looking good: the responses show we persist the data in the database, and the error handling for fields X, Y and Z is awesome, with really meaningful error responses. Today I can find out more information about the structure of the JSON queries we send, if we think we need it?”

rather than

“59.00087% of tests have been run.”

In the first instance the team knows what’s been covered and what else I might look into (and how good things are). They see that I’m engaged, trying to help them out and working with them. In the second instance, when I just report a number, I’m not really saying anything about the quality of the system; I’m basically just telling people I’ve done something.

If people need a type of reporting at your organisation then I’d recommend creating a document like the below that will allow people to see what testing has been done, details of the quality (through bugs) and an indication of what’s left to run and how long that’ll take.

Should exploratory testing be done by the tester with the most business knowledge or actually not?

A good question. Exploratory testing is the learning of our system or product to share information about it. Because we’re not confirming behaviour, but instead learning about what happens and playing it back, we don’t really need to know about the system in advance.

Business knowledge can help to identify issues more easily, and shorten feedback time to seeing issues, but you can always debrief with a developer or product owner to see where business issues might arise.

Don’t fall into the trap of thinking that exploratory testing is like an ad hoc smoke test, just unscripted regression to check what we already think should happen. If we’re confirming what we already know then we’re not really exploring the system and probably don’t need to use exploratory testing techniques for that testing; try scripted testing instead.

How do we retain knowledge and transfer it while doing exploratory testing? Assume the tester leaves and a new tester joins: since we don’t maintain scripts and steps, how can the new tester learn things?

As a part of exploratory testing we should be creating test notes and sharing our findings about the system through demos, debriefs and the notes themselves. Regression tests will also be documented by the automated tests we create.

If you’re in an environment where there’s a lot of new testers (or any team mates) joining your project then use that as an opportunity to make your test notes more verbose, so that they can be used for training.

Don’t fall into the trap of thinking that exploratory testing is chaotic, ad-hoc and not documented. We can plan our tests and charters with a scope and purpose, timebox them, prioritise them and create useful test notes for sharing the information we find.

How do you utilise or avoid biases when you are using your life experience as a heuristic/oracle?

I think inherently using your own life experience for something will involve bias. Asking “will I like this?” is leaning into your own bias completely. The trick is to know this, be humble and not use this as the only heuristic for your testing. Learn about other heuristics, share your findings with your team mates to see what they think, learn about your biases and also have empathy for your customers that aren’t like you.

It’s a little harder to think about what other people might want / like from a product, but it’s totally doable. The more you learn about other people then the easier that’ll be too. Why not look into How Diversity and Inclusion can help improve testing?