Effective software teams are always looking for ways to make their members more efficient. They realize that improving the development process is a good way to improve quality and reduce time to market. Sometimes this means adding new tools, but sometimes it just requires stepping back from the day-to-day work to take a fresh look at what we’re doing.

Consider testing. Most of us are working on projects that have thousands of test cases, and it’s common for a full run to take hours if not days. Each week our teams add new tests, and then a couple of times a year, we realize that the total test time has gotten too long, so we add hardware resources for automated testing, or add staff for manual and free-play testing.

Today I’d like to help you think about some alternatives to throwing more resources at the problem. Below are some simple steps that I’ve used when faced with the “testing is taking too long” problem.

Step 1: Analyze test failure data

It should be easy for any project to automate the collection of test failure data. Most likely you already have this data in some format, so just make sure that it’s consistent (e.g., every test has a unique ID) and can be accessed programmatically for analysis. No one wants to be scanning log files. The underlying storage format doesn’t matter as long as you expose an API that lets you iterate through all test runs and access the list of tests and their historical status. Also, make sure that you are recording this information every time any user runs any test in any context. The more data the better. Once you have the raw data, group the tests into three buckets by failure rate: often, sometimes, seldom.
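As a rough illustration of the bucketing step, the sketch below computes a failure rate per test from a list of recorded runs and sorts tests into the three buckets. The `ResultRecord` shape, the function names, and the 10% / 1% cut-offs are all hypothetical placeholders, not values from this post; pick thresholds that match your own history.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ResultRecord:
    """One test outcome from one run (hypothetical record shape)."""
    test_id: str  # stable, unique test identifier
    passed: bool


def failure_rates(runs):
    """Return {test_id: fraction of recorded executions that failed}.

    `runs` is an iterable of runs; each run is an iterable of ResultRecord.
    """
    executions = defaultdict(int)
    failures = defaultdict(int)
    for run in runs:
        for record in run:
            executions[record.test_id] += 1
            if not record.passed:
                failures[record.test_id] += 1
    return {tid: failures[tid] / executions[tid] for tid in executions}


def bucket_by_failure_rate(rates, often=0.10, sometimes=0.01):
    """Group test ids into 'often', 'sometimes' and 'seldom' buckets.

    The 10% and 1% cut-offs are placeholders; tune them for your project.
    """
    buckets = {"often": [], "sometimes": [], "seldom": []}
    for tid, rate in sorted(rates.items()):
        if rate >= often:
            buckets["often"].append(tid)
        elif rate >= sometimes:
            buckets["sometimes"].append(tid)
        else:
            buckets["seldom"].append(tid)
    return buckets


# Usage: 20 synthetic runs of three tests.
runs = [
    [ResultRecord("login", i % 2 == 0),  # fails on every odd-numbered run
     ResultRecord("search", i != 3),     # fails exactly once
     ResultRecord("export", True)]       # never fails
    for i in range(20)
]
print(bucket_by_failure_rate(failure_rates(runs)))
# → {'often': ['login'], 'sometimes': ['search'], 'seldom': ['export']}
```

Storing one record per test per run keeps the analysis independent of how the raw logs are stored, which is the point of exposing an API rather than scanning files.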

Step 2: Fix or delete fragile tests

The next step is to subdivide the tests that fail “often” into two groups: the ones that find bugs, and the ones that are fragile. Nothing will destroy confidence in your testing and reporting environment more than fragile tests. If your immediate inclination when you see a broken test is to re-run it and hope it passes, then your process is broken. Fragile tests need to be reworked or deleted. If it’s not easy to fix these tests quickly, and you believe they have value, then at least disable them for everyday testing and run them periodically when there’s slack time in your infrastructure.
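The post doesn’t say how to tell a fragile test from one that finds bugs. One common heuristic, assumed here, is to flag any test that has both passed and failed on the same code revision, since its outcome changed while the code did not. The record layout `(test_id, revision, passed)` and the function names are hypothetical; treat the result as a list of suspects to investigate, not a verdict.

```python
from collections import defaultdict


def find_fragile_tests(records):
    """Return ids of tests that both passed and failed on the same revision.

    `records` is an iterable of (test_id, revision, passed) tuples. If the
    outcome changes while the code under test does not, the test (or its
    environment) is suspect. This is a heuristic, not proof.
    """
    outcomes = defaultdict(set)  # (test_id, revision) -> set of observed results
    for test_id, revision, passed in records:
        outcomes[(test_id, revision)].add(passed)
    return sorted({tid for (tid, _rev), seen in outcomes.items() if len(seen) == 2})


def split_often_failing(often, records):
    """Split the 'often' bucket into (finds_bugs, suspected_fragile) lists."""
    fragile = set(find_fragile_tests(records))
    finds_bugs = [t for t in often if t not in fragile]
    suspected_fragile = [t for t in often if t in fragile]
    return finds_bugs, suspected_fragile


records = [
    ("login", "abc123", False),
    ("login", "abc123", True),    # same revision, different outcome
    ("upload", "abc123", False),
    ("upload", "abc123", False),  # fails consistently until the bug is fixed
    ("upload", "def456", True),
]
print(split_often_failing(["login", "upload"], records))
# → (['upload'], ['login'])
```

This only catches tests that flip on identical code; a test that breaks on every unrelated change will still look like a bug-finder and needs a human review.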

Step 3: Understand your tests

More important than running tens of thousands of tests is ensuring that you’re running the right tests.

There are lots of ways we could group tests, but I am partial to things that are simple and easy to maintain, so let’s create three more buckets and group the tests by importance of the feature being tested: high, medium, low.

This will have to be assigned manually, but it doesn’t have to be perfect; we can refine the groups over time. Keep in mind that importance should be judged from the end user’s point of view, and remember that most users use only a fraction of a product’s features. So if 90% of your tests end up in the “high” bucket, take a second look. Also, the features most valued by your customers might not be the ones your developers like most, so ask your customer support people for help with this part, and review user bug reports to gain insight into how customers are using the product.
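The importance labels themselves come from people, but the “take a second look” check is easy to automate. Below is a minimal sketch, assuming labels are kept in a plain `{test_id: level}` mapping; the function names and the 50% warning threshold are hypothetical choices, not figures from the post.

```python
from collections import Counter

IMPORTANCE_LEVELS = ("high", "medium", "low")


def importance_shares(test_importance):
    """Return {level: fraction of tests} for a {test_id: level} mapping."""
    unknown = set(test_importance.values()) - set(IMPORTANCE_LEVELS)
    if unknown:
        raise ValueError(f"unknown importance level(s): {sorted(unknown)}")
    counts = Counter(test_importance.values())
    total = len(test_importance) or 1  # avoid dividing by zero on empty input
    return {level: counts[level] / total for level in IMPORTANCE_LEVELS}


def check_high_share(test_importance, max_high_share=0.5):
    """Return a warning if too many tests are marked 'high', else None."""
    share = importance_shares(test_importance)["high"]
    if share > max_high_share:
        return f"{share:.0%} of tests are 'high' importance; take a second look"
    return None


# Hand-assigned labels (hypothetical test ids).
importance = {"checkout": "high", "login": "high", "export_pdf": "medium", "theme_picker": "low"}
print(importance_shares(importance))
print(check_high_share(importance))  # 50% is not above the threshold, so None
```

Rejecting unknown labels early keeps typos such as “hihg” from silently dropping tests out of every bucket.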

Step 4: Prune obsolete tests

How often do we review the tests that we’re running? Do we remove the ones that no longer make sense or are redundant? Or do we continually maintain and run old tests, because we’re not exactly sure what they do, and we’re afraid to delete them? Just like refactoring a code base, refactoring test cases is critical to maintain efficiency, but where should we start?

When you’ve finished step 3, chances are you’ll have some tests in the “low” bucket because you’re not really sure what they do. This is the time to take a closer look at those and consider deleting or refactoring them.
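The review itself is manual, but a worklist helps decide where to start. The sketch below assumes you also have an average runtime per test (not something the post mentions) and orders the “low” bucket most expensive first, so the reviews that could save the most test time come first. The function and test names are hypothetical.

```python
def pruning_worklist(test_importance, avg_seconds):
    """Return 'low'-importance test ids, most expensive first, for review.

    test_importance: {test_id: "high" | "medium" | "low"}
    avg_seconds:     {test_id: average runtime in seconds}; tests with no
                     timing data are treated as taking 0 seconds.
    Ties are broken by test id so the list is stable between runs.
    """
    low = [t for t, level in test_importance.items() if level == "low"]
    return sorted(low, key=lambda t: (-avg_seconds.get(t, 0.0), t))


importance = {"legacy_import": "low", "login": "high", "old_report": "low", "tooltip": "low"}
avg_seconds = {"legacy_import": 2.0, "old_report": 30.0}
print(pruning_worklist(importance, avg_seconds))
# → ['old_report', 'legacy_import', 'tooltip']
```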

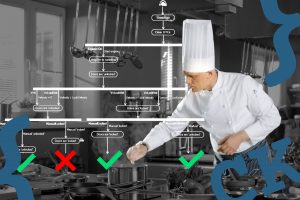

Step 5: Prioritize the tests

Once you’ve fixed (or deleted) your fragile tests and deleted the obsolete ones, assign priorities to the 9 combinations of the two attributes (failure rate and importance), as shown in the table. This will result in 5 test priority groups. Next, check that the percentage of tests in each group makes sense for your project. There’s nothing magical about the priority groups or percentages I’ve used in this example, and you should customize them for your project. But if 90% of your tests are failing a lot and testing high-priority features, you might have problems beyond the scope of this post!
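The exact table isn’t reproduced in the text, so here is one hypothetical way to collapse the 3×3 grid into 5 groups: rank each attribute from 1 (most urgent) to 3 and add the ranks, which yields priorities 1 through 5. This additive rule is an assumption for illustration and may not match the author’s table; replace `priority()` with a lookup table if your project weighs the attributes differently.

```python
from collections import Counter

# Rank 1 is the most urgent value of each attribute.
FAILURE_RANK = {"often": 1, "sometimes": 2, "seldom": 3}
IMPORTANCE_RANK = {"high": 1, "medium": 2, "low": 3}


def priority(failure_bucket, importance):
    """Map one of the 9 (failure rate, importance) combinations to 1-5.

    1 = fails often and tests a high-importance feature (run first);
    5 = fails seldom and tests a low-importance feature (run last).
    """
    return FAILURE_RANK[failure_bucket] + IMPORTANCE_RANK[importance] - 1


def priority_shares(tests):
    """tests: {test_id: (failure_bucket, importance)} -> {priority: fraction}."""
    counts = Counter(priority(f, i) for f, i in tests.values())
    total = len(tests) or 1  # avoid dividing by zero on empty input
    return {p: counts[p] / total for p in range(1, 6)}


# Print the 3x3 matrix: rows are failure buckets, columns are importance levels.
for f in FAILURE_RANK:
    print(f"{f:>9}", [priority(f, i) for i in IMPORTANCE_RANK])
```

The loop prints the rows `[1, 2, 3]`, `[2, 3, 4]` and `[3, 4, 5]`, and `priority_shares` gives the per-group percentages to sanity-check against your expectations.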

Step 6: Execute tests in priority order

Now that you have your tests grouped by priority level, you can develop an execution strategy. I recommend executing the tests in priority order and stopping after some number of failures, perhaps 5 or 10. Allowing multiple failures before stopping is useful because it helps developers see a pattern in the results when investigating a problem.
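A minimal sketch of that execution strategy follows. The `run_test` callback is a hypothetical hook standing in for whatever actually launches a test in your infrastructure; everything else is plain Python.

```python
from typing import Callable, Dict, List


def run_in_priority_order(
    priorities: Dict[str, int],
    run_test: Callable[[str], bool],
    max_failures: int = 5,
) -> List[str]:
    """Run tests from priority 1 upward, stopping after `max_failures` failures.

    `run_test` is a hypothetical hook that executes one test and returns True
    if it passed. Tests with equal priority run in test-id order. Returns the
    ids of the failed tests in the order they failed.
    """
    failed: List[str] = []
    for test_id in sorted(priorities, key=lambda t: (priorities[t], t)):
        if not run_test(test_id):
            failed.append(test_id)
            if len(failed) >= max_failures:
                break  # enough failures to show a pattern; stop early
    return failed


# Usage with a fake runner in which three tests are broken.
broken = {"cart", "export", "theme"}
executed = []


def fake_runner(test_id):
    executed.append(test_id)
    return test_id not in broken


prios = {"login": 1, "cart": 1, "search": 2, "export": 3, "theme": 5}
print(run_in_priority_order(prios, fake_runner, max_failures=2))
# → ['cart', 'export']
```

With `max_failures=2`, the priority-5 test `theme` never runs: the run stops as soon as the second failure appears.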

Step 7: Continually refine things

The final step is to ensure that you constantly revisit the test importance and failure frequency attributes. As your product evolves, older features might move down in importance and newer features might move up, leading to a different distribution of tests among the priority levels.
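Importance has to be revisited by hand, but the failure side can be refreshed automatically. One option, assumed here rather than taken from the post, is to recompute each test’s bucket over a sliding window of its most recent runs so that old failures age out, then report which tests moved since the last review. The window size, cut-offs, and function names are hypothetical.

```python
def failure_bucket(rate, often=0.10, sometimes=0.01):
    """Classify a failure rate; the cut-offs are placeholder values."""
    if rate >= often:
        return "often"
    if rate >= sometimes:
        return "sometimes"
    return "seldom"


def recent_buckets(history, window=50):
    """Bucket each test using only its last `window` outcomes.

    history: {test_id: [passed, ...]} ordered oldest to newest.
    Tests with no recorded outcomes are skipped.
    """
    buckets = {}
    for test_id, outcomes in history.items():
        recent = outcomes[-window:]
        if recent:
            buckets[test_id] = failure_bucket(recent.count(False) / len(recent))
    return buckets


def bucket_changes(previous, current):
    """Return {test_id: (old_bucket, new_bucket)} for tests that moved."""
    return {
        t: (previous[t], current[t])
        for t in sorted(previous.keys() & current.keys())
        if previous[t] != current[t]
    }


# "login" failed a lot early on but has passed in each of the last 50 runs.
history = {"login": [False] * 40 + [True] * 50, "search": [True] * 90}
last_review = {"login": "often", "search": "seldom"}
print(bucket_changes(last_review, recent_buckets(history)))
# → {'login': ('often', 'seldom')}
```

Over its full history `login` would still land in “often” (40 failures in 90 runs), which is exactly the stale classification the window is meant to avoid.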

Further reading

Closely related to the points I’ve made above is the more general idea of Test Case Prioritization (TCP). Here are two academic papers that summarize some of the approaches being used for TCP. Enjoy.