A Craft CMS Development Workflow With Docker: Part 5 - Testing

As part of my Craft CMS Development Workflow With Docker series of articles, I'll be covering, from start to finish, setting up a development workflow for working with Craft CMS in Docker.

- Part 1 - Local Development

- Part 2 - Buildchains in Docker

- Part 3 - Continuous Integration

- Part 4 - Docker In Production

- Part 5 - Testing

- Part 6 - Performance

- Part 7 - Multi-Developer Workflow

If you're following along with this series after completing Part 4, you'll have a Craft CMS project running on a server after passing through a continuous integration process which builds your Docker images.

In Part 5 we'll be exploring how to augment this system to include automated testing so that we can deploy with confidence.

Testing Craft Projects

Before we start, a few words about the scope of this article. The majority of applications which are built using Craft CMS are front end in nature. Pixel & Tonic have done an awesome job of all the back end stuff so that we don't need to worry about it.

Just as you wouldn't test a 3rd party API just because you're integrating with it, the scope of our application doesn't extend to the back end code, so our testing also shouldn't be creeping in there.

Our tests should therefore be focusing on the front end and data model, the bits that we've been working on. The framework for testing presented here is also open-ended so you can drop in whatever other testing tools you fancy.

** If you're building plugins or an API then there's more of an argument for creating low level unit and integration tests. We won't cover that here because we aren't building a plugin, module or API right now. **

The Setup

First things first, we need to get our CI set up to run whatever tests we want to run. Open up your .gitlab-ci.yml and update the following. I've kept all of the top level properties but hidden anything within them that hasn't changed.

```yaml
image: tmaier/docker-compose:18.09

services:
  ...

stages:
  - build
  - test
  - deploy

variables:
  ...

before_script:
  ...

build:
  ...

test:
  stage: test
  script:
    - 'false'

deploy:
  ...
```

We've created a failing test!

If we were to commit and push this now, we would see GitLab create three stages in its CI pipeline: build would succeed, test would fail and deploy would not run at all.

The success or failure of a task in GitLab is determined by the return codes of the script lines within the task. In our example above we are running false, a command which always exits with a non-zero (error) return code, so the test task fails and the pipeline stops before deploy.
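To make the return-code rule concrete, here is a simplified model of how a job treats its script lines, written as a small POSIX sh function. run_job is a hypothetical name, and this is an illustration of the idea rather than how the GitLab runner is actually implemented.

```shell
#!/bin/sh
# Simplified model of a CI job (not GitLab's actual runner code): run each
# script line in order and stop at the first one with a non-zero exit code.
run_job() {
  for line in "$@"; do
    status=0
    # "|| status=$?" records the exit code without tripping "set -e".
    sh -c "$line" || status=$?
    if [ "$status" -ne 0 ]; then
      echo "Job failed: '$line' exited with $status"
      return "$status"
    fi
  done
  echo "Job succeeded"
}

# Usage: prints "testing", then "Job failed: 'false' exited with 1";
# the last line never runs.
# run_job 'echo testing' 'false' 'echo deploying'
```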

We can also use tests to generate reports which don't necessarily have to pass or fail - they can just output some useful information for us to review later.

With this structure in place I'd like to demonstrate a few potential uses which are tailored to our Craft project. These may or may not be useful to you when you start working on real-world projects, but hopefully they'll demonstrate some of the possibilities.

Content

In order to test our site we'll need it to be in a state similar to what will be found in our staging or production environments. Most importantly we'll need Craft to be installed and our content model established in the database. It would also be useful to have some pre-populated content.

There are two ways we can go about doing this. I regularly use one of them - the other is a bit more complex and I've not quite managed to get it working nicely yet, but I wanted to get this article written so I'll just have to revisit it later...

The Way That Works

Our first method is to simply get our site into a representative state locally and then dump the database. We can restore this DB dump before our CI tests in order to make sure we're testing against a known state. Whenever the content model within our site changes we'll need to remember to export another database dump. This is pretty standard practice for multi-dev teams anyway - the database content is a huge part of any Craft project's specification and therefore should be made available to new developers starting on a project.

** Craft 3.1's Project Config will change this a bit by allowing the data model to be codified outside of the database. That will make it easy to transfer the data model to our CI environment, but it won't create test content that adheres to it. More on that in another article. **

Anyway, you probably want some commands to run by now. Make sure your project is up and running then hit it with this:

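```shell
mkdir dbdumps

docker-compose exec -T database mysqldump -u project -p"project" project > dbdumps/dump.sql
```

Here we've connected to our database container and executed mysqldump inside it. By default mysqldump just prints out the SQL that it generates, so we capture that output and push it into an SQL file in our newly created dbdumps directory. The -T flag stops docker-compose from allocating a pseudo-TTY; without it, anything mysqldump writes to stderr (such as its warning about using a password on the command line) can end up mixed into the dump file.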
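A broken dump usually only shows up later as a failed import in CI, so it can be worth sanity-checking the file before committing it. The sketch below assumes mysqldump ran with its default comments enabled, in which case a complete dump ends with a "-- Dump completed" line. It also looks for mysqldump's password warning, which can land in the file when docker-compose exec allocates a TTY (its -T flag prevents that). check_dump is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: sanity-check a mysqldump file before committing or importing it.
# Assumes mysqldump's default comments are on, so a complete dump ends
# with a line starting "-- Dump completed".
check_dump() {
  file=$1
  if [ ! -s "$file" ]; then
    echo "Dump $file is missing or empty" >&2
    return 1
  fi
  # stderr merged in through a TTY would show up as a non-SQL line like this.
  if grep -q "Using a password on the command line" "$file"; then
    echo "Dump $file contains a mysqldump warning; re-run with -T" >&2
    return 1
  fi
  # A dump cut short (e.g. the container stopped mid-way) lacks the footer.
  if ! tail -n 1 "$file" | grep -q "^-- Dump completed"; then
    echo "Dump $file looks truncated" >&2
    return 1
  fi
  echo "Dump $file looks OK"
}

# Usage: check_dump dbdumps/dump.sql
```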

Next we need to create a docker-compose config that we want to execute in our CI environment. As was discussed in the Docker in Production article, we can't simply re-use our existing local docker-compose.yml because that is set up to build our images rather than pull the already created ones from our container registry.

Make a copy of the docker-compose.yml that we created for our production server, name it docker-compose-ci.yml and place it in the root of our project repo. In this new file we just need to update a couple of bits:

- Remove the restart properties from all three containers

- Change the database password to "project" in both the database and php container definitions

Once that's done we can update our .gitlab-ci.yml to tell it to boot up our project and import the database:

```yaml
test:
  stage: test
  script:
    - docker-compose -f docker-compose-ci.yml up -d
    - sleep 30
    - cat dbdumps/dump.sql | docker exec -i $(docker-compose -f docker-compose-ci.yml ps -q database) mysql -u project -p"project" project
```

What's with that sleep 30? I hear you scoff.

The database takes a little while to initialise so we have to delay our import until it's ready. The 30 is arbitrary. This would be much better if we could poll the connectivity of the database in a loop, something like:

```shell
for try in {1..30} ; do docker-compose exec database mysql -u project -p"project" -e ";" && break; sleep 1; done
```

But I couldn't get it to work due to TTY issues. Let me know if you manage to solve that one and I'll happily get rid of that sleep.
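One likely cause of the TTY trouble is that docker-compose exec allocates a pseudo-TTY by default, and a CI job has no terminal; its -T flag turns that off. The {1..30} brace expansion is also a bash feature that plain POSIX sh (which may be the shell your CI image uses) doesn't support. Here's a sketch of a POSIX sh retry helper under those assumptions. wait_for is a hypothetical name, SELECT 1 is my choice of connectivity query, and none of it has been run against the pipeline above.

```shell
#!/bin/sh
# Sketch: retry a command until it succeeds or we run out of attempts.
# wait_for MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
  max_tries=$1
  delay=$2
  shift 2
  try=1
  while :; do
    if "$@"; then
      return 0
    fi
    if [ "$try" -ge "$max_tries" ]; then
      echo "Gave up after $max_tries attempts: $*" >&2
      return 1
    fi
    try=$((try + 1))
    sleep "$delay"
  done
}

# In the CI job, in place of "sleep 30" (assumes the service and credentials
# from docker-compose-ci.yml):
# wait_for 30 1 docker-compose -f docker-compose-ci.yml exec -T database \
#   mysql -u project -p"project" -e "SELECT 1"
```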

So now we have a copy of our project running inside our CI environment - our Docker containers inside a GitLab CI provisioned Docker container running on a Google Cloud VPS that's also a container... Container-ception? Luckily time doesn't slow as noticeably with containers.

The Way That I'd Like Things To Work

It would be much nicer if we were able to create a copy of our project and generate test data for it without having to lug around database dumps. In my past Laravel-based life this would easily be achieved using model factories. The closest equivalent that Craft has is Yii Fixtures. These allow us to define some data for a project's models and then load that data into the database.

I've not used them for anything robust, but from some brief experimentation they don't lend themselves well to defining the data that is stored in Craft Entries, especially when you start using complex or custom field types like matrix fields or tables.

This also only solves the creation of models within a project, not the creation of the schema. Project Configs in Craft 3.1 should help with that but for now the difficulty of modelling anything but the simplest field types using Fixtures makes them unlikely to replace my current DB dump preference.

We Haven't Tested Anything Yet

Correct. We've set up a base upon which we can run different types of tests against our running project. The following sections will provide a couple of ideas for tests that you might like to perform.

Lighthouse

A favourite testing tool of the web developer. Running Lighthouse during CI will give us loads of useful information about our project and highlight any potential issues, especially with mobile devices, that might prompt us to think again about pushing the deploy button.

You can use the following as a template to get you started:

test:

stage: test

script:

- docker-compose -f docker-compose-ci.yml up -d

- sleep 30;

- cat dbdumps/dump.sql | docker exec -i $(docker-compose ps -q database) mysql -u project -p"project" project

- mkdir reports && chmod 777 reports

- wget https://raw.githubusercontent.com/jfrazelle/dotfiles/master/etc/docker/seccomp/chrome.json -O ./chrome.json

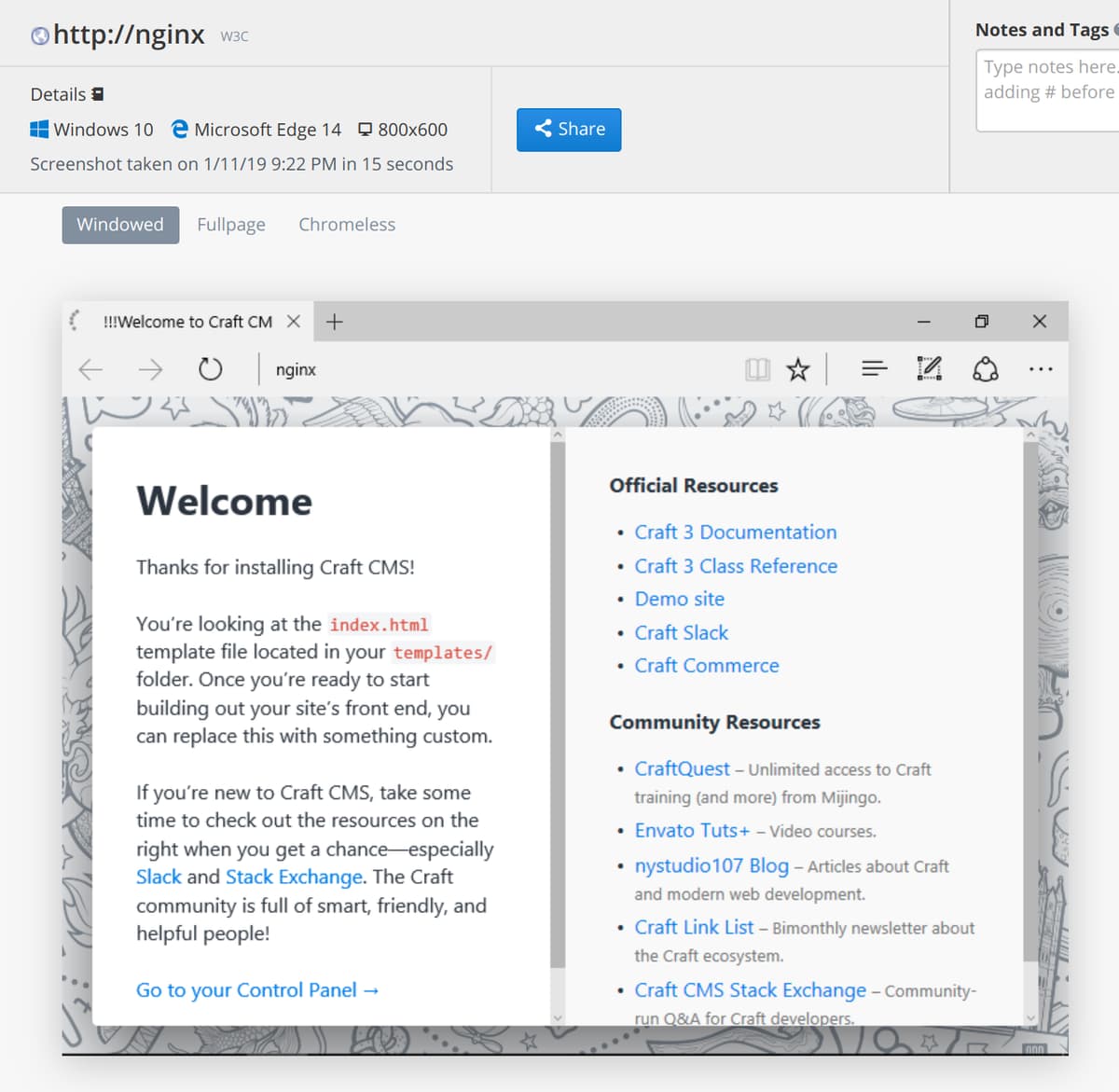

- docker run --network ${CI_PROJECT_NAME}_default --rm -v $(pwd)/reports:/home/chrome/reports --security-opt seccomp=./chrome.json femtopixel/google-lighthouse http://nginx

artifacts:

paths:

- ./reports

expire_in: 1 weekThat will run lighthouse against your home page, generate a report and store it as a GitLab CI Asset for you to review once the job has completed.

Because Lighthouse is running on the same server as your project, network latencies won't be taken into account, and response times will differ from your production environment because the hardware you're running on will be very different. So take the finer grained timing information with a pinch of salt. It can still be useful for relative comparisons between different versions of your app though.

There are lots of things that you can tweak to change the way Lighthouse does its thing, so I encourage you to experiment. It might also be fun to write a little script that runs through a list of URLs that you'd like to test rather than only testing the home page.
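Here's a rough sketch of that multi-URL script in POSIX sh. It reuses the image, network name and seccomp profile from the job above and would run inside that job after the containers are up and chrome.json has been downloaded. urls.txt and the function names are hypothetical. I haven't checked whether the image gives each report a unique file name, so each URL gets its own report directory to be safe.

```shell
#!/bin/sh
# Sketch: run Lighthouse once per URL listed in a text file.
# Assumes the network, seccomp profile and image from the test job above.

# Turn a URL into a safe directory name,
# e.g. http://nginx/about -> http___nginx_about
url_slug() {
  printf '%s\n' "$1" | sed 's/[^A-Za-z0-9]/_/g'
}

# Run the Lighthouse container for one URL, giving each URL its own report
# directory so that reports for different pages can't overwrite each other.
run_lighthouse() {
  dir="$(pwd)/reports/$(url_slug "$1")"
  mkdir -p "$dir" && chmod 777 "$dir"
  # "< /dev/null" keeps docker from reading the URL list on stdin.
  docker run --network "${CI_PROJECT_NAME}_default" --rm \
    -v "$dir:/home/chrome/reports" \
    --security-opt seccomp=./chrome.json \
    femtopixel/google-lighthouse "$1" < /dev/null
}

# Read URLs from file $1, skipping blank lines and # comments.
# Returns non-zero if any run failed, so the CI job fails too.
check_urls() {
  failures=0
  while IFS= read -r url || [ -n "$url" ]; do
    case "$url" in
      '' | '#'*) continue ;;
    esac
    run_lighthouse "$url" || failures=$((failures + 1))
  done < "$1"
  [ "$failures" -eq 0 ]
}

# Usage in the job, in place of the single docker run line:
# check_urls urls.txt
```

The ./reports artifact path still covers the per-URL subdirectories, so the job's artifacts section wouldn't need to change.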

Here are some links that might help:

Link Checker

Another fairly straightforward test that you can employ is detection of broken links. We can do this using a piece of software called LinkChecker which we will (of course) run inside another Docker container.

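```yaml
test:
  stage: test
  script:
    - docker-compose -f docker-compose-ci.yml up -d
    - sleep 30
    - cat dbdumps/dump.sql | docker exec -i $(docker-compose -f docker-compose-ci.yml ps -q database) mysql -u project -p"project" project
    - mkdir reports && chmod 777 reports
    - docker run --network ${CI_PROJECT_NAME}_default --rm -v $(pwd)/reports:/reports linkchecker/linkchecker --file-output=html/utf_8//reports/linkchecker.html http://nginx
  artifacts:
    when: always
    paths:
      - ./reports
    expire_in: 1 week
```

You are the proud owner of another HTML-based report showing the status of all internal and outbound links for your site. What a treat.

LinkChecker exits with a non-zero code when it finds invalid links, which fails the job. GitLab only uploads artifacts from successful jobs by default, so when: always makes sure you still get the report in exactly the case where you need it.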

Again, experimentation is a good thing here. Here are a few resources to help out:

CrossBrowserTesting Screenshots

This one is pretty cool, but costs $$.

We can perform cross-device visual regression testing by taking screenshots of our application as rendered on multiple different devices while our project is still in CI.

We'll need a CrossBrowserTesting API key so if you want to follow along go and get a trial account here. Once you've set that up grab your Authkey from the Manage Account section.

In GitLab add two new CI Variables by navigating to Settings > CI / CD > Environment Variables. Create one called CBT_USERNAME containing the email that you used to sign up with, and another called CBT_AUTH_KEY containing your Authkey.

Set this as your test:

```yaml
test:
  stage: test
  script:
    - docker-compose -f docker-compose-ci.yml up -d
    - sleep 30
    - cat dbdumps/dump.sql | docker exec -i $(docker-compose -f docker-compose-ci.yml ps -q database) mysql -u project -p"project" project
    - docker run -d --network ${CI_PROJECT_NAME}_default --rm -e CBT_USERNAME=$CBT_USERNAME -e CBT_TUNNEL_AUTHKEY=$CBT_AUTH_KEY mattgrayisok/cbt_tunnel:latest
    - sleep 30
    - apk add --no-cache curl jq
    - SC_ID=$(curl -s --user $CBT_USERNAME:$CBT_AUTH_KEY --data "browser=Mac10.11|Safari9|1024x768&browsers=Win10|Edge20|800x600&url=http://nginx" https://crossbrowsertesting.com/api/v3/screenshots/ | jq -r '.screenshot_test_id')
    - sleep 2
    - while curl -s --user $CBT_USERNAME:$CBT_AUTH_KEY https://crossbrowsertesting.com/api/v3/screenshots/$SC_ID | jq -r '..|.state?' | grep -qE "running|launching"; do sleep 2; done
```

Jesus Christ, what is that monstrosity?

When researching for this article I actually thought this test would be a lot simpler. I ended up having to create a new docker image to tunnel traffic into the CI environment (mattgrayisok/cbt_tunnel) and then figure out how to wait for the screenshot task to be completed before allowing the CI task to complete - otherwise the job ends immediately and our project's containers are all destroyed 🙄.

Anyway, it works - it would probably benefit from all of the CBT related commands being wrapped in a shell script and added to the repo.
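As a starting point for that shell script, here's a sketch that wraps the CBT steps from the job above. It keeps the same endpoints, request body, curl/jq calls and CBT_USERNAME / CBT_AUTH_KEY variables; the function names and the script path in the usage note are hypothetical, and I haven't run this version against CBT.

```shell
#!/bin/sh
# Sketch: the CBT screenshot steps from the test job, wrapped in functions.
CBT_API="https://crossbrowsertesting.com/api/v3/screenshots"

# Fail early with a readable message if any named variable is empty or unset.
require_env() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing required variable: $name" >&2
      return 1
    fi
  done
}

# Start a screenshot test of URL $1 and print its id.
cbt_start() {
  curl -s --user "$CBT_USERNAME:$CBT_AUTH_KEY" \
    --data "browser=Mac10.11|Safari9|1024x768&browsers=Win10|Edge20|800x600&url=$1" \
    "$CBT_API/" | jq -r '.screenshot_test_id'
}

# Succeeds while any screenshot in test $1 is still running or launching.
cbt_in_progress() {
  curl -s --user "$CBT_USERNAME:$CBT_AUTH_KEY" "$CBT_API/$1" \
    | jq -r '..|.state?' | grep -qE 'running|launching'
}

# Start a test and block until it finishes, so the job (and the project's
# containers) stay alive while CBT takes its screenshots.
main() {
  require_env CBT_USERNAME CBT_AUTH_KEY || return 1
  id=$(cbt_start "${1:-http://nginx}")
  if [ -z "$id" ] || [ "$id" = "null" ]; then
    echo "Could not start a screenshot test" >&2
    return 1
  fi
  sleep 2
  while cbt_in_progress "$id"; do
    sleep 2
  done
}
```

Saved as, say, scripts/cbt-screenshots.sh with main "$@" as its last line, the SC_ID, sleep 2 and while lines in the job could shrink to sh scripts/cbt-screenshots.sh http://nginx. The apk add line stays, since the script still needs curl and jq.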

You can see the results by having a look in the CBT dashboard under Screenshots > View Test Results. Hopefully you'll see some lovely renderings of your project.

CBT also does some cool visual comparison stuff to tell you which grabs look different to the others and why, but I'll leave you to explore that.

Next Steps

Decide what the most important things are for you to test in your project and then test them. If you can get your testing tool to return an error code when something is wrong, even better, as that'll stop your pipeline from progressing.
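When a tool only produces a score rather than a pass/fail exit code, a small threshold check can turn that score into one. This is a sketch with a hypothetical helper name; how you extract the score depends entirely on the tool, so here it's just passed in as an argument.

```shell
#!/bin/sh
# Sketch: turn a numeric score from a report into a pass/fail exit code.
# check_threshold NAME SCORE MINIMUM (decimals such as 0.87 are fine)
check_threshold() {
  name=$1
  score=$2
  minimum=$3
  # [ ] only compares integers, so let awk do the decimal comparison;
  # awk exits 0 when score >= minimum and 1 otherwise.
  if awk -v s="$score" -v m="$minimum" 'BEGIN { exit !(s + 0 >= m + 0) }'; then
    echo "PASS: $name $score >= $minimum"
  else
    echo "FAIL: $name $score < $minimum"
    return 1
  fi
}
```

For example, Lighthouse's JSON report stores each category score as a number between 0 and 1 at .categories.performance.score, so with jq installed something like check_threshold performance "$(jq '.categories.performance.score' report.json)" 0.8 would fail the job below 0.8. report.json is a placeholder; whether you get a JSON report depends on how you run Lighthouse.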

There are hundreds of different mechanisms for testing web projects these days. A few other left-field ideas:

- Use CrossBrowserTesting and Selenium to record videos of page scroll animations

- Smash your website randomly using gremlins.js

- Obey the law and make sure your website is accessible using pa11y

- Use Codeception to perform and validate complex journeys through your site

In Part 6 we'll be looking at the performance of our project by making some tweaks to the default configs that come with our base images. We might do some load testing using Docker too.

Feedback

Noticed any mistakes, improvements or questions? Or have you used this info in one of your own projects? Please drop me a note in the comments below. 👌