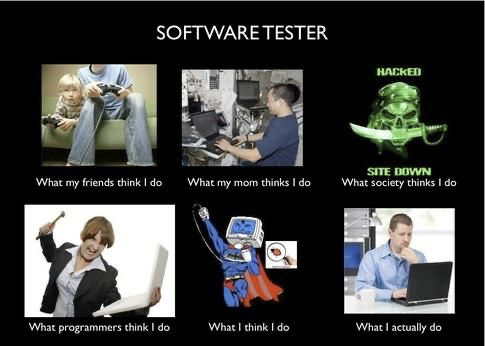

I would argue that just about every piece of the Software Development Lifecycle (SDLC) has drastically changed over the last decade, with the exception of manual testing. Okay, okay, that was dramatic! I understand there are niche organizations that have turned manual testing on its head. However, the majority of testers I engage with haven’t seen the drastic shift I speak of. Most manual testers are still writing exhaustive amounts of test cases and executing them to a tee. Some of y’all are still using Excel to manage all that mess (albeit sometimes that is out of your control)! Why is that? For me, writing test cases was the process I was comfortable with. Plus, as I’m sure is true for many of you, years of being told that test cases equal quality had ingrained the idea in my testing soul.

Well, I’m here to confirm for you all that getting quality products to customers doesn’t rest on the shoulders of a test case. Even so, crushing this ugly, ingrained mindset that test cases equal quality is challenging. While there are many arguments for and against abolishing test cases, the key to determining whether you can leave test cases behind is context. In this post, I explore some of the sticking points I’ve had (and still have) and offer some advice on how to break away from your reliance on test cases if you feel it could benefit you.

But First, Why Do We Need to Consider Nixing Test Cases?

Asking why is a primal trait of a tester, so you shouldn’t expect anything less than to start with the why. Here’s my best sell on getting rid of test cases where you can. Test cases take forever to write and maintain! I would argue that in most cases you spend more time writing and maintaining test cases than actually testing. Sure, sometimes that upfront work can save you time in the end. Other times you’re writing test cases for trivial stuff that you could have more quickly checked on your own without wasting time writing steps out. Time management, specifically, is essential to our success.

That’s my sales pitch. If you’re still with me, let’s jump in and start talking through those what-ifs and buts I’m sure you’re having.

But, How Would Regression Testing Work?

Originally, when I heard test cases weren’t required, I immediately panicked. What about regression testing? Without a set of regression test cases, how do you execute your regression suite for each release? Clearly, it would be nice to have all of your regression suite automated and bundled into your CI/CD pipeline, but that’s not always feasible. So in those cases, where regression relies on manual testing, how would a team manage this piece? A common solution could be something like a feature map.

Instead of detailing every step of every test scenario (a test case), the team would have a feature map, which is a document that details every page of their applications and the features on each. From there, the tester is reliant on their knowledge of the system to be able to use exploratory testing as a regression technique. Additionally, the team could communicate exactly what was changing and focus testing around the riskier or changed parts of the application, allowing the tester to focus extra efforts in those spots. There are multiple ways to go about this, and not all solutions fit every team, so do some research and find what may work best for your group.
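To make the idea a little more concrete, here is a minimal sketch of a feature map as a small data structure, with a helper that orders pages for an exploratory regression pass. Everything in it is an assumption for illustration: the `Page` class, the three-level risk scale, the sample pages, and the rule "changed pages first, then by risk" are not a standard, just one way a team might do it.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """One page of the application and the features it exposes."""
    name: str
    features: list[str]
    risk: str = "low"  # hypothetical scale: "low", "medium", "high"


def regression_focus(feature_map: list[Page], changed: set[str]) -> list[Page]:
    """Order pages for an exploratory regression pass.

    Pages touched by the release come first; the rest follow by risk.
    """
    risk_rank = {"high": 0, "medium": 1, "low": 2}
    return sorted(
        feature_map,
        key=lambda p: (p.name not in changed, risk_rank[p.risk], p.name),
    )


# Hypothetical feature map for a small shop application.
feature_map = [
    Page("Login", ["username/password", "password reset"], risk="high"),
    Page("Registration", ["sign-up form", "email verification"], risk="medium"),
    Page("Cart", ["add product", "remove product"], risk="medium"),
    Page("Terms and Conditions", ["static text"], risk="low"),
]

# Suppose the team tells you this release only touched the Cart page.
for page in regression_focus(feature_map, changed={"Cart"}):
    print(f"{page.name}: {', '.join(page.features)}")
```

A spreadsheet works just as well. The point is that the map lists pages and features, not steps, and the conversation with the team decides where you spend the extra effort.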

But, Test Cases Keep Me Organized When Testing Complex Features

I honestly feel this statement so much. When testing, I personally need a plan and some organization, or else I’ll find myself somewhere deep down a rabbit hole testing something trivial or obscure. To stay organized without writing test cases, I’ve used black-box testing techniques such as equivalence partitioning or boundary value analysis to derive scenarios. From there, I use those scenarios as a guide instead of writing test steps out for each one.
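As a rough illustration of how those techniques turn a rule into a short scenario list, here is a small sketch. The deposit rule (10 to 500, inclusive) and both helper functions are made up for the example. Boundary value analysis checks just below, on, and just above each edge of the range, while equivalence classes pick one representative value per partition.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Boundary checks for an inclusive integer range:
    just below, on, and just above each edge."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def equivalence_classes(minimum: int, maximum: int) -> dict[str, int]:
    """One representative value per partition (below, inside, above)."""
    return {
        "below range (invalid)": minimum - 1,
        "inside range (valid)": (minimum + maximum) // 2,
        "above range (invalid)": maximum + 1,
    }


# Hypothetical rule: a deposit must be between 10 and 500 (inclusive).
LOW, HIGH = 10, 500

for value in boundary_values(LOW, HIGH):
    expected = "accept" if LOW <= value <= HIGH else "reject"
    print(f"deposit {value}: expect {expected}")

for label, value in equivalence_classes(LOW, HIGH).items():
    print(f"{label}: try {value}")
```

The output is a list of values to try, not a script of steps, which is about the level of detail I want as a guide.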

If scenarios are even too detailed for a specific feature or project, I might simply focus on executing exploratory testing. During exploratory testing, I sometimes use a technique called touring to keep me focused. There are lots of variations of “tours you can take”. Here is a good read on the technique and some example tours. The whole concept of touring is to pick a specific testing goal. This way I’m not randomly testing about my application; I have purpose and set a time box so I don’t lose myself down that rabbit hole.

What If My Team Isn’t Very Mature?

So maturity in this context isn’t referring to the hilarious, but possibly inappropriate, memes that are shared on your team’s Slack channel. In this context I’m talking about process maturity. In order to ditch your test case writing, you have to have a team that supports, and even engages in, your testing efforts. For example, a team that struggles to define a user story during grooming isn’t going to be able to fully define what areas of the application need to be tested or what downstream issues the feature might create. On the flip side, a mature team will have their QAs involved in grooming, sprint planning, and feature planning. During those meetings, QA will be encouraged to ask questions about the new functionality and the impacts it may have on existing functionality. Developers and testers will work together to outline the testing strategy. When the code is ready to test, the tester will fully understand what their goals are and will be able to test the application without needing fully defined test cases. Team maturity plays a huge role in how testers approach testing. If your team doesn’t closely resemble the latter of my examples, I would recommend reading a previous post of mine: How Can Testers Champion Quality Throughout the SDLC?

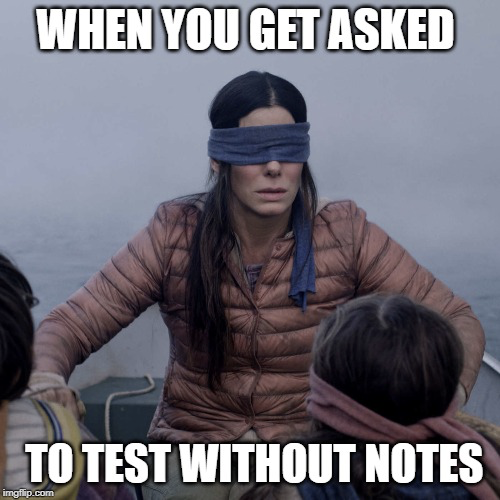

But I’m New to My Team or Company and Don’t Know the Application Well

Most of us have experienced starting a new position, at a new company, or working on a new application. You might have years of testing experience, but lack proper knowledge on the application you’re being asked to test. If you’re starting on a new team, or at a new company, and they have proper documentation like feature mapping, you’re probably going to be successful jumping in and learning the system pretty quickly. If there isn’t sufficient documentation, I would suggest you spend the time documenting the scenarios you want to cover and taking the time you would normally take writing test cases to review the scenarios with the team instead. This allows you to begin fostering the culture where quality belongs to everyone on the team. It also ensures you aren’t overlooking the unknowns and missing opportunities to fully test. In addition, start your own feature mapping document so you have something to build on – a great way to introduce something new and set yourself apart when starting a new gig!

How Will My Manager Know What I’m Testing and What My Progress Is?

Arguably, this may be the hardest one to address. So many people, outside of the QA role, lean on metrics to help them understand what’s being tested and how much progress you’ve made. Percentage complete and pass rate are some of the most common QA metrics used, and having test cases to calculate these metrics is the most common way to report them.

When you don’t have test cases, trying to report on your testing progress might seem impossible. With test cases, it’s easy to spell out that you have X number of test cases in total, and you’ve executed Y, so you’re Z% completed. The good news is, those metrics aren’t the only way for you to communicate your status to your team or manager. And quite frankly, they aren’t even the most informational. Let’s explore a few other options. Feel free to mix and match and get creative on your own. Knowing your audience and what they will find most valuable is key to overcoming this hurdle.

- You can use your test scenarios to count instead. You’re still saving time by not writing out steps for every scenario, and you also have a count of something to be able to report on. This is probably the closest to what your manager is used to today and could be a quick baby step for you to take without disrupting too much of what your manager is comfortable with.

- If you’re using a feature map, you can report how many pages you’ve tested out of the total number of pages. Similar to a % complete of test cases or test scenarios, this gives your manager a familiar understanding of where you are in your testing.

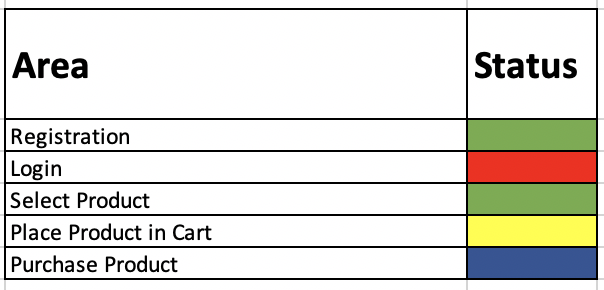

- If your management team is keen on visuals, try taking your feature map and adding a color-coded system to represent status. For example, if the row is green, it’s been tested and has passed. If blue, testing is in progress but no issues have been found. If yellow, minor issues were found. If red, major issues were found. This could give a quick indicator of your testing and any areas that are at risk.

- If you totally want to get away from % completed, passed, and failed – which I believe isn’t the most valuable information we could be giving anyway – use a bulleted list (managers love bulleted lists) to outline the highlights. For example, at the end of the week, or test cycle, you could send out:

    - Defects Reported: List the defects your team has found and the current state of each defect (fixed, in dev, backlogged, etc.). You could even add metrics around this to show the severity of the bugs you’re finding and how quickly they’re being fixed.

    - Blockers: List anything blocking you from finishing testing. Think about test data issues, unexpected sick leave, third-party integrations that aren’t available, etc. This fosters collaboration and allows your test manager to jump in and help resolve blockers with you.

    - Areas Tested: List areas of the application you’ve tackled so far. You can use your feature map to help tell the story as well. If you’re not using feature mapping, try using areas of the application instead.

    - Areas Remaining: List areas of the application you have left to test. You can use your feature map to help tell the story here as well. If you’re not using feature mapping, try using areas of the application instead. Think about what areas are most risky and perhaps you can indicate risk levels in this section.

    - Automation Completed: If your team has automation in place, don’t forget to report on the progress. This is where you could use some % completed because automation executes test cases. So giving transparency into this metric will feel familiar to your management team.

This bulleted output gives your team and leaders insight into what you’re working on and how it’s going – it’s a conversation starter. Just providing % completed and a pass rate doesn’t effectively tell the full story. For example:

- Defects Reported:

    - DE13534: Cannot log into site from IE – High Priority – In Dev

    - DE14324: When clicking Next, the site crashes – High Priority – In Dev

    - DE14533: “Here” is misspelled as “hear” on the terms and conditions page – Low Priority – Unplanned

- Blockers:

    - No reported blockers at this time

- Areas Tested:

    - Registration – Passed

    - Depositing money into account – Passed

    - Login – DE13534

- Areas Remaining:

    - Select product – Not started

    - Place product in cart – In Progress

- Automation Completed: 74% of automation completed with an 82% pass rate
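If you would rather generate the feature-map numbers than count rows by hand, the "pages tested out of total" and color-coding ideas from earlier can be sketched in a few lines. This is only an illustration: the status names, the sample pages, and the choice to count any page that is neither "in progress" nor "not started" as tested are my assumptions, and "not started" has no color in the scheme described above, so it is shown as "none".

```python
# Status-to-color legend from the color-coding idea; status names are assumed.
STATUS_COLORS = {
    "passed": "green",
    "in progress": "blue",
    "minor issues": "yellow",
    "major issues": "red",
    "not started": "none",  # assumption: no color until testing begins
}

# Hypothetical feature map rows: page name -> current status.
feature_map = {
    "Registration": "passed",
    "Deposit money": "passed",
    "Login": "major issues",
    "Place product in cart": "in progress",
    "Select product": "not started",
}


def progress(rows: dict[str, str]) -> tuple[int, int, float]:
    """Return (pages tested, total pages, percent tested).

    A page counts as tested once its status is anything other than
    'in progress' or 'not started'.
    """
    done = sum(s not in ("in progress", "not started") for s in rows.values())
    total = len(rows)
    percent = round(100 * done / total, 1) if total else 0.0
    return done, total, percent


done, total, percent = progress(feature_map)
print(f"Pages tested: {done} of {total} ({percent}%)")
for page, status in feature_map.items():
    print(f"- {page}: {status} [{STATUS_COLORS[status]}]")
```

With the sample data this reports 3 of 5 pages tested. The per-page lines are what tell your manager where the risk is.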

Conclusion

As you think about how you may adopt this shift in the way you work, make sure context is king (or queen). My recommendation isn’t to rush into this tomorrow, shouting to the world that you will no longer write test cases! Instead, think about your day to day testing. Is there a piece of your application that you know well and trust yourself to do exploratory testing instead? Perhaps you use the touring technique and try it out. Or maybe the next time you get a feature, try writing test scenarios but leaving the test cases behind. Think of how you might use the time you normally would spend writing the test steps. Perhaps that time is better spent talking with your team on your testing strategy?

At the end of the day, I’m excited that we are even broaching this topic. There are probably some of you reading this that have already dived into the deep end and aren’t writing test cases at all anymore. I’d love to hear from you in the comments below sharing how you started this journey and where you are today with it! For others, you’re looking at this posting and perhaps something sparked your curiosity! Drop some feedback for others and share what you’re thinking and areas you might try something different in. Together, let’s drastically change the way we manually test!

Just yesterday I started a new project and I was thinking about this subject, because after years of doing this I no longer find the real value of having steps in a test scenario described in detail.

I currently work more with test automation, but of course, I still do manual tests. This article came in handy at the moment, of course I will not stop manufacturing a standard imposed by the company, but I think I can start this agile movement in projects that are subject to this.

Thanks for sharing, Rodrigo! Testers can spend a lot of time writing test case steps and then maintaining them…glad to hear you’re considering a switch where you can.

Nice article! Amazingly aligned with our latest QA strategies here. Exactly the Test Case dependency is the tricky part for us. I completely agree is all about context and beyond the time invested to write and keep test cases is way better to have people thinking while testing instead of only automatically executing steps. Going to share with the team to inspire them! 😉

Nice article! While I do agree that writing test cases in detail is an overhead I still believe there has to be test case. The reason for it is, if there are no test cases how would you find the automation coverage? For example say that you have a module with 100 tcs and you know that you have 80% automated and 20% more to be automated. How do you manage this task in the absence of test cases.

That’s a common way organizations manage automation. Test cases are written by manual testers and then automated. So great question!

I would like to explore this: What if automation could just use requirements or ACs to automate from while manual testers focus on exploratory testing? If testers didn’t have to spend so much time writing and maintaining test cases, they could thoroughly test the feature or change while automation is still in progress. Thoughts on that?

Hi! At my company we don’t use any test cases at all. We calculate our automation coverage in two ways. For APIs we have an endpoint that retrieves all endpoints and we have a script that looks at our automation and determines what percentage of coverage we have based on tags added to the automation.

For UI we have a feature map in excel. When we add new features, they’re added to a map and we have a ‘yes/no’ column to state whether or not there is automation around the feature. It’s worked quite well for us in determining coverage and we have excel formulas that display the information in an easy-to-digest table.

Very good insight! I love that you have the endpoint that retrieves all endpoints. Great idea for my situation.

Great article! As someone who has never worked in a world with test cases it’s hard for me to imagine ever going back!
