Where does the Liberal promise to address harmful online content stand?

Minister of Canadian Heritage, Pablo Rodriguez announces a new expert advisory group on online safety as a next step in developing legislation to address harmful online content during a press conference in Ottawa on Wednesday, March 30, 2022. THE CANADIAN PRESS/Sean Kilpatrick

With considerable attention on an increase in hate and harassment online, questions are being raised about where the federal government's promised legislative and regulatory changes aimed at tamping down harmful content stand.

After receiving heaps of largely critical feedback and going back to the drawing board with the help of experts over the last several months, the government is still contemplating how to approach the complex "online safety" legislation in a way that responds to critics' concerns while addressing the state of discourse online, sources close to the file tell CTVNews.ca.

The pledge originated with the intention of forcing “online communication service providers,” such as Facebook, YouTube, Twitter, Instagram, and TikTok, to be more accountable and transparent in handling five kinds of harmful content on their platforms: hate speech, child exploitation, the sharing of non-consensual images, incitements to violence, and terrorism.

The Liberals' intention was to ensure that the kinds of behaviours that are illegal in person are also illegal online, with a focus on public content, not private communication.

"Online platforms are increasingly central to participation in democratic, cultural and public life. However, such platforms can also be used to threaten and intimidate Canadians and to promote views that target communities, put people’s safety at risk, and undermine Canada’s social cohesion or democracy," reads the government's landing page for this initiative.

"Now, more than ever, online services must be held responsible for addressing harmful content on their platforms and creating a safe online space that protects all Canadians," it continues.

Beyond seeking expert advice, Canadian Heritage Minister Pablo Rodriguez and top officials from his department have spent this summer travelling across the country, holding panel discussions with stakeholders and representatives from minority groups.

Public hearings intended to capture the concerns of Canadians, particularly those in marginalized communities, are set to continue into the fall, sources said, adding that this continuing outreach is meant to help inform the scope of the legislation.

PAST PROMISED DEADLINE, FOCUS ON GETTING ‘RIGHT’

The Liberals have already blown past their campaign commitment to move on a "balanced and targeted" online harms bill within the first 100 days of their post-2021 election mandate.

With attention heightened and considerable work still to be done on the bill, CTVNews.ca asked when Canadians might expect to see it introduced in Parliament. In an emailed response, Rodriguez's office wouldn't commit to a timeframe, saying the government's priority is getting the legislation right.

"The minister is now engaging directly with Canadians across the country on the insights provided by the experts," said the heritage minister's press secretary Laura Scaffidi. "Canadians should be able to express themselves freely and openly without fear of harm online… We’re committed to getting this right and to engaging Canadians in a thorough, open, and transparent manner every step of the way."

Although the minister's office would not put a timeline on the bill's tabling, sources told CTVNews.ca it is unlikely to come this fall, with early 2023 appearing to be the most realistic timeframe.

Sources said that the government remains committed to putting forward legislation that will give Canadians more tools to address harms online, but there are some factors adding pressure to Rodriguez's desire to "get it right."

Mindful of the pushback from opposition parties and some platforms during the government's push to pass Broadcasting Act updates — including accusations of attacking free speech — sources said the Liberals are bracing for an even bigger fight over this bill.

Given this, some consideration has been given to waiting until there is more space on the legislative agenda, so the Liberals can dedicate greater attention to this bill once it is tabled. Rodriguez currently has two pieces of outstanding legislation: Bill C-11, the revived Broadcasting Act bill, is before the Senate, and Bill C-18, regarding online news remuneration, is before a House committee.

Speaking about recent examples of politicians and journalists facing threats, Public Safety Minister Marco Mendicino said on Monday that in addition to engaging law enforcement, "Minister Rodriguez is very eager to bring forward his legislation, so that legislatively the tools are there as well."

However, sources CTVNews.ca spoke with cautioned that while there are elements of the legislation that will likely help, particularly when it comes to platforms taking more accountability for content posted, it is not going to be the "panacea" for rectifying increasingly toxic online discourse. Rather, the coming legislation is being seen as one piece of a bigger puzzle.

For example, one element of the government's initial proposal that is unlikely to change, given Charter and privacy considerations, is its focus on public content rather than private communications such as text messages or emails. Yet hate-filled and harassing emails have been a central focus of what some journalism advocacy groups consider a co-ordinated campaign.

Sources said there’s some hope that recent attention, spurred by more federal political figures from across the political spectrum speaking out, will help galvanize support and allow for a serious conversation about tackling the issue.

Ahead of the government presenting the online harms legislation, here's what you need to know about what's transpired on this file so far, and how it may ultimately shape the bill.

CONCERNS WITH WHAT WAS INITIALLY PROPOSED

Two weeks before Prime Minister Justin Trudeau called the 2021 federal election, the government presented a “technical discussion paper” and rolled out a summer-long consultation process on a proposed online harms legislative framework, promising that responses would inform the new laws and regulations.

That proposal included implementing a 24-hour takedown requirement for content deemed harmful, as well as creating federal “last resort” powers to block online platforms that repeatedly refuse to take down harmful content.

The Liberals' initial proposal also floated:

- Compelling platforms to provide data on their algorithms and other systems that scour for and flag potentially harmful content and provide a rationale for when action is taken on flagged posts;

- Obligations for sites to preserve content and identifying information for potential future legal action, along with new options to alert authorities to potentially illegal content and content of national security concern if an imminent risk of harm is suspected;

- Outlining potential new ways for CSIS and RCMP to play a role when it comes to combating online threats to national security and child exploitation content; and

- Installing a new system for Canadians to appeal platforms’ decisions around content moderation.

The regime proposed a series of severe new sanctions for companies deemed to be repeatedly non-compliant, including fines of up to five per cent of the company’s annual global revenue or $25 million, whichever is higher.

In order to operate and adjudicate this new system, the government suggested creating a new “Digital Safety Commission of Canada” that would be able to issue binding decisions for platforms to remove harmful content, ordering them to do so when they “get it wrong.”

During the 2021 summer feedback period, the government got an earful from stakeholders expressing concerns with then-Canadian heritage minister Steven Guilbeault’s proposals, as well as what was described as a “massively inadequate” consultation process.

Experts called for significant changes, raising concerns ranging from whether the proposal struck an appropriate balance between addressing online harms and safeguarding freedom of expression, to why such a wide range of harms was being treated as equivalent.

After he was re-appointed as heritage minister, and facing concerted pressure from stakeholders the government would ideally want onside as it pushes ahead with this conversation, Rodriguez announced plans to go back to the drawing board.

The decision to rework the plan was announced in February, alongside the release of a "What We Heard" report based on the government's assessment of the feedback from the consultation process.

It concluded that, while the majority of respondents felt there is a need for the government to take action to crack down on harmful content online, given the complexity of the issue, the coming legislation needed to be thoughtful in its approach to guard against “unintended consequences.”

This online harms framework is separate from a piece of government legislation tabled at the eleventh hour of the 43rd Parliament.

Called Bill C-36, it focused on amendments to the Criminal Code and the Canadian Human Rights Act to address hate propaganda, hate crimes and hate speech. The bill died when the 2021 election was called, and the Liberals have not revisited it since.

The bill was mentioned in the latest Liberal campaign platform as part of the party's promise to "more effectively combat online hate," so it remains to be seen whether it could be folded into the coming legislation.

HOW THE COMING PLAN COULD LOOK

In late March, the government tasked experts and specialists in platform governance, content regulation, civil liberties, tech regulation, and national security to help guide the government on what the bill should and shouldn’t include. Among the panelists were stakeholders who were publicly critical of the initial proposal.

Over the course of eight sessions — each focusing on different elements of the framework, from the regulatory powers and law enforcement's role, to freedom of expression — the panel deliberated over the proposal and discussed their concerns.

The panel held its concluding workshop in June, and in early July a high-level summary was published online.

It included some key advice that may help shed light on the ways the coming approach will look different from the initial outline.

Among the panel's suggestions to the government were:

- That any regulatory regime should put an equal emphasis on managing risk and protecting human rights, with any legislative obligations needing to be flexible and adaptable so as not to become quickly outdated;

- That public education needs to be a "fundamental component" of any framework, suggesting the plan be accompanied by programs to improve media literacy;

- That "particularly egregious" content like child sexual exploitation material may require its own solution, distinct from what other forms of harm may require, with some questioning whether each of the five forms of harm set out in 2021 requires a tailored approach;

- That the proposed regulator should be well-resourced and equipped with audit and enforcement powers, rather than leaving oversight up to the platforms, which need to take responsibility for their role;

- That clear consequences should be set for regulated services that do not fulfill their obligations under the regime, alongside a "content review and appeal process" at the platform level; and

- That, separate from the Digital Safety Commissioner, there should be an independent ombudsperson for victim support who could play a "useful intermediary role between users and the regulator."

The summaries of each session also made clear that there were some sticky areas of disagreement among panelists, including whether the legislation should compel services to remove content.

While some experts said a 24-hour takedown requirement should be avoided, except for content that explicitly calls for violence or constitutes child sexual exploitation material, others suggested it would be preferable to "err on the side of caution."

Some also raised concerns that content removal could disproportionately affect marginalized groups.

The experts also emphasized that "something must be done about disinformation," as it has grown to become one of the more troubling forms of harmful online behaviour. However, the experts cautioned against defining disinformation in legislation.

"Doing so would put the government in a position to distinguish between what is true and false – which it simply cannot do," read the summary, instead suggesting the possibility of focusing on the co-ordinated amplification of misinformation through bots and bot networks.

IN DEPTH

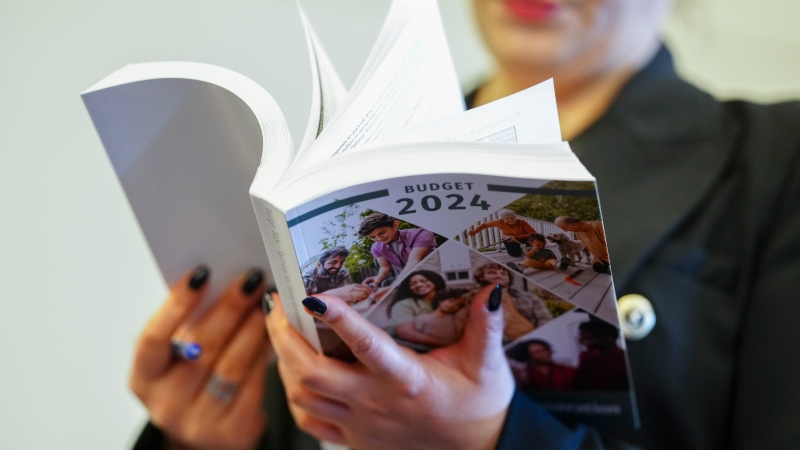

Budget 2024 prioritizes housing while taxing highest earners, deficit projected at $39.8B

In an effort to level the playing field for young people, in the 2024 federal budget, the government is targeting Canada's highest earners with new taxes in order to help offset billions in new spending to enhance the country's housing supply and social supports.

'One of the greatest': Former prime minister Brian Mulroney commemorated at state funeral

Prominent Canadians, political leaders, and family members remembered former prime minister and Progressive Conservative titan Brian Mulroney as an ambitious and compassionate nation-builder at his state funeral on Saturday.

'Democracy requires constant vigilance' Trudeau testifies at inquiry into foreign election interference in Canada

Prime Minister Justin Trudeau testified Wednesday before the national public inquiry into foreign interference in Canada's electoral processes, following a day of testimony from top cabinet ministers about allegations of meddling in the 2019 and 2021 federal elections. Recap all the prime minister had to say.

As Poilievre sides with Smith on trans restrictions, former Conservative candidate says he's 'playing with fire'

Siding with Alberta Premier Danielle Smith on her proposed restrictions on transgender youth, Conservative Leader Pierre Poilievre confirmed Wednesday that he is against trans and non-binary minors using puberty blockers.

Supports for passengers, farmers, artists: 7 bills from MPs and Senators to watch in 2024

When parliamentarians return to Ottawa in a few weeks to kick off the 2024 sitting, there are a few bills from MPs and senators that will be worth keeping an eye on, from a 'gutted' proposal to offer a carbon tax break to farmers, to an initiative aimed at improving Canada's DNA data bank.

Opinion

opinion Don Martin: Gusher of Liberal spending won't put out the fire in this dumpster

A Hail Mary rehash of the greatest hits from the Trudeau government’s three-week travelling pony-show, the 2024 federal budget takes aim at reversing the party’s popularity plunge in the under-40 set, writes political columnist Don Martin. But will it work before the next election?

opinion Don Martin: The doctor Trudeau dumped has a prescription for better health care

Political columnist Don Martin sat down with former federal health minister Jane Philpott, who's on a crusade to help fix Canada's broken health care system, and who declined to take any shots at the prime minister who dumped her from caucus.

opinion Don Martin: Trudeau's seeking shelter from the housing storm he helped create

While Justin Trudeau's recent housing announcements are generally drawing praise from experts, political columnist Don Martin argues there shouldn’t be any standing ovations for a prime minister who helped caused the problem in the first place.

opinion Don Martin: Poilievre has the field to himself as he races across the country to big crowds

It came to pass on Thursday evening that the confidentially predictable failure of the Official Opposition non-confidence motion went down with 204 Liberal, BQ and NDP nays to 116 Conservative yeas. But forcing Canada into a federal election campaign was never the point.

opinion Don Martin: How a beer break may have doomed the carbon tax hike

When the Liberal government chopped a planned beer excise tax hike to two per cent from 4.5 per cent and froze future increases until after the next election, says political columnist Don Martin, it almost guaranteed a similar carbon tax move in the offing.

CTVNews.ca Top Stories

Young people 'tortured' if stolen vehicle operations fail, Montreal police tell MPs

One day after a Montreal police officer fired gunshots at a suspect in a stolen vehicle, senior officers were telling parliamentarians that organized crime groups are recruiting people as young as 15 in the city to steal cars so that they can be shipped overseas.

'It was joy': Trapped B.C. orca calf eats seal meat, putting rescue on hold

A rescue operation for an orca calf trapped in a remote tidal lagoon off Vancouver Island has been put on hold after it started eating seal meat thrown in the water for what is believed to be the first time.

Man sets self on fire outside New York court where Trump trial underway

A man set himself on fire on Friday outside the New York courthouse where Donald Trump's historic hush-money trial was taking place as jury selection wrapped up, but officials said he did not appear to have been targeting Trump.

Sask. father found guilty of withholding daughter to prevent her from getting COVID-19 vaccine

Michael Gordon Jackson, a Saskatchewan man accused of abducting his daughter to prevent her from getting a COVID-19 vaccine, has been found guilty for contravention of a custody order.

Mandisa, Grammy award-winning 'American Idol' alum, dead at 47

Soulful gospel artist Mandisa, a Grammy-winning singer who got her start as a contestant on 'American Idol' in 2006, has died, according to a statement on her verified social media. She was 47.

She set out to find a husband in a year. Then she matched with a guy on a dating app on the other side of the world

Scottish comedian Samantha Hannah was working on a comedy show about finding a husband when Toby Hunter came into her life. What happened next surprised them both.

B.C. judge orders shared dog custody for exes who both 'clearly love Stella'

In a first-of-its-kind ruling, a B.C. judge has awarded a former couple joint custody of their dog.

Saskatoon police to search landfill for remains of woman missing since 2020

Saskatoon police say they will begin searching the city’s landfill for the remains of Mackenzie Lee Trottier, who has been missing for more than three years.

Shivering for health: The myths and truths of ice baths explained

In a climate of social media-endorsed wellness rituals, plunging into cold water has promised to aid muscle recovery, enhance mental health and support immune system function. But the evidence of such benefits sits on thin ice, according to researchers.

Local Spotlight

UBC football star turning heads in lead up to NFL draft

At 6'8" and 350 pounds, there is nothing typical about UBC offensive lineman Giovanni Manu, who was born in Tonga and went to high school in Pitt Meadows.

Cat found at Pearson airport 3 days after going missing

Kevin the cat has been reunited with his family after enduring a harrowing three-day ordeal while lost at Toronto Pearson International Airport earlier this week.

Molly on a mission: N.S. student collecting books about women in sport for school library

Molly Knight, a grade four student in Nova Scotia, noticed her school library did not have many books on female athletes, so she started her own book drive in hopes of changing that.

Where did the gold go? Crime expert weighs in on unfolding Pearson airport heist investigation

Almost 7,000 bars of pure gold were stolen from Pearson International Airport exactly one year ago during an elaborate heist, but so far only a tiny fraction of that stolen loot has been found.

Marmot in the city: New resident of North Vancouver's Lower Lonsdale a 'rock star rodent'

When Les Robertson was walking home from the gym in North Vancouver's Lower Lonsdale neighbourhood three weeks ago, he did a double take. Standing near a burrow it had dug in a vacant lot near East 1st Street and St. Georges Avenue was a yellow-bellied marmot.

Relocated seal returns to Greater Victoria after 'astonishing' 204-kilometre trek

A moulting seal who was relocated after drawing daily crowds of onlookers in Greater Victoria has made a surprise return, after what officials described as an 'astonishing' six-day journey.

Ottawa barber shop steps away from Parliament Hill marks 100 years in business

Just steps from Parliament Hill is a barber shop that for the last 100 years has catered to everyone from prime ministers to tourists.

'It was a special game': Edmonton pinball player celebrates high score and shout out from game designer

A high score on a Foo Fighters pinball machine has Edmonton player Dave Formenti on a high.

'How much time do we have?': 'Contamination' in Prairie groundwater identified

A compound used to treat sour gas that's been linked to fertility issues in cattle has been found throughout groundwater in the Prairies, according to a new study.