Releases: huggingface/transformers

v4.40.1: fix `EosTokenCriteria` for `Llama3` on `mps`

v4.40.0: Llama 3, Idefics 2, Recurrent Gemma, Jamba, DBRX, OLMo, Qwen2MoE, Grounding Dino

New model additions

Llama 3

Llama 3 is supported in this release through the Llama 2 architecture and some fixes in the tokenizers library.

Idefics2

The Idefics2 model was created by the Hugging Face M4 team and authored by Léo Tronchon, Hugo Laurencon, Victor Sanh. The accompanying blog post can be found here.

Idefics2 is an open multimodal model that accepts arbitrary sequences of image and text inputs and produces text outputs. The model can answer questions about images, describe visual content, create stories grounded on multiple images, or simply behave as a pure language model without visual inputs. It improves upon IDEFICS-1, notably on document understanding, OCR, or visual reasoning. Idefics2 is lightweight (8 billion parameters) and treats images in their native aspect ratio and resolution, which allows for varying inference efficiency.

- Add Idefics2 by @amyeroberts in #30253

Recurrent Gemma

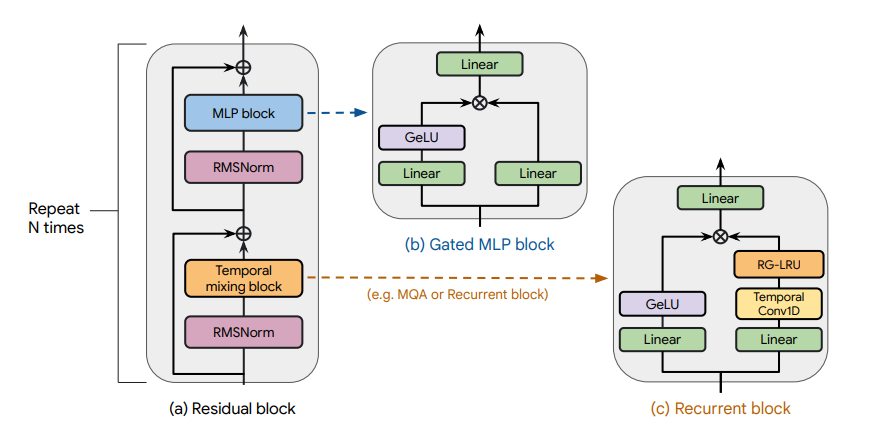

Recurrent Gemma architecture. Taken from the original paper.

The Recurrent Gemma model was proposed in RecurrentGemma: Moving Past Transformers for Efficient Open Language Models by the Griffin, RLHF and Gemma Teams of Google.

The abstract from the paper is the following:

We introduce RecurrentGemma, an open language model which uses Google’s novel Griffin architecture. Griffin combines linear recurrences with local attention to achieve excellent performance on language. It has a fixed-sized state, which reduces memory use and enables efficient inference on long sequences. We provide a pre-trained model with 2B non-embedding parameters, and an instruction tuned variant. Both models achieve comparable performance to Gemma-2B despite being trained on fewer tokens.
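To illustrate why a linear recurrence keeps a fixed-size state, here is a minimal sketch in plain Python. It is not Griffin's actual recurrent layer: the function name `linear_recurrence` and the coefficients `a` and `b` are illustrative assumptions. The point is only that the state `h` stays the same size no matter how long the input sequence is.

```python
from typing import List


def linear_recurrence(xs: List[float], a: float = 0.9, b: float = 0.1) -> List[float]:
    """Toy scalar recurrence h_t = a * h_{t-1} + b * x_t (hypothetical coefficients)."""
    h = 0.0  # the entire state: a single number, regardless of sequence length
    outputs = []
    for x in xs:
        h = a * h + b * x
        outputs.append(h)
    return outputs


short = linear_recurrence([1.0] * 4)
long = linear_recurrence([1.0] * 4000)
```

Both calls carry exactly one float of state between steps, whereas an attention layer with a growing key/value cache would store something for every past token.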

- Add recurrent gemma by @ArthurZucker in #30143

Jamba

Jamba is a pretrained, mixture-of-experts (MoE) generative text model, with 12B active parameters and a total of 52B parameters across all experts. It supports a 256K context length, and can fit up to 140K tokens on a single 80GB GPU.

Jamba’s architecture features a blocks-and-layers approach that integrates the Transformer and Mamba architectures. Each Jamba block contains either an attention or a Mamba layer, followed by a multi-layer perceptron (MLP), producing an overall ratio of one Transformer layer out of every eight total layers.
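The one-in-eight ratio can be sketched as a simple layer layout. This is a hedged illustration only: the helper `layer_types` and the `attn_offset` parameter (which slot in each group of eight holds the attention layer) are assumptions made for the example, not values taken from these notes.

```python
from typing import List


def layer_types(num_layers: int, attn_period: int = 8, attn_offset: int = 4) -> List[str]:
    """Label each layer so that one in every `attn_period` layers is attention.

    `attn_offset` is an illustrative assumption about where the attention
    layer sits inside each group of eight.
    """
    return [
        "attention" if i % attn_period == attn_offset else "mamba"
        for i in range(num_layers)
    ]


layout = layer_types(32)
attention_share = layout.count("attention") / len(layout)
```

With 32 layers this yields 4 attention layers and 28 Mamba layers, matching the stated ratio.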

Jamba introduces the first HybridCache object that allows it to natively support assisted generation, contrastive search, speculative decoding, beam search and all of the awesome features from the generate API!

- Add jamba by @tomeras91 in #29943

DBRX

DBRX is a transformer-based decoder-only large language model (LLM) that was trained using next-token prediction. It uses a fine-grained mixture-of-experts (MoE) architecture with 132B total parameters of which 36B parameters are active on any input.

It was pre-trained on 12T tokens of text and code data. Compared to other open MoE models like Mixtral-8x7B and Grok-1, DBRX is fine-grained, meaning it uses a larger number of smaller experts. DBRX has 16 experts and chooses 4, while Mixtral-8x7B and Grok-1 have 8 experts and choose 2.

This provides 65x more possible combinations of experts and the authors found that this improves model quality. DBRX uses rotary position encodings (RoPE), gated linear units (GLU), and grouped query attention (GQA).
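The 65x figure can be checked with a short calculation, assuming it counts unordered subsets of experts (choose 4 of 16 versus choose 2 of 8):

```python
from math import comb

dbrx_combinations = comb(16, 4)     # ways to choose 4 of 16 experts
mixtral_combinations = comb(8, 2)   # ways to choose 2 of 8 experts
ratio = dbrx_combinations // mixtral_combinations

print(dbrx_combinations, mixtral_combinations, ratio)
```

Under that assumption there are 1820 possible expert subsets for DBRX and 28 for the 8-expert models, which gives the quoted factor of 65.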

- Add DBRX Model by @abhi-mosaic in #29921

OLMo

The OLMo model was proposed in OLMo: Accelerating the Science of Language Models by Dirk Groeneveld, Iz Beltagy, Pete Walsh, Akshita Bhagia, Rodney Kinney, Oyvind Tafjord, Ananya Harsh Jha, Hamish Ivison, Ian Magnusson, Yizhong Wang, Shane Arora, David Atkinson, Russell Authur, Khyathi Raghavi Chandu, Arman Cohan, Jennifer Dumas, Yanai Elazar, Yuling Gu, Jack Hessel, Tushar Khot, William Merrill, Jacob Morrison, Niklas Muennighoff, Aakanksha Naik, Crystal Nam, Matthew E. Peters, Valentina Pyatkin, Abhilasha Ravichander, Dustin Schwenk, Saurabh Shah, Will Smith, Emma Strubell, Nishant Subramani, Mitchell Wortsman, Pradeep Dasigi, Nathan Lambert, Kyle Richardson, Luke Zettlemoyer, Jesse Dodge, Kyle Lo, Luca Soldaini, Noah A. Smith, Hannaneh Hajishirzi.

OLMo is a series of Open Language Models designed to enable the science of language models. The OLMo models are trained on the Dolma dataset. We release all code, checkpoints, logs (coming soon), and details involved in training these models.

- Add OLMo model family by @2015aroras in #29890

Qwen2MoE

Qwen2MoE is the new model series of large language models from the Qwen team. Previously, we released the Qwen series, including Qwen-72B, Qwen-1.8B, Qwen-VL, Qwen-Audio, etc.

Model Details

Qwen2MoE is a language model series including decoder language models of different model sizes. For each size, we release the base language model and the aligned chat model. Qwen2MoE has the following architectural choices:

Qwen2MoE is based on the Transformer architecture with SwiGLU activation, attention QKV bias, group query attention, mixture of sliding window attention and full attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and codes.

Qwen2MoE employs Mixture of Experts (MoE) architecture, where the models are upcycled from dense language models. For instance, Qwen1.5-MoE-A2.7B is upcycled from Qwen-1.8B. It has 14.3B parameters in total and 2.7B activated parameters during runtime, while it achieves comparable performance with Qwen1.5-7B, with only 25% of the training resources.
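As a rough comparison, the share of parameters that is active per input can be computed from the totals quoted in these notes for the MoE models in this release. This is plain arithmetic on the stated figures, not a measurement:

```python
# (total parameters, active parameters) in billions, as quoted in these notes
moe_models = {
    "Jamba": (52.0, 12.0),
    "DBRX": (132.0, 36.0),
    "Qwen1.5-MoE-A2.7B": (14.3, 2.7),
}

active_share = {
    name: active / total for name, (total, active) in moe_models.items()
}

for name, share in active_share.items():
    print(f"{name}: {share:.1%} of parameters active per input")
```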

- Add Qwen2MoE by @bozheng-hit in #29377

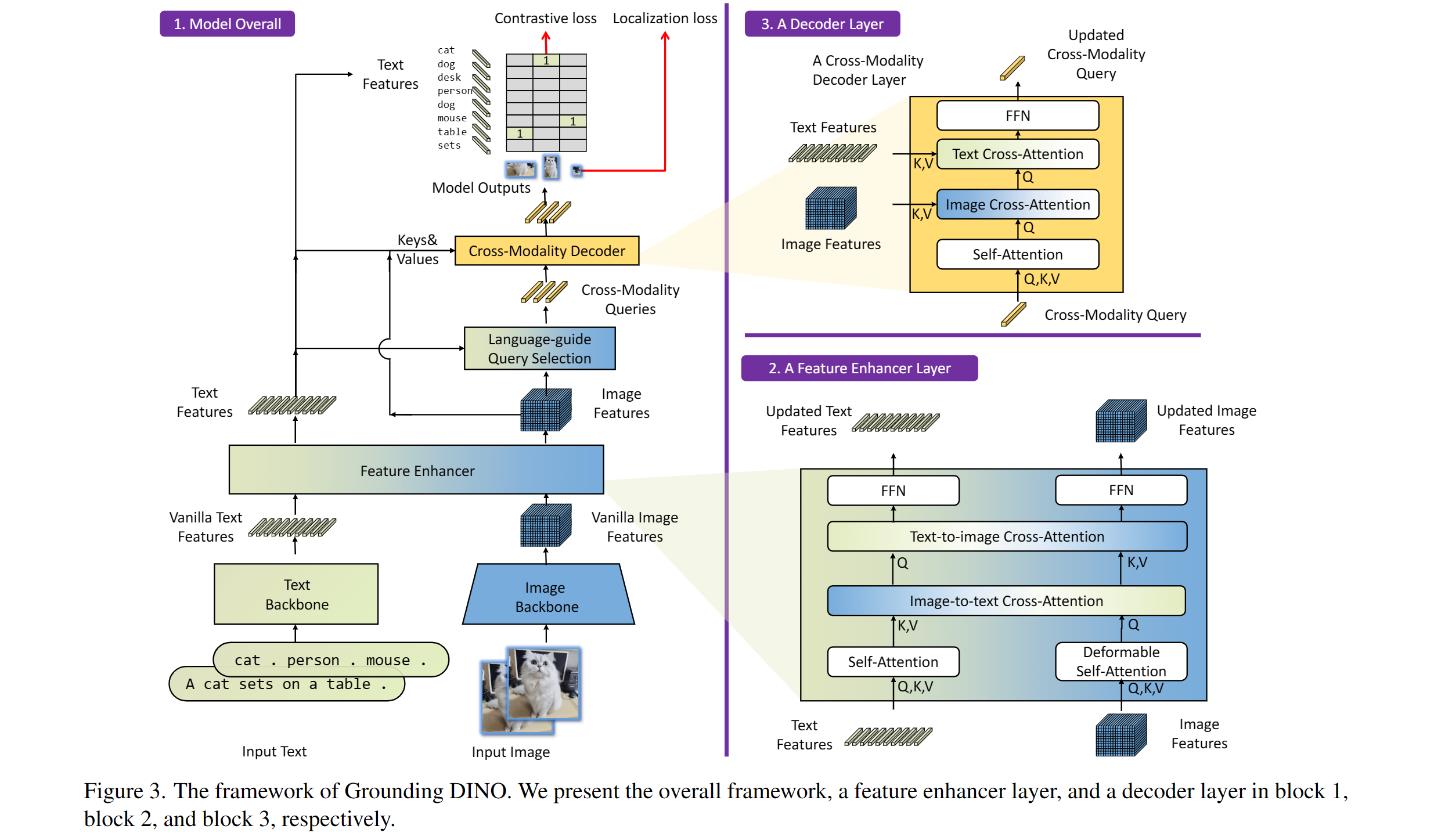

Grounding Dino

Taken from the original paper.

The Grounding DINO model was proposed in Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang. Grounding DINO extends a closed-set object detection model with a text encoder, enabling open-set object detection. The model achieves remarkable results, such as 52.5 AP on COCO zero-shot.

- Adding grounding dino by @EduardoPach in #26087

Static pretrained maps

Static pretrained maps have been removed from the library's internals and are currently deprecated. These used to reflect all the available checkpoints for a given architecture on the Hugging Face Hub, but their presence does not make sense in light of the huge growth of checkpoints shared by the community.

With the objective of lowering the bar of model contributions and reviewing, we first start by removing legacy objects such as this one which do not serve a purpose.

- Remove static pretrained maps from the library's internals by @LysandreJik in #29112

Notable improvements

Processors improvements

Processors are undergoing changes to make them more uniform and clearer to use.

- Separate out kwargs in processor by @amyeroberts in #30193

- [Processor classes] Update docs by @NielsRogge in #29698

SDPA

Push to Hub for pipelines

Pipelines can now be pushed to Hub using a convenient push_to_hub method.

Flash Attention 2 for more models (M2M100, NLLB, GPT2, MusicGen) !

Thanks to community contributions, Flash Attention 2 has been integrated for more architectures:

- Adding Flash Attention 2 Support for GPT2 by @EduardoPach in #29226

- Add Flash Attention 2 support to Musicgen and Musicgen Melody by @ylacombe in #29939

- Add Flash Attention 2 to M2M100 model by @visheratin in #30256

Improvements and bugfixes

- [docs] Remove redundant `-` and `the` from custom_tools.md by @windsonsea in #29767

- Fixed typo in quantization_config.py by @kurokiasahi222 in #29766

- OWL-ViT box_predictor inefficiency issue by @RVV-karma in #29712

- Allow `-OO` mode for `docstring_decorator` by @matthid in #29689

- fix issue with logit processor during beam search in Flax by @giganttheo in #29636

- Fix docker image build for `Latest PyTorch + TensorFlow [dev]` by @ydshieh in #29764

- [`LlavaNext`] Fix llava next unsafe imports by @ArthurZucker in #29773

- Cast bfloat16 to float32 for Numpy conversions by @Rocketknight1 in #29755

- Silence deprecations and use the DataLoaderConfig by @muellerzr in #29779

- Add deterministic config to `set_seed` by @muellerzr in #29778

- Add support for `torch_dtype` in the run_mlm example by @jla524 in #29776

- Generate: remove legacy generation mixin imports by @gante in #29782

- Llama: always convert the causal mask in the SDPA code path by @gante in #29663

- Prepend `bos token` to Blip generations by @zucchini-nlp in #29642

- Change in-place operations to out-of-place in LogitsProcessors by @zucchini-nlp in #29680

- [`quality`] update quality check to make sure we check imports 😈 by @ArthurZucker in #29771

- Fix type hint for train_dataset param of Trainer.init() to allow IterableDataset. Issue 29678 by @stevemadere in #29738

- Enable AMD docker buil...

Release v4.39.3

Patch release v4.39.2

Patch release v4.39.1

Release v4.39.0

v4.39.0

🚨 VRAM consumption 🚨

The Llama, Cohere and Gemma models no longer cache the triangular causal mask unless static cache is used. This was reverted by #29753, which fixes the BC issues w.r.t. speed and memory consumption while still supporting compile and static cache. Small note: fx is not supported for these models; a patch will be brought very soon!

New model addition

Cohere open-source model

Command-R is a generative model optimized for long context tasks such as retrieval augmented generation (RAG) and using external APIs and tools. It is designed to work in concert with Cohere's industry-leading Embed and Rerank models to provide best-in-class integration for RAG applications and excel at enterprise use cases. As a model built for companies to implement at scale, Command-R boasts:

- Strong accuracy on RAG and Tool Use

- Low latency, and high throughput

- Longer 128k context and lower pricing

- Strong capabilities across 10 key languages

- Model weights available on HuggingFace for research and evaluation

- Cohere Model Release by @saurabhdash2512 in #29622

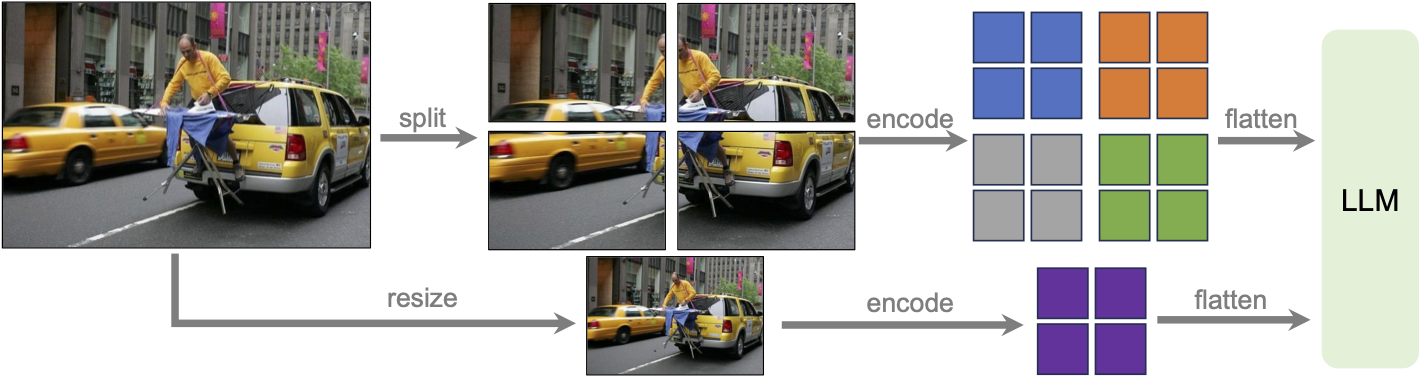

LLaVA-NeXT (llava v1.6)

LLaVA-NeXT is the next version of LLaVA, which includes better support for non-padded images, improved reasoning, OCR, and world knowledge. LLaVA-NeXT even exceeds Gemini Pro on several benchmarks.

Compared with LLaVA-1.5, LLaVA-NeXT has several improvements:

- Increasing the input image resolution to 4x more pixels. This allows it to grasp more visual details. It supports three aspect ratios, up to 672x672, 336x1344, 1344x336 resolution.

- Better visual reasoning and OCR capability with an improved visual instruction tuning data mixture.

- Better visual conversation for more scenarios, covering different applications.

- Better world knowledge and logical reasoning.

Along with performance improvements, LLaVA-NeXT maintains the minimalist design and data efficiency of LLaVA-1.5. It re-uses the pretrained connector of LLaVA-1.5, and still uses less than 1M visual instruction tuning samples. The largest 34B variant finishes training in ~1 day with 32 A100s.

LLaVa-NeXT incorporates a higher input resolution by encoding various patches of the input image. Taken from the original paper.
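The "4x more pixels" figure can be checked against the three supported resolutions. This sketch assumes a 336x336 base input resolution for LLaVA-1.5, which is not stated in these notes:

```python
base = (336, 336)  # assumed LLaVA-1.5 input resolution
supported = [(672, 672), (336, 1344), (1344, 336)]

base_pixels = base[0] * base[1]
pixel_ratios = [(w * h) / base_pixels for (w, h) in supported]

print(pixel_ratios)
```

Under that assumption, each of the three aspect ratios covers the same pixel budget, four times the base resolution.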

MusicGen Melody

The MusicGen Melody model was proposed in Simple and Controllable Music Generation by Jade Copet, Felix Kreuk, Itai Gat, Tal Remez, David Kant, Gabriel Synnaeve, Yossi Adi and Alexandre Défossez.

MusicGen Melody is a single stage auto-regressive Transformer model capable of generating high-quality music samples conditioned on text descriptions or audio prompts. The text descriptions are passed through a frozen text encoder model to obtain a sequence of hidden-state representations. MusicGen is then trained to predict discrete audio tokens, or audio codes, conditioned on these hidden-states. These audio tokens are then decoded using an audio compression model, such as EnCodec, to recover the audio waveform.

Through an efficient token interleaving pattern, MusicGen does not require a self-supervised semantic representation of the text/audio prompts, thus eliminating the need to cascade multiple models to predict a set of codebooks (e.g. hierarchically or upsampling). Instead, it is able to generate all the codebooks in a single forward pass.

PvT-v2

The PVTv2 model was proposed in PVT v2: Improved Baselines with Pyramid Vision Transformer by Wenhai Wang, Enze Xie, Xiang Li, Deng-Ping Fan, Kaitao Song, Ding Liang, Tong Lu, Ping Luo, and Ling Shao. As an improved variant of PVT, it eschews position embeddings, relying instead on positional information encoded through zero-padding and overlapping patch embeddings. This lack of reliance on position embeddings simplifies the architecture, and enables running inference at any resolution without needing to interpolate them.

- Add PvT-v2 Model by @FoamoftheSea in #26812

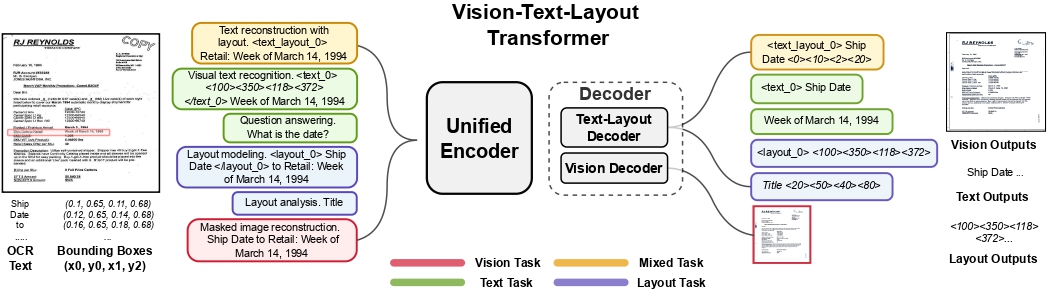

UDOP

The UDOP model was proposed in Unifying Vision, Text, and Layout for Universal Document Processing by Zineng Tang, Ziyi Yang, Guoxin Wang, Yuwei Fang, Yang Liu, Chenguang Zhu, Michael Zeng, Cha Zhang, Mohit Bansal. UDOP adopts an encoder-decoder Transformer architecture based on T5 for document AI tasks like document image classification, document parsing and document visual question answering.

UDOP architecture. Taken from the original paper.

- Add UDOP by @NielsRogge in #22940

Mamba

This model is a new paradigm architecture based on state-space-models, rather than attention like transformer models.

The checkpoints are compatible with the original ones

- [`Add Mamba`] Adds support for the `Mamba` models by @ArthurZucker in #28094

StarCoder2

StarCoder2 is a family of open LLMs for code and comes in 3 different sizes with 3B, 7B and 15B parameters. The flagship StarCoder2-15B model is trained on over 4 trillion tokens and 600+ programming languages from The Stack v2. All models use Grouped Query Attention, a context window of 16,384 tokens with a sliding window attention of 4,096 tokens, and were trained using the Fill-in-the-Middle objective.
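To make the interaction between the 16,384-token context and the 4,096-token sliding window concrete, here is a minimal sketch of a causal sliding-window visibility rule. The helper `can_attend` and its boundary convention (the window includes the current token) are assumptions for illustration, not the library's implementation.

```python
def can_attend(query_pos: int, key_pos: int, window: int = 4096) -> bool:
    """True if a causal sliding-window mask lets `query_pos` see `key_pos`.

    Assumes the window covers the current token plus the `window - 1`
    tokens before it; the exact boundary convention is an assumption.
    """
    return 0 <= query_pos - key_pos < window


context_length = 16_384
last = context_length - 1
visible_to_last = sum(can_attend(last, k) for k in range(context_length))
```

Even at the end of a full 16,384-token context, a single attention layer only looks at the most recent 4,096 positions under this rule.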

- Starcoder2 model - bis by @RaymondLi0 in #29215

SegGPT

The SegGPT model was proposed in SegGPT: Segmenting Everything In Context by Xinlong Wang, Xiaosong Zhang, Yue Cao, Wen Wang, Chunhua Shen, Tiejun Huang. SegGPT employs a decoder-only Transformer that can generate a segmentation mask given an input image, a prompt image and its corresponding prompt mask. The model achieves remarkable one-shot results with 56.1 mIoU on COCO-20 and 85.6 mIoU on FSS-1000.

- Adding SegGPT by @EduardoPach in #27735

Galore optimizer

With Galore, you can pre-train large models on consumer-grade hardware, making LLM pre-training much more accessible to anyone in the community.

Our approach reduces memory usage by up to 65.5% in optimizer states while maintaining both efficiency and performance for pre-training on LLaMA 1B and 7B architectures with C4 dataset with up to 19.7B tokens, and on fine-tuning RoBERTa on GLUE tasks. Our 8-bit GaLore further reduces optimizer memory by up to 82.5% and total training memory by 63.3%, compared to a BF16 baseline. Notably, we demonstrate, for the first time, the feasibility of pre-training a 7B model on consumer GPUs with 24GB memory (e.g., NVIDIA RTX 4090) without model parallel, checkpointing, or offloading strategies.

Galore is based on low rank approximation of the gradients and can be used out of the box for any model.
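To give a feel for why a low-rank projection of the gradients shrinks optimizer state, here is a rough per-matrix accounting sketch. The accounting (one projector plus two Adam moments kept in the projected space), the layer shape, and the rank are all assumptions chosen for illustration. It is not meant to reproduce the percentages quoted above, which cover whole models.

```python
def adam_state_elements(m: int, n: int) -> int:
    """Adam keeps two moment estimates, each shaped like the m x n weight."""
    return 2 * m * n


def low_rank_state_elements(m: int, n: int, rank: int) -> int:
    """Assumed accounting: one m x rank projector plus two moments of shape rank x n."""
    return m * rank + 2 * rank * n


m, n, rank = 4096, 4096, 128  # hypothetical layer shape and projection rank
full = adam_state_elements(m, n)
projected = low_rank_state_elements(m, n, rank)
saving = 1 - projected / full

print(f"full: {full:,} elements, projected: {projected:,} elements, saving: {saving:.1%}")
```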

Below is a simple snippet that demonstrates how to pre-train mistralai/Mistral-7B-v0.1 on imdb:

import torch

import datasets

from transformers import TrainingArguments, AutoConfig, AutoTokenizer, AutoModelForCausalLM

import trl

train_dataset = datasets.load_dataset('imdb', split='train')

args = TrainingArguments(

output_dir="./test-galore",

max_steps=100,

per_device_train_batch_size=2,

optim="galore_adamw",

optim_target_modules=["attn", "mlp"]

)

model_id = "mistralai/Mistral-7B-v0.1"

config = AutoConfig.from_pretrained(model_id)

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_config(config).to(0)

trainer = trl.SFTTrainer(

model=model,

args=args,

train_dataset=train_dataset,

dataset_text_field='text',

max_seq_length=512,

)

trainer.train()

Quantization

Quanto integration

Quanto has been integrated with transformers! You can apply simple quantization algorithms with just a few lines of code and minimal changes. Quanto is also compatible with torch.compile.

Check out the announcement blogpost for more details.

Exllama 🤝 AWQ

Exllama and AWQ combined together for faster AWQ inference - check out the relevant documentation section for more details on how to use Exllama + AWQ.

- Exllama kernels support for AWQ models by @IlyasMoutawwakil in #28634

MLX Support

Allow models saved or fine-tuned with Apple’s MLX framework to be loaded in transformers (as long as the model parameters use the same names), and improve tensor interoperability. This leverages MLX's adoption of safetensors as their checkpoint format.

- Add mlx support to BatchEncoding.convert_to_tensors by @Y4hL in #29406

- Add support for metadata format MLX by @alexweberk in #29335

- Typo in mlx tensor support by @pcuenca in #29509

- Experimental loading of MLX files by @pcuenca in #29511

Highlighted improvements

Notable memory reduction in Gemma/LLaMa by changing the causal mask buffer type from int64 to boolean.
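The size of the saving follows from element width: an int64 element takes 8 bytes and a boolean takes 1. The sketch below works this out for a square causal mask; the sequence length is a hypothetical value chosen for illustration, not the buffer size used by any particular model.

```python
def mask_bytes(seq_len: int, bytes_per_element: int) -> int:
    """Size in bytes of a square (seq_len x seq_len) causal mask buffer."""
    return seq_len * seq_len * bytes_per_element


seq_len = 8192  # hypothetical maximum number of positions
int64_bytes = mask_bytes(seq_len, 8)  # torch.int64 uses 8 bytes per element
bool_bytes = mask_bytes(seq_len, 1)   # torch.bool uses 1 byte per element

print(f"int64: {int64_bytes / 2**20:.0f} MiB, bool: {bool_bytes / 2**20:.0f} MiB")
```

For this hypothetical length the buffer drops from 512 MiB to 64 MiB, an 8x reduction.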

Remote code improvements

- Allow remote code repo names to contain "." by @Rocketknight1 in #29175

- simplify get_class_in_m...

v4.38.2

Fix backward compatibility issues with Llama and Gemma:

We mostly made sure that performance is not affected by the new change of paradigm with RoPE. The RoPE computation was fixed (it should always be done in float32), and the causal_mask dtype was set to bool to take less RAM.

YOLOS had a regression, and the Llama / T5 tokenizers had a warning popping up for random reasons.

- FIX [Gemma] Fix bad rebase with transformers main (#29170)

- Improve _update_causal_mask performance (#29210)

- [T5 and Llama Tokenizer] remove warning (#29346)

- [Llama ROPE] Fix torch export but also slow downs in forward (#29198)

- RoPE loses precision for Llama / Gemma + Gemma logits.float() (#29285)

- Patch YOLOS and others (#29353)

- Use torch.bool instead of torch.int64 for non-persistant causal mask buffer (#29241)

v4.38.1

Fix eager attention in Gemma!

- [Gemma] Fix eager attention #29187 by @sanchit-gandhi

TLDR:

- attn_output = attn_output.reshape(bsz, q_len, self.hidden_size)

+ attn_output = attn_output.view(bsz, q_len, -1)

v4.38: Gemma, Depth Anything, Stable LM; Static Cache, HF Quantizer, AQLM

New model additions

💎 Gemma 💎

Gemma is a new open-source language model series from Google AI that comes in 2B and 7B variants. The release includes pre-trained and instruction fine-tuned versions, and you can use them via the AutoModelForCausalLM, GemmaForCausalLM or pipeline interfaces!

Read more about it in the Gemma release blogpost: https://hf.co/blog/gemma

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained("google/gemma-2b", device_map="auto", torch_dtype=torch.float16)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

You can use the model with Flash Attention, SDPA, Static cache and quantization API for further optimizations!

- Flash Attention 2

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-2b", device_map="auto", torch_dtype=torch.float16, attn_implementation="flash_attention_2"

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

- bitsandbytes-4bit

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-2b", device_map="auto", load_in_4bit=True

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

- Static Cache

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-2b", device_map="auto"

)

model.generation_config.cache_implementation = "static"

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

Depth Anything Model

The Depth Anything model was proposed in Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data by Lihe Yang, Bingyi Kang, Zilong Huang, Xiaogang Xu, Jiashi Feng, Hengshuang Zhao. Depth Anything is based on the DPT architecture, trained on ~62 million images, obtaining state-of-the-art results for both relative and absolute depth estimation.

- Add Depth Anything by @NielsRogge in #28654

Stable LM

StableLM 3B 4E1T was proposed in StableLM 3B 4E1T: Technical Report by Stability AI and is the first model in a series of multi-epoch pre-trained language models.

StableLM 3B 4E1T is a decoder-only base language model pre-trained on 1 trillion tokens of diverse English and code datasets for four epochs. The model architecture is transformer-based with partial Rotary Position Embeddings, SwiGLU activation, LayerNorm, etc.

The team also provides StableLM Zephyr 3B, an instruction fine-tuned version of the model that can be used for chat-based applications.

⚡️ Static cache was introduced in the following PRs ⚡️

Static past key value cache allows LlamaForCausalLM's forward pass to be compiled using torch.compile!

This means that (cuda) graphs can be used for inference, which speeds up the decoding step by 4x!

A forward pass of Llama2 7B takes around 10.5 ms to run with this on an A100! Equivalent to TGI performances! ⚡️
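One reason a static cache suits compilation is that its buffers keep the same shape at every decoding step. The toy class below sketches that idea in plain Python. It is illustrative only and is not the library's `StaticCache`: the class name `ToyStaticCache` and its methods are hypothetical.

```python
from typing import List, Optional


class ToyStaticCache:
    """Fixed-size key cache for one sequence (illustrative, not the library's StaticCache)."""

    def __init__(self, max_cache_len: int) -> None:
        # Allocate every slot up front so the buffer never changes shape.
        self.keys: List[Optional[float]] = [None] * max_cache_len
        self.seen_tokens = 0

    def update(self, key: float) -> None:
        """Write the new key into the next free slot instead of appending."""
        if self.seen_tokens >= len(self.keys):
            raise ValueError("cache is full; max_cache_len was exceeded")
        self.keys[self.seen_tokens] = key
        self.seen_tokens += 1


cache = ToyStaticCache(max_cache_len=4)
for step_key in (0.1, 0.2, 0.3):
    cache.update(step_key)
```

A dynamic cache would instead grow by one entry per step, so its shape would differ on every call.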

- [`Core generation`] Adds support for static KV cache by @ArthurZucker in #27931

- [`CLeanup`] Revert SDPA attention changes that got in the static kv cache PR by @ArthurZucker in #29027

- Fix static generation when compiling! by @ArthurZucker in #28937

- Static Cache: load models with MQA or GQA by @gante in #28975

- Fix symbolic_trace with kv cache by @fxmarty in #28724

generate is not included yet. This feature is experimental and subject to changes in subsequent releases.

from transformers import AutoTokenizer, AutoModelForCausalLM, StaticCache

import torch

import os

# compilation triggers multiprocessing

os.environ["TOKENIZERS_PARALLELISM"] = "true"

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")

model = AutoModelForCausalLM.from_pretrained(

"meta-llama/Llama-2-7b-hf",

device_map="auto",

torch_dtype=torch.float16

)

# set up the static cache in advance of using the model

model._setup_cache(StaticCache, max_batch_size=1, max_cache_len=128)

# trigger compilation!

compiled_model = torch.compile(model, mode="reduce-overhead", fullgraph=True)

# run the model as usual

input_text = "A few facts about the universe: "

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda").input_ids

model_outputs = compiled_model(input_ids)

Quantization

🧼 HF Quantizer 🧼

HfQuantizer makes it easy for quantization method researchers and developers to add inference and / or quantization support in 🤗 transformers. If you are interested in adding the support for new methods, please refer to this documentation page: https://huggingface.co/docs/transformers/main/en/hf_quantizer

- `HfQuantizer` class for quantization-related stuff in `modeling_utils.py` by @poedator in #26610

- [`HfQuantizer`] Move it to "Developper guides" by @younesbelkada in #28768

- [`HFQuantizer`] Remove `check_packages_compatibility` logic by @younesbelkada in #28789

- [docs] HfQuantizer by @stevhliu in #28820

⚡️AQLM ⚡️

AQLM is a new quantization method that enables 2-bit precision with no performance degradation. Check out this demo about how to run Mixtral in 2-bit on a free-tier Google Colab instance: https://huggingface.co/posts/ybelkada/434200761252287

- AQLM quantizer support by @BlackSamorez in #28928

- Removed obsolete attribute setting for AQLM quantization. by @BlackSamorez in #29034

🧼 Moving canonical repositories 🧼

The canonical repositories on the Hugging Face Hub (models that did not have an organization, like bert-base-cased) have been moved under organizations.

You can find the entire list of models moved here: https://huggingface.co/collections/julien-c/canonical-models-65ae66e29d5b422218567567

Redirection has been set up so that your code continues working even if you continue calling the previous paths. We, however, still encourage you to update your code to use the new links so that it is entirely future proof.
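If you want to update identifiers in your own code, a small lookup table is one way to do it. The sketch below lists only two moves as examples, and the helper `updated_repo_id` is hypothetical; consult the collection linked above for the full list.

```python
# Two example moves; the full list lives in the collection linked above.
CANONICAL_MOVES = {
    "bert-base-cased": "google-bert/bert-base-cased",
    "gpt2": "openai-community/gpt2",
}


def updated_repo_id(repo_id: str) -> str:
    """Return the organization-scoped id for a moved canonical repo, else the input unchanged."""
    return CANONICAL_MOVES.get(repo_id, repo_id)


print(updated_repo_id("bert-base-cased"))
```

Identifiers that already include an organization pass through unchanged.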

- canonical repos moves by @julien-c in #28795

- Update all references to canonical models by @LysandreJik in #29001

Flax Improvements 🚀

The Mistral model was added to the library in Flax.

- Flax mistral by @kiansierra in #26943

TensorFlow Improvements 🚀

With Keras 3 becoming the standard version of Keras in TensorFlow 2.16, we've made some internal changes to maintain compatibility. We now have full compatibility with TF 2.16 as long as the tf-keras compatibility package is installed. We've also taken the opportunity to do some cleanup - in particular, the objects like BatchEncoding that are returned by our tokenizers and processors can now be directly passed to Keras methods like model.fit(), which should simplify a lot of code and eliminate a long-standing source of annoyances.

- Add tf_keras imports to prepare for Keras 3 by @Rocketknight1 in #28588

- Wrap Keras methods to support BatchEncoding by @Rocketknight1 in #28734

- Fix Keras scheduler import so it works for older versions of Keras by @Rocketknight1 in #28895

Pre-Trained backbone weights 🚀

Enable loading pretrained backbones in a new model, where all other weights are randomly initialized. Note: validation checks are still in place when creating a config. Passing in `use_pretrained_backbone=True` will raise an error. You can override this by setting `config.use_pretrained_backbone = True` after creating a config. However, it is not yet guaranteed to be fully backwards compatible.

from transformers import MaskFormerConfig, MaskFormerModel

config = MaskFormerConfig(

use_pretrained_backbone=False,

backbone="microsoft/resnet-18"

)

config.use_pretrained_backbone = True

# Both models have resnet-18 backbone weights and all other weights randomly

# initialized

model_1 = MaskFormerModel(config)

model_2 = MaskFormerModel(config)

- Enable instantiating model with pretrained backbone weights by @amyeroberts in #28214

Introduce a helper function load_backbone to load a backbone from a backbone's model config e.g. ResNetConfig, or from a model config which contains backbone information. This enables cleaner modeling files and crossloading between timm and transformers backbones.

from transformers import ResNetConfig, MaskFormerConfig

from transformers.utils.backbone_utils import load_backbone

# Resnet defines the backbone model to load

config = ResNetConfig()

backbone = load_backbone(config)

# Maskformer config defines a model which uses a resnet backbone

config = MaskFormerConfig(use_timm_backbone=True, backbone="resnet18")

backbone = load_backbone(config)

config = MaskFormerConfig(backbone_config=ResNetConfig())

backbone = load_backbone(config)

- [`Backbone`] Use `load_backbone...

Patch release v4.37.2

Selection of fixes

- Protecting the imports for SigLIP's tokenizer if sentencepiece isn't installed

- Fix permissions issue on windows machines when using trainer in multi-node setup

- Allow disabling safe serialization when using Trainer. Needed for Neuron SDK

- Fix error when loading processor from cache

- torch < 1.13 compatible `torch.load`

Commits

- [Siglip] protect from imports if sentencepiece not installed (#28737)

- Fix weights_only (#28725)

- Enable safetensors conversion from PyTorch to other frameworks without the torch requirement (#27599)

- Don't fail when LocalEntryNotFoundError during processor_config.json loading (#28709)

- Use save_safetensor to disable safe serialization for XLA (#28669)

- Fix windows err with checkpoint race conditions (#28637)

- [SigLIP] Only import tokenizer if sentencepiece available (#28636)