Over the years, I’ve worked on a number of large site migrations where .htaccess files have had the potential to grow into the tens of thousands of lines of unique one-to-one rules. Whilst there are typically no reported issues, one question does seem to come up quite often: “Does the number of .htaccess rules impact performance?”

There are numerous anecdotal answers scattered around the web, plus this great test published recently by SEO Mike. However, these leave some questions unanswered, which I try to cover in this post.

Caveat: Take these findings with a pinch of salt – actual impacts will vary based on your server spec and exact configuration.

tl;dr – Yes, the number of directives in a .htaccess file can affect the performance and scalability of your website: it increases TTFB directly, and raises CPU and memory usage on the server. However, the size of the effect will depend on your infrastructure and configuration.

How does it work?

When a request comes in, if it’s configured to, Apache will look for a .htaccess file in each appropriate directory on the server. For instance, let’s assume the below structure within the DocumentRoot for the website:

- ./.htaccess
- ./level1/.htaccess
- ./level1/level2/.htaccess
- ./level1/level2/level3/index.html

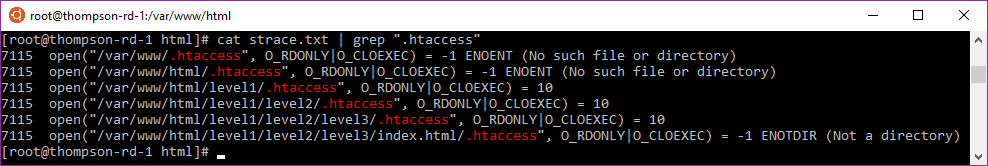

When a request is made to /level1/level2/level3/index.html, Apache will try and read from each of the three .htaccess files if they exist – which we can see from the log of system calls Apache makes under the hood when a request comes in.

I mention this as it’s useful to know for later, and because this in itself can incur a small performance hit just by virtue of being enabled, but we won’t dwell on that.

Any rules from these files are then parsed / executed in sequence by Apache, and can do anything from rewriting / redirecting the request, to setting arbitrary HTTP headers, to tweaking config settings for Apache itself.
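To make the directory walk described above concrete, here is a minimal Python sketch that lists the .htaccess files which would be checked for a given request path. It is only an illustration of the lookup order, not Apache's actual implementation: the function name `htaccess_candidates` is my own, and it assumes the walk starts at the DocumentRoot. Note that it also lists the level3 directory, which Apache would check too, even though no .htaccess file exists there in our example.

```python
from pathlib import PurePosixPath


def htaccess_candidates(request_path: str) -> list[str]:
    """List the .htaccess files checked for a request path, root first.

    Paths are relative to the DocumentRoot. This is a simplified model
    of the lookup, not Apache's real code.
    """
    # Directories containing the requested file, e.g. ("level1", "level2", "level3").
    directories = PurePosixPath(request_path.lstrip("/")).parent.parts
    candidates = ["./.htaccess"]
    current = "."
    for name in directories:
        current = f"{current}/{name}"
        candidates.append(f"{current}/.htaccess")
    return candidates


if __name__ == "__main__":
    for path in htaccess_candidates("/level1/level2/level3/index.html"):
        print(path)
```

Each extra directory level adds one more file for Apache to look for, whether or not that file exists.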

Test 1 – Impact on TTFB

The first test was to simply have a .htaccess file and a .txt file in the same directory, inflate the .htaccess file to a set line count with Redirect rules, and then measure the Time To First Byte (TTFB) of the .txt file via Chrome’s Network panel.
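If you want to repeat this kind of test yourself, a short script can inflate the file for you. The sketch below is an assumption about how you might do it rather than the exact script used here: the `/old-page-N` and `/new-page-N` URLs are hypothetical, and the function name is my own. It produces one unique Redirect directive per line.

```python
def build_redirect_rules(count: int) -> str:
    """Return `count` unique one-to-one Redirect directives, one per line."""
    lines = [
        f"Redirect 301 /old-page-{i} /new-page-{i}"  # hypothetical URLs
        for i in range(1, count + 1)
    ]
    # End with a newline when there is content, as a config file normally would.
    return "\n".join(lines) + ("\n" if lines else "")


if __name__ == "__main__":
    # Preview the first few rules of a 1,000-line file.
    preview = build_redirect_rules(1000).splitlines()[:3]
    print("\n".join(preview))
```

Writing the returned string to a .htaccess file next to the test .txt file gives you a file of a known line count to measure against.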

The results were as follows…

| # of lines in .htaccess | TTFB of a 280 byte .txt file |
|---|---|
| 0 | 9.67ms |
| 100 | 9.85ms |
| 1,000 | 12.99ms |
| 10,000 | 33.43ms |
| 50,000 | 134.85ms |
| 100,000 | 269.91ms |
| 1,000,000 | 2.5s |

From this we can see that simply having a large .htaccess file in a folder can directly impact your TTFB (which SEO Mike’s test verifies via the same method).
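One way to read these numbers is to work out how much TTFB each extra line adds over the empty-file baseline. The sketch below does that arithmetic on the figures from the table; the variable and function names are my own, and the 1,000,000-line figure is only as precise as the rounded 2.5s reported above. On this particular server, it comes out in the region of 2 to 3 microseconds per line, which suggests the cost grows roughly linearly with the size of the file.

```python
# (lines in .htaccess, TTFB in milliseconds), copied from the results table.
RESULTS_MS = [
    (0, 9.67),
    (100, 9.85),
    (1_000, 12.99),
    (10_000, 33.43),
    (50_000, 134.85),
    (100_000, 269.91),
    (1_000_000, 2500.0),  # reported as a rounded 2.5s
]


def added_microseconds_per_line(results):
    """Extra TTFB per .htaccess line, relative to the 0-line baseline."""
    baseline_ms = results[0][1]
    return {
        lines: (ttfb_ms - baseline_ms) * 1000 / lines
        for lines, ttfb_ms in results
        if lines > 0
    }


if __name__ == "__main__":
    for lines, cost in added_microseconds_per_line(RESULTS_MS).items():
        print(f"{lines:>9,} lines: {cost:.2f} microseconds per line")
```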

This effect is compounded, however, when you consider that actual web pages load multiple resources and that the rules are re-processed for each request (again illustrated in the syscalls below for a basic HTML page with a CSS file, JS file, and a few images).

If any of these files are render-blocking, you could be multiplying the delay before your user gets a rendered page they can interact with.

Test 2 – Impact on Scalability

Up until now, we’ve illustrated the visible impact from the client side, but there’s another important factor here: the server itself.

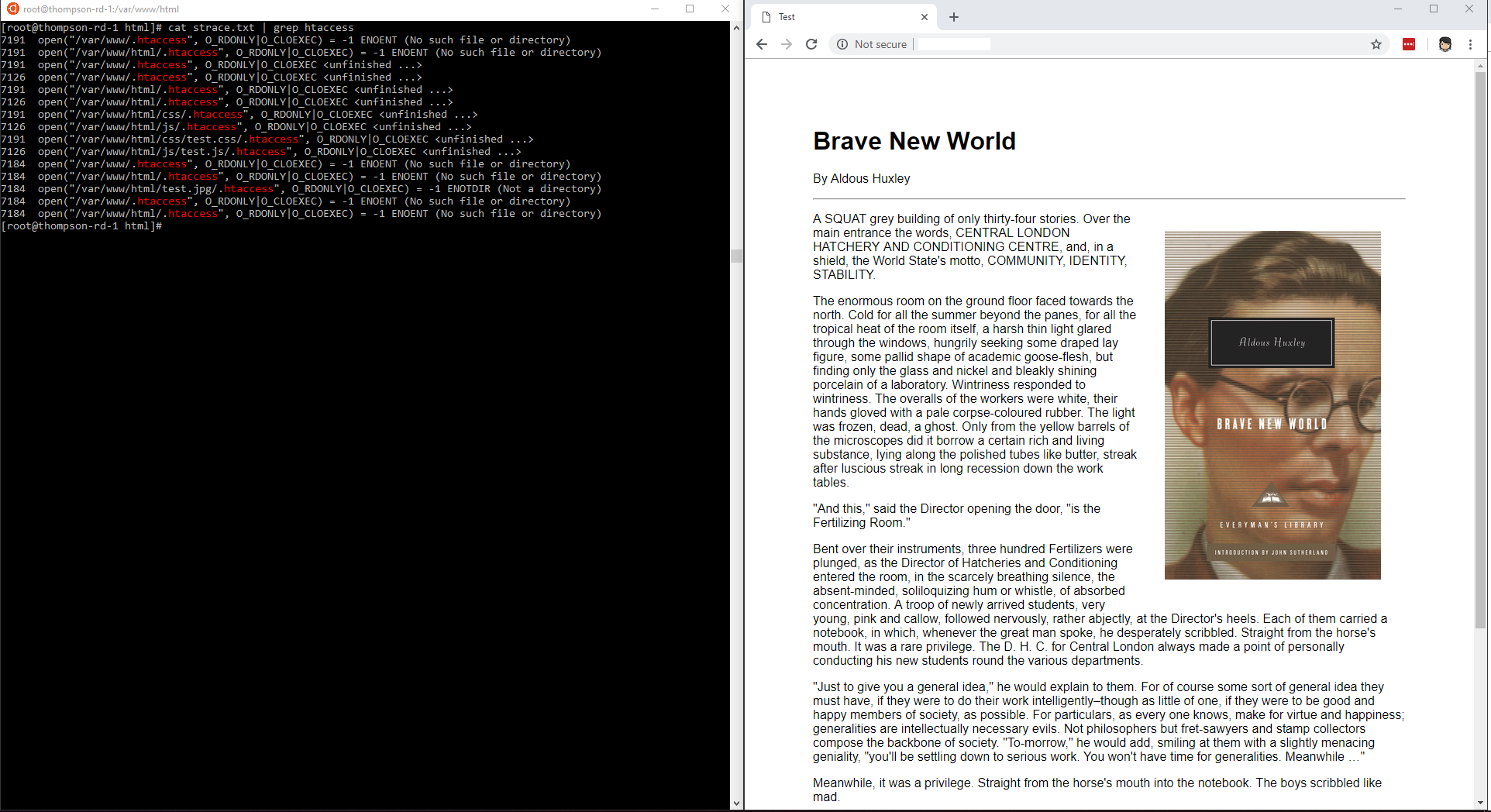

Given that we now know that .htaccess files are being processed on every request and that there is a delay whilst they are processed, it stands to reason that resources are being tied up on the server in some way. To try and assess the impact of this, I’ve run some load tests on our mock “Brave New World” website from above.

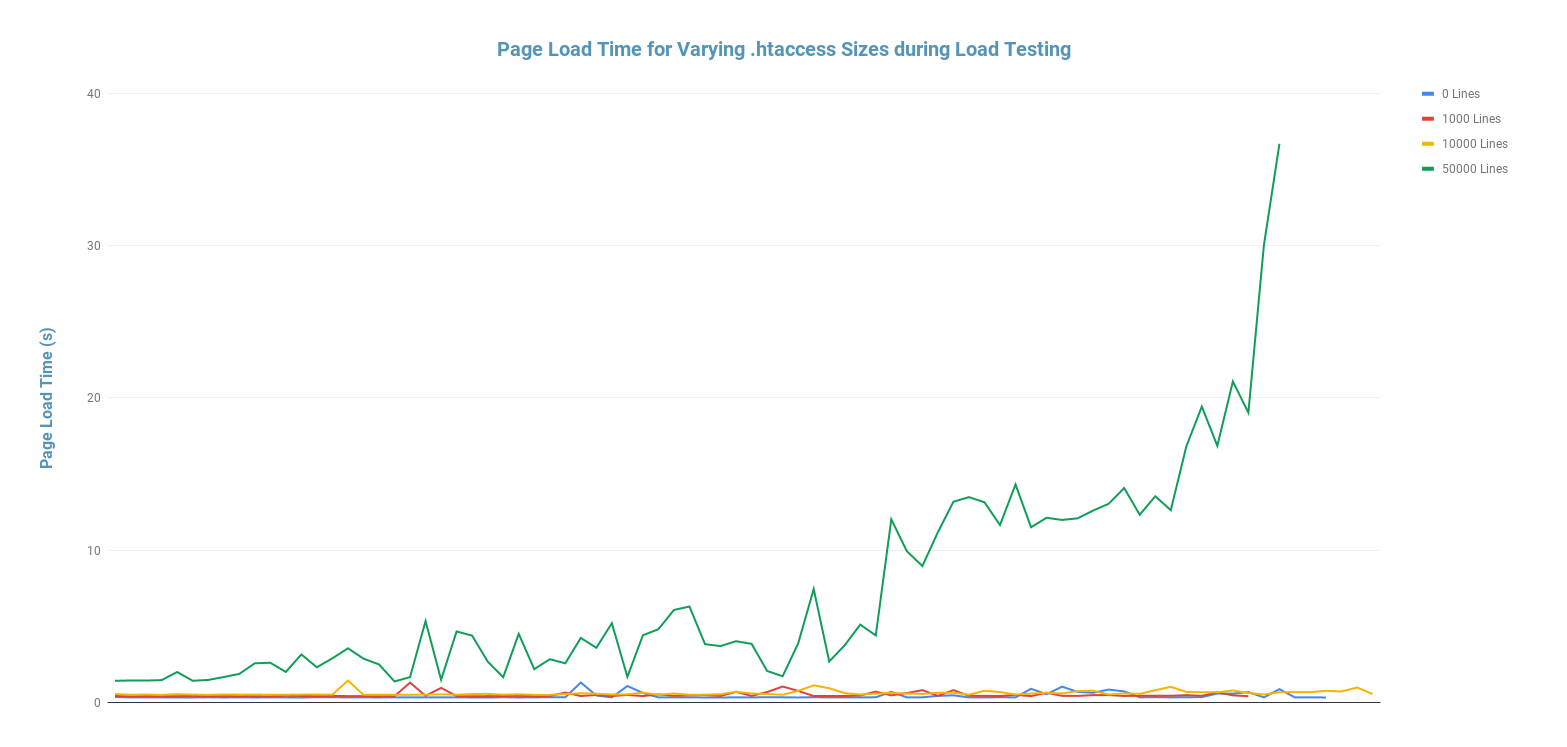

The tests run for 5 minutes, and make use of LoadImpact to gradually ramp up load on the site, up to 50 Virtual Users (VUs) at peak, a number well within the realms of possibility for even smaller sites. I repeated this test with an empty .htaccess, and then with differing numbers of Redirect rules in the .htaccess file. The results were as follows:

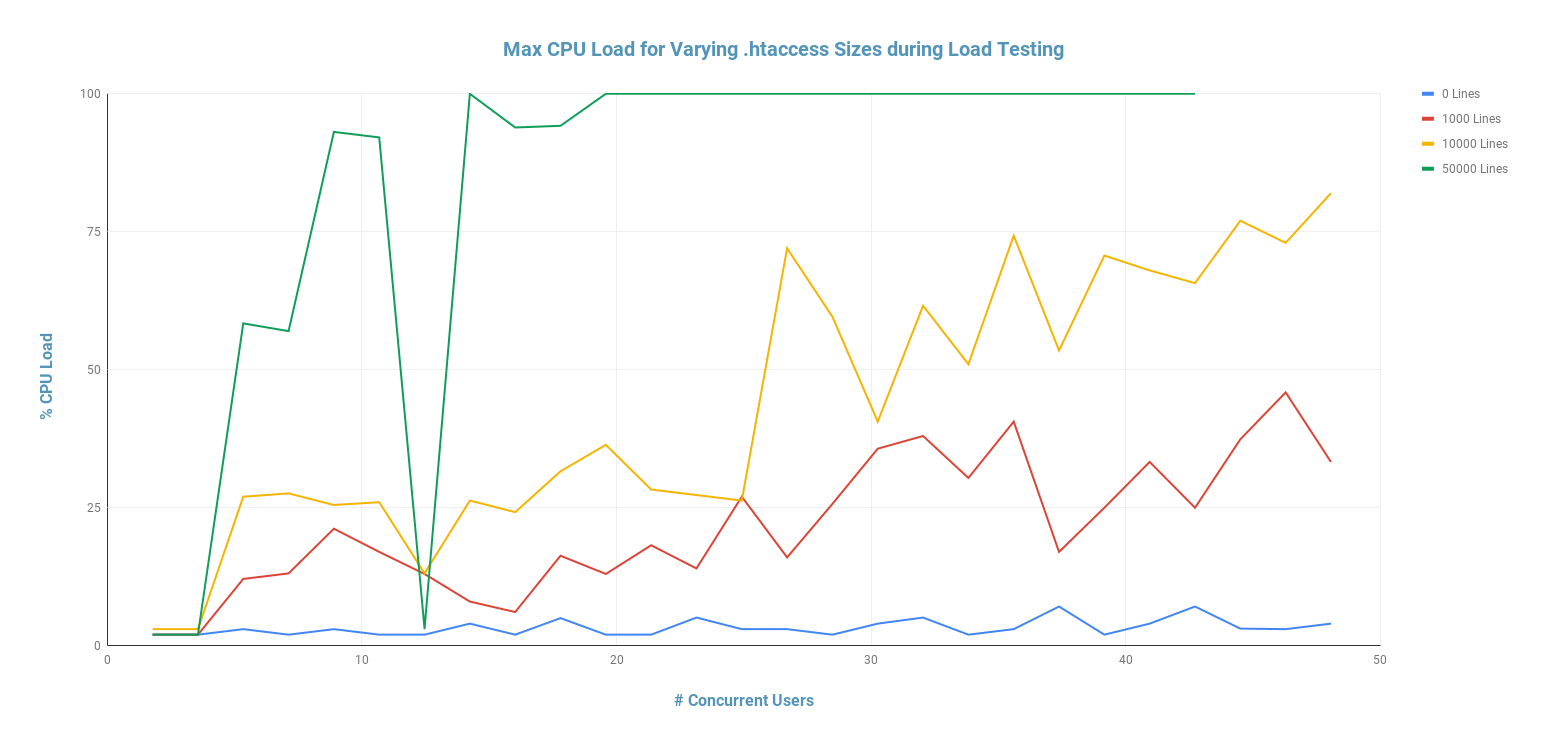

CPU Usage

Firstly, here are the stats for the CPU usage throughout the load test, where we can see that there’s a clear impact from the larger .htaccess files.

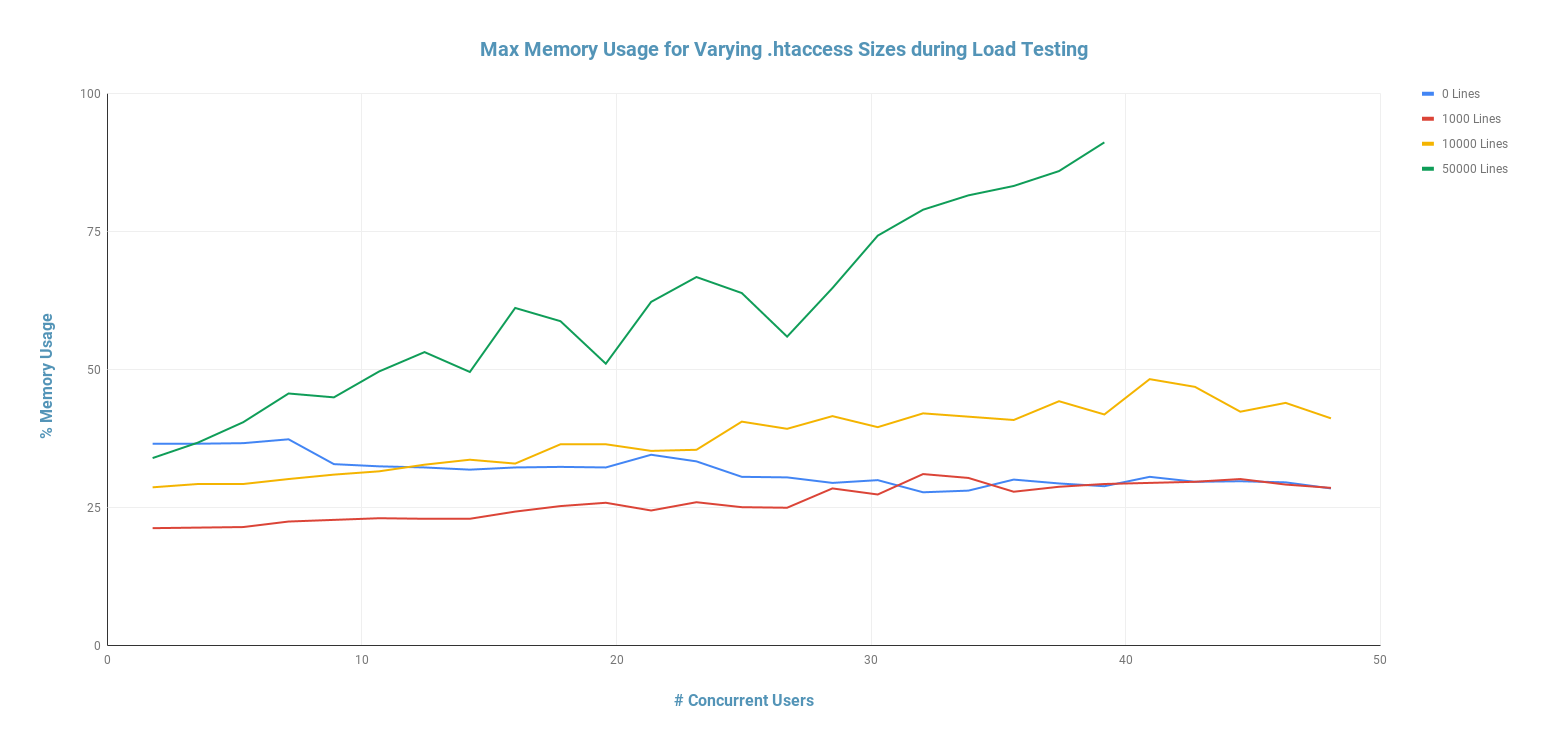

Memory Usage

Secondly, here are the stats for the memory usage throughout the load test. It’s worth noting that the memory usage was naturally fluctuating anyway, hence 0-lines starting out higher than the others, and dropping down. For this reason, I’d focus more on the overall trend as load increases rather than the exact data. With this in mind, the effect is less dramatic for the smaller files, but still noticeable for the 10,000 line file upwards.

From this test, it seems that even smaller .htaccess files with just 1,000 lines can quickly start to put significant strain on certain resources when under load. This could result in the site going down entirely, or – if you’re nearing the server’s capacity – an increased load time for all requests.

It’s worth noting though that during our tests, we didn’t see any huge impact on overall page load time on anything but the 50,000 line file. This is probably because, even though significant resource was being used in handling the requests, we still weren’t hitting peak capacity.

Summary

So, to summarise: yes, in these tests we found that having more .htaccess rules can impact your website’s performance / scalability, and not just in the ways that you’d immediately expect.

I’m aware that the server I chose for this test was deliberately low-spec (the cheapest VM on DigitalOcean), so the typical production web server will probably cope better. However, unless your scaling strategy is to throw more resource (and thus money) at the problem, then this will likely rear its head at some point.

Ultimately, there may come a point where you face a trade-off between the convenience a .htaccess file brings and the performance issues it can cause if left untamed.

When you reach a certain scale you should begin to think of redirect management as part of your critical infrastructure, and put in place systems and processes which work for your requirements.

This being said, if you do feel like your quantity of redirects might be causing issues, I’d suggest considering some (or all) of the following:

- As recommended in the Apache Documentation, consider avoiding .htaccess files entirely and instead use the httpd.conf server configuration file to add your directives.

- If you must use .htaccess files, always look for patterns. Try and combine multiple rules into one. Acquaint yourself with the RewriteRule directive and the basics of regex.

- If your site doesn’t have patterns which can easily be mapped by a regex rule, then consider using a rule to rewrite legacy URLs to a router script of some form, maintain a map of old to new URLs somewhere, and handle the 301 redirect in code instead of letting the server do it.

- Take some time to review your hosting infrastructure; run some load tests using something like LoadImpact, and consider caching 301 responses in your caching layer.
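To illustrate the router script suggestion above, here is a minimal Python sketch of the lookup at its core. Everything in it is hypothetical: the `REDIRECT_MAP` contents, the function name, and the choice of a plain dictionary (in practice the map might live in a database or a file). The idea is simply that one rule hands legacy URLs to your code, and your code decides where they go.

```python
from http import HTTPStatus

# Hypothetical map of old URLs to new URLs.
REDIRECT_MAP = {
    "/old-page-1": "/new-page-1",
    "/old-page-2": "/new-page-2",
}


def resolve_redirect(path, redirect_map=REDIRECT_MAP):
    """Return (status, location) for a legacy path, or (404, None) if unknown."""
    target = redirect_map.get(path)
    if target is None:
        return HTTPStatus.NOT_FOUND, None
    return HTTPStatus.MOVED_PERMANENTLY, target


if __name__ == "__main__":
    status, location = resolve_redirect("/old-page-1")
    print(int(status), location)
```

A dictionary lookup like this takes about the same time whether the map holds ten entries or a million, whereas a long list of one-to-one rules has to be read and processed on every request.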

If you found this post useful or have any other questions / ideas for follow-up tests, please do drop me a message on Twitter @Simon_JThompson, or an email at simon [at] strategiq.co.