In the race to build the underlying technologies that can power the next wave of the AI revolution, a Chinese lab has just overtaken OpenAI, the venerated US-based research lab, on one measure: who can train the deep learning model with the most parameters. As for whether there is a race at all, ranking members of the lab at least believe so.

The Beijing Academy of Artificial Intelligence, styled as BAAI and known in Chinese as 北京智源人工智能研究院, launched the latest version of Wudao 悟道, a pre-trained deep learning model that the lab dubbed “China’s first” and “the world’s largest ever,” with a whopping 1.75 trillion parameters.

(Numbers don't tell the full story, but just for the sake of it: Wudao has 150 billion more parameters than Google's Switch Transformers, and 10 times as many as OpenAI's GPT-3, which is widely regarded as the best model in terms of language generation.)
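For readers who want to check the arithmetic, here is a minimal Python sketch. The Wudao figure is the one BAAI announced; the Switch Transformers and GPT-3 counts (1.6 trillion and 175 billion) are the publicly reported figures that the comparison above implies, and the variable names are purely illustrative.

```python
# Parameter counts, in billions.
# WUDAO_2 is the figure reported by BAAI; the other two are assumptions
# taken from the publicly reported sizes that the comparison implies.
WUDAO_2 = 1750
SWITCH_TRANSFORMER = 1600
GPT_3 = 175

gap_vs_switch = WUDAO_2 - SWITCH_TRANSFORMER  # absolute gap, in billions
ratio_vs_gpt3 = WUDAO_2 / GPT_3               # how many times larger

print(f"Wudao 2.0 vs Switch Transformers: +{gap_vs_switch} billion parameters")
print(f"Wudao 2.0 vs GPT-3: {ratio_vs_gpt3:.0f}x the parameters")
```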

Unlike conventional deep learning models that are usually task-specific, Wudao is a multi-modal model trained to tackle both text and images, two dramatically different sets of problems. At BAAI’s annual academic conference on Tuesday, the institution demonstrated Wudao performing tasks such as natural language processing, text generation, image recognition, and image generation.

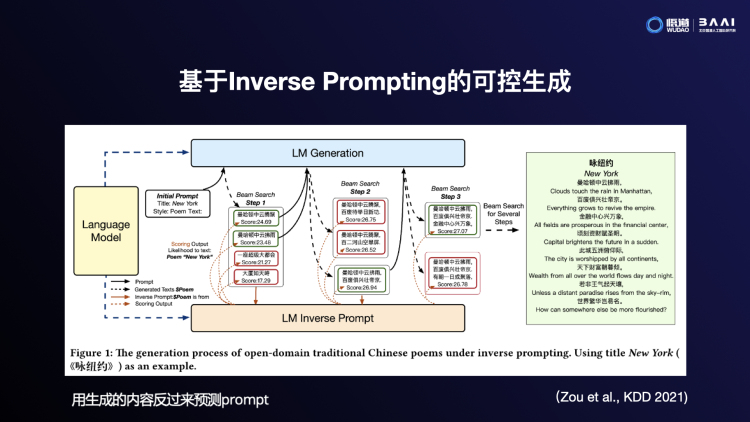

The model is capable of writing poems and couplets in traditional Chinese styles, answering questions, writing essays, generating alt text for images, and generating corresponding images from natural language descriptions with a decent level of photorealism. It is even able to power “virtual idols” with the help of XiaoIce, a Chinese company spun off from Microsoft, so there can be voice support too, in addition to text and images.

“Multi-modal” is currently a buzzword within the deep learning community, as researchers increasingly want to push the boundary towards what’s known as artificial general intelligence, or, simply put, AIs that are more than incredibly smart one-trick ponies. One recent example is Google’s MUM, or Multitask Unified Model, which can answer complex questions and distill information from both text and images. It was unveiled two weeks ago at the Silicon Valley giant’s annual developer conference.

Interestingly, this 1.75-trillion-parameter model is already version 2.0 of Wudao; the first version launched less than three months ago. One of the main reasons the Chinese researchers made progress so quickly is that they were able to tap into China’s supercomputing clusters, with the help of a few core team members who also worked on the national supercomputing projects.

A slightly more technical explanation: BAAI researchers developed and open-sourced a deep learning system called FastMoE, which allowed Wudao to be trained with significantly more parameters on both supercomputers and regular GPUs. In theory, this gives the model more flexibility than Google’s take on MoE, or Mixture of Experts. This is because Google’s system requires the company’s dedicated TPU hardware and distributed training framework, while BAAI’s FastMoE works with at least one industry-standard open-source framework, namely PyTorch, and can be operated on off-the-shelf hardware.
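To make the Mixture of Experts idea concrete, here is a toy sketch in plain Python. It is not FastMoE's API or BAAI's code: the class `ToyMoELayer`, its methods, and all the sizes are hypothetical. Assuming top-1 routing (the scheme used by Google's Switch Transformers), it illustrates why adding experts grows the parameter count while each input still passes through only one expert.

```python
import math
import random


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoELayer:
    """Hypothetical top-1 MoE layer: a gate picks one expert per input."""

    def __init__(self, dim, num_experts, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        # Each "expert" is just one dim x dim weight matrix in this sketch.
        self.experts = [
            [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        # The gate holds one scoring row per expert.
        self.gate = [
            [rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)
        ]

    def num_parameters(self):
        expert_params = len(self.experts) * self.dim * self.dim
        gate_params = len(self.gate) * self.dim
        return expert_params + gate_params

    def forward(self, token):
        # Score every expert, but run only the highest-scoring one.
        probs = softmax(matvec(self.gate, token))
        chosen = max(range(len(probs)), key=probs.__getitem__)
        output = matvec(self.experts[chosen], token)
        # Scale by the gate probability, as top-1 routing typically does.
        return chosen, [probs[chosen] * y for y in output]


small = ToyMoELayer(dim=4, num_experts=2)
large = ToyMoELayer(dim=4, num_experts=16)
print(small.num_parameters())  # 2 * 16 + 2 * 4 = 40
print(large.num_parameters())  # 16 * 16 + 16 * 4 = 320

chosen, output = large.forward([1.0, 0.0, -1.0, 0.5])
print(f"input routed to expert {chosen}; output has {len(output)} values")
```

The larger layer holds eight times as many parameters, yet a single input still triggers just one expert's matrix multiplication. Real systems add load balancing and spread the experts across many devices, which this sketch leaves out.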

The Chinese lab claims that Wudao's sub-models achieved better performance than previous models, beating OpenAI’s CLIP and Google’s ALIGN on English image and text indexing in the Microsoft COCO dataset.

For image generation from text, a novel task, BAAI claims that Wudao’s sub-model CogView beat OpenAI's DALL-E, a state-of-the-art neural network with 12 billion parameters launched in January this year. The institution is working with Damo Academy, Alibaba's AI research lab, to use CogView to develop applications that can produce custom-designed apparel that better addresses shoppers' needs.

“The way to artificial general intelligence is big models and big computer,” said Dr. Zhang Hongjiang, chairman of BAAI. “What we are building is a power plant for the future of AI. With mega data, mega computing power, and mega models, we can transform data to fuel the AI applications of the future.”

Dr. Zhang led the project that eventually resulted in BAAI’s founding. He is currently a Venture Partner at Source Code Capital, and was the CEO of Kingsoft, as well as one of the dozen co-founders of Microsoft Research Asia.

There’s no doubt that BAAI, founded in 2018, positions itself as “the OpenAI of China”: at the annual conference, ranking members of the institution couldn’t talk for five minutes without mentioning the US-based research institution at least once.

Both BAAI and OpenAI are targeting basic research that has the potential to enable significantly higher performance for deep learning technologies, empowering new experiences previously unimaginable. Both are capable of training gigantic models whose sheer size attracts attention, which in turn helps them with hiring and business development.

One of Wudao’s sub-models, Wensu 文溯, is even capable of predicting the 3D structures of proteins, a very complex task with immense real-world value that Google's DeepMind, another top AI research organization, has also taken on with its AlphaFold system.

However, while OpenAI and DeepMind are privately funded, a key distinction for BAAI is that it was formed and is funded with significant help from China’s Ministry of Science and Technology, as well as Beijing’s municipal government.

Many of BAAI’s resident researchers come from top institutions including Peking University, Tsinghua University, and the Chinese Academy of Sciences. Working within the institution’s many individual labs, they conduct research spanning all relevant directions, including deep learning, reinforcement learning, and brain-inspired intelligence. Ultimately, the purpose of BAAI is to solve fundamental problems that hinder progress towards artificial general intelligence.

The lab is also partnering deeply with a who’s who of China’s tech scene, including Didi, Xiaomi, Meituan, Baidu, ByteDance, Megvii, and JD.com.

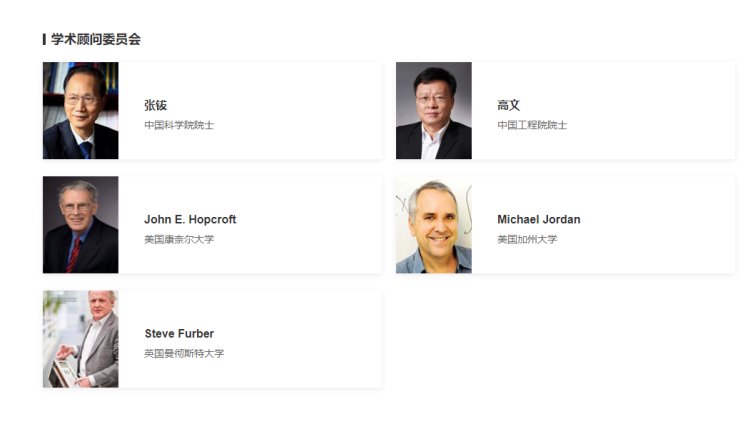

Like OpenAI, BAAI is governed by a board of directors filled with esteemed Chinese researchers in the deep learning scene, including PKU's Song-Chun Zhu, Baidu's Wang Haifeng, Megvii's Sun Jian, and Xiaomi's Cui Baoqiu, to name a few. The institution is also advised by Michael I. Jordan, a UC Berkeley professor and a leading figure in the machine learning world, as well as John E. Hopcroft, an ACM A. M. Turing Award winner.

Besides the headline-making, record-smashing new deep learning model, the 3-year-old institution has produced some other promising results as well, netting one of its teams an ACM Gordon Bell Prize for its research on micro-climate prediction, a problem that was generally considered too compute-heavy for AI.

In fact, the institution is so proud of its team of over 100 AI researchers, at various levels of seniority and residency, that Huang Tiejun, BAAI's vice chairman and principal, claims that it has created a “School of Beijing” (北京学派) for AI research, attracting scholars from across China and around the world.

Helped by its good relationships with the government, that Gordon Bell Prize-winning research is already being put to use for the upcoming 2022 Winter Olympics. Some of BAAI’s other research results are also being adopted by local governments to process civil affairs more efficiently, according to the institution.

The institution brings together China's various AI-related research and talent initiatives, and is making big announcements at the same pace as, if not faster than, OpenAI in the US or DeepMind (affiliated with Google/Alphabet) in the UK.

Further down the road, aside from the continued effort on core research, BAAI is also planning to work with more partners to build demonstrative applications this year. For next year, it plans to consolidate relevant APIs into a platform with the potential of commercialization, a route that OpenAI also took with its highly sought-after GPT-3 model.

“No matter how many models there are, it’s the biggest, best performing few that eventually get commercialized in the industry,” said Huang, explaining why BAAI is laser-focused on building big models. But the principal understands that artificial general intelligence won’t be achieved with big models alone, saying that BAAI has also set up individual labs dedicated to the laws of physics as well as life sciences.

“Wudao is our model for information. We are also building Tiandao 天道 as the model for physics, and Tianyan 天演 as the model for life sciences,” said the principal, adding that the end-game plan is to fuse all of them together, making AI not only work inside computers, but also across the universe.

BAAI would be able to make an attempt at that very futuristic goal with continued help from the Chinese Academy of Sciences, which has been researching these basic science subjects for decades. The idea is that if that research was hindered in the past by a lack of compute power, BAAI is now here to save the day.