I’d previously written about the concept of soft asserts. From that blog post: “A soft assert is an assert that the result of which is recorded but does not halt the test script’s execution at that point. The results of all soft asserts are evaluated at an author-specified point in the test script, usually at the end; if any soft assert condition evaluates to false, the soft assert reports false as its result and the test script is typically reported as a failure”. If you missed the previous post, check out that post about soft asserts as a concept.

I recently reposted that post on Twitter because two of my client teams were relatively new to the concept of soft asserts, and I thought others who had missed the original publication might benefit from it as well. Then I noticed that both teams had similar struggles with how to use soft asserts appropriately and with when to call the function that causes a test script to fail on soft assertion failure. I decided it was time for a sequel!

So, how should we use soft asserts?

Soft asserts are best used when we are checking the response to a stimulus that provides multiple results that we want to treat as one result, and for which we also want to report the information for all failed soft assertions up to that point. For example, if we perform a POST request and receive a response with multiple data items, we probably want to check each of those data items. We also probably don’t want to rerun that test script once per failure when there are several: field three was a mismatch, so we fix it and run again; now field five is a mismatch, so we fix it and run again, ad nauseam.
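Here is a minimal sketch of that POST-response case. The `SoftAssert` class, its `check_equal` and `assert_all` methods, and the response fields are all hypothetical names made up for illustration; they are not the API of any particular soft-assert library, though most libraries offer something of this shape.

```python
class SoftAssert:
    """Hypothetical minimal soft-assert helper (illustration only)."""

    def __init__(self):
        self.failures = []

    def check_equal(self, actual, expected, label):
        # Record a mismatch instead of raising, so later checks still run.
        if actual != expected:
            self.failures.append(f"{label}: expected {expected!r}, got {actual!r}")

    def assert_all(self):
        # The "fail test script if a soft assert failed" step.
        if self.failures:
            raise AssertionError("soft assert failures:\n" + "\n".join(self.failures))


# Made-up stand-in for the parsed body of a POST response.
response = {"id": 42, "name": "Widget", "status": "pending", "quantity": 3}
expected = {"id": 42, "name": "Widget", "status": "active", "quantity": 5}

soft = SoftAssert()
for field, want in expected.items():
    soft.check_equal(response.get(field), want, field)

try:
    soft.assert_all()  # evaluated once, after every field has been checked
    report = ""
except AssertionError as err:
    report = str(err)

print(report)
```

With this made-up data, a single run reports both the `status` and the `quantity` mismatch, rather than stopping at the first one.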

Cool, right? So why not use soft asserts everywhere and just call the “fail test script if a soft assert failed” function at the end of each test script? Well, as usual, there are reasons.

In general, soft asserts are not intended to be a solution for all assertion situations. As previously indicated, they are generally intended for checking multiple, related data points from a single response. Examples are the aforementioned POST response or multiple elements on a GUI screen that have been updated due to some stimulus like a button click. If, however, a single assertion failure should prevent the test script from continuing to perform subsequent test steps or assertions, a hard assert is often a better choice.

How do we decide when we should not continue? Generally, if continuing past an assertion failure provides no value, we should cause the test script to fail on that assertion. For example, in a GUI test, if we click a button that is supposed to take us to a certain page, we might write an assertion to ensure that we went to the proper page. If that assertion fails, we are likely not on the intended page or the website returned an error page such as a 404 page. In either case, continuing with other test steps likely provides no value because whatever we tried to do on the intended page can’t be done on the actual page. Even worse, some of the actions we wanted to perform on the intended page might succeed on the actual page, allowing the script to proceed farther along; the script will likely fail later in the flow when it will be more difficult to diagnose the problem.
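The navigation example might look something like the sketch below. Everything in it is an assumption for illustration: `click_checkout` is a hypothetical stand-in for a real GUI click, and the page titles and step names are invented. The point is only that the hard assert stops the script before any step that depends on being on the right page.

```python
def click_checkout(site_is_healthy):
    # Hypothetical stand-in for a GUI click; returns the title of the page we land on.
    return "Checkout" if site_is_healthy else "404 Not Found"


def run_checkout_test(site_is_healthy, steps_run):
    title = click_checkout(site_is_healthy)
    steps_run.append("click checkout")

    # Hard assert: if we are on the wrong page, nothing below can succeed
    # meaningfully, so fail here where the cause is obvious.
    assert title == "Checkout", f"expected the Checkout page, landed on {title!r}"

    steps_run.append("fill payment form")
    steps_run.append("submit order")


steps = []
try:
    run_checkout_test(site_is_healthy=False, steps_run=steps)
    failure = ""
except AssertionError as err:
    failure = str(err)

print(steps)
print(failure)
```

In the failing run, only the click is recorded as executed, and the failure message points at the navigation problem itself instead of at some later step.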

To recap,

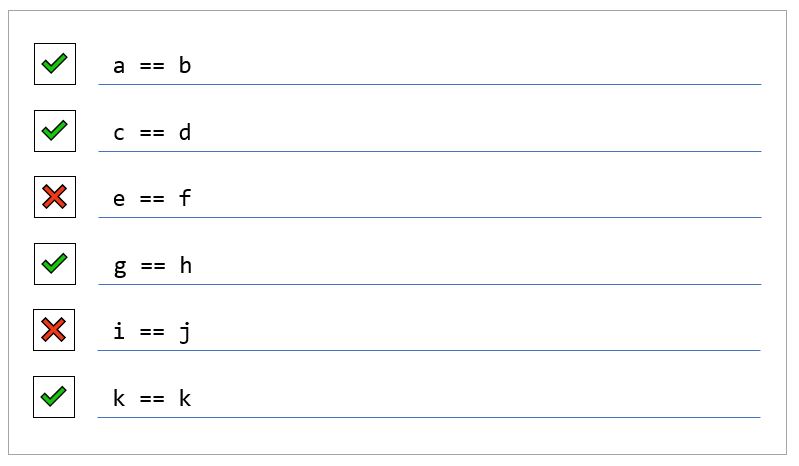

- If you have a single assertion condition, a hard assert is usually the right choice.

- If you are checking multiple related values, a soft assert is a suitable candidate.

- If, after the failure of Condition X, you still want to check Condition Y, a soft assert is a suitable candidate.

- If after the failure of Condition X, there is no value in checking Condition Y or performing subsequent test steps, the right choice is either to use a hard assert or call the soft assert’s “fail test script if a soft assert failed” function immediately after Condition X.
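The last point, calling the “fail test script if a soft assert failed” function right after Condition X, can be sketched as follows. As before, `SoftAssert`, `check`, `assert_all`, and the status code and body fields are hypothetical names chosen for this example, not a real library's API.

```python
class SoftAssert:
    """Hypothetical minimal soft-assert helper (illustration only)."""

    def __init__(self):
        self.failures = []

    def check(self, condition, message):
        # Record the failure and keep going.
        if not condition:
            self.failures.append(message)

    def assert_all(self):
        # Fail the test script if any soft assert recorded so far has failed.
        if self.failures:
            raise AssertionError("; ".join(self.failures))


def check_order_response(status_code, body):
    soft = SoftAssert()

    # Condition X: if the request itself failed, checking the body adds no value.
    soft.check(status_code == 201, f"status code was {status_code}, expected 201")
    soft.assert_all()  # evaluate immediately instead of waiting for the end

    # Related values from the same response: report every mismatch together.
    soft.check(body.get("status") == "active", f"status was {body.get('status')!r}")
    soft.check(body.get("quantity") == 5, f"quantity was {body.get('quantity')!r}")
    soft.assert_all()


def failure_message(status_code, body):
    # Small helper so both scenarios can be run side by side.
    try:
        check_order_response(status_code, body)
        return ""
    except AssertionError as err:
        return str(err)


early = failure_message(500, {})
late = failure_message(201, {"status": "pending", "quantity": 3})
print(early)
print(late)
```

The first call fails on the status code alone and never reports the body fields; the second gets past Condition X and reports both body mismatches in one failure.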

When it comes to test automation, the understandability of the results is of utmost importance; if we don’t understand our results, we cannot appropriately act on them to resolve issues. When deciding between hard and soft asserts, it’s usually best to choose the one that will reduce test script maintenance, which includes diagnosing issues revealed by the automation.



This article is curated as a part of #60th Issue of Software Testing Notes Newsletter.

https://softwaretestingnotes.substack.com/p/issue-60-software-testing-notes
