
“Tea. Earl Grey. Hot.” What Would a Siri API Look Like?

It has been widely rumored that Siri will soon support third-party apps. For now, heavy users of Siri have grown accustomed to the apologies and I-don’t-knows that greet any query outside its comfort zone. Examples:

- “What’s the score of the Duke Carolina game?”

- “What are the latest tweets by at gruber?”

- “Update Mac Tyler’s email address to desk at Mac Tyler dot com.”

- “When is Moonrise Kingdom playing tonight?”

- “What’s going on in the world right now?”

- “Send my latest presentation to Jennifer.”

- “What do I need to make on my next exam in Chemistry to get an A?”

One could go on. Clearly, Wolfram Alpha is not the best place to send most of these questions. These requests should instead be handled, respectively, by ESPN, Twitter, Contacts, Movie Trailers, CNN, Keynote, and Grades 2, by way of a system-wide API. But what would a Siri API look like?

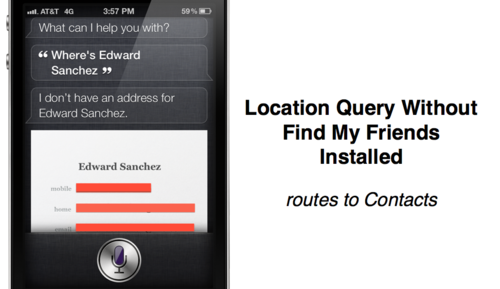

To start, let’s keep in mind that some kind of private Siri API already exists, apparently wired into apps that don’t ship with the system. Consider Find My Friends. Without Find My Friends installed, Siri answers the query “Where’s Edward Sanchez?” like this:

As you can see, Siri intelligently routes me to Contacts and gives me whatever I have input as Edward’s address.

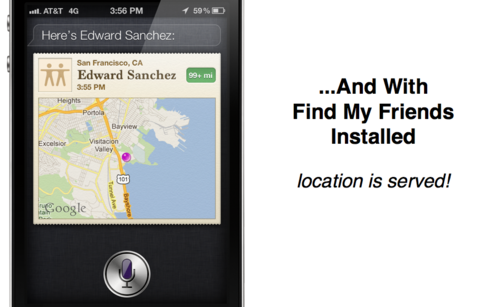

Now with Find My Friends installed:

Voilà. Find My Friends serves up a location through the private Siri API. It seems safe to assume that Weather, Stocks, Mail, Messages, Clock, and the rest of the stock apps (surely with Movie Trailers to come, to handle showtimes) are responsible for sending their own data (and graphics) to Siri.

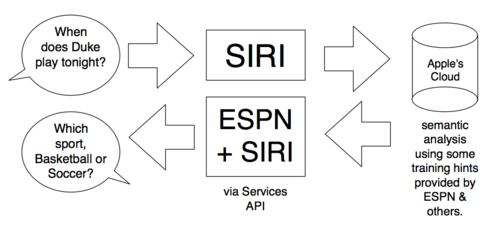

If this is true, we third-party developers have a lot of work ahead of us, and this work can be broken into two parts: Services and Semantics. Services already exist in some form on OS X (Services), Android (intents), and Windows 8 (contracts). A service is the system-wide ability of an app to accept various kinds of tasks from another app. For example, if I develop a new music app, I’ll want to be considered by Siri if the user makes a music request. Thus, I’ll register my app with the “Siri Play Music” Service.
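To make the Services half concrete, here is a minimal sketch of what registration could look like. Nothing here is Apple's API: the `ServiceRegistry` class, the `"siri.play-music"` identifier, and the app names are all invented for illustration, and Python is used only as a neutral sketching language.

```python
from collections import defaultdict


class ServiceRegistry:
    """Hypothetical system-wide table: service name -> apps that handle it."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def register(self, service, app_name):
        # An app declares that it can handle a given kind of request.
        if app_name not in self._handlers[service]:
            self._handlers[service].append(app_name)

    def candidates(self, service):
        # Apps the assistant may consider for this kind of request,
        # in registration order. Unknown services yield an empty list.
        return list(self._handlers.get(service, []))


registry = ServiceRegistry()
registry.register("siri.play-music", "Music")       # stock app
registry.register("siri.play-music", "MyMusicApp")  # hypothetical third-party app
```

With both apps registered, `registry.candidates("siri.play-music")` returns both names, and it would be up to Siri (or the user) to pick between them. How that choice gets made is exactly the kind of policy question a real API would have to answer.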

The second half of Siri integration, Semantics, is the tricky part: something that most iOS developers have never dealt with. Semantics will attempt to capture the various ways a user can ask for something and, more importantly, the ways Siri can in turn ask for more information when it needs it. Developers will therefore have to imagine the many phrasings of a request and provide “hints” for them. Sure, machine learning can cover some of that, but at this early stage Siri will need human supervision to work seamlessly.

For example, if I ask “When does the Duke game start,” Siri will somehow have to know that this is the same question as “When does Duke play tonight,” and so on. It’s not magic. Someone has to tell Siri that those two things mean the same thing, and that someone, if it can be helped, should not be the user. It should be the developers of the ESPN app. This of course won’t be enough. Entire response trees will need to be implemented by app developers. The ESPN app will need to be smart enough to reply, “Which sport, Duke Men’s Basketball or Duke Women’s Soccer?” And so on.
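A rough sketch of both pieces, the hints and a one-level response tree, might look like the following. This is speculation in code form, not a real API: the `HINTS` table, the `{team}` slot syntax, the `game_start_time` intent name, and the `TEAMS` data are all assumptions made up for the example, and plain pattern matching stands in for whatever language understanding Siri actually uses.

```python
import re

# Hypothetical hint table an ESPN-like app might register: phrasing -> intent.
HINTS = {
    "when does the {team} game start": "game_start_time",
    "when does {team} play tonight": "game_start_time",
}

# Hypothetical data the app would own: which teams a school name could mean.
TEAMS = {"duke": ["Duke Men's Basketball", "Duke Women's Soccer"]}


def normalize(utterance):
    """Lowercase and drop punctuation so small differences don't block a match."""
    return re.sub(r"[^a-z0-9 ]", "", utterance.lower()).strip()


def compile_hint(phrasing):
    """Turn a phrasing with {slot} placeholders into an anchored regex."""
    parts = re.split(r"\{(\w+)\}", phrasing)  # literal, slot, literal, ...
    pattern = "".join(
        re.escape(part) if i % 2 == 0 else f"(?P<{part}>.+?)"
        for i, part in enumerate(parts)
    )
    return re.compile(f"^{pattern}$")


COMPILED = [(compile_hint(p), intent) for p, intent in HINTS.items()]


def interpret(utterance):
    """Map an utterance to (intent, slots), or (None, {}) if no hint matches."""
    text = normalize(utterance)
    for pattern, intent in COMPILED:
        match = pattern.match(text)
        if match:
            return intent, match.groupdict()
    return None, {}


def respond(utterance):
    """One level of a response tree: ask a follow-up when the team is ambiguous."""
    intent, slots = interpret(utterance)
    if intent is None:
        return "Sorry, I can't help with that."
    options = TEAMS.get(slots["team"], [])
    if len(options) > 1:
        return "Which sport, " + " or ".join(options) + "?"
    if len(options) == 1:
        return f"Looking up the start time for {options[0]}."
    return f"I don't know a team called {slots['team']}."
```

Here `interpret("When does the Duke game start?")` and `interpret("When does Duke play tonight")` both resolve to the same intent with `team` set to `"duke"`, and `respond` comes back with the follow-up question because two Duke teams are on file. A real response tree would go several levels deeper, but the shape is the same: the developer supplies the phrasings and the follow-ups, not the user.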

Will such a system be subject to abuse? Almost certainly. Imagine Google claiming it can handle all queries, then simply sticking them in its search engine. App screeners will need to carefully approve each registered semantic clause to make sure it isn’t too general (and thus not closely enough connected with the user’s specific intent) and that it doesn’t reach beyond the app’s core capabilities. This is the kind of judgment human screeners are especially good at, and something that Google, with Android, won’t be able to scale with robots anytime soon. It is a kind of service an open platform will struggle with.
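The two checks a screener would apply can at least be roughed out as a pre-filter that flags suspicious clauses for a human to look at. This is only a sketch under invented assumptions: the `{slot}` clause syntax, the idea of an app declaring a set of keywords, and the two-word threshold are all hypothetical, and a crude rule like this would supplement human review, not replace it.

```python
import re


def literal_words(clause):
    """Words left in a clause once {slot} placeholders are removed."""
    return re.sub(r"\{\w+\}", " ", clause).lower().split()


def flag_for_review(clause, declared_keywords, min_literal_words=2):
    """Return the reasons a human screener should look closely at this clause."""
    words = literal_words(clause)
    reasons = []
    if len(words) < min_literal_words:
        # Almost nothing but slots: the clause would match nearly any query.
        reasons.append("too general")
    if not any(word in declared_keywords for word in words):
        # No overlap with what the app says it does.
        reasons.append("outside declared capabilities")
    return reasons
```

A catch-all clause such as `"{anything}"` gets flagged on both counts, while `"when does {team} play tonight"` passes for an app that declared sports keywords like `play` or `game`. Anything flagged would still go to a person for the final call.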

If I were to speculate about what Apple’s big WWDC TBA session is (some have guessed television), I would guess that Apple is going to teach its multitude of developers the basics of natural language processing and how exactly it plans to let them integrate with Siri. Let’s keep in mind that a conversational semantic services API of this kind, whatever it ends up looking like, has never been done before and will likely require new tools and new paradigms to fully capture its power and breadth. And I can’t think of a better place, or time, to introduce such a platform than the upcoming WWDC.