Dec 15 2020

Being Manipulated by Robots

How susceptible would you be to suggestion or even manipulation by a robot, or even just a digital AI (artificial intelligence)? This is one of those questions where almost everyone thinks they would not be affected, while in reality many or even most people are. We don’t like to think that our behavior can easily be manipulated, but a century of psychological research tells a different story. There is an entire industry of marketing, advertising, and product placement based on the belief that your behaviors can be deliberately altered, and not just through persuasion but through psychological manipulation. Just look at our current political environment, and how easily large portions of our society can be convinced to at least express support for absurd ideas, simply by pushing the right buttons.

Robots and AI are likely to play dramatically increased roles in our society in the future. In fact, our world is already infused with AI to a greater extent than we realize. You have probably interacted with a bot online without realizing it. For example, a recent study found that about half of the Twitter accounts spreading information about coronavirus are likely bots. What about when you know that an actual physical robot is the one giving you feedback – will that still affect your behavior? Apparently so.

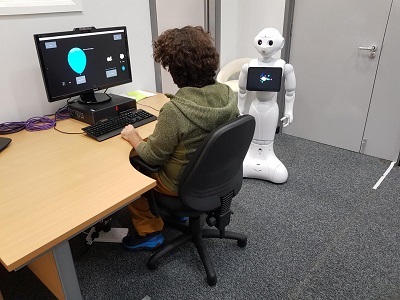

In a recent study, psychologists had subjects play a computer game that has already been validated as a marker of risk taking. The game involves hitting the space bar to inflate a digital balloon. As the balloon gets bigger, it becomes worth more digital pennies. You can cash in at any time. However, the balloon can also pop at random, and you then lose all the money from that balloon. The more risk you take, the more money you can make, but the greater the chance that you lose it all, at least on that go. The researchers had subjects play the game in one of three scenarios: alone; with a robot present that told them when to begin but was then silent; or with a robot present that gave them encouragement to keep going.
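To make the trade-off in the game concrete, here is a minimal sketch in Python. It is not the researchers' software, and the numbers are my own assumptions for illustration: each pump is worth one penny and carries a fixed 5% chance of popping the balloon (the study's actual payout and pop rule may well differ). The function names `play_balloon`, `expected_earnings`, and `average_earnings` are hypothetical.

```python
import random


def play_balloon(target_pumps, pop_chance=0.05, pennies_per_pump=1, rng=None):
    """Pump one balloon up to target_pumps times, then cash in.

    Returns the pennies earned, or 0 if the balloon pops first.
    pop_chance and pennies_per_pump are assumed values, not the study's.
    """
    if rng is None:
        rng = random.Random()
    earned = 0
    for _ in range(target_pumps):
        if rng.random() < pop_chance:
            return 0  # balloon popped: everything on this balloon is lost
        earned += pennies_per_pump
    return earned


def expected_earnings(target_pumps, pop_chance=0.05, pennies_per_pump=1):
    """Average payout for a fixed plan: the full amount times the chance of surviving every pump."""
    survive_all = (1 - pop_chance) ** target_pumps
    return target_pumps * pennies_per_pump * survive_all


def average_earnings(target_pumps, rounds=10_000, seed=0, **kwargs):
    """Estimate the average payout by simulating many balloons."""
    rng = random.Random(seed)
    total = sum(play_balloon(target_pumps, rng=rng, **kwargs) for _ in range(rounds))
    return total / rounds


if __name__ == "__main__":
    for pumps in (5, 10, 20, 40):
        print(pumps, round(expected_earnings(pumps), 2), round(average_earnings(pumps), 2))
```

Under these assumed numbers, a cautious player who stops after 5 pumps earns less on average than one who pushes to around 20, while a player who keeps pushing far beyond that mostly just pops balloons. Stopping too early leaves money on the table, which is the sense in which the game measures risk taking.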

The group with the encouraging robot took significantly more risk in this game than the other two groups, which did not differ from each other. It would have been fun to have a fourth group with a human giving encouragement to see if that differed from the robot – perhaps in a follow-up experiment. It’s also interesting that the riskier, robot-encouraged group made more money than the other two groups. What do these results mean?

As a side point, the study shows that people are risk averse (supporting lots of prior research showing this phenomenon). Without encouragement, people were more risk averse than would be optimal for maximizing their earnings. They tended to reduce the risk they were willing to take after losing money to a balloon pop. We can play a little evolutionary psychology with this – why did humans evolve to be apparently excessively risk averse? My guess is that in the so-called evolutionary milieu, risk taking was more about avoiding death than losing money. As the stakes get higher, and the costs of losing greater, then being more risk averse makes sense. We are therefore the descendants of those primates who were generally risk averse and avoided death. We are also greatly affected by recent experience, and avoid behavior that has previously produced a negative outcome.

We see this behavior a lot in medicine. In fact, much of the anti-vaxxer movement is fueled by this psychology – “I know someone who had a bad reaction to a vaccine, therefore I avoid all vaccines.” Stories of apparent negative reactions have a lot of emotional impact. We are just a risk-averse species.

But this risk aversion can be overcome with a little encouragement. This is because we are also a social species – we respond very powerfully to social cues, or little psychological nudges. And we respond even if the social interaction is coming from an artificial object or digital representation. This is also in line with prior research, what I call the “cartoon phenomenon”. We can respond with full emotions to a 2-dimensional cartoon we know is fake. This is also consistent with what we know about neurology – our brains classify objects as alive, and therefore connected to emotions, if they act alive, even if they are just cartoons, tiny simple robots, or even just basic shapes. And “acting alive” just means moving in a non-inertial frame, in other words, moving in a way that cannot be completely accounted for by external forces like gravity.

While this is just one study, it is consistent with a growing body of research showing all of the above – we can be manipulated, and we respond to robots as if they are alive as long as they act alive, which means robots (including just digital or virtual bots) can manipulate us. The real question is – what are the implications of these facts? To what extent will they be used in a benign fashion to help people, vs used in a selfish fashion to promote an agenda or self-interest? I hate to be pessimistic, but I think it is much more likely that this type of thing will mostly be used to sell stuff and promote ideological misinformation. In totalitarian countries, it will be used by the government to control the people. I say “will” but this is already happening.

The authors of the study write: “Our results point to both possible benefits and perils that robots might pose to human decision-making.” Yes, they do. But will we collectively anticipate the full expression of these benefits or perils, and what will we do about it? Will we leave it up to big tech companies, or will we craft regulations? How much is this a cybersecurity issue (hint – a lot)? Obviously this is a complex and deep issue beyond what I can discuss here. But it is an issue that needs attention. As with many technologies, we need to contemplate the implications and try to get out in front of possible abuses with careful regulations. Ideally this would be done transparently, in full public view with input from many sectors. In a healthy open society different institutions balance each other – government, industry, media, professions, academia, and public advocacy groups – to minimize abuse. Whether or not we are currently a “healthy” society is yet another discussion.

But it’s probably not wise to ignore this issue and just let it evolve blindly. That is unlikely to lead to a place we want to be.