I missed the announcement of the publication of the 2023-24 World Quality Report so I’m catching up here to maintain my record of reviewing this annual epic from Capgemini (I reviewed the 2018/19, 2020/21 and 2022/23 reports in previous blog posts).

I’ve taken a similar approach to reviewing this year’s effort, comparing and contrasting it with the previous reports where appropriate. This review is a lengthy post, but even if you read it in its entirety, I’m still saving you a lot of time compared with wading through the 96 pages of the actual report!

TL;DR

The 15th edition of the “World Quality Report” is the fourth I’ve reviewed in detail and this is the thickest one yet (in every sense?).

As expected, AI is front and centre this year, which is somewhat ironic given AI & ML barely got a mention in last year’s report. The hype is real and some of the claims made by respondents about their use of AI and its expected benefits strike me as being very optimistic indeed. Their faith in the accuracy of their training data is particularly concerning.

Realizing value from automation clearly remains a huge challenge and I found the data around automation in this year’s report more depressing than usual. I got the feeling that AI is seen as the silver bullet in solving automation woes and I think a lot of organizations are about to be very disappointed.

It would have been nice to see some questions around human testing to help us understand what’s going on in these large organizations but, alas, there was nothing in the report to enlighten us in this area. The prevalence of Testing Centres of Excellence (CoEs) is another hot topic, despite previous reports suggesting that such CoEs were becoming less common as the movement towards agility marched on.

There continue to be many problems with this report – from the sources of its data, to the presentation of findings, and through to the conclusions drawn from the data. The lack of responses from smaller organizations means that the results remain heavily skewed towards very large corporate environments, which perhaps goes some way to explaining why my lived reality working with organizations to improve their testing and quality practices is quite different to that described in this report.

“Quality Engineering” is not clearly delineated from testing, with the concepts often being used interchangeably – this is confusing at best and potentially misleading.

The focus areas of the report changed almost completely, from “six pillars of QE” last year to eight (largely different) areas this year, making comparisons from one report to the next difficult or impossible. The sample set of organizations is the same as last year in the interests of year-on-year comparison, so why change the focus areas and make the value of any such comparisons highly questionable? Is this a deliberate ploy or just poor study design?

Unlike the content of these reports, my advice remains steadfast – don’t believe the hype, do your own critical thinking and don’t take the conclusions from such surveys and reports at face value. While I think it’s worth keeping an interested eye on trends in our industry, don’t get too attached to them – the important ones will surface and then you can consider them more deeply. Instead, focus on building excellent foundations in the craft of testing that will serve you well no matter what the technology du jour happens to be.

The survey (pages 87-91)

This year’s report runs to 96 pages, the longest since I’ve been reviewing them (up from a mere 80 pages last year). I again looked at the “About the study” section of the report first as it’s important to get a picture of where the data came from to build the report and support its recommendations and conclusions.

The survey size was again 1750, the same number as for the 2022/23 report.

The organizations taking part again all had over 1000 employees, with the largest share (35% of responses) coming from organizations of over 10,000 employees. The response breakdown by organizational size was the same as that of the previous three reports, with the same organizations contributing every time. While this makes cross-report comparisons perhaps more valid, the lack of input from smaller organizations unfortunately continues and inevitably means that the report is heavily biased and unrepresentative of the testing industry as a whole.

While responses came from 32 countries (as per the 2022/23 report), they were heavily skewed towards North America and Western Europe, with the US alone contributing 16%, followed by France with 9%. Industry sector spread was similar to past reports, with “Hi-Tech” (19%), “Financial Services” (15%) and “Public Sector/Government” (11%) topping the list.

The types of people who provided survey responses this year were also very similar to previous reports, with CIOs at the top (24% again), followed by QA Testing Managers and IT Directors. These three roles comprised over half (59%) of all responses.

Introduction (pages 4-5)

The introduction is the usual jargon-laden opening to the report, saying little of any value. But there’s no surprise when it comes to the focus of this year’s epic:

…the emergence of a true game changer in the field of software and quality engineering: Generative AI adoption to augment our engineering skills, accelerated like never before. The lack of focus on quality seen in the last few years is becoming more visible now and has brought back the emphasis on the Hybrid Testing Center of Excellence (TCoE) model, indicating somewhat of a reversal trend.

Do the survey’s findings reflect the game-changing nature of generative AI around quality engineering? What’s with the “lack of focus on quality seen in the last few years” when previous reports have been glowing about QE and its importance in the last couple of years? And what exactly is a “Hybrid Testing Center of Excellence”? Let’s delve in to find out.

Executive Summary (pages 6-7)

While the Executive Summary is – as you’d expect – a fairly high level summary of the report’s findings, a couple of points are worth highlighting from it. Firstly:

…almost all organizations have transitioned from conventional testing to agile quality management. Evidently, they understand the necessity of adapting to the fast-paced digital world. An agile quality culture is permeating organizations, albeit often at an individual level rather than at a holistic program level. Many organizations are adopting a hybrid mode of Agile. In fact, 70% of organizations still see value in having a traditional Testing Center of Excellence (TCoE), indicating somewhat of a reversal trend.

I’m intrigued by what the authors mean by “conventional testing” and “agile quality management”, as well as the fact that the majority of organizations still adopt a “traditional” TCoE. Secondly:

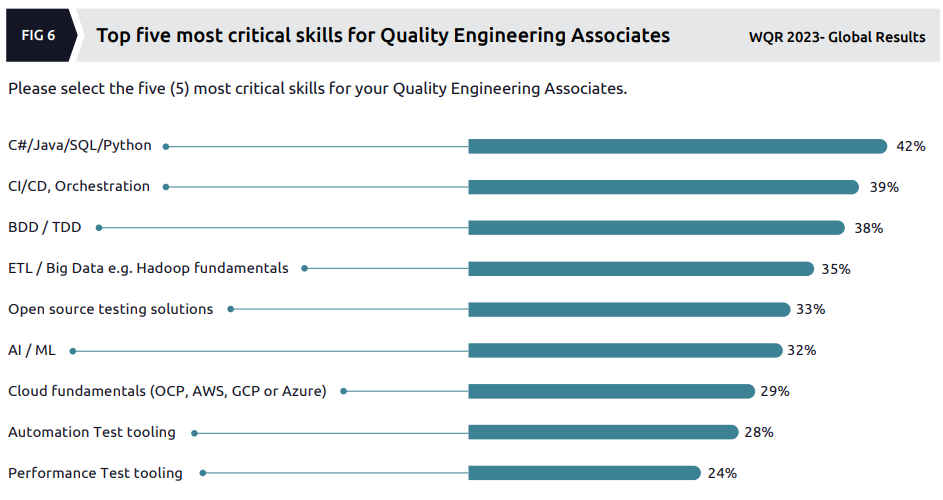

What is clear is the extended knowledge and skills that are required from the QE experts who operate in agile teams. Coding skills in particular (C#, Java, SQL, Python), and business-driven development (BDD) and test-driven development (TDD) competencies, are in demand.

The idea that “QE experts” need coding skills and competency in BDD and TDD strikes me as unrealistic. I’m not sure whether the authors are referring to expert testers with some development skills, expert developers with some testing skills or some superhuman combination of tester, developer and BA (remembering, of course, that BDD is neither a development nor a testing skill, per se).

With all the “game-changing” talk around AI, there’s a nod to reality:

A significant percentage (31%) remains skeptical about the value of AI in QA, emphasizing the importance of an incremental approach.

Key recommendations (pages 8-9)

The “six pillars of QE” from the last report (viz. “Agile quality orchestration”, “Quality automation”, “Quality infrastructure testing and provisioning”, “Test data provisioning and data validation”, “The right quality indicators” and “Increasing skill levels”) no longer warrant a mention, with this year’s recommendations being in these eight areas instead:

- Business assurance

- Agile quality management

- QE lifecycle automation

- AI (the future of QE)

- Quality ecosystem

- Digital core reliability

- Intelligent product testing

- Quality & sustainability

The recommendations are generally of the cookie cutter variety and could have been made regardless of the survey results in many cases. A couple of them stood out, though. Firstly, under “Digital core reliability”:

Use newer approaches like test isolation, contract testing etc., to drive more segmentation and higher automated test execution.

The idea that test isolation and contract testing are leading-edge approaches in the automation space is indicative of how far behind many large organizations must be. Secondly, under “Intelligent product testing”:

Invest in AI solutions for test prioritization and test case selection to drive maximum value from intelligent testing.

I was wondering what “intelligent product testing” was referring to, so maybe the authors are suggesting delegating the intelligence aspect of testing to AI? I’m aware of a number of tools that claim to prioritize tests and select from a library of such test cases. But I’m also aware that good test prioritization relies on a lot of contextual inputs, so I’m dubious about any AI’s ability to do this “to drive maximum value” from (intelligent) testing.

Current trends in Quality Engineering & Testing (pages 11-59)

Exactly half of the report is focused on current trends, broken down into the eight areas detailed in the previous section. Some of the most revealing content is to be found in this part of the report. I’ve broken down my analysis into the same sections as the report. Strap yourself in.

Business assurance

This area was not part of the previous year’s report. There’s something of a definition of what “business assurance” is, even if I don’t feel much wiser based on it:

Business assurance encompasses a systematic approach to determining business risks and focusing on what really matters the most. It consists of a comprehensive plan, methodologies, and approaches to ensure that business operation processes and outcomes are aligned with business standards and objectives.

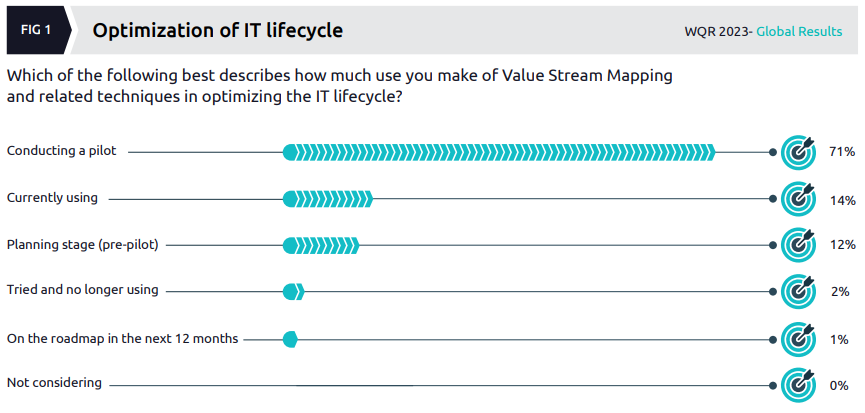

One point of focus in this section is value stream mapping (which featured in the previous report) but the authors’ conclusions about its widespread adoption appear at odds with the data:

Many organizations are running pilots apparently, but their statement that “Many businesses are now shifting from mere output to a results-driven mindset with value stream mapping (VSM)” seems to go too far.

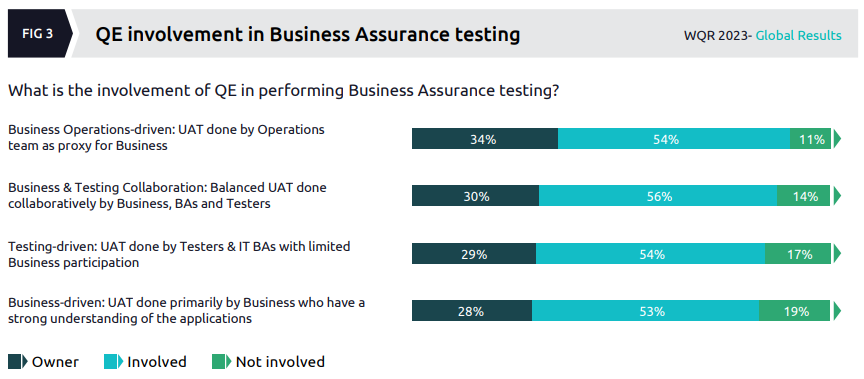

Moving on to how QE is involved in “Business Assurance testing” (which is not defined and I’m unclear as to what it actually is):

This data was from a small sample (314 of the 1750 respondents) and their conclusion that “both business stakeholders and testers are working together during the UAT process to drive value” (from the second bar) is hardly a revelation.

It remains unclear to me why this area is included in this report, as it doesn’t seem strongly related to quality or testing. The first recommendation in this section of the report is “Leverage the testing function to deliver business outcomes” – isn’t this what good testing always helps us to do?

Agile quality management

This area wasn’t in the previous year’s report (the closest subject matter was under “Quality Orchestration in Agile Enterprises”) and the authors remind us that “last year, we noticed a paradigm shift in AQM [Agile Quality Management] through the emphasis placed on embracing agile principles rather than merely implementing agile methodologies”. The opening paragraph of this section sets the scene, sigh (emphasis is mine):

The concept of agile organizations has been a part of boardroom conversation over the past decade. Businesses continue to pursue the goal of remaining relevant in volatile, uncertain, complex, and ambivalent environments. Over time, this concept has evolved into much more than a mere development methodology. It has become a way of thinking and a mindset that emphasizes continuous improvement, adaptability, and customer-centricity. This evolution is clearly represented in the trends shaping agile quality management (AQM) practices today.

I’m going to assume “ambivalent” is a typo (or ChatGPT error). Much more worrisome to me is the idea that agile has evolved from being a development methodology to “a way of thinking and a mindset that emphasizes continuous improvement, adaptability, and customer-centricity” – this is exactly the opposite of what I’ve seen! The great promise shown by the early agilists has been hijacked, certified, confused, process-ized, packaged and watered down, moving away from being a way of thinking to a commodity process/methodology. Either the authors’ knowledge of the history of the agile movement is lacking or they’re confused (or both). Having said that, if they genuinely have evidence that there’s a move away from “agile as methodology” (aka “agile by numbers”) to “agile as mindset”, then I think that would be a positive thing – but I failed to spot any such evidence from the data they share.

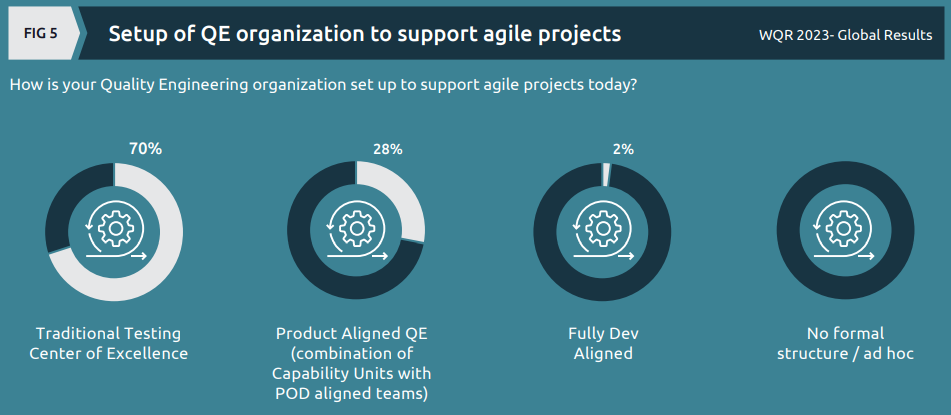

The first data looks at how QE is organized to support agile projects, with a whopping 70% still relying on a “Traditional Testing Center of Excellence” and essentially all the rest using “product aligned QE” (which I think basically means having QE folks embedded with the agile teams).

Turning to skills:

Worryingly, there is no mention here of actual testing skills, but the authors remark:

Organizations are now prioritizing development skills over traditional testing skills as the most critical skills for quality engineers. Development-focused skills like C#/Java/SQL/Python and CI/CD are all ranked in the top 5, while traditional testing skills like automation and performance tooling ranked at the bottom of the results.

Looking at this skills data, they also say:

We feel this is in alignment with the industry’s continued focus on speed to market; as quality engineers continue to adopt more of a developer mindset, their ability to introduce automation earlier in the lifecycle increases as is their ability to troubleshoot and even remediate defects on a limited basis.

I find the lack of any commentary around human testing skills (outside of coding, automation, DevOps, etc.) deeply concerning. These skills are not made redundant by agile teams/“methodologies” and the lack of humans experiencing software before it’s released is not a trend that’s improving quality, in my opinion.

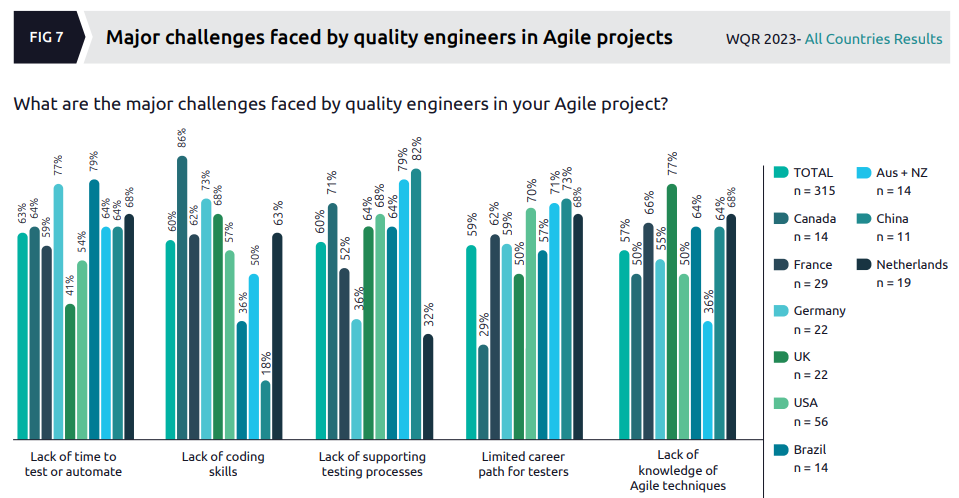

Turning to the challenges faced by quality engineers in Agile projects:

This is a truly awful presentation of the data: the lack of contrast in the colours used on the various bars makes it almost unreadable. The authors highlight one data point, the 77% of UK organizations for which “lack of knowledge of Agile techniques” was the most common challenge, saying:

This could indicate the speed at which these organizations moved into a product-aligned QE model while still trying to utilize their traditional testers.

They slip in and out of the use of terminology such as “Agile techniques” throughout the report (which is unhelpful) and they appear to be claiming that “traditional testers” moving into an Agile “product-aligned QE model” have a lack of knowledge – maybe they’re referring to good human testers being asked to become (QE) developers, in which case this lack of knowledge is to be expected.

The following text directly contradicts the previous data on the very high prevalence of “traditional” testing CoEs:

Many quality engineering organizations predicted the evolution and took a more proactive stance by shifting from a traditional ‘Testing Center of Excellence’ approach to a product-based quality engineering setup; however, most organizations are still in the process of truly integrating their quality engineering teams into an agile-centric model. As shown in the diagram… only 4% of respondents report that more than 50% of their quality engineering teams are operating in a more agile-centric pod-based model.

In closing out this section, the authors say (emphasis is mine):

…quality engineering teams are asked to take on a more development-focused persona and build utilities for the rest of the organization to leverage rather than focusing solely on safeguarding quality. The evolution of agile practices, the integration of AI and ML, and the synergy between DevOps and agile are transforming quality engineering in infinitely futuristic ways. The question is, when will organizations embrace these changes en-masse, adopt proactive strategies, and make them a norm? Next year’s report will probably unveil the answer.

I find it highly unlikely that the answer to this question will be revealed in the next report, as such questions have rarely – if ever – been answered in the past. Given how difficult it’s been for large organizations to move towards agile ways of working (despite 20+ years of trying), I’d suggest that en masse movement towards QE is unlikely to eventuate before the next big thing distracts these trend followers from realizing whatever benefits the QE model was designed to bring.

QE lifecycle automation

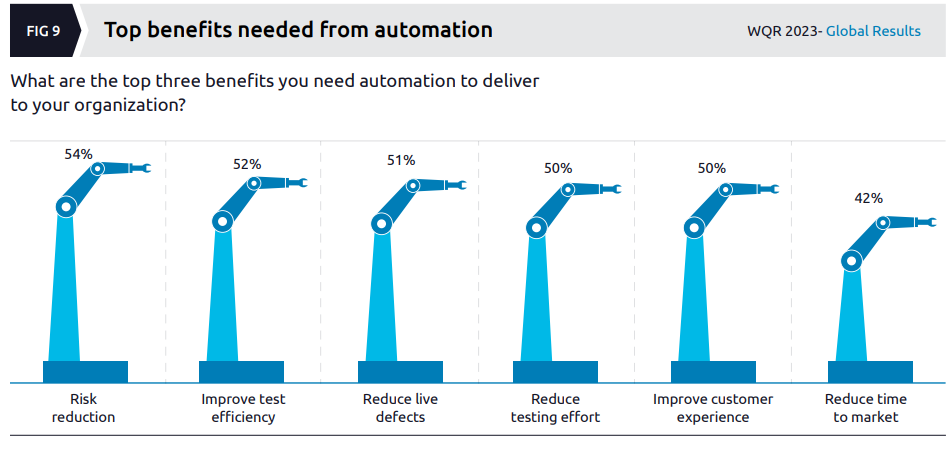

This section of the report is on all things automation, now curiously being referred to as “QE lifecycle automation”. It kicks off with the benefits the respondents are looking for from automation:

I’ll make no comment on these expectations apart from mentioning that my own experience of implementing many automation initiatives hasn’t generally seen “reduced testing effort” or “improved test efficiency” (though I’m not sure what they mean by that here).

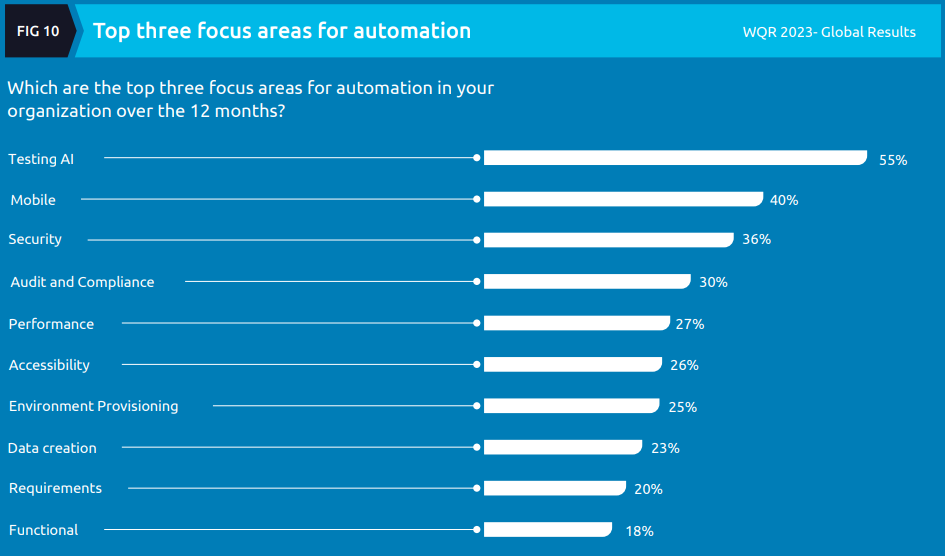

Moving into where organizations intend to focus their automation efforts in the coming year:

The authors say:

We were expecting AI to steal the limelight this year since it is the buzzword in the boardroom these days. We think that AI is the new tide that needs to be ridden with caution, which means quality engineering and testing (QE&T) teams need to understand how AI-based tools work for them, how they can do their jobs better, and bring better outcomes for their customers.

More than 50% of the respondents were eager to see testing AI with automation, which is well ahead of the other top focus areas like security and mobile.

Interestingly, we found that functional (18% of respondents) and requirements (20% of respondents) automation were of the least focus for organizations, presumably because of the challenges in automating and regular updating involved in these areas. Perhaps, this is an area where we can expect to see AI tools becoming a key part of automation toolkits.

Surprise, surprise, it’s all about AI! It’s time to ride the tide of AI, folks. What does “Testing AI” mean? Is it testing systems that have some aspect of AI within them or using AI to assist with testing? Whatever it is, it’s the top priority apparently.

I also don’t understand what “Functional” means and how other automation focus areas are related to it or not. For example, if I implement a UI-driven test on a mobile device using automation, does that come under “Functional” or “Mobile” (or both)? It’s hard for me to fathom how respondents answer such questions without understanding these distinctions.

The last part of the quote above is illustrative of the state of our industry – building robust and maintainable automation is just too hard, so we’ll deprioritize that and get distracted by this shiny AI thing instead.

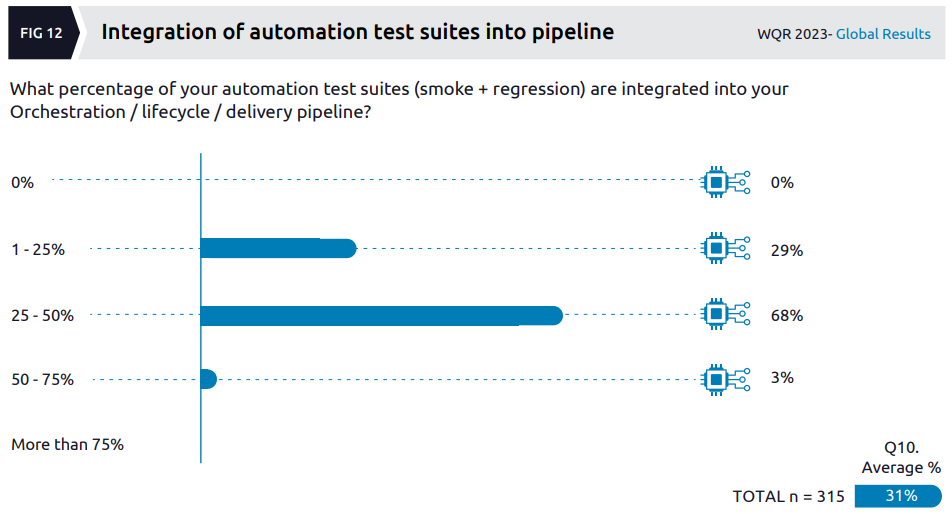

The data around integration of automated tests into pipelines makes for shocking reading:

The authors note:

Overall, the survey revealed that only 3% of respondents’ organizations have more than half of their suites integrated which may be due to diminishing returns or lack of QE access to orchestration instances. This comes as a surprise since most of the organizations now have some automated test suites integrated into their pipelines for smoke and regression testing.

I agree that this low percentage of organizations actually getting their automated test suites into pipelines is surprising! Maybe they shouldn’t be so worried about trying to make use of AI, and should focus instead on making meaningful and efficient use of what they already have. The response to the next question, “What are the most impactful challenges preventing your Quality Engineering organization from integrating into the DevOps/DevSecOps pipeline?”, revealed that a whopping 58% (the top answer) said “Quality Engineering team doesn’t have access to enterprise CI instance”. I rest my case. With no sense of irony about the report’s findings more generally, the authors say:

What was worth noting was that the more senior respondents (those furthest from the actual automation) reported higher levels of integration than those solely responsible for the work

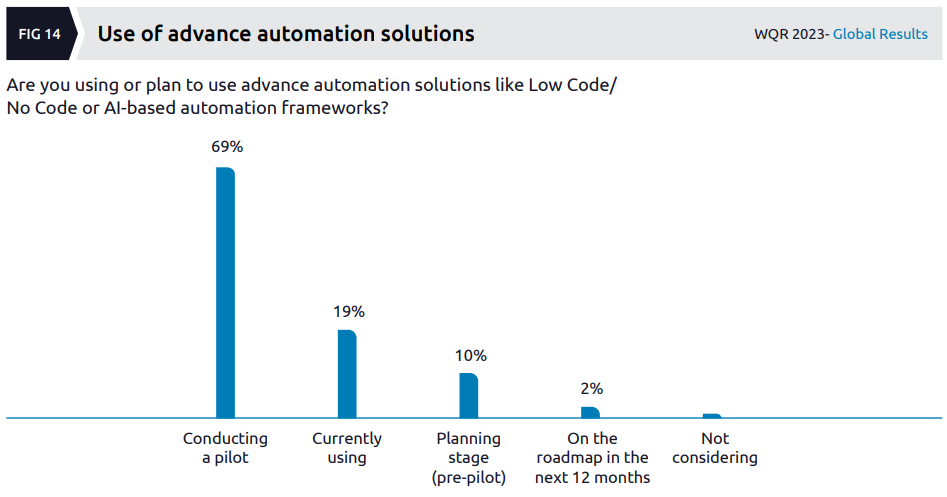

The much vaunted dominance of low/no code & AI automation solutions is blown away by this data, with not much going on outside of pilots:

The data in this section of the report, as per similar sections in previous reports, only goes to show how difficult it is for large organizations to successfully realize benefits from automation. Maybe “AI” will solve this problem, but I very much doubt it as the problems are generally not around the technology/tools/toys.

AI (the future of QE)

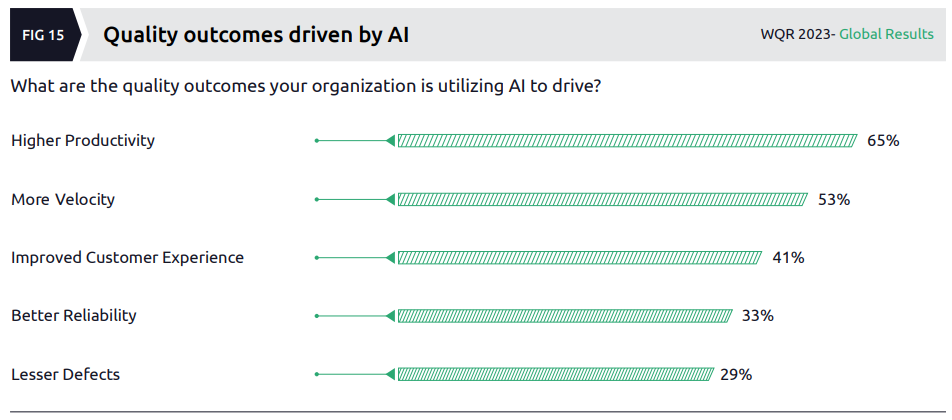

With AI being such a strong focus of this year’s report, this section is where we get into the more detailed data. Firstly, it’s no surprise that organizations see AI as the next big thing in achieving “higher productivity”:

Looking at this data, it’s all about speed with any consideration around improving quality coming well down the list (“lesser defects” – which should be “fewer defects” of course – coming last on this list). Expressing their surprise at this lack of focus on using AI to reduce defects, the authors say (emphasis is mine):

With Agile and DevOps practices being adopted across organizations, there is more continuous testing with multiple philosophies like “fail fast” and “perpetual beta” increasing the tolerance for defects being found, as long as they can be fixed quickly and efficiently.

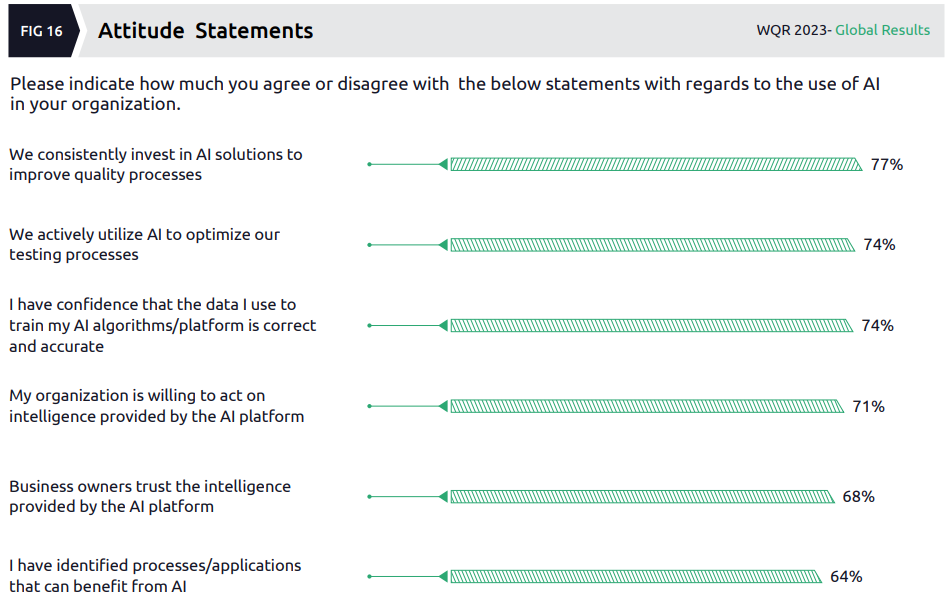

I find the response around training data in this question quite disturbing:

The authors are quite bullish on this, saying:

…the trust in training data for AI solutions is very high, which in turn reflects the robust infrastructure and processes that organizations have developed over the years to collect continuous telemetry from all parts of the quality engineering process.

I’m less than convinced this is the reason for such confidence and it feels to me like organizations want to feel confident but have little evidence to back up that confidence. I really hope I’m wrong about that, since AI solutions are clearly being seen as useful ways to drive significant decisions, in particular around testing.

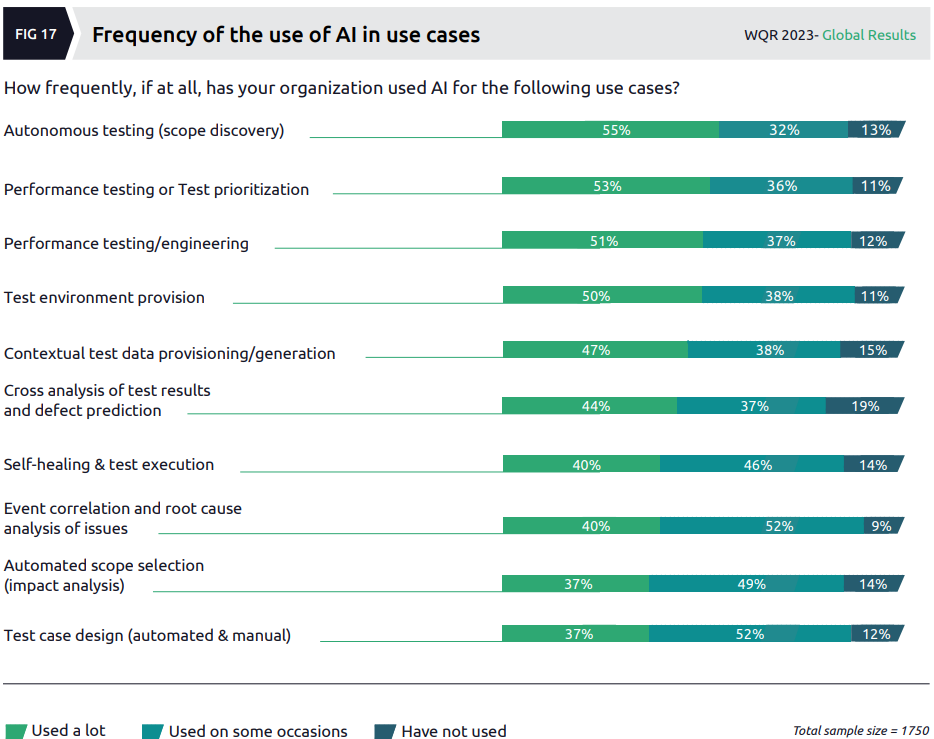

The next question is where the reality of AI usage is revealed:

The use cases seem very poorly thought out, though. For example, does performance testing belong in “Performance testing or Test prioritization” or “Performance testing/engineering”? And why would performance testing be lumped together with test prioritization when, to me at least, they’re such different use cases?

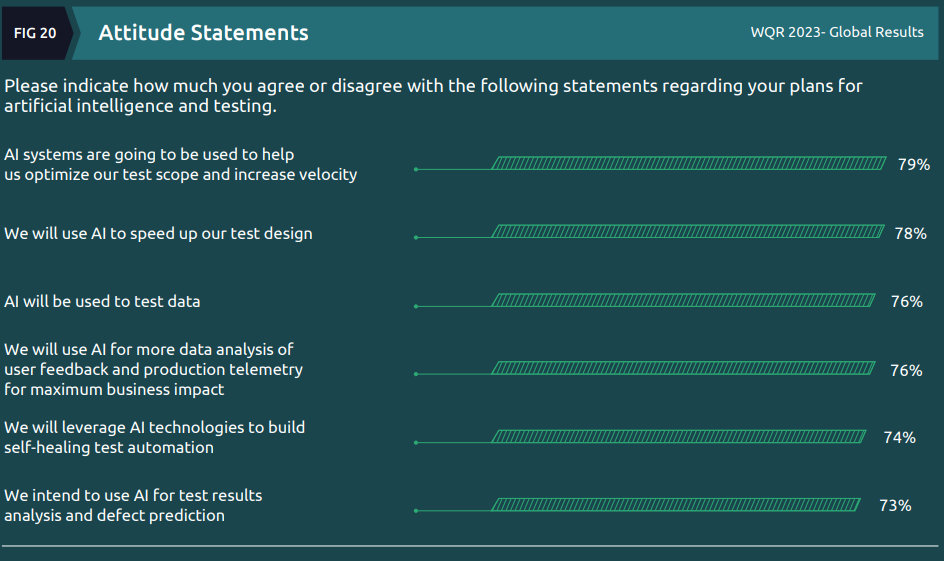

I’ll wrap up my observations on this AI section with this data:

I’m suspicious that all of these statements get almost the same level of agreement.

Quality ecosystem

This section (which is similar in content to what was known as “Quality infrastructure testing and provisioning” in the previous report) kicks off by looking at “cloud testing”:

…82% of the survey respondents highlighted cloud testing as mandatory for applications on cloud. This highlights a positive and decisive shift in the testing strategy that organizations are taking on cloud and infrastructure testing. It also demonstrates how important it is to test cloud-related features for functional and non-functional aspects of applications. This change in thinking is a result of organizations realizing that movement to cloud alone does not make the system available and reliable.

The authors consider this data to be very positive, but my initial thought was: why isn’t this number 100%?! If your app is in/on the cloud, where else would you test it?

The rest of this section was more cloud stuff, SRE, chaos engineering, etc. and I didn’t find anything noteworthy here.

Digital core reliability

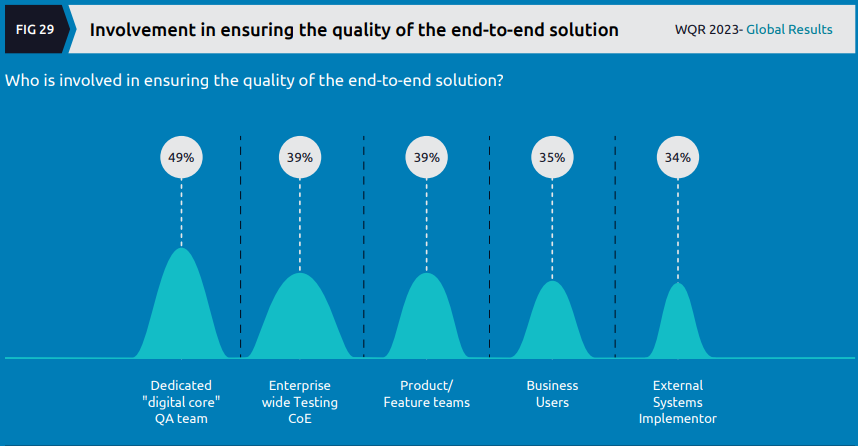

In this section of the report (which had no obvious corresponding section in last year’s report), the focus is on foundational digital (“core”) technology and how QE manages it. In terms of “ensuring quality”:

About half of the respondents say that a dedicated QA team is responsible for “ensuring the quality” but there’s also a high percentage still using CoEs or having testers embedded into product/feature teams according to this data.

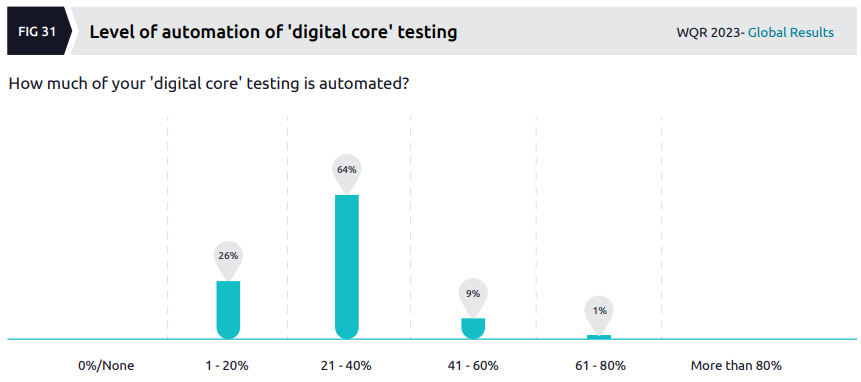

Turning to automation specifically for digital core testing:

The authors go on to discuss an “enigma” around the low level of automation revealed by this data (emphasis is mine):

This clearly is due to the same top challenges around testing digital core solutions – the complexity of the environment owing to the mix of tools, dependencies related to environment and data availability. When it is hard to test, it is even harder to automate.

There’s also another contradiction we need to address – while 31% of organizations feel the pressure to keep up with the pace of development teams developing digital core solutions, 69% of organizations do not feel the pressure. With <40% of automation coverage and digital core solutions becoming more SaaS and less customized, it is a bit of an enigma to unravel. Why don’t organizations feel the pressure to keep up? Is that because they have large QA teams rushing to complete all testing manually? Or are teams stressed by the number and frequency of code drops coming in for testing?

It’s good to see the authors acknowledging that the data is contradictory in this area. There are other possible causes of this “enigma”. Maybe the respondents didn’t answer honestly (or, more generously, misunderstood what they were being asked in some of the survey questions). Maybe they don’t feel the pressure to keep up because they’ve lowered their bar in terms of what they test (per the previous commentary on the “increasing tolerance for defects”).

The following is one of the more sensible suggestions in the entire report:

When it comes to test automation, replicating what functional testers do manually step by step through a tool might not be the best possible approach. Trying to break the end-to-end tests into more manageable pieces with a level of contract testing could be a more sustainable approach for building automated tests.

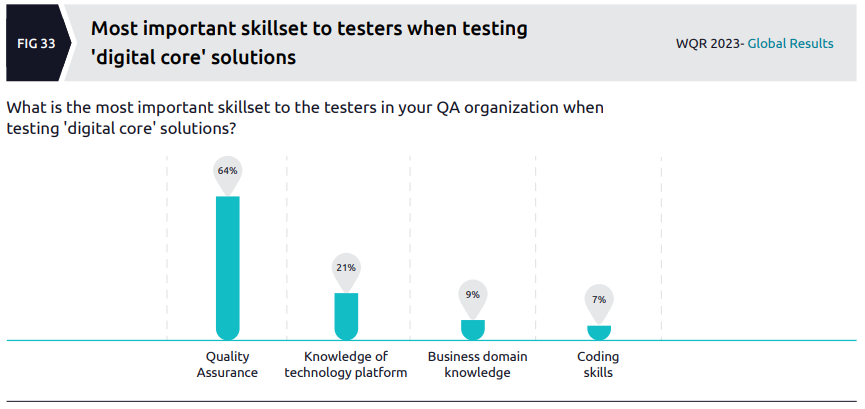

Wrapping up this section, the focus turns to skills required by testers when testing “digital core”:

The top answer was “Quality Assurance”, but is that a testing skillset? It seems awfully broad as an answer to the question, in any case. The report’s tendency towards confirmation bias rears its ugly head again, with the authors making their own judgements that are unsupported by the data (emphasis is mine):

When we looked at the data, quality assurance skills were rated as the most important skillset over domain or platform skills required for testing digital core solutions. This datapoint feels like bit of an outlier when compared to two other datapoints – 35% of organizations still utilize business users in validating digital core solutions and 33% of organizations pointed to gaps in domain expertise as a challenge to overcome when testing digital core solutions. The truth is while solid testing and quality assurance skills are sought after due to the nature of digital core solutions, domain expertise remains invaluable.

Intelligent product testing

This section (again not obviously close to the content of a section from last year’s report) looks to answer this bizarre question:

What really goes into creating the perfect intelligent product testing system ecosystem?

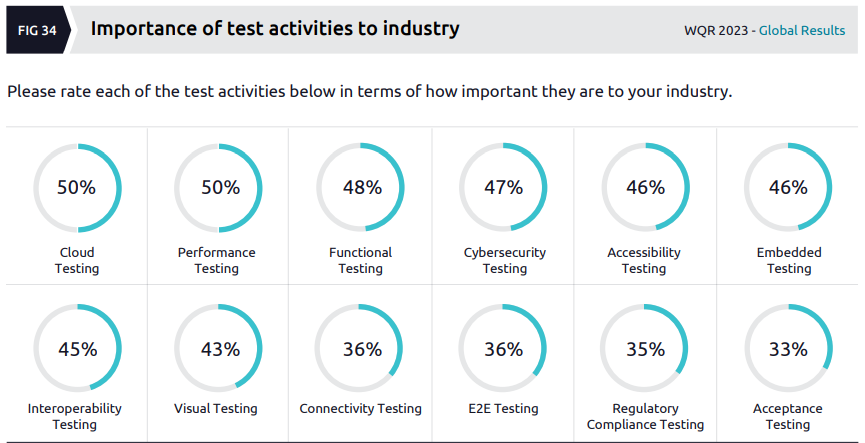

The first data in this section refers to different test activities and how important they are considered to be:

The presentation of this data doesn’t make sense to me, since the respondents were asked to rate the importance of various activities while the results are expressed as percentages. Most of the test activities get very similar ratings anyway, with the authors noting that 36% seems low for “E2E Testing”:

…we were surprised to learn that only 36% thought end-to-end (E2E) testing was necessary. It has been established that the value of connected products is distributed throughout the entire chain, and its resilience depends on the weakest link in the chain, and yet, the overall value of E2E testing has not been fully recognized. One reason we suspect could be that it requires significant investment in test benches, hardware, and cloud infrastructure for E2E testing.

Note how the analysis of the data changes again here – they refer to the necessity of a test activity (E2E testing in this case), not a rating of its importance. I had to chuckle at their conclusion here, which essentially says “E2E testing is too hard so we don’t do it”.

I see no compelling evidence in the report’s data to support the opening claim in the following:

One of the significant trends observed in the WQR 2023 survey is the expectation for improving the test definition. The increasing complexity of products, along with hyper-personalization to create a unique user experience, necessitates passing millions of test cases to achieve the perfect user experience. Realistically speaking, it’s not possible to determine all possible combinations.

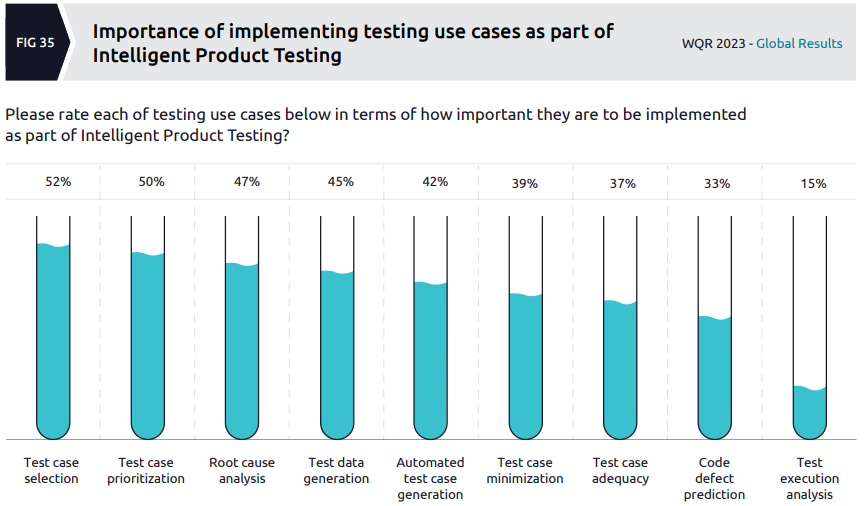

The next question has the same rating vs. percentage data issue as the one discussed above, but somewhat supports their claim I suppose:

I again don’t see the evidence to support the following statement (nor do I agree with it, based on my experience):

For product testers, the latest findings suggest that automation toolchain skills and programming languages are now considered mainstream and no longer considered core competencies.

Quality & sustainability

It’s good to see some focus on sustainability in the IT industry, especially when “reports show that data centers and cloud contribute more to greenhouse emissions than the aviation sector”.

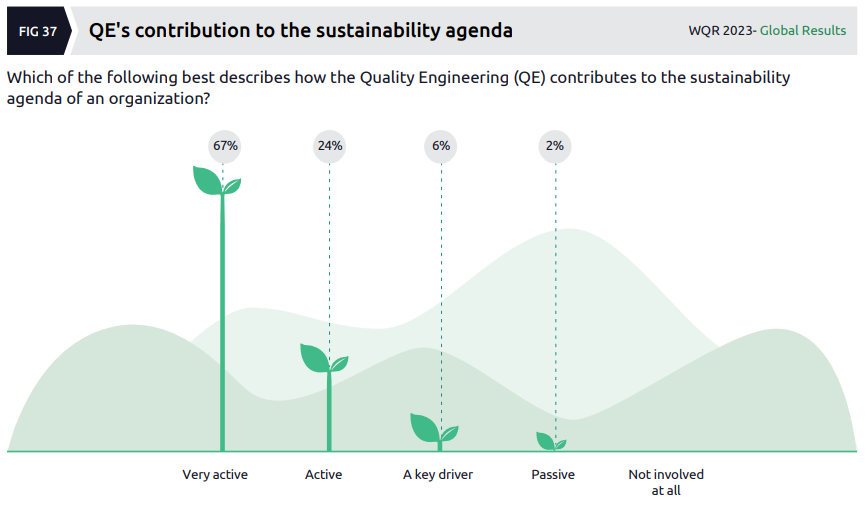

This section of the report looks specifically at the role that “quality engineering play in reducing the environmental impact of IT programs and systems”, which seems a little odd to me. I don’t really understand how anyone could answer the following question (and what it even means to do so):

Although the data in this next chart doesn’t excite me, I had to include it here as it takes the gong (amongst a lot of competition) for the worst way of presenting data in the report:

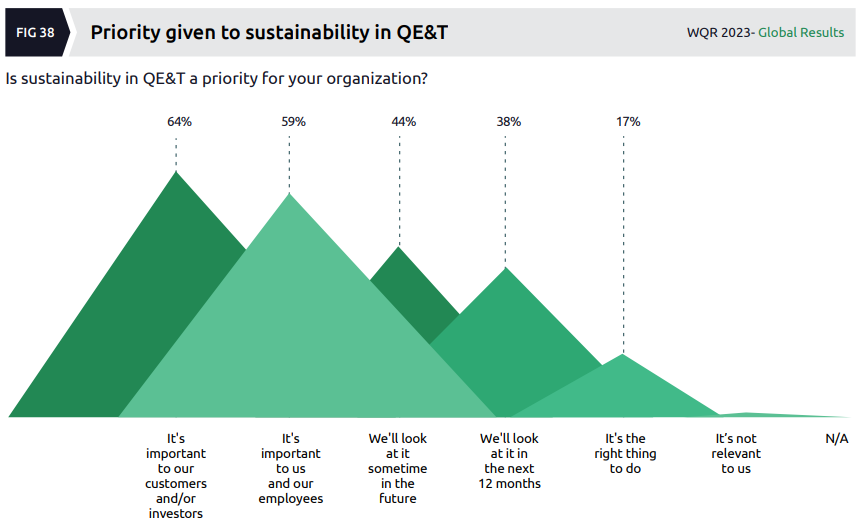

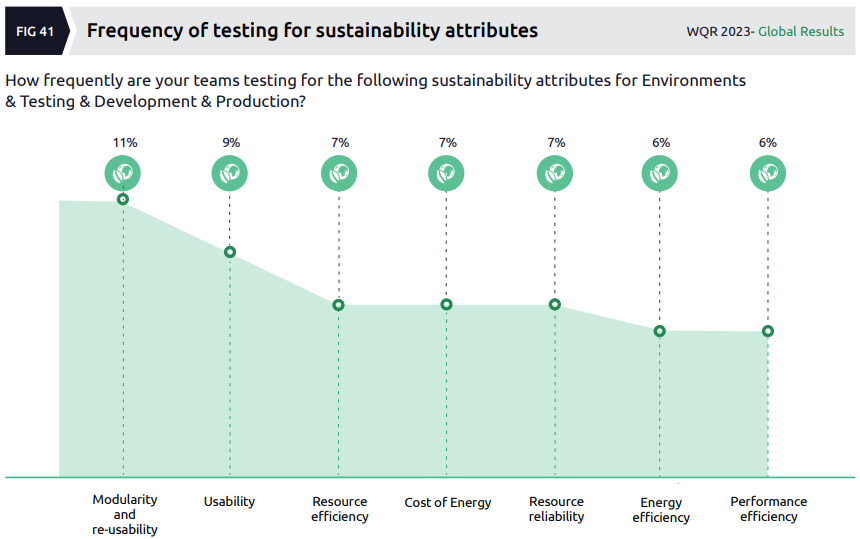

The final chart I’ll share from this section of the report again suffers from a data issue, in that the question it relates to (which itself seems poorly posed and too broad) is one of frequency while the data is shown as a percentage (of what?):

Perhaps I’m reading this wrong, but it seems that almost none of the organizations surveyed are really doing very much to test for sustainability (which is exactly what I’d expect).

Sector analysis (pages 60-85)

As usual, I didn’t find the sector analysis to be as interesting as the trends section. The authors identify the same eight sectors as the previous year’s report, viz.

- Automotive

- Consumer products, retail and distribution

- Energy, utilities, natural resources and chemicals

- Financial services

- Healthcare and life sciences

- Manufacturing

- Public sector

- Technology, media and telecoms

Last year’s report provided the same four metrics for each sector but this year a different selection of metrics was presented by way of summary for each sector. A selection of some of the more surprising metrics follows.

- In the Consumer sector, 50% of organizations say that only 1%-25% of their organization has adopted Quality Engineering.

- In the Consumer sector, 76% of organizations are using traditional testing centres of excellence to support agile projects.

- In the Financial Services sector, 75% of respondents said they are using or plan to use advanced automation solutions like No Code/Low Code or AI based automation frameworks.

- In the Financial Services sector, 82% of organizations support agile projects through a traditional Testing Center of Excellence.

- In Healthcare, 81% of organizations have confidence in the accuracy of data used to train AI platforms.

- In the Public sector, 60% of organizations said the top-most issue in agile adoption was lack of time to test.

Geography-specific reports

The main World Quality Report was supplemented by a number of short reports for specific locales. I only reviewed the Australia/New Zealand one and didn’t find much of interest there, with the focus of course being on AI, leading the authors to suggest:

We think that prompt engineering skills are the need of the hour from a technical perspective.
