‘Can I Use…’, but for ARIA!

Posted by Lola Odelola

Three years ago we announced our work on the ARIA-AT program to develop an interoperability testing system for assistive technologies, starting with screen readers. Last year we completed a redesign of the ARIA Authoring Practices Guide (APG), which web developers use for guidance on making accessible websites and apps. Along the way, we’ve been hard at work building a testing system and collecting results on how interoperably screen readers render web content. Today we’re announcing that the first round of ARIA-AT test results is available and embedded in the APG, so web developers can read about screen reader support for various ARIA features.

When building accessible web experiences, it’s tricky to know how different screen readers will interpret your web components. It’s hard to know whether they’ll be interpreted at all, or in the way you expect, without a lot of manual testing across devices, assistive technologies and operating systems. Not anymore!

Now developers can see which patterns are supported in which browser and screen reader combinations. This is a big step toward making the web more accessible, open and inclusive for screen reader users.

We’ve built the ARIA-AT App to help testers and vendors collaborate on testing whether assistive technologies (ATs) render web patterns in major browsers with consistent voicing language. We’re working specifically with screen readers today, but we’ve taken care to design the system to support the eventual inclusion of other ATs like magnifiers, braille displays, and more.

Before now, the test results for each pattern, browser and screen reader didn’t feed back into the APG. That left a gap for developers between understanding how to create accessible web components and knowing which patterns were supported by which systems. Now that’s changed!

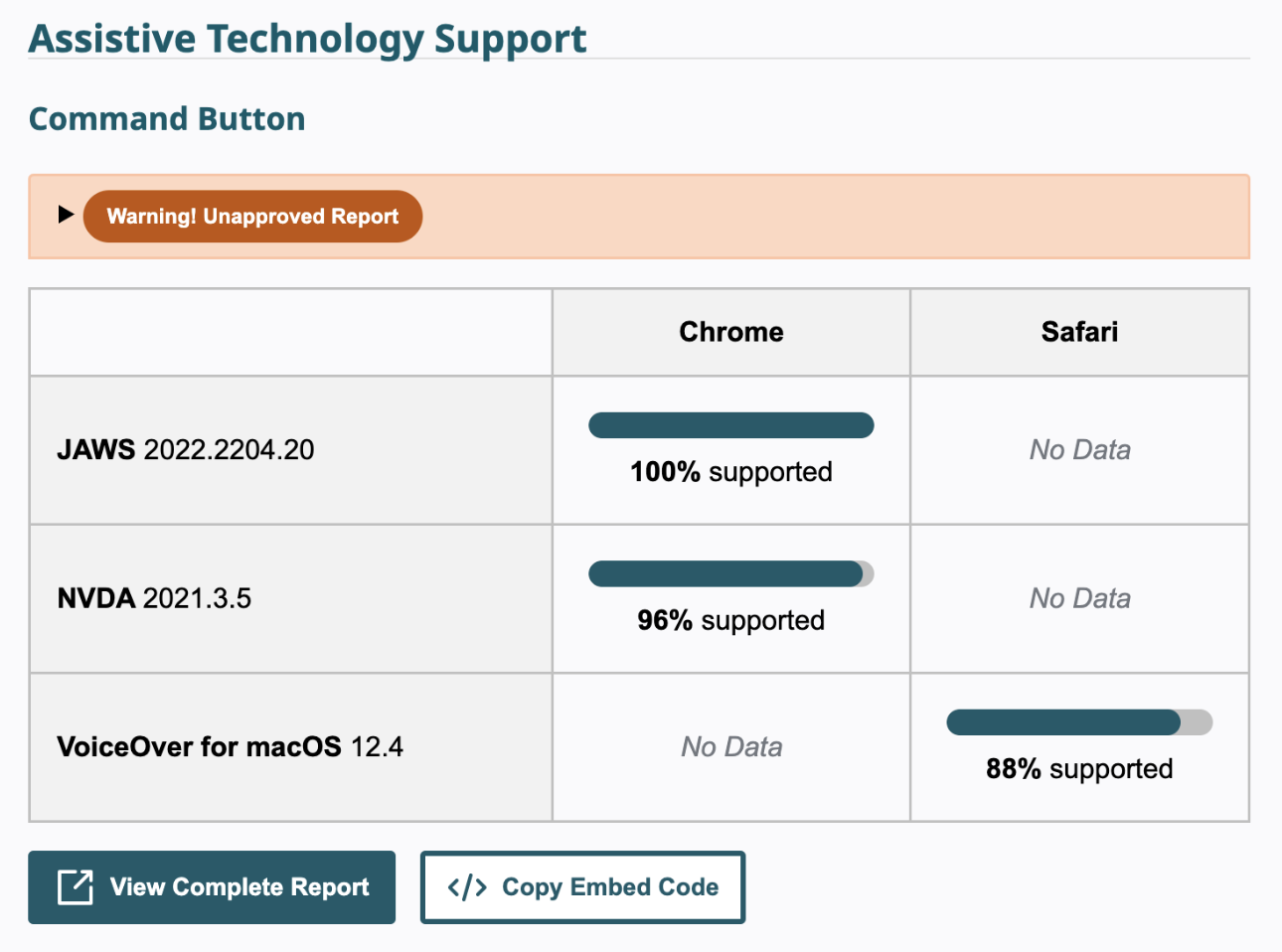

With the launch of APG Support Tables, web developers can now see which browser and screen reader combinations support a pattern, and to what extent. In the example above, the Button pattern is supported in Chrome at 100% by JAWS version 2022.2204.20 and at 96% by NVDA version 2021.3.5, and in Safari at 88% by VoiceOver for macOS version 12.4.

Each combination of browser and assistive technology version has a test report for each pattern in the ARIA-AT App, and each report contains a series of tests. These tests check whether a given command or keyboard input produces the correct screen reader output. In the example above, NVDA and VoiceOver have failing tests in their reports; that is, the output for some of their tests is incorrect or incomplete. Each pattern page also links to the complete test report for that pattern, making it easier to see which tests are failing.
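To make the relationship between reports, tests and the percentages in the support table more concrete, here is a minimal TypeScript sketch. The `TestReport` and `TestResult` shapes and the `supportPercentage` and `failingTests` helpers are hypothetical, not the ARIA-AT App’s actual data model or API. The scoring rule (passing tests divided by total tests, rounded to a whole percent) is also an assumption; the real app may weigh individual assertions rather than whole tests.

```typescript
// Hypothetical data model: one report per pattern for each
// browser + assistive technology (AT) version combination.
interface TestResult {
  command: string; // the command or keyboard input issued, e.g. "Tab"
  passed: boolean; // true if the AT's output was correct and complete
}

interface TestReport {
  pattern: string; // APG pattern, e.g. "Button"
  browser: string;
  assistiveTechnology: string;
  atVersion: string;
  tests: TestResult[];
}

// Assumed scoring rule: share of passing tests, rounded to a whole percent.
// An empty report scores 0 rather than dividing by zero.
function supportPercentage(report: TestReport): number {
  if (report.tests.length === 0) {
    return 0;
  }
  const passed = report.tests.filter((t) => t.passed).length;
  return Math.round((passed / report.tests.length) * 100);
}

// The tests a developer would want to inspect in the full report:
// those whose output was incorrect or incomplete.
function failingTests(report: TestReport): TestResult[] {
  return report.tests.filter((t) => !t.passed);
}
```

Under this assumed rule, a hypothetical report with four tests, three of which pass, would score 75%. Keeping `failingTests` separate from the score mirrors how the linked full report lets developers drill into exactly which commands produced incorrect or incomplete output.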

The notice “Warning! Unapproved Report” indicates the status of the report the results belong to. A report remains unapproved until the vendors of all tested assistive technologies sign off on the results, or until 6 months have passed. This gives assistive technology vendors a 6-month window to raise concerns about the results or test assertions.
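The approval rule can be expressed as a small predicate. This is a sketch under assumptions: the `ReportReview` shape, the `addMonths`, `isApproved` and `reportNotice` helpers, and the idea of storing a publication date plus a list of sign-offs are hypothetical illustrations, not how the ARIA-AT App actually tracks approval.

```typescript
// Length of the review window described in the post.
const REVIEW_WINDOW_MONTHS = 6;

// Hypothetical review state attached to a report.
interface ReportReview {
  publishedAt: Date;     // when the results were first published
  testedATs: string[];   // assistive technologies covered by the report
  signedOffBy: string[]; // ATs whose vendors have approved the results
}

// Returns a new Date `months` calendar months after `date` (UTC).
// Like setUTCMonth itself, dates near a month's end can roll over
// into the following month (e.g. Aug 31 + 6 months lands in March).
function addMonths(date: Date, months: number): Date {
  const result = new Date(date.getTime());
  result.setUTCMonth(result.getUTCMonth() + months);
  return result;
}

// Approved once every tested AT has signed off, or once the window has elapsed.
function isApproved(review: ReportReview, now: Date): boolean {
  const allSignedOff = review.testedATs.every(
    (at) => review.signedOffBy.indexOf(at) !== -1
  );
  const windowEnd = addMonths(review.publishedAt, REVIEW_WINDOW_MONTHS);
  const windowElapsed = now.getTime() >= windowEnd.getTime();
  return allSignedOff || windowElapsed;
}

// The notice shown alongside results that are still under review.
function reportNotice(review: ReportReview, now: Date): string | null {
  return isApproved(review, now) ? null : "Warning! Unapproved Report";
}
```

With this rule, a hypothetical report published on 15 January 2022 with only one of its two vendors signed off would still show the warning on 1 March 2022, and would be treated as approved from 15 July 2022 onward even without further sign-offs.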

We’ve based this work on the playbook we used to build wpt.fyi on top of Web Platform Tests (WPT), and the MDN browser support tables on top of the Browser Compat Data Collector. Those projects test browser interoperability and present that data to web developers. They have dozens of collaborators across the major browser companies who contribute to writing and running the 1.6 million web standards tests across browser engine and operating system combinations. It’s taken 12 years, and a lot of hard work from more than 1,500 contributors, to get to this point with Web Platform Tests and wpt.fyi.

ARIA-AT is just a few years in, with a growing contributor base from major browser companies and assistive technology vendors. We’ve put a lot of thought into building the infrastructure to write and run these tests through a peer review process. The early results now available in the APG were collected manually by a group of testers who also take part in the peer review process. We are also building screen reader automation so the data can be re-collected automatically every time a screen reader version changes or a new test is written.

Critical infrastructure like WPT and ARIA-AT takes a lot of work from coalitions of teams across multiple companies. This is the invisible work the web platform needs in order to remain stable and accessible.

With this data, web developers are better equipped to build accessible user experiences. They can check how well the APG patterns are supported in the listed browsers and assistive technologies without having to run the tests themselves. This is a huge step for web accessibility.

This integration will also help developers plan ahead, even for patterns whose results aren’t ready yet, since we intend to cover all patterns in the APG. Our continued work should also eventually bring more consistency across assistive technologies. In one possible future, testing in one assistive technology may be enough to give confidence that others will behave the same way.

You can head over to the Prime Access Consulting blog for a deep dive into how the ARIA-AT tests are written. Thank you to Meta for funding our work on this effort, Prime Access Consulting for consensus building and test writing, the W3C for project hosting, and NV Access (NVDA), Vispero, Apple, Google, and Microsoft for engaging in the project and working toward assistive technology interoperability on the web.

Special thanks to Alexander Flenniken, Carmen Cañas, Louis Do and Mike Pennisi for proofreading and corrections.
