How We Got Here

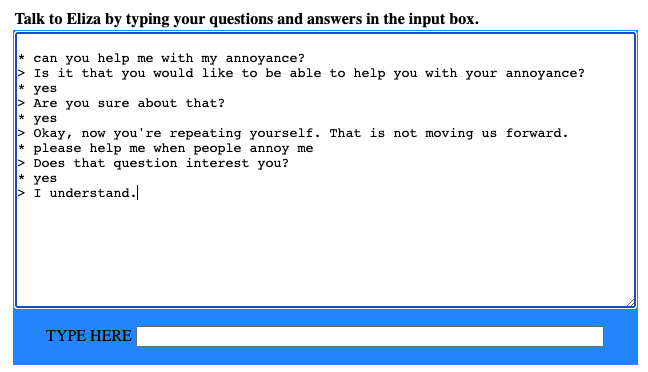

Seventy years ago, Alan Turing proposed that real Artificial Intelligence (AI) would be able to interact with a person through a keyboard and screen just like a human. That is, a machine would pass the Turing test if the user could not tell who was on the other end of the network: human or AI. ELIZA was an early attempt to pass the Turing test. ELIZA mimicked what a psychotherapist might do, asking “tell me more?” or “how does that make you feel?”, or matching a pattern. ELIZA was also easy enough to trick, and it lacked a sense of context. It was … a start. There are plenty of ELIZA-like programs available online; there’s a trivial example from today at right.

ELIZA was coded in the 1960s. The whole program fit on a few sheets of paper. Versions of it were ported to BASIC, and thousands of young people, myself included, typed it in to run on our early microcomputers.

There have been plenty of advances since. Natural Language Processing made it possible for computers to understand and respond in ways that sound like English. Google gave us the ability to search and rank ideas to see which are “more correct-er”, by seeing which sites are the most referenced by others. 20Q took the game of “twenty questions” and implemented it as a neural network, so that playing the game trains the game. Paul Graham proposed a Bayesian filter to recognize spam email back in 2002. Those filters were eventually implemented; something very much like them runs in my Gmail today. Wolfram Alpha isn’t a Turing test; instead, it promised to provide a sort of structure to the great, unwashed mass of data on the internet. Wolfram can find common answers to common questions, such as how to convert from British pounds to dollars.

So what would happen if we put them all together, to create a model that seems to talk in a conversational way?

Enter ChatGPT.

The tool has a text-chat interface, just like ELIZA; you can sign up for free and putter around with it. There is also an API, so if you wanted to ask a lot of questions at scale and save the results in a text file, you could. How can we learn more, or get past “play with it”?
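If you do go the API route, the batching half of the job is ordinary code. Here is a minimal Python sketch, assuming you supply an `ask` function that sends one question to the model and returns the answer as text. `ask`, `run_batch`, and `save_batch` are hypothetical names, and the sketch deliberately does not call any real OpenAI client.

```python
from pathlib import Path
from typing import Callable, Iterable


def run_batch(questions: Iterable[str], ask: Callable[[str], str]) -> str:
    """Ask each question in turn and return a plain-text transcript.

    `ask` is a hypothetical stand-in for whatever API client you use.
    """
    entries = []
    for question in questions:
        answer = ask(question)
        entries.append(f"Q: {question}\nA: {answer}\n")
    return "\n".join(entries)


def save_batch(questions: Iterable[str], ask: Callable[[str], str], out_path: str) -> None:
    """Run the batch and write the transcript to a UTF-8 text file."""
    Path(out_path).write_text(run_batch(questions, ask), encoding="utf-8")


def fake_ask(question: str) -> str:
    # Offline stand-in so the sketch runs without network access.
    return f"(answer to: {question})"


if __name__ == "__main__":
    print(run_batch(["What is a Turing test?", "Who wrote ELIZA?"], fake_ask))
```

Keeping `ask` as a parameter means the same loop works with a real client, a cached one, or a fake one for testing.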

ChatGPT in the Broader World

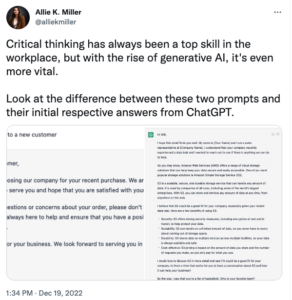

Allie Miller sent ChatGPT two different requests. In the first, she asked it to write an email to a new customer. In the second, she asked it to write a “sales email about AWS on S3 to a brand-new customer. That customer is a large supply-chain company with over 500 employees, that thinks it had a data leak. The customer is named Will and he likes basket ball.” The second script shows amazing detail; click through for the example. Daniel Ritter asked it to rephrase the US Declaration of Independence in the style of the Beastie Boys.


The combination of state (it remembers what you asked last time), natural language (it can make the results read correctly and infer the question, instead of “just” searching), and access to data via something like an internet database is a lot more promising than Google. What does this mean for software delivery? The folks at Sauce Labs have a blog post where they ask it to generate sample test code in Cucumber for Google. In a LinkedIn post, Jason Arbon suggested that ChatGPT could be useful for self-education (ask it a question, learn about a topic), test data generation, and even “getting help fixing bugs in automation code… you can just give GPT the code and ask it to fix the problem you have, and it often works ;).”

Personally, I am extremely skeptical about claims of magical AI that fixes bugs. Still, instead of dismissing them offhand, I want to figure them out. So I asked for a Cucumber example of how to test Google myself, and got this:

Feature: Search for a term on google.com

  Scenario: Successfully search for a term
    Given I am on the homepage of google.com
    When I enter "selenium" in the search bar and press enter
    Then the search results page should display a list of results for the term "selenium"
    And the page title should contain the term "selenium"

I tried the same request with different websites: Amazon.com, Buy.com, and Affirm.com. I then went to a list of the top 100 most visited sites and used NYPost.com, the 99th-ranked one. Finally, I tried xndev.com, the website of my company, Excelon Development. ChatGPT seemed to replace keywords in a way that made sense: the Amazon and Buy tests were about searching for a product, and the NYPost test was about reading a headline. The Affirm test was about getting a loan. On the xndev.com website, however, the software struck out, suggesting I “create an account.”

Likely there is a meta-model of what the websites do somewhere, and the tool is turning those words into Cucumber tests. Even if the model has a description of Excelon (and it might not), Excelon really doesn’t fit the reader-as-consumer pattern that the other sites share. Now that I’ve mentioned it, it will be interesting to see if the test gets smarter in the future.

By now, though, I had an operating model: the software is trained on data. It knows how to make logical substitutions, and it knows how people talk. Given the right data, it can make logical substitutions that sound right.
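To make that operating model concrete, here is a toy Python sketch of “logical substitution”. A hand-written table says what each site is for, those words are poured into a single Cucumber template, and there is a generic fallback for sites the table does not know. The table entries and the names `SITE_PURPOSE`, `GENERIC`, and `make_feature` are hypothetical, made up for illustration. This is not how ChatGPT works internally; it only models the behavior observed above.

```python
# Hypothetical "what is this site for?" table, made up for illustration.
SITE_PURPOSE = {
    "google.com": (
        "Search for a term",
        'I enter "selenium" in the search bar and press enter',
        'the search results page should display results for "selenium"',
    ),
    "amazon.com": (
        "Search for a product",
        'I enter "headphones" in the search bar and press enter',
        'the results page should list products matching "headphones"',
    ),
    "nypost.com": (
        "Read a headline",
        "I click the top headline",
        "the article page should display that headline",
    ),
}

# Hypothetical fallback for any site the table knows nothing about.
GENERIC = (
    "Create an account",
    "I fill in the sign-up form and submit it",
    "I should see a welcome message",
)

TEMPLATE = """Feature: {goal} on {site}

  Scenario: Successfully {goal_lower}
    Given I am on the homepage of {site}
    When {action}
    Then {outcome}
"""


def make_feature(site: str) -> str:
    """Fill the one template with whatever the table says the site is for."""
    goal, action, outcome = SITE_PURPOSE.get(site, GENERIC)
    return TEMPLATE.format(
        site=site, goal=goal, goal_lower=goal.lower(), action=action, outcome=outcome
    )


print(make_feature("xndev.com"))
```

Any site missing from the table falls through to the sign-up scenario. That is roughly the shape of the xndev.com result.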

Jason pointed me to this YouTube video, where the author asks for a sample of C# code to do data-driven tests in WebDriver. It looks impressive. I asked the same question for Python, and it was able to find similar code. I asked if there was a Pascal version, and the software told me there is no Pascal WebDriver, but if I could link a library in, here was some sample code. My guess at this point is that the software is capable of transforming code from one programming language to another. Thus, if it can solve the problem in one language, it can solve it in others.

Two claims intrigued me: that it could generate test data, and that it could find problems in code.

The examples below use a little code. Readers who know a string from an integer, if statements, and loops should be fine.

ChatGPT Fixing Errors

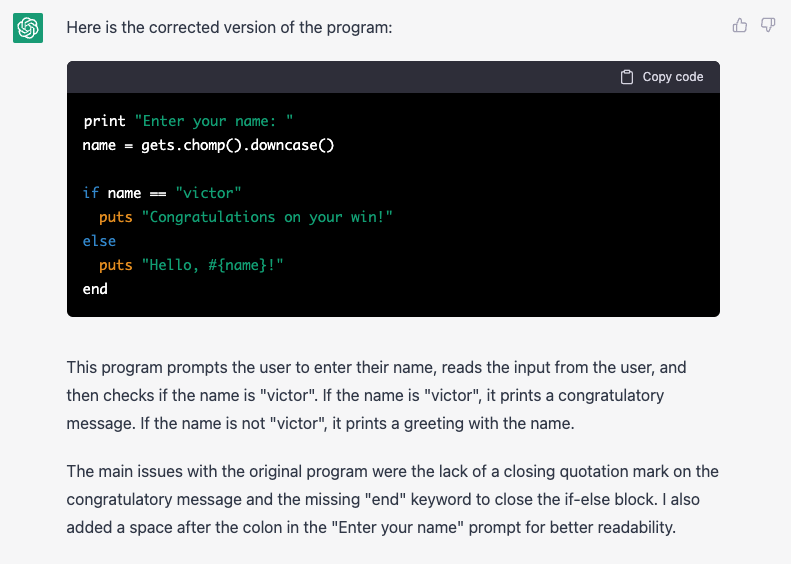

First, I created a trivial error: I “forgot” a closing quotation mark in a trivial Ruby program. The sample program is below, and on GitHub.

print "Enter your name "
name = gets.chomp().downcase()
if (name == "victor")
  puts "Congratulations on your win!
else
  puts "hello, " + name + "\n";
end

The first time I gave ChatGPT this problem, it removed the “if” statement entirely, showing only the bottom part. After that, I provided the compile error to ChatGPT. An hour later, when I re-ran the tool, it produced this output.

In an hour, the tool actually figured out how to fix a quotation mark error.

This appears to be ChatGPT approximating what most English speakers would call learning.

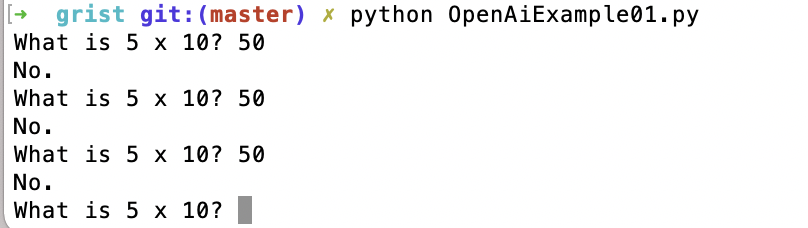

The documentation for ChatGPT also has an example of the tool fixing a bug. The sample code picks two random numbers from one to twelve, then asks the user for their product. In the sample program, the programmer forgets to convert a number to text for output, which causes a crash. ChatGPT's fix does not crash, but it is still wrong. The program generates two numbers and asks you to multiply them, but the comparison treats your typed answer as a string (text), so it never matches the numeric product and always comes back negative. Here’s some sample output:

I put the initial code ChatGPT generated into GitHub as OpenAiExample01.py. My fix, which converts the strings to numbers, is in OpenAIExample02.py.
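The bug is a classic, so here is a minimal Python sketch of it. This is not the code in OpenAiExample01.py or OpenAIExample02.py, and the function names are hypothetical. It relies on Python's `input()` returning text while the product `a * b` is an integer.

```python
import random


def is_correct_buggy(a: int, b: int, answer: str) -> bool:
    # `answer` is a str and a * b is an int; in Python a str never equals
    # an int, so this always returns False, even for the right answer.
    return answer == a * b


def is_correct_fixed(a: int, b: int, answer: str) -> bool:
    # Convert the typed text to a number before comparing.
    try:
        return int(answer.strip()) == a * b
    except ValueError:
        return False  # non-numeric input counts as a wrong answer


def play_round() -> None:
    """Hypothetical interactive round: two numbers from 1 to 12, one question."""
    a, b = random.randint(1, 12), random.randint(1, 12)
    answer = input(f"What is {a} x {b}? ")
    print("Well done!" if is_correct_fixed(a, b, answer) else "No.")
```

`is_correct_buggy(3, 4, "12")` returns `False`, while `is_correct_fixed(3, 4, "12")` returns `True`. Call `play_round()` to try it at a prompt.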

More on this in a moment. For now, let’s talk about test data generation.

ChatGPT for Test Data

Jason said ChatGPT would be good for test data, so I gave it a simple, classic request, one I could have used at multiple companies over the years: generate mail addresses, specifically five valid and five invalid ones. Here’s what I got.

This is, of course, no good. But it is better than two hours ago, when I asked the same question, and the addresses were exactly alike.
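For comparison, here is a minimal Python sketch of one way to produce five valid and five invalid addresses, assuming US-style postal addresses. The street and town are made up. `looks_valid` is a deliberately simple format check, not real address validation, and all names in the sketch are hypothetical. Two choices matter here. First, the valid addresses are all different from one another. Second, each invalid address breaks exactly one rule, so a failure points at a single cause.

```python
import re
from typing import List, NamedTuple, Tuple


class Address(NamedTuple):
    street: str
    city: str
    state: str
    zip_code: str


ZIP_RE = re.compile(r"\d{5}(-\d{4})?")  # 12345 or 12345-6789


def looks_valid(a: Address) -> bool:
    """Hypothetical, deliberately simple format check; not real postal validation."""
    return (
        re.match(r"\d+ \S", a.street) is not None  # house number, then a street
        and a.city.strip() != ""
        and len(a.state) == 2 and a.state.isalpha() and a.state.isupper()
        and ZIP_RE.fullmatch(a.zip_code) is not None
    )


def make_test_addresses(n_valid: int = 5) -> Tuple[List[Address], List[Address]]:
    # Distinct, format-valid addresses on a made-up street in a made-up town.
    valid = [Address(f"{100 + i} Test St", "Testville", "OH", f"4{i:04d}")
             for i in range(n_valid)]
    base = valid[0]
    # Each invalid address breaks exactly one rule of looks_valid.
    invalid = [
        base._replace(street="Test St"),  # no house number
        base._replace(city=""),           # missing city
        base._replace(state="Ohio"),      # not a two-letter state code
        base._replace(zip_code="4000"),   # ZIP code too short
        base._replace(zip_code="4O000"),  # letter O instead of zero
    ]
    return valid, invalid
```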


The tool has a vote up/vote down button for answers. So I could explain the problem, vote the answer down, and, perhaps, see a different answer tomorrow morning.

What’s Really Going On

ChatGPT doesn’t seem to be aware in the sense that humans are. Instead, it seems to have access to the internet, a reasonable mastery of conversational English, and the ability to translate between programming languages. Likely it does something similar with English, since English, like code, has a grammar.

The 20Q question-and-answer game has been online for twenty years. Over that time, it has become so good that you are unlikely to tell whether you are playing with a human. That is because of the rules of the game and the way data goes in: as long as people play fairly, the tool simply remembers what others have entered and uses it as training data. ChatGPT won’t be able to understand the programmer’s intent, and it surely won’t translate requirements into code. For now, it might be trained to find common errors, such as string-to-integer comparisons and conversions gone awry, a missing “end” or curly brace at the end of a structure, or missing quotation marks. Given the error message, it’s likely a human could write a program to do this. Linters already do about half of the job; Dave Gombert once told me he did the other half in his compiler construction class. There may be some utility in this program for finding broad categories of errors and for unit tests.

For that matter, the Cucumber examples above are trivial. They look like the result of screen-scraping an example from a BDD website, using the context of other websites to do a search-and-replace, and then converting between languages. That is impressive, yet it has little practical utility.
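To show how mechanical the quotation-mark case is, here is a naive Python sketch that flags lines with an odd number of unescaped double quotes. `unbalanced_quote_lines` is a hypothetical helper, not a real linter. It ignores single-quoted strings, comments, heredocs, and strings that legitimately span lines. For real Ruby code, `ruby -c` checks syntax without running the program.

```python
from typing import List


def unbalanced_quote_lines(source: str) -> List[int]:
    """Hypothetical helper: 1-based numbers of lines with an odd count of unescaped double quotes."""
    flagged = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        count = 0
        escaped = False
        for ch in line:
            if escaped:
                escaped = False   # this character was escaped by a backslash
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                count += 1
        if count % 2 == 1:
            flagged.append(lineno)
    return flagged


# The Ruby program from earlier, with its missing closing quote.
RUBY_SAMPLE = r'''print "Enter your name "
name = gets.chomp().downcase()
if (name == "victor")
  puts "Congratulations on your win!
else
  puts "hello, " + name + "\n";
end
'''

print(unbalanced_quote_lines(RUBY_SAMPLE))  # → [4]
```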

That’s my quick, brief analysis of ChatGPT. I could be entirely wrong. At the very least, I’ll come back tomorrow and keep pushing. For that matter, you might take the tool in directions I have never thought of. For today, I thought it was worth taking the time to show my work in public.

What do you think?

[…] ChatGPT for testers Written by: Matt Heusser […]

Some of the issues you have found (the addresses example, for instance) have to do with what you are asking. If, instead of asking for 5 valid addresses, you ask “can you give me the address of 5 landmark places in new york?”, you will get this:

Certainly! Here are five landmark places in New York City:

Empire State Building: 350 Fifth Ave, New York, NY 10118

Central Park: Central Park, New York, NY 10022

Statue of Liberty: Liberty Island, New York, NY 10004

Metropolitan Museum of Art: 1000 5th Ave, New York, NY 10028

Times Square: Broadway, New York, NY 10036

I hope this information is helpful! Let me know if you have any other questions.

The model has problems with questions that are too broad, but when you ask for something specific you get a good answer. More examples:

“and the address of the 10 places most visited by tourists in the USA in the year 2020?”

It is difficult to determine the specific addresses of the 10 places most visited by tourists in the United States in 2020, as this information may vary depending on the source and the criteria used to determine the rankings. However, here is a list of some popular tourist destinations in the United States, along with their addresses:

Times Square: Broadway, New York, NY 10036

Central Park: Central Park, New York, NY 10022

The Las Vegas Strip: Las Vegas, NV 89109

Disney World: Lake Buena Vista, FL 32830

Niagara Falls: Niagara Falls, NY 14303

The Grand Canyon: Grand Canyon National Park, AZ 86023

The Golden Gate Bridge: San Francisco, CA 94129

The Statue of Liberty: Liberty Island, New York, NY 10004

The National Mall: Washington, D.C. 20565

The Alamo: 300 Alamo Plz, San Antonio, TX 78205

I hope this list is helpful! Let me know if you have any other questions.

“can you give me one address of the city hall in each of the five boroughs of new york?”

Certainly! Here are the addresses of the City Halls for each of the five boroughs of New York City:

Manhattan: 1 Centre St, New York, NY 10007

Brooklyn: 209 Joralemon St, Brooklyn, NY 11201

Queens: 120-55 Queens Blvd, Kew Gardens, NY 11424

The Bronx: 851 Grand Concourse, Bronx, NY 10451

Staten Island: 10 Richmond Terrace, Staten Island, NY 10301

I hope this information is helpful! Let me know if you have any other questions.

ChatGPT can also provide pieces of code. For instance, try asking for code to generate random names, like this:

can you write a selenium code using any extension that can generate random names?

Sure! Here is an example of a Selenium script that uses the “faker” Python library to generate random names:

from selenium import webdriver
from faker import Faker

# Create a webdriver instance
driver = webdriver.Chrome()
# Create a Faker instance
fake = Faker()
# Generate a random name
name = fake.name()
# Print the generated name
print(name)
# Close the webdriver
driver.close()

This script will open a Chrome browser, generate a random name using the “faker” library, and then close the browser.

You can also use the “faker” library to generate other types of fake data, such as addresses, phone numbers, and email addresses. For more information about the “faker” library and the types of data it can generate, you can refer to the documentation at https://faker.readthedocs.io/


and without a library?

Sure! Here is an example of a Selenium script that generates a random name without using any external libraries:

import random
from selenium import webdriver

# Create a webdriver instance
driver = webdriver.Chrome()
# List of first names
first_names = ['Alice', 'Bob', 'Charlie', 'Dave', 'Eve', 'Frank']
# List of last names
last_names = ['Smith', 'Johnson', 'Williams', 'Jones', 'Brown', 'Davis']
# Generate a random index for the first and last names
first_name_index = random.randint(0, len(first_names) - 1)
last_name_index = random.randint(0, len(last_names) - 1)
# Generate a random name using the indices
name = f"{first_names[first_name_index]} {last_names[last_name_index]}"
# Print the generated name
print(name)
# Close the webdriver
driver.close()

This script will open a Chrome browser, generate a random name by selecting a random first name and a random last name from the specified lists, and then close the browser.

You can add more names to the lists or use different lists of names to generate different types of names. You can also use other techniques to generate random names, such as generating random combinations of letters and numbers or using a database of real names.
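Both scripts above open a browser and close it again without ever using it; the names come from the lists alone. Here is a minimal sketch of the same idea using only the Python standard library. It uses `random.choice` instead of index arithmetic and takes an optional seed, so a failing test run can be reproduced. `random_names` is a hypothetical helper name.

```python
import random
from typing import List, Optional

FIRST_NAMES = ["Alice", "Bob", "Charlie", "Dave", "Eve", "Frank"]
LAST_NAMES = ["Smith", "Johnson", "Williams", "Jones", "Brown", "Davis"]


def random_names(count: int, seed: Optional[int] = None) -> List[str]:
    """Hypothetical helper: `count` "First Last" names; the same seed gives the same names."""
    rng = random.Random(seed)  # private generator, so seeding does not affect other code
    return [f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}" for _ in range(count)]


print(random_names(3, seed=42))
```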


can you give me a list of 20 american first names and 20 last names

Sure! Here is a list of 20 American first names:

Emily

Madison

Olivia

Abigail

Isabella

Sophia

Elizabeth

Chloe

Victoria

Avery

Olivia

Sophia

Emma

Abigail

Emily

Charlotte

Madison

Elizabeth

Avery

Chloe

And here is a list of 20 American last names:

Smith

Johnson

Williams

Jones

Brown

Davis

Miller

Garcia

Rodriguez

Martinez

Hernandez

Lopez

Gonzalez

Wilson

Anderson

Thomas

Jackson

White

Harris

Martin

Note that these lists are just a small sample of the many names that are commonly used in the United States, and they are not exhaustive or definitive. There are many other first and last names that are also commonly used in the United States.
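A quick check would have caught the repeats in that first list before it went into a test: it claims 20 names but contains only 12 distinct ones. Here is a minimal Python sketch using the standard library's `collections.Counter`. `duplicates` is a hypothetical helper name, and the list is copied from the answer above.

```python
from collections import Counter
from typing import Dict, List


def duplicates(items: List[str]) -> Dict[str, int]:
    """Hypothetical helper: map every item that appears more than once to its count."""
    return {item: n for item, n in Counter(items).items() if n > 1}


# The 20 "American first names" from the answer above, in order.
FIRST_NAMES_FROM_CHAT = [
    "Emily", "Madison", "Olivia", "Abigail", "Isabella",
    "Sophia", "Elizabeth", "Chloe", "Victoria", "Avery",
    "Olivia", "Sophia", "Emma", "Abigail", "Emily",
    "Charlotte", "Madison", "Elizabeth", "Avery", "Chloe",
]

print(len(FIRST_NAMES_FROM_CHAT), "names,", len(set(FIRST_NAMES_FROM_CHAT)), "distinct")
print(duplicates(FIRST_NAMES_FROM_CHAT))
```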


20 unique first names including both boy and girl ones?

Sure! Here is a list of 20 unique first names that include both boy and girl names:

Liam

Noah

Ethan

Oliver

William

James

Benjamin

Elijah

Lucas

Mason

Olivia

Sophia

Emma

Abigail

Emily

Charlotte

Avery

Chloe

Victoria

Madison

These names are all among the most popular names in the United States, according to data from the Social Security Administration. Note that these lists are just a small sample of the many names that are commonly used in the United States, and they are not exhaustive or definitive. There are many other first names that are also commonly used in the United States.

Its database is giant and it is good at looking up information; you just have to point it in the right direction.

As for the syntax error in your code, you would expect the language parser to find that, right? Too bad it does not always do so.

I have been toying with the idea of generating code automatically for a lot of things for some years now. I even started a tool that could write the code for a Visual Basic interface. You set a few properties of the control you wanted to add, and the tool filled in details that are often overlooked, such as the max length or the tab index. The software company I was working for at the time had a monolithic CRM solution written in that language.

Did you catch how the Selenium script to generate names technically “used” Selenium but really just printed to the console? That’s kind of what I expect from ChatGPT at this point. It would be interesting to see if it could be trained to actually use JavaScript to inject HTML into the DOM. But to do that, you’d need to know JavaScript, Python, and HTTP well enough to do it yourself; still, it might save you some time. I wrote up my thoughts on that at https://xndev.mystagingwebsite.com/2023/01/the-place-for-chatgpt/ and would love to hear your perspective. Thank you for the comment!

The tool is impressive and useless. I asked it to generate test cases for the requirement “When user enters 0 display error message otherwise display entered value” and got the following response:

Test case: Enter 0

Input: 0

Expected Output: Error message

Test case: Enter negative number

Input: -5

Expected Output: -5

Test case: Enter positive number

Input: 7

Expected Output: 7

Test case: Enter decimal number

Input: 3.14

Expected Output: 3.14

Test case: Enter non-numeric value

Input: “hello”

Expected Output: Error message

It clearly understands the requirement, but at the same time it assumes the user can enter only numeric values. If it fails at such a trivial task, how can you trust it with more complex ones? Nonetheless, when I pointed out its mistake, it corrected the test cases. When asked about missing null/empty value cases, it extended the list, but again assumed an error should be returned. Useless but still impressive.
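The requirement is ambiguous, and writing it down as a table of inputs and expected outputs makes the assumptions visible. Here is a minimal Python sketch of one literal reading, in which only the exact input `0` is an error and everything else is echoed back. `display`, `ERROR`, `CASES`, and `failing_cases` are hypothetical names. Whether `0.0` or an empty entry should count as zero is a question for whoever owns the requirement.

```python
from typing import List, Tuple

ERROR = "Error: 0 is not allowed"  # hypothetical message text


def display(entered: str) -> str:
    # Hypothetical literal reading: only the exact text "0" is an error;
    # anything else, including words and empty input, is shown as entered.
    return ERROR if entered == "0" else entered


# Data-driven cases: (input, expected output) under that literal reading.
CASES: List[Tuple[str, str]] = [
    ("0", ERROR),
    ("-5", "-5"),
    ("7", "7"),
    ("3.14", "3.14"),
    ("hello", "hello"),  # ChatGPT's list expected an error here
    ("", ""),            # empty entry: echoed under this reading
    ("0.0", "0.0"),      # numerically zero; does it count? Ask.
]


def failing_cases() -> List[Tuple[str, str, str]]:
    """Return (input, expected, actual) for every case that does not match."""
    return [(i, e, display(i)) for i, e in CASES if display(i) != e]


print(failing_cases())  # → []
```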

Thank you for the comment! If you think about “useless but impressive”, another common term for it might be “bullshit.” And I just did another post on that … https://xndev.mystagingwebsite.com/2023/01/the-place-for-chatgpt/