Who is this article for?

This article is for developers who want to learn what they need to become efficient and effective testers, whether they have no QA team behind them or simply want to take control of their code quality.

Why I wrote this article

Testing is a key part of a developer's workflow, whether in a team or working solo, yet comprehensive testing guides have not kept up with Django REST Framework's growth, and I have not come across a resource that covers everything I think a testing standard should do for an app. That is, to:

- Have tests fast enough both for an efficient code-and-test flow for developers and for CI/CD pipelines.

- Have tests comprehensive enough to tell apart what breaks in the code under test from what breaks in the external or internal code it depends on.

- Provide a clear set of tools to pinpoint individual tests or groups of tests, resulting in a fast workflow for the developer.

In this article I propose a testing approach, a structure for tests and a set of tools for testing while developing, and I also show how to test each common part of a Django app. This is a work in progress and I welcome feedback and suggestions, so any meaningful comment will help a lot.

Why PyTest

- Less boilerplate (you don't have to memorize assert methods like with unittest)

- Customizable error details

- Auto-discovery independent of the IDE used

- Markers for cross-team standardization

- Awesome terminal commands

- Parametrization for DRY tests

- Huge plugin community

All of this and more makes being a proficient PyTest tester a game-changing skill.

How to structure tests

For apps that need to scale, it is a good idea to create a tests folder and, inside it, a folder for each of the apps in our Django project:

...

├── tests

│ ├── __init__.py

│ ├── test_app1

│ │ ├── __init__.py

│ │ ├── conftest.py

│ │ ├── factories.py

│ │ ├── e2e_tests.py

│ │ ├── test_models.py <--

│ │ ├── test_signals.py <--

│ │ ├── test_serializers.py

│ │ ├── test_utils.py

│ │ ├── test_views.py

│ │ └── test_urls.py

│ │

│ └── ...

└── ...

PyTest Settings

PyTest settings can be set in pytest.ini files under [pytest] or in setup.cfg files under [tool:pytest]:

# in pytest.ini

[pytest]

DJANGO_SETTINGS_MODULE = ...

markers = ...

python_files = ...

addopts = ...

# in setup.cfg

[tool:pytest]

DJANGO_SETTINGS_MODULE = ...

markers = ...

python_files = ...

addopts = ...

In many tutorials you'll see people creating pytest.ini files. These files can only hold pytest settings, and as our project grows we will want to configure other tools and plugins too, without adding a new file for each of them. So it is highly recommended to go with a setup.cfg file instead.

In these files, the main settings that will matter to you are:

- DJANGO_SETTINGS_MODULE: indicates the dotted path of the Django settings module to use.

- python_files: indicates the patterns pytest will use to match test files.

- addopts: indicates the command-line arguments pytest should run with whenever we run pytest.

- markers: here we define the markers we and our team may later agree to use for categorizing tests (e.g. "unit", "integration", "e2e", "regression", etc.).
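To make these four settings concrete, here is a small sketch that parses a sample setup.cfg with Python's standard configparser. Every value in it (the settings path config.settings.test, the *_tests.py pattern and the two markers) is an assumption made up for illustration. It also hints at why python_files matters for the layout shown earlier: pytest's default patterns are test_*.py and *_test.py, so a file named e2e_tests.py is only collected if a matching pattern is added.

```python
import configparser
from fnmatch import fnmatch

# Hypothetical setup.cfg content; every value below is an assumption for illustration.
SETUP_CFG = """
[tool:pytest]
DJANGO_SETTINGS_MODULE = config.settings.test
python_files = test_*.py *_tests.py
addopts = -vv -x
markers =
    unit: tests isolated from the db and external APIs
    e2e: end-to-end tests that go through the whole stack
"""

parser = configparser.ConfigParser()
parser.optionxform = str  # keep option names case-sensitive (the default lowercases them)
parser.read_string(SETUP_CFG)
section = parser["tool:pytest"]

file_patterns = section["python_files"].split()
marker_names = [line.split(":")[0] for line in section["markers"].strip().splitlines()]


def is_collected(filename, patterns):
    """Rough stand-in for pytest's file matching: glob each pattern against the name."""
    return any(fnmatch(filename, pattern) for pattern in patterns)


default_patterns = ["test_*.py", "*_test.py"]  # pytest's default python_files
print(is_collected("e2e_tests.py", default_patterns))  # False
print(is_collected("e2e_tests.py", file_patterns))     # True
print(marker_names)                                     # ['unit', 'e2e']
```

The is_collected helper is only an approximation of what pytest does internally; it is here to show the effect of the pattern, not to replace pytest's own collection.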

Types of Tests

Though the QA world has many names for every possible kind of test, we can get away with a thoroughly developer-tested app by knowing the following 4 types of tests:

- Unit Tests: tests of a relevant piece of code isolated from its interactions with other units of code (mostly by mocking whatever is external to the code under test), be it internal code like a helper function we wrote to keep the code clean, a call to the database or a call to an external API.

- Integration Tests: tests that exercise a piece of code without isolating it from its interactions with other units.

- e2e Tests: e2e stands for "end to end"; these are integration tests that cover the end-to-end flows of the Django app we are testing.

- Regression Tests: tests, be they integration or unit, that originate from a bug: right after fixing the bug we write a test that covers it, so we catch it if it ever comes back.

Why do we test

Before recommending a step-by-step approach to bulletproof your app with tests, it is a good idea to establish why we test. There are mainly two reasons:

- To guide us while coding: while we are developing, we'll have these tests to guide our code; the aim of the code we are building will be to pass them.

- To make sure we did not break anything: every piece of code is like a spaghetti noodle in a dish of spaghetti. The odds that you broke something when taking a noodle out and putting it back are high, to say the least.

A Proper Test Flow

There are two main testing flows for developers:

- TDD (Test Driven Development): writing the tests first and then writing the code that makes them pass.

- Testing after developing: testing the piece of code right after creating it.

Unless we are really familiar with the syntax and the code we expect to write (almost impossible when we are not yet sure what code we will be writing), it's almost always a good idea to go with the latter. That is, to write our code and, once we consider it finished, test that it works as expected. But I'd recommend a mix of both:

- Make end-to-end tests: this should be the TDD component of our final test suite. These tests will help you, first and foremost, to lay out beforehand what each endpoint should return, and to have a preliminary vision of how the rest of the code of your app should follow.

- Create Unit Tests: write code and create the isolated tests for each of the parts of the Django app. This will not only help the developer through the development process but will also be extremely helpful to pinpoint where a problem is happening.

The only integration tests in our test suite should be the e2e ones. These tests should not be part of our team's testing flow while developing and, in big codebases, given that integration tests take time, they should not even be part of the CI/CD pipeline we set up. E2E tests should mostly be used as a sanity check that we developers run after all the unit tests of the code we are working on pass (the PyTest docs discuss this strategy), to make sure the different code units work together properly.

Another reason to keep e2e tests out of your pipelines and developer workflow, and to use unit tests instead, is to avoid flaky tests: tests whose outcome changes between runs without any code change, for example failing when run within the suite but passing when run alone. See the PyTest docs on how to fix flaky tests: https://docs.pytest.org/en/stable/flaky.html

The tests we should be using in our workflow are, indeed, the unit tests, which we can identify with a PyTest marker or in the test function's name itself, to later run them all with a single command. This command will be used frequently during our development workflow.

What should I test in my unit tests?

A concern that may arise in the reader's mind is that, by following this approach, we can't test the models. This is in fact what I intend. Though this is not an article about how to organize business logic across the different parts of your Django app, models should only be used for data representation. The logic we may need for a model should live in either signals or model managers.

Since we are avoiding database access for our main tests, we'll leave the job of making sure we built our models correctly to the e2e tests.

Though this may be a tricky question in some other programming languages and frameworks, knowing what a testable unit is in Django is simple, since we can classify our logic into 6 parts. Thus a unit test could be of a:

- Model (model methods/model managers)

- Signal

- Serializer

- Helper Object a.k.a "utils" (functions, classes, method, etc.)

- View/Viewset

- URL Configuration

Common Testing Utilities

Markers

Before introducing examples of tests, it's a good idea to introduce some useful markers PyTest provides. Markers are just decorators of the form @pytest.mark.<marker> that we place on our test functions.

- @pytest.mark.parametrize(): this marker is used to run the same test several times with different values, working like a for loop.

- @pytest.mark.django_db: by default a test is not allowed to access the db; this marker, provided by the pytest-django plugin, grants it that access. It will of course only make sense for integration tests.

Mocking

When making unit tests, we will want to mock access to external APIs, to the db and to internal code. That’s where the following libraries will be helpful:

- pytest-mock: provides the mocker fixture, a thin wrapper around unittest.mock that offers a non-invasive patch function instead of unittest.mock's context manager.

- requests-mock: provides the requests_mock fixture, which lets us mock the responses of calls made with the requests library. (The rf request factory fixture that appears in some tests later on comes from pytest-django, not from this library.)

- django-mock-queries: provides a class that lets us mock a queryset object and fill it with non-persistent object instances.
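Since pytest-mock's mocker is a wrapper around the standard library's unittest.mock, the basic idea can be sketched without any plugin installed. In the example below, RatesClient and to_usd_cents are hypothetical names invented for illustration: the client stands for an external API wrapper, and the test replaces its network call so that only our own logic is exercised.

```python
from unittest import mock


class RatesClient:
    """Hypothetical wrapper around an external exchange-rate API."""

    def fetch_rate(self, currency_code):
        raise RuntimeError("real network call; must not run in a unit test")


def to_usd_cents(amount_in_cents, currency_code, client):
    """Hypothetical unit under test: converts an amount using the client's rate."""
    return round(amount_in_cents * client.fetch_rate(currency_code))


def test_to_usd_cents():
    # The patch is active only inside the with-block and is undone afterwards
    with mock.patch.object(RatesClient, "fetch_rate", return_value=0.5) as fetch_rate_mock:
        result = to_usd_cents(1000, "ARS", RatesClient())

    assert result == 500
    fetch_rate_mock.assert_called_once_with("ARS")


test_to_usd_cents()
```

With pytest-mock, the equivalent call would be mocker.patch.object(RatesClient, "fetch_rate", return_value=0.5) inside a test that receives the mocker fixture; the patch is then undone automatically when the test finishes, with no with-block needed.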

PyTest Commands

The command we run in our working directory to run all tests is pytest, but PyTest provides command-line options that will help us, among other things, to narrow down the tests we run and easily select only the ones we want. The main ones are:

- -k <expression>: runs only the tests whose file, class or function name matches the given expression.

- -m <marker>: runs all tests that have the given marker.

- -m "not <marker>": runs all tests that don't have the given marker.

- -x: stops the run as soon as a test fails, so we can go back to debugging instead of waiting for the whole test suite to finish.

- --lf: reruns only the tests that failed in the last run (or all of them if none failed), perfect to avoid continuously running tests we already know pass while debugging.

- -vv: increases verbosity, showing a more detailed version of a failed assertion.

- --cov: shows the % of code covered by tests (depends on the pytest-cov plugin).

- --reruns <num_of_reruns>: reruns failed tests up to the given number of times (depends on the pytest-rerunfailures plugin); used for dealing with flaky tests.

Addopts

Addopts are the PyTest command-line options we want applied every time we run the pytest command, so we don't have to type them each time.

We can configure our addopts the following way:

DJANGO_SETTINGS_MODULE = ...

markers = ...

python_files = ...

addopts = -vv -x --lf --cov

Pinpoint Tests

In order to quickly run only a desired test, we can run pytest with the -k option followed by the desired test's name.

If we want to instead run a set of tests with something in common, we can run pytest with the -k option to select all test_*.py files, all Test* classes or all test_* functions whose name contains the given expression.

A good way to group tests is to define custom markers in our pytest.ini/setup.cfg file and share them across our team. We can have a marker for every kind of pattern we want to use as a filter for our tests.

Which markers are relevant is up to the developers' criteria, but what I propose is using markers only for grouping tests that are hard to group by matching expressions with the -k option. An example of a common relevant marker is a unit marker to select only unit tests.

In our setup.cfg we will set the marker like this:

[tool:pytest]
# Define our new marker
markers =
    unit: tests that are isolated from the db, external api calls and other mockable internal code.

So if we want to mark a test that follows the pattern of a unit test, we can mark it like this:

import pytest


@pytest.mark.unit
def test_something():
    pass

Since we will have more than one unit test in each file (and if you follow my advice, most of the test files will actually be unit test files), we can avoid the tedium of marking each function by setting a global marker for the whole file: declare the pytestmark variable at the top of the file, right after the imports, holding a single pytest marker or a list of markers:

# (imports)

# Only one global marker (most commonly used)

pytestmark = pytest.mark.unit

# Several global markers

pytestmark = [pytest.mark.unit, pytest.mark.other_criteria]

# (tests)

If we want to go further and mark tests across several files at once, pytest provides the pytest_collection_modifyitems hook, which receives the collected test items in its items argument. Defined in a conftest.py, we could use it to create, for example, an all marker and add it to every collected test like this:

# in conftest.py
def pytest_collection_modifyitems(items):
    for item in items:
        item.add_marker('all')
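A quick way to see what such a hook does, without running pytest at all, is to call it with stand-in objects. FakeItem below is a hypothetical stub that only mimics the add_marker method of real pytest items; it is not part of pytest.

```python
class FakeItem:
    """Hypothetical stand-in for a collected pytest item."""

    def __init__(self, name):
        self.name = name
        self.markers = []

    def add_marker(self, marker):
        self.markers.append(marker)


def pytest_collection_modifyitems(items):
    # Same hook body as in the conftest.py example: tag every collected test
    for item in items:
        item.add_marker('all')


items = [FakeItem('test_models.py::test_str'), FakeItem('e2e_tests.py::test_list')]
pytest_collection_modifyitems(items)
print([item.markers for item in items])  # [['all'], ['all']]
```

In a real project the all marker should also be declared under markers in the settings file, after which the marked tests can be selected with pytest -m all.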

Factories

Factories are tools that create pre-filled model instances for us, so we don't have to build them by hand. The main libraries for creating factories are factory_boy and model_bakery. For the sake of productivity we should almost always go with model_bakery, which, given a Django model, simply creates an instance filled with valid data. The drawback of model_bakery is that this data is randomized nonsense, so if we want fields that make sense rather than random characters, we should use factory_boy along with faker: for example, if we have a 'name' field, it will generate something that looks like a name and not random letters.

When making integration tests we will want factories to create instances and store them in the db, but when making unit tests we will not want to store them, since we don't want to access the db. Instances that are saved into the db are called "persistent instances", in contrast with "non-persistent" ones, which are the ones we will use to mock the response of database calls. Here is an example of both with model_bakery:

from model_bakery import baker

from apps.my_app.models import MyModel

# create and save to the database

baker.make(MyModel) # --> One instance

baker.make(MyModel, _quantity=3) # --> Batch of 3 instances

# create and don't save

baker.prepare(MyModel) # --> One instance

baker.prepare(MyModel, _quantity=3) # --> Batch of 3 instances

If we want something other than random data (for example, to show in the frontend to a product owner), we can either override model_bakery's default behaviour or write factories with factory_boy and faker in the following way:

# factories.py
import factory

from apps.my_app.models import MyModel


class MyModelFactory(factory.django.DjangoModelFactory):
    class Meta:
        model = MyModel

    field1 = factory.Faker('relevant_generator')
    ...

# test_something.py

# Save to db
MyModelFactory()  # --> One instance
MyModelFactory.create_batch(3)  # --> Batch of 3 instances

# Do not save to db
MyModelFactory.build()  # --> One instance
MyModelFactory.build_batch(3)  # --> Batch of 3 instances

Faker has generators for random but realistic data on many different topics; each topic is represented by a faker provider. factory_boy's bundled faker comes with all providers out of the box, so you just have to look through the list of providers in faker's documentation, pick a generator you like and pass its name as a string to generate a field (e.g. factory.Faker('name')).

Factories can be stored either in conftest.py, which is a file where we can also set configurations for all the tests in the same directory level and below (for example, this is where we define fixtures), or, if we have many different factories for our app, directly in a per-app factories.py file:

....

├── tests

│ ├── __init__.py

│ ├── test_app1

│ │ ├── __init__.py

│ │ ├── conftest.py <--

│ │ ├── factories.py <--

│ │ ├── e2e_tests.py

│ │ ├── test_models.py

│ │ ├── test_signals.py

│ │ ├── test_serializers.py

│ │ ├── test_utils.py

│ │ ├── test_views.py

│ │ └── test_urls.py

│ │

│ └── ...

└── ...

How to Organize a Test

First of all, as stated before, tests should live inside a file whose name matches the patterns indicated in our pytest.ini/setup.cfg file.

To order the tests inside a file, for every unit of code tested (for example, a Django APIView) create a class whose name starts with Test, in CamelCase, and inside it create the tests for that unit (for example, a test for each of the methods accepted by the view).

For organizing the body of a test function I recommend the "AMAA" criteria, a custom version of the "AAA" criteria. That is, tests should follow this order:

- Arrange: set everything needed for the test

- Mock: mock everything needed to isolate your test

- Act: trigger your code unit.

- Assert: assert the outcome is exactly as expected to avoid any unpleasant surprises later.

A test structure should therefore look like this:

# tests/test_app/app/test_some_part.py
...

# inside test_something.py
class TestUnitName:
    def test_<functionality_1>(self):
        # Arrange
        # Mock
        # Act
        # Assert
        ...
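As a concrete instance of the AMAA order, here is a minimal sketch that runs with the standard library alone. Mailer and notify_payment are hypothetical names made up for this illustration; the mock is built with unittest.mock, which is what pytest-mock's mocker wraps.

```python
from unittest import mock


class Mailer:
    """Hypothetical collaborator that would send a real email."""

    def send(self, to, subject):
        raise RuntimeError("must not send real emails from a unit test")


def notify_payment(transaction, mailer):
    """Hypothetical unit under test: emails the payer a payment notice."""
    mailer.send(to=transaction["email"], subject=f"Payment link for {transaction['name']}")
    return True


class TestNotifyPayment:
    def test_sends_email_to_payer(self):
        # Arrange
        transaction = {"name": "Jane", "email": "jane@example.com"}

        # Mock
        mailer = mock.create_autospec(Mailer, instance=True)

        # Act
        result = notify_payment(transaction, mailer)

        # Assert
        assert result is True
        mailer.send.assert_called_once_with(
            to="jane@example.com", subject="Payment link for Jane"
        )


TestNotifyPayment().test_sends_email_to_payer()
```

Using create_autospec rather than a bare Mock is a design choice: the mock then rejects calls that do not match the real send signature, so a typo in an argument name fails the test instead of passing silently.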

Test Examples

For the examples, we'll use the following Transaction and Currency models:

# inside apps/app/models.py
import string

from django.conf import settings
from django.db import models
from django.utils import timezone
from hashid_field import HashidAutoField

from apps.transaction.utils import create_payment_intent, PaymentStatuses


class Currency(models.Model):
    """Currency model"""
    name = models.CharField(max_length=120, null=False, blank=False, unique=True)
    code = models.CharField(max_length=3, null=False, blank=False, unique=True)
    symbol = models.CharField(max_length=5, null=False, blank=False, default='$')

    def __str__(self) -> str:
        return self.code


class Transaction(models.Model):
    """Transaction model."""
    # The fixtures, serializers and tests in this article also rely on an
    # amount_in_cents field, which this listing omits.
    id = HashidAutoField(primary_key=True, min_length=8, alphabet=string.printable.replace('/', ''))
    name = models.CharField(max_length=50, null=False, blank=False)
    email = models.EmailField(max_length=50, null=False, blank=False)
    creation_date = models.DateTimeField(auto_now_add=True, null=False, blank=False)
    currency = models.ForeignKey(Currency, null=False, blank=False, default=1, on_delete=models.PROTECT)
    payment_status = models.CharField(choices=PaymentStatuses.choices, default=PaymentStatuses.WAI, max_length=21)
    payment_intent_id = models.CharField(max_length=100, null=True, blank=False, default=None)
    message = models.TextField(null=True, blank=True)

    @property
    def link(self):
        """
        Link to a payment form for the transaction
        """
        return settings.ALLOWED_HOSTS[0] + f'/payment/{str(self.id)}'

And inside tests/test_app/conftest.py we'll set our factories as fixtures to later access them as params in our test functions. We will not always need model instances with all of their fields filled by the user; maybe we want some of them to be filled automatically in our backend. In that case we can make custom factories with only certain fields filled:

import pytest
from model_bakery import baker


@pytest.fixture
def utbb():
    def unfilled_transaction_bakery_batch(n):
        transactions = baker.make(
            'transaction.Transaction',
            amount_in_cents=1032000,  # --> Passes min. payload restriction in every currency
            _fill_optional=[
                'name',
                'email',
                'currency',
                'message'
            ],
            _quantity=n
        )
        return transactions
    return unfilled_transaction_bakery_batch


@pytest.fixture
def ftbb():
    def filled_transaction_bakery_batch(n):
        transactions = baker.make(
            'transaction.Transaction',
            amount_in_cents=1032000,  # --> Passes min. payload restriction in every currency
            _quantity=n
        )
        return transactions
    return filled_transaction_bakery_batch


@pytest.fixture
def ftb():
    def filled_transaction_bakery():
        transaction = baker.make(
            'transaction.Transaction',
            amount_in_cents=1032000,  # --> Passes min. payload restriction in every currency
            currency=baker.make('transaction.Currency')
        )
        return transaction
    return filled_transaction_bakery

E2E Tests

Once we have our models and their factories, it is a good idea to write, both as a guide and as a sanity check, the end-to-end tests of the flows we want in our app. These should not, and almost surely will not, pass as soon as we write them. They are, again, rather flexible tests of what, at a given moment in the development process, the programmer thinks every endpoint should return for every input.

Depending on the complexity of the feature we are working on, or on changes brought by the product owner, we might completely change them along the way.

For these end-to-end tests, in order to have examples for many cases, we will assume we want full CRUD functionality for both models through the following six endpoints:

GET     api/transactions        List all transaction objects
POST    api/transactions        Create a transaction object
GET     api/transactions/hash   Retrieve a transaction object
PUT     api/transactions/hash   Update a transaction object
PATCH   api/transactions/hash   Update a field of a transaction object
DELETE  api/transactions/hash   Delete a transaction object

For this we will:

- Make a fixture for DRF's APIClient object and name it api_client, to start testing directly from the endpoint:

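# conftest.py
import pytest
from rest_framework.test import APIClient


@pytest.fixture
def api_client():
    # Returns the class itself; tests create a client with api_client()
    return APIClient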

Since this fixture is not only relevant for this app, it is a good idea to define it in a conftest.py file above all apps so it can be shared among all of them:

....

├── tests

│ ├── __init__.py

│ ├── conftest.py <--

│ ├── test_app1

│ │ ├── __init__.py

│ │ ├── conftest.py

│ │ ├── factories.py

│ │ ├── e2e_tests.py

│ │ ├── test_models.py

│ │ ├── test_signals.py

│ │ ├── test_serializers.py

│ │ ├── test_utils.py

│ │ ├── test_views.py

│ │ └── test_urls.py

│ │

│ └── ...

└── ...

Now, let's proceed to test all endpoints:

from model_bakery import baker
import factory
import json
import pytest

from apps.transaction.models import Transaction, Currency

pytestmark = pytest.mark.django_db


class TestCurrencyEndpoints:

    endpoint = '/api/currencies/'

    def test_list(self, api_client):
        baker.make(Currency, _quantity=3)

        response = api_client().get(
            self.endpoint
        )

        assert response.status_code == 200
        assert len(json.loads(response.content)) == 3

    def test_create(self, api_client):
        currency = baker.prepare(Currency)
        expected_json = {
            'name': currency.name,
            'code': currency.code,
            'symbol': currency.symbol
        }

        response = api_client().post(
            self.endpoint,
            data=expected_json,
            format='json'
        )

        assert response.status_code == 201
        assert json.loads(response.content) == expected_json

    def test_retrieve(self, api_client):
        currency = baker.make(Currency)
        expected_json = {
            'name': currency.name,
            'code': currency.code,
            'symbol': currency.symbol
        }
        url = f'{self.endpoint}{currency.id}/'

        response = api_client().get(url)

        assert response.status_code == 200
        assert json.loads(response.content) == expected_json

    def test_update(self, rf, api_client):
        old_currency = baker.make(Currency)
        new_currency = baker.prepare(Currency)
        currency_dict = {
            'code': new_currency.code,
            'name': new_currency.name,
            'symbol': new_currency.symbol
        }

        url = f'{self.endpoint}{old_currency.id}/'

        response = api_client().put(
            url,
            currency_dict,
            format='json'
        )

        assert response.status_code == 200
        assert json.loads(response.content) == currency_dict

    @pytest.mark.parametrize('field', [
        ('code'),
        ('name'),
        ('symbol'),
    ])
    def test_partial_update(self, mocker, rf, field, api_client):
        currency = baker.make(Currency)
        currency_dict = {
            'code': currency.code,
            'name': currency.name,
            'symbol': currency.symbol
        }
        valid_field = currency_dict[field]
        url = f'{self.endpoint}{currency.id}/'

        response = api_client().patch(
            url,
            {field: valid_field},
            format='json'
        )

        assert response.status_code == 200
        assert json.loads(response.content)[field] == valid_field

    def test_delete(self, mocker, api_client):
        currency = baker.make(Currency)
        url = f'{self.endpoint}{currency.id}/'

        response = api_client().delete(url)

        assert response.status_code == 204
        assert Currency.objects.all().count() == 0

class TestTransactionEndpoints:

    endpoint = '/api/transactions/'

    def test_list(self, api_client, utbb):
        client = api_client()
        utbb(3)
        url = self.endpoint

        response = client.get(url)

        assert response.status_code == 200
        assert len(json.loads(response.content)) == 3

    def test_create(self, api_client, utbb):
        client = api_client()
        t = utbb(1)[0]
        valid_data_dict = {
            'amount_in_cents': t.amount_in_cents,
            'currency': t.currency.code,
            'name': t.name,
            'email': t.email,
            'message': t.message
        }
        url = self.endpoint

        response = client.post(
            url,
            valid_data_dict,
            format='json'
        )

        assert response.status_code == 201
        assert json.loads(response.content) == valid_data_dict
        assert Transaction.objects.last().link

    def test_retrieve(self, api_client, ftb):
        t = ftb()
        t = Transaction.objects.last()
        expected_json = t.__dict__
        expected_json['link'] = t.link
        expected_json['currency'] = t.currency.code
        expected_json['creation_date'] = expected_json['creation_date'].strftime(
            '%Y-%m-%dT%H:%M:%S.%fZ'
        )
        expected_json.pop('_state')
        expected_json.pop('currency_id')
        url = f'{self.endpoint}{t.id}/'

        response = api_client().get(url)

        assert response.status_code == 200 or response.status_code == 301
        assert json.loads(response.content) == expected_json

    def test_update(self, api_client, utbb):
        old_transaction = utbb(1)[0]
        t = utbb(1)[0]
        expected_json = t.__dict__
        expected_json['id'] = old_transaction.id.hashid
        expected_json['currency'] = old_transaction.currency.code
        expected_json['link'] = Transaction.objects.first().link
        expected_json['creation_date'] = old_transaction.creation_date.strftime(
            '%Y-%m-%dT%H:%M:%S.%fZ'
        )
        expected_json.pop('_state')
        expected_json.pop('currency_id')
        url = f'{self.endpoint}{old_transaction.id}/'

        response = api_client().put(
            url,
            data=expected_json,
            format='json'
        )

        assert response.status_code == 200 or response.status_code == 301
        assert json.loads(response.content) == expected_json

    @pytest.mark.parametrize('field', [
        ('name'),
        ('billing_name'),
        ('billing_email'),
        ('email'),
        ('amount_in_cents'),
        ('message'),
    ])
    def test_partial_update(self, api_client, field, utbb):
        utbb(2)
        old_transaction = Transaction.objects.first()
        new_transaction = Transaction.objects.last()
        valid_field = {
            field: new_transaction.__dict__[field],
        }
        url = f'{self.endpoint}{old_transaction.id}/'

        response = api_client().patch(
            path=url,
            data=valid_field,
            format='json',
        )

        assert response.status_code == 200 or response.status_code == 301
        try:
            assert json.loads(response.content)[field] == valid_field[field]
        except json.decoder.JSONDecodeError as e:
            pass

    def test_delete(self, api_client, utbb):
        transaction = utbb(1)[0]
        url = f'{self.endpoint}{transaction.id}/'

        response = api_client().delete(
            url
        )

        assert response.status_code == 204 or response.status_code == 301

Once we have the tests for preliminary expected outputs of our endpoints for our apps, we can proceed with building the rest of the app from the models up.

Utils

Utils are helper functions that will be spread all across our code, so you could find yourself building them and their corresponding tests in any order.

The first util we are going to make is a fill_transaction function that, given a Transaction model instance, will fill the fields that are not intended to be filled by the user.

One field we can fill on the backend is the payment_intent_id field. A "payment intent" is the way Stripe (a payment service) represents transactions expected to happen; its id, to put it in simple terms, is the way they can find data about it in their db.

So a util that uses Stripe's Python library to create a payment intent and store its id could be this one:

import stripe


def fill_transaction(transaction):
    payment_intent_id = stripe.PaymentIntent.create(
        amount=transaction.amount_in_cents,
        currency=transaction.currency.code.lower(),
        payment_method_types=['card'],
    ).id

    t = transaction.__class__.objects.filter(id=transaction.id)
    t.update(  # We use update so we don't trigger a save-signal recursion
        payment_intent_id=payment_intent_id,
    )

A test for this util should mock the API call and the 2 db calls:

class TestFillTransaction:
    def test_function_code(self, mocker):
        # Arrange
        t = FilledTransactionFactory.build()
        pi = PaymentIntentFactory()

        # Mock the Stripe API call and the two db calls (filter and update);
        # mocker undoes the patches when the test ends
        mocker.patch.object(stripe.PaymentIntent, 'create', return_value=pi)
        filter_call_mock = mocker.patch.object(Transaction.objects, 'filter')
        update_call_mock = filter_call_mock.return_value.update

        # Act
        utils.fill_transaction(t)

        # Assert
        filter_call_mock.assert_called_with(id=t.id)
        update_call_mock.assert_called_with(
            payment_intent_id=pi.id,
        )
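The test above relies on mocking a chained call of the form filter(...).update(...). That technique can be tried in isolation with the standard library's unittest.mock, as in the sketch below. The mark_as_paid function and the "paid" value are hypothetical, chosen only to reproduce the same call shape as fill_transaction without needing Django or Stripe.

```python
from unittest import mock


def mark_as_paid(manager, transaction_id):
    """Hypothetical util with the same filter(...).update(...) shape as fill_transaction."""
    return manager.filter(id=transaction_id).update(payment_status="paid")


# A Mock creates child mocks on attribute access, so the whole chain can be
# configured (and later inspected) through return_value
manager = mock.Mock()
manager.filter.return_value.update.return_value = 1  # pretend one row was updated

updated = mark_as_paid(manager, "abc123")

manager.filter.assert_called_once_with(id="abc123")
manager.filter.return_value.update.assert_called_once_with(payment_status="paid")
print(updated)  # 1
```

Each link of the chain is asserted separately: first that filter received the right lookup, then that update received the right values.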

Signals

For signals, we can have a signal to run our fill_transaction util when a Transaction is created.

from django.db.models.signals import post_save
from django.dispatch import receiver

from apps.transaction.models import Transaction
from apps.transaction.utils import fill_transaction


@receiver(post_save, sender=Transaction)
def transaction_filler(sender, instance, created, *args, **kwargs):
    """Fills fields"""
    # post_save runs once the row exists, so fill_transaction can update it by id
    if created:
        fill_transaction(instance)

This signal will, by the way, be implicitly tested in the e2e tests. A good explicit unit test for this signal could be the following:

import pytest
from django.db.models.signals import post_save

from apps.transaction.models import Transaction
from tests.test_transaction.factories import UnfilledTransactionFactory, FilledTransactionFactory

pytestmark = pytest.mark.unit


class TestTransactionFiller:
    def test_post_save(self, mocker):
        instance = UnfilledTransactionFactory.build()
        mock = mocker.patch(
            'apps.transaction.signals.fill_transaction'
        )

        post_save.send(Transaction, instance=instance, created=True)

        mock.assert_called_with(instance)

Serializers

For our app, we'll have a single serializer for our Currency model and two serializers for our Transaction model:

- One that contains the fields that can be modified by the transaction manager (someone creating and deleting transactions)

- One that contains the fields that can be seen by transaction managers and also by those who will be paying.

from hashid_field.rest import HashidSerializerCharField
from rest_framework import serializers
from django.conf import settings
from django.core.validators import MaxLengthValidator, ProhibitNullCharactersValidator
from rest_framework.validators import ProhibitSurrogateCharactersValidator

from apps.transaction.models import Currency, Transaction


class CurrencySerializer(serializers.ModelSerializer):
    class Meta:
        model = Currency
        fields = ['name', 'code', 'symbol']
        if settings.DEBUG:
            # Validators must be instances; the limits mirror the model's max_length values
            extra_kwargs = {
                'name': {
                    'validators': [MaxLengthValidator(120), ProhibitNullCharactersValidator()]
                },
                'code': {
                    'validators': [MaxLengthValidator(3), ProhibitNullCharactersValidator()]
                }
            }


class UnfilledTransactionSerializer(serializers.ModelSerializer):
    currency = serializers.SlugRelatedField(
        slug_field='code',
        queryset=Currency.objects.all(),
    )

    class Meta:
        model = Transaction
        fields = (
            'name',
            'currency',
            'email',
            'amount_in_cents',
            'message'
        )


class FilledTransactionSerializer(serializers.ModelSerializer):
    id = HashidSerializerCharField(source_field='transaction.Transaction.id', read_only=True)
    currency = serializers.StringRelatedField(read_only=True)
    link = serializers.ReadOnlyField()

    class Meta:
        model = Transaction
        fields = '__all__'
        extra_kwargs = {
            # Non editable fields (a string literal here would be glued onto the 'id' key)
            'id': {'read_only': True},
            'creation_date': {'read_only': True},
            'payment_date': {'read_only': True},
            'amount_in_cents': {'read_only': True},
            'payment_intent_id': {'read_only': True},
            'payment_status': {'read_only': True},
        }

The unit tests for serializers should aim to test two things (when relevant):

- That it can properly serialize a Model instance

- That it can properly turn valid serialized data into a model (a.k.a "deserialize")

import pytest
import factory
from rest_framework.fields import CharField

from apps.transaction.api.serializers import CurrencySerializer, UnfilledTransactionSerializer, FilledTransactionSerializer
from tests.test_transaction.factories import CurrencyFactory, UnfilledTransactionFactory, FilledTransactionFactory


class TestCurrencySerializer:
    transaction = UnfilledTransactionFactory.build()

    @pytest.mark.unit
    def test_serialize_model(self):
        currency = CurrencyFactory.build()
        serializer = CurrencySerializer(currency)

        assert serializer.data

    @pytest.mark.unit
    def test_serialized_data(self, mocker):
        valid_serialized_data = factory.build(
            dict,
            FACTORY_CLASS=CurrencyFactory
        )

        serializer = CurrencySerializer(data=valid_serialized_data)

        assert serializer.is_valid()
        assert serializer.errors == {}

class TestUnfilledTransactionSerializer:

    @pytest.mark.unit
    def test_serialize_model(self):
        t = UnfilledTransactionFactory.build()
        expected_serialized_data = {
            'name': t.name,
            'currency': t.currency.code,
            'email': t.email,
            'amount_in_cents': t.amount_in_cents,
            'message': t.message,
        }
        serializer = UnfilledTransactionSerializer(t)

        assert serializer.data == expected_serialized_data

    # The currency lookup queries the database, so this test needs DB access.
    # On keeping such tests stable: https://docs.pytest.org/en/stable/flaky.html
    @pytest.mark.django_db
    def test_serialized_data(self):
        c = CurrencyFactory()
        t = UnfilledTransactionFactory.build(currency=c)
        valid_serialized_data = {
            'name': t.name,
            'currency': t.currency.code,
            'email': t.email,
            'amount_in_cents': t.amount_in_cents,
            'message': t.message,
        }
        serializer = UnfilledTransactionSerializer(data=valid_serialized_data)

        assert serializer.is_valid(raise_exception=True)
        assert serializer.errors == {}

    @pytest.mark.django_db
    @pytest.mark.parametrize("wrong_field", (
        # Assumes `email` is an EmailField and `amount_in_cents` an integer
        # field on Transaction; adapt the cases to your own constraints.
        {"email": "not-an-email"},
        {"amount_in_cents": "not-a-number"},
        {"currency": "code-that-does-not-exist"},
    ))
    def test_deserialize_fails(self, wrong_field: dict):
        c = CurrencyFactory()
        t = UnfilledTransactionFactory.build(currency=c)
        # Start from valid data and override a single field with a bad value
        invalid_serialized_data = {
            'name': t.name,
            'currency': t.currency.code,
            'email': t.email,
            'amount_in_cents': t.amount_in_cents,
            'message': t.message,
        } | wrong_field
        serializer = UnfilledTransactionSerializer(data=invalid_serialized_data)

        assert not serializer.is_valid()
        assert serializer.errors != {}

class TestFilledTransactionSerializer:

    @pytest.mark.unit
    def test_serialize_model(self, ftd):
        t = FilledTransactionFactory.build()
        expected_serialized_data = ftd(t)
        serializer = FilledTransactionSerializer(t)

        assert serializer.data == expected_serialized_data

    @pytest.mark.unit
    def test_serialized_data(self):
        t = FilledTransactionFactory.build()
        valid_serialized_data = {
            'id': t.id.hashid,
            'name': t.name,
            'currency': t.currency.code,
            'creation_date': t.creation_date.strftime('%Y-%m-%dT%H:%M:%SZ'),
            'payment_date': t.payment_date.strftime('%Y-%m-%dT%H:%M:%SZ'),
            'stripe_response': t.stripe_response,
            'payment_intent_id': t.payment_intent_id,
            'billing_name': t.billing_name,
            'billing_email': t.billing_email,
            'payment_status': t.payment_status,
            'link': t.link,
            'email': t.email,
            'amount_in_cents': t.amount_in_cents,
            'message': t.message,
        }
        serializer = FilledTransactionSerializer(data=valid_serialized_data)

        assert serializer.is_valid(raise_exception=True)
        assert serializer.errors == {}

Viewsets

We'll use DRF viewsets for CRUD operations on our models and register them with a router, which skips the need to test URL configuration:

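# in urls.py
from django.contrib import admin
from django.urls import include, path
from rest_framework import routers

# transaction_urls is the transaction app's URL module exposing `route_list`
# (its import is not shown here).

route_lists = [
    transaction_urls.route_list,
]

router = routers.DefaultRouter()
# Each route is a (prefix, viewset) pair
for route_list in route_lists:
    for route in route_list:
        router.register(route[0], route[1])

urlpatterns = [
    path('admin/', admin.site.urls),
    path('api/', include(router.urls)),
]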

# in views.py
from rest_framework.viewsets import ModelViewSet
from rest_framework.permissions import IsAuthenticated

from apps.transaction.api.serializers import CurrencySerializer, UnfilledTransactionSerializer, FilledTransactionSerializer
from apps.transaction.models import Currency, Transaction


class CurrencyViewSet(ModelViewSet):
    queryset = Currency.objects.all()
    serializer_class = CurrencySerializer


class TransactionViewset(ModelViewSet):
    """Transaction Viewset"""

    queryset = Transaction.objects.all()
    permission_classes = [IsAuthenticated]

    def get_serializer_class(self):
        # Creation takes the reduced payload; every other action uses the full one
        if self.action == 'create':
            return UnfilledTransactionSerializer
        else:
            return FilledTransactionSerializer

The first step is mocking all permissions used in the views we are going to test. The permissions themselves will be tested on their own later:

from unittest import mock

import pytest
from rest_framework.permissions import IsAuthenticated


@pytest.fixture(scope="session", autouse=True)
def mock_views_permissions():
    # little util I use for testing for DRY when patching multiple objects
    patch_perm = lambda perm: mock.patch.multiple(
        perm,
        has_permission=mock.Mock(return_value=True),
        has_object_permission=mock.Mock(return_value=True),
    )
    # Parenthesized context managers are officially supported from Python 3.10
    with (
        patch_perm(IsAuthenticated),
        # ...add other permissions you may have below
    ):
        yield
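To make the patching in that fixture easier to follow, here is a minimal sketch of what `mock.patch.multiple` does, run against a stand-in class instead of a real DRF permission. `IsAuthenticatedStandIn` and `check_permission` are hypothetical names used only for this illustration.

```python
from unittest import mock


class IsAuthenticatedStandIn:
    """Hypothetical stand-in for a permission class (not the DRF one)."""

    def has_permission(self, request, view):
        return False

    def has_object_permission(self, request, view, obj):
        return False


def patch_perm(perm):
    # Replace both permission hooks with mocks that always grant access.
    return mock.patch.multiple(
        perm,
        has_permission=mock.Mock(return_value=True),
        has_object_permission=mock.Mock(return_value=True),
    )


def check_permission():
    # Ask a fresh instance whether access is granted.
    return IsAuthenticatedStandIn().has_permission(request=None, view=None)


denied_before = check_permission()
with patch_perm(IsAuthenticatedStandIn):
    # Inside the context, every instance of the class grants access.
    granted_inside = check_permission()
# On exit, the original methods are restored.
denied_after = check_permission()
```

Because the patch is applied to the class, it affects every instance created while the context is active, which is why a single session-scoped fixture can cover all views.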

With plain class-based-views or function-based-views, we would start testing the view from the view itself and isolate the view from the urlconf that triggers that view. But since we are using routers for this, we start testing the viewsets from the endpoint itself with an API Client.

import factory
import json
import pytest

from django.urls import reverse
from django_mock_queries.mocks import MockSet
from rest_framework.relations import RelatedField, SlugRelatedField

from apps.transaction.api.serializers import UnfilledTransactionSerializer, CurrencySerializer
from apps.transaction.api.views import CurrencyViewSet, TransactionViewset
from apps.transaction.models import Currency, Transaction
from tests.test_transaction.factories import CurrencyFactory, FilledTransactionFactory, UnfilledTransactionFactory

pytestmark = [pytest.mark.urls('config.urls'), pytest.mark.unit]


class TestCurrencyViewset:

    def test_list(self, mocker, rf):
        # Arrange
        url = reverse('currency-list')
        request = rf.get(url)
        qs = MockSet(
            CurrencyFactory.build(),
            CurrencyFactory.build(),
            CurrencyFactory.build()
        )
        view = CurrencyViewSet.as_view(
            {'get': 'list'}
        )
        # Mock: replace the queryset so the view never hits the database
        mocker.patch.object(
            CurrencyViewSet, 'get_queryset', return_value=qs
        )
        # Act
        response = view(request).render()
        # Assert
        assert response.status_code == 200
        assert len(json.loads(response.content)) == 3

    def test_retrieve(self, mocker, rf):
        currency = CurrencyFactory.build()
        expected_json = {
            'name': currency.name,
            'code': currency.code,
            'symbol': currency.symbol
        }
        url = reverse('currency-detail', kwargs={'pk': currency.id})
        request = rf.get(url)
        mocker.patch.object(
            CurrencyViewSet, 'get_queryset', return_value=MockSet(currency)
        )
        view = CurrencyViewSet.as_view(
            {'get': 'retrieve'}
        )

        response = view(request, pk=currency.id).render()

        assert response.status_code == 200
        assert json.loads(response.content) == expected_json

    def test_create(self, mocker, rf):
        valid_data_dict = factory.build(
            dict,
            FACTORY_CLASS=CurrencyFactory
        )
        url = reverse('currency-list')
        request = rf.post(
            url,
            content_type='application/json',
            data=json.dumps(valid_data_dict)
        )
        # Patch save() so nothing is written to the database
        mocker.patch.object(
            Currency, 'save'
        )
        view = CurrencyViewSet.as_view(
            {'post': 'create'}
        )

        response = view(request).render()

        assert response.status_code == 201
        assert json.loads(response.content) == valid_data_dict

    def test_update(self, mocker, rf):
        old_currency = CurrencyFactory.build()
        new_currency = CurrencyFactory.build()
        currency_dict = {
            'code': new_currency.code,
            'name': new_currency.name,
            'symbol': new_currency.symbol
        }
        url = reverse('currency-detail', kwargs={'pk': old_currency.id})
        request = rf.put(
            url,
            content_type='application/json',
            data=json.dumps(currency_dict)
        )
        mocker.patch.object(
            CurrencyViewSet, 'get_object', return_value=old_currency
        )
        mocker.patch.object(
            Currency, 'save'
        )
        view = CurrencyViewSet.as_view(
            {'put': 'update'}
        )

        response = view(request, pk=old_currency.id).render()

        assert response.status_code == 200
        assert json.loads(response.content) == currency_dict

    @pytest.mark.parametrize('field', ['code', 'name', 'symbol'])
    def test_partial_update(self, mocker, rf, field):
        currency = CurrencyFactory.build()
        currency_dict = {
            'code': currency.code,
            'name': currency.name,
            'symbol': currency.symbol
        }
        valid_field = currency_dict[field]
        url = reverse('currency-detail', kwargs={'pk': currency.id})
        request = rf.patch(
            url,
            content_type='application/json',
            data=json.dumps({field: valid_field})
        )
        mocker.patch.object(
            CurrencyViewSet, 'get_object', return_value=currency
        )
        mocker.patch.object(
            Currency, 'save'
        )
        view = CurrencyViewSet.as_view(
            {'patch': 'partial_update'}
        )

        response = view(request).render()

        assert response.status_code == 200
        assert json.loads(response.content)[field] == valid_field

    def test_delete(self, mocker, rf):
        currency = CurrencyFactory.build()
        url = reverse('currency-detail', kwargs={'pk': currency.id})
        request = rf.delete(url)
        mocker.patch.object(
            CurrencyViewSet, 'get_object', return_value=currency
        )
        del_mock = mocker.patch.object(
            Currency, 'delete'
        )
        view = CurrencyViewSet.as_view(
            {'delete': 'destroy'}
        )

        response = view(request).render()

        assert response.status_code == 204
        # Call the assertion; `assert del_mock.assert_called` is always truthy
        del_mock.assert_called_once()

class TestTransactionViewset:

    def test_list(self, mocker, rf):
        url = reverse('transaction-list')
        request = rf.get(url)
        qs = MockSet(
            FilledTransactionFactory.build(),
            FilledTransactionFactory.build(),
            FilledTransactionFactory.build()
        )
        mocker.patch.object(
            TransactionViewset, 'get_queryset', return_value=qs
        )
        view = TransactionViewset.as_view(
            {'get': 'list'}
        )

        response = view(request).render()

        assert response.status_code == 200
        assert len(json.loads(response.content)) == 3

    def test_create(self, mocker, rf):
        valid_data_dict = factory.build(
            dict,
            FACTORY_CLASS=UnfilledTransactionFactory
        )
        currency = valid_data_dict['currency']
        valid_data_dict['currency'] = currency.code
        url = reverse('transaction-list')
        request = rf.post(
            url,
            content_type='application/json',
            data=json.dumps(valid_data_dict)
        )
        # Patch through mocker (not plain assignment) so the original
        # method is restored when the test ends
        mocker.patch.object(
            SlugRelatedField, 'to_internal_value', return_value=currency
        )
        mocker.patch.object(
            Transaction, 'save'
        )
        view = TransactionViewset.as_view(
            {'post': 'create'}
        )

        response = view(request).render()

        assert response.status_code == 201
        assert json.loads(response.content) == valid_data_dict

    def test_retrieve(self, api_client, mocker, ftd):
        transaction = FilledTransactionFactory.build()
        expected_json = ftd(transaction)
        url = reverse(
            'transaction-detail', kwargs={'pk': transaction.id}
        )
        mocker.patch.object(
            TransactionViewset,
            'get_queryset',
            return_value=MockSet(transaction)
        )

        response = api_client().get(url)

        assert response.status_code == 200
        assert json.loads(response.content) == expected_json

    def test_update(self, mocker, api_client, ftd):
        old_transaction = FilledTransactionFactory.build()
        new_transaction = FilledTransactionFactory.build()
        transaction_json = ftd(new_transaction, old_transaction)
        url = reverse(
            'transaction-detail',
            kwargs={'pk': old_transaction.id}
        )
        mocker.patch.object(
            SlugRelatedField,
            'to_internal_value',
            return_value=old_transaction.currency
        )
        mocker.patch.object(
            TransactionViewset,
            'get_object',
            return_value=old_transaction
        )
        mocker.patch.object(Transaction, 'save')

        response = api_client().put(
            url,
            data=transaction_json,
            format='json'
        )

        assert response.status_code == 200
        assert json.loads(response.content) == transaction_json

    @pytest.mark.parametrize('field', [
        'name',
        'billing_name',
        'billing_email',
        'email',
        'amount_in_cents',
        'message',
    ])
    def test_partial_update(self, mocker, api_client, field):
        old_transaction = FilledTransactionFactory.build()
        new_transaction = FilledTransactionFactory.build()
        valid_field = {
            field: new_transaction.__dict__[field]
        }
        url = reverse(
            'transaction-detail',
            kwargs={'pk': old_transaction.id}
        )
        mocker.patch.object(
            SlugRelatedField,
            'to_internal_value',
            return_value=old_transaction.currency
        )
        mocker.patch.object(
            TransactionViewset,
            'get_object',
            return_value=old_transaction
        )
        mocker.patch.object(Transaction, 'save')

        response = api_client().patch(
            url,
            data=valid_field,
            format='json'
        )

        assert response.status_code == 200
        assert json.loads(response.content)[field] == valid_field[field]

    def test_delete(self, mocker, api_client):
        transaction = FilledTransactionFactory.build()
        url = reverse('transaction-detail', kwargs={'pk': transaction.id})
        mocker.patch.object(
            TransactionViewset, 'get_object', return_value=transaction
        )
        del_mock = mocker.patch.object(
            Transaction, 'delete'
        )

        response = api_client().delete(
            url
        )

        assert response.status_code == 204
        # Call the assertion; `assert del_mock.assert_called` is always truthy
        del_mock.assert_called_once()
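A detail worth keeping in mind for the delete tests: a mock's assertion helpers only check anything when they are called. The sketch below uses only the standard library's `unittest.mock`, with a bare `Mock` standing in for the patched `delete` method, to show the difference.

```python
from unittest import mock

del_mock = mock.Mock()

# `assert_called` without parentheses is just a bound method, which is truthy,
# so this line passes even though the mock was never called.
assert del_mock.assert_called

# Calling the assertion is what performs the check.
try:
    del_mock.assert_called_once()
    check_failed = False
except AssertionError:
    check_failed = True

del_mock()
del_mock.assert_called_once()  # passes now that there was exactly one call
```

Writing `del_mock.assert_called_once()` as a statement, without a leading `assert`, is enough: the helper raises `AssertionError` by itself when the expectation is not met.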

Coverage of our test suite

Given that our code will have different logical branches, we can measure how much of it our tests exercise (a.k.a. the "coverage" of our test suite), as a percentage, using the pytest-cov plugin. To see the coverage of our tests, we pass the --cov option to pytest.

Coverage won't tell you whether your code will break; it only tells you how much of it your tests execute, which has nothing to do with whether those tests are relevant.

It is a good idea to manually modify our coverage settings inside our setup.cfg under [coverage:run] (or, if you want to have a standalone file, inside a .coveragerc file under [run]) and set which directories we would like to measure coverage for and which files we might want to exclude inside those. I myself have all my apps inside an apps directory, so my setup.cfg will look something like this:

[tool:pytest]
...

[coverage:run]
source = apps
omit = */migrations/*

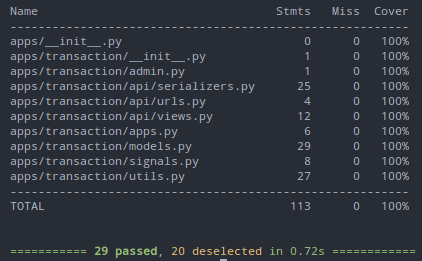

If we run pytest --cov --cov-config=setup.cfg (you could include it in your addopts) we may have an output:

The fact that I got 100% does not mean anything by itself. Again, if you get 90% because you left an irrelevant piece of code untested, your coverage is as good as 100%.

Disclaimer: the pytest-cov plugin is reported to be incompatible with the VSCode debugger, so you might want to remove its option from addopts, or sometimes even remove it from your project altogether.

On flaky tests

Flaky tests are caused by not properly isolating tests from each other. This may be acceptable when running e2e tests, but it should be a red flag when it happens with unit tests.
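One common way to lose isolation is assigning a mock directly onto a shared class: the assignment is never undone, so later tests see the mock too. The sketch below uses a hypothetical stand-in `Transaction` class (not the model from this article) and only the standard library to contrast that with `mock.patch.object`, which restores the original on exit. pytest-mock's `mocker.patch.object` gives the same guarantee, undoing the patch at the end of each test.

```python
from unittest import mock


class Transaction:
    """Hypothetical stand-in for a model class."""

    def save(self):
        return "saved for real"


original_save = Transaction.save

# Leaky: plain assignment stays in place after the "test" that made it.
Transaction.save = mock.Mock(return_value="mocked")
leaked = Transaction().save()      # what a later, unrelated test would see
Transaction.save = original_save   # manual cleanup is the only way back

# Isolated: patch.object restores the original attribute on exit.
with mock.patch.object(Transaction, "save", return_value="mocked"):
    inside = Transaction().save()
after = Transaction().save()
```

With the leaky version, whether a later test passes depends on which tests ran before it, which is exactly the order-dependent behaviour that shows up as flakiness.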

In progress

- Plain views tests

- URL configuration tests

- Resources list

- Imports cleanup

Top comments (32)

Hi!

Just wanted to say a huge thanks for this article.

I'm new to pytest and in the middle of rewriting a project to DRF, in TDD. I've bookmarked your article and probably opened it close to 100 times since I started.

Thanks again!

Thank you very much! This is what I intended it for :)

I really feel more comfortable with pytest and django since I read it. Especially for the general organization of the project and the split between integration, units and e2e tests.

great work !

Edit: Just one small thing: e2e tests are not detected by default by pytest. It finds only the files starting with test_ or ending with _test, so I think you have overridden the python_files property without specifying it in the article.

Hi @sherlockcodes, still trying to understand what is happening in your viewset tests.

I can see methods within it with mocker but I cannot see where it is being set. Kindly advise.

Consider a scenario: I have a model viewset, and within it I have overridden the patch to update another model when a certain condition is met.

I don't think I understand exactly what you are asking. What is it that you can't see where it's being set?

What I am mocking is the viewset's get_queryset method, so as to block access to the database.

In your described scenario, what is it that you want to mock? If it is a method from the viewset, just replace the "get_queryset" part with the name of whatever method you are trying to mock.

I can see 'mocker' passed as a param, but no import nor config for pytest.

Because it's passed as a fixture to the test function. You don't need no imports. It's PyTest's ABC. If you make a basic pytest tutorial or go through this article there's no way you don't encounter fixtures. I encourage you to do a basic pytest tutorial and then go through this article. You should not have any doubts like this left. Hope I was helpful

100%

Hello Lucas, I just picked up DRF recently, and I found your article while looking for DRF testing tools! Thank you for writing such an in-depth article. I'm wondering if this is still the preferred approach over the inbuilt rest_framework.test, and why?

Thanks!

Hi Estee. You are questioning either the approach or the toolset. rest_framework.test is a toolset, and a very limited one. You can barely do anything I talked about in the article with it. You don't have the ease of the commands, nor the customizability of the test runner, nor the several handy plugins.

Oh i see! Thank you, that helps me in understanding better!

Hi, thanks for the article!

I have a question about the project structure:

If I understand it correctly your structure looks like this

I wonder if you could comment on a different approach where you put tests for each app inside the app, like a so:

Thank you

Hi Jan. You lose the ability to use pytest fixtures on a tree structure. Basically, with pytest, you can make a conftest.py file on a per-folder basis and add fixtures using your own criteria.

Let me explain myself. Let's suppose I want to use a fixture for all tests of an app (i.e. I often make an autouse fixture for mocking API calls in every app, and like to use another fixture to unmock it in the app that manages the utils with API calls, for integration tests). If I use the approach you mention, I'll lose this ability. I might of course be missing more stuff, but it's the first thing that came to my mind. You should still be able to configure pytest to activate autouse fixtures across different projects, but many use cases rely on this particular file structure, and you may have to find workarounds in the pytest configuration. If you are really interested in this, we can talk about different testing folder structures and their pros and cons.

Hey. First of all I would like to thank you for this amazing article. I tried to follow your concept, and when applying it to HyperlinkedModelSerializer testing I'm getting some issues. It would be really helpful if you could share the CurrencyFactory class code snippet. That was the missing piece of this article.

Hi Rishan, thanks for asking. Remember you can always make a working factory with model_bakery. Besides that, I unfortunately lost all my files regarding this project. Nevertheless it's the easiest possible factory to make. You would need to make a random string faker generator for each field, and limit the characters to 1 in for the currency symbol (i.e: "$"), 3 for the currency code (i.e: "usd"), and a bigger amount of characters allowed for the currency name (i.e: "US Dollar")

I liked your article!

Im inviting you to use my new package for rest framework called drf-api-action and write about it

which is designed to elevate your testing experience for (DRF) REST endpoints. this package empowers you to effortlessly test your REST endpoints as if they were conventional functions

github.com/Ori-Roza/drf-api-action

thanks!

Thanks Lucas Miguel, for such a work

It took me 1 month to cover this. I started from 0 and now feeling like Hero 😂. Worth reading. Also if you can come up with one writeup on Mockup and Factories that would be highly appreciated.

Thanks Again Lucas. Great Work.

Hi Himanshu. It means a lot to me! I'll add that to the backlog

Hi! Great article! I'm trying to write serializer tests for my code. When I call

assert serializer.is_valid(raise_exception=True)

my test_serialized_data asks for database connection.

But I don't want to do it because it is unit test.

Also I cannot mock is_valid method, because I'm testing it.

What should I do to avoid database connection?

That's great I was looking at a good article using Django and Pytest together.

Glad it's useful!

Hi Lucas,

I'm struggling to make this part work:

Where is ftd defined, or where is it coming from?

Thanks!

Hi thanks for you very useful post, I didn't get how can this snippet works, if FilledTransactionFactory.build() does not create database obj, t.id will be None. I missed something?

It does build an object, it just doesn't hit the database to store it. It's like instantiating a common python object

Thanks for sharing this useful tutorial and I hope you can continue this series.

Hello Lucas,

Not sure if I missed out, but is there a way to do an e2e(integration) test without a running database?

Regards,

Vasant Singh Sachdewa

Hey Vasant. Of course you could; it would imply mocking a lot of stuff (every unit involved), but if you read my article again you'll see there's no point in it. You would basically be missing the point, that is, keeping the e2e tests for sanity checks and having a test that exercises the whole code together.

Thanks Lucas, I really appreciate it

hi it would be nice if you provided the github link to your code.

Hi! There's no GH link. I made it for the post based on what I used in my company. If I make time for it I might attach one