Here is a “brief” tour inside AudioKit’s Synth One for developers.

A bit about AudioKit

There are quite a few developers working on both AudioKit and Synth One right now. AudioKit was founded by Aurelius Prochazka. It’s his drive that keeps AudioKit going strong along with other dedicated contributors.

The project combines code from other projects as well. The actual DSP crunching is via Paul Batchelor’s C library SoundPipe. Marcus Hobbs, creator of Wilsonic, worked on the DSP and the microtonal tuning panel. Audiobus creator Michael Tyson’s useful code from his retired Amazing Audio Engine is in there too. And, Apple’s FilterDemo peeks through the fence also.

How cool is open source? There are links to each down in Resources. At some point, looking at them will help you grok the whole thing.

You can even do sample playback with AudioKit. Shane Dunne’s new AKSampler code will be used in Matt Fecher’s upcoming AudioKit FM Player 2 app.

Synth One is not the only project built with AudioKit! Here’s a list of quite a few apps using AudioKit. There’s nothing stopping you from writing one too. Check out the getting started with AudioKit page.

Marcus Hobbs, one of AudioKit Synth One’s core developers, at Burning Man.

Introduction

Sometimes I look at my watch to see what project I’m working on. I’ve developed some self-defense maneuvers to keep my sanity. Well, ok, that’s gone. To keep track of things. Yeah, better.

One of the main things I do is to keep a notebook. Not a laptop; one of those things with paper in it. I do this for several reasons. One is to remember things. Another is to learn and understand things. The act of writing helps learning and retention enormously. Really. (One of my many degrees is in education, and I’ve taught quite a bit.) And finally, to keep track of what I tried. (Yep, that didn’t work. Let’s not do that again.) And besides, I like writing with a fountain pen. Try it sometime.

I’ve been banging on AudioKit’s SynthOne app to try to add an AUv3 extension to it. I’m not done, because it’s not as easy as you might think – especially since it was never intended to be an AUv3 extension because that did not exist when the project started.

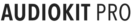

So, along the way, I got out my notebook and fountain pen and started drawing two kinds of UML (Unified Modeling Language) diagrams in particular. Class diagrams and sequence diagrams always help me to grok what is going on. So, the reason for this post: Why don’t I share my breadcrumbs through AudioKit and SynthOne? Maybe one of you will read this and then be able to develop the next great iOS synth app! Hey, it could happen.

There are two big codebases with which you need to become acquainted. SynthOne (well, duh), but also AudioKit because SynthOne, as you will see, uses quite a bit of AudioKit to get its work done – to the astonishment of no one. This is daunting because there is a lot there. So, “eins nach dem Andern” (one thing after another), Wozzeck.

Pressing a keyboard key

What’s the first use-case for the typical user of a synth app?

Right, banging on the keyboard to hear something.

So, let’s begin with the view you have as a user. You see a few views arranged vertically and a keyboard view at the bottom.

Let’s pick out a few of the classes involved in this initial view of the app.

Let’s get an overview with a UML class diagram of the first set of classes we’ll look at.

The Manager class (I wish it were named PanelManager or GUIManager because I forget it’s not in the DSP world) is a UIViewController that loads the panels on demand when the user presses the arrow buttons on the side. Actually it inherits from UpdatableViewController which contains code to deal with AU parameters, as do most of the view controllers in SynthOne.

The KeyboardView has a delegate that is called when the user touches or releases a key. The Manager happens to be the delegate.

The Manager contains a Sustainer, which in turn contains an AKPolyphonicNode – a class from AudioKit. AKSynthOne is a subclass of AKPolyphonicNode, and that is the node that the Sustainer contains.

What happens when you press a key? Of course, the KeyboardView gets the touch event, extracts the frequency and velocity data, then informs its delegate – the Manager. Then, ah never mind. Let’s see the sequence diagram!

As expected, the KeyboardView sends the delegate message noteOn (and of course, noteOff later on touchUpInside) to the Manager. The Manager then sends the data to the Sustainer which frobs a bit with it before sending it to AKSynthOne which is a Swift class that holds a reference to the actual audio unit, S1AudioUnit.
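If you’d rather read that chain as code than as a diagram, here is a minimal sketch in plain Swift. Every type name here (SketchKeyboardView, SketchManager, SketchSustainer, SketchSynth) is a hypothetical stand-in, not the real SynthOne or AudioKit class, and the pedal behavior in the sustainer is my assumption about what “frobs a bit” might mean. It only shows the shape of the delegation.

```swift
import Foundation

// Hypothetical stand-ins; none of these are the real SynthOne or AudioKit types.
enum SketchEvent: Equatable {
    case start(note: Int, velocity: Int, frequency: Double)
    case stop(note: Int)
}

protocol SketchKeyboardDelegate: AnyObject {
    func noteOn(note: Int, velocity: Int, frequency: Double)
    func noteOff(note: Int)
}

// Plays the role of AKSynthOne: it only records what it was asked to do.
final class SketchSynth {
    private(set) var events: [SketchEvent] = []
    func startNote(_ note: Int, velocity: Int, frequency: Double) {
        events.append(.start(note: note, velocity: velocity, frequency: frequency))
    }
    func stopNote(_ note: Int) { events.append(.stop(note: note)) }
}

// Plays the role of the Sustainer. Deferring note-offs while a pedal is down is an assumption.
final class SketchSustainer {
    let synth: SketchSynth
    var pedalDown = false
    private var deferredStops: Set<Int> = []
    init(synth: SketchSynth) { self.synth = synth }

    func play(note: Int, velocity: Int, frequency: Double) {
        deferredStops.remove(note)
        synth.startNote(note, velocity: velocity, frequency: frequency)
    }
    func stop(note: Int) {
        if pedalDown { deferredStops.insert(note) } else { synth.stopNote(note) }
    }
    func releasePedal() {
        pedalDown = false
        for note in deferredStops.sorted() { synth.stopNote(note) }
        deferredStops.removeAll()
    }
}

// Plays the role of the Manager: it is the keyboard's delegate and forwards to the sustainer.
final class SketchManager: SketchKeyboardDelegate {
    let sustainer: SketchSustainer
    init(sustainer: SketchSustainer) { self.sustainer = sustainer }
    func noteOn(note: Int, velocity: Int, frequency: Double) {
        sustainer.play(note: note, velocity: velocity, frequency: frequency)
    }
    func noteOff(note: Int) { sustainer.stop(note: note) }
}

// Plays the role of the KeyboardView: turns a touch into note data and tells its delegate.
final class SketchKeyboardView {
    weak var delegate: SketchKeyboardDelegate?
    func touchDown(note: Int, velocity: Int) {
        // Equal-temperament frequency with A4 (note 69) at 440 Hz.
        let frequency = 440.0 * pow(2.0, Double(note - 69) / 12.0)
        delegate?.noteOn(note: note, velocity: velocity, frequency: frequency)
    }
    func touchUp(note: Int) { delegate?.noteOff(note: note) }
}

let synth = SketchSynth()
let manager = SketchManager(sustainer: SketchSustainer(synth: synth))
let keyboard = SketchKeyboardView()
keyboard.delegate = manager
keyboard.touchDown(note: 69, velocity: 100)
keyboard.touchUp(note: 69)
```

The keyboard holds its delegate weakly, which is the usual Cocoa arrangement so the view and its controller do not keep each other alive.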

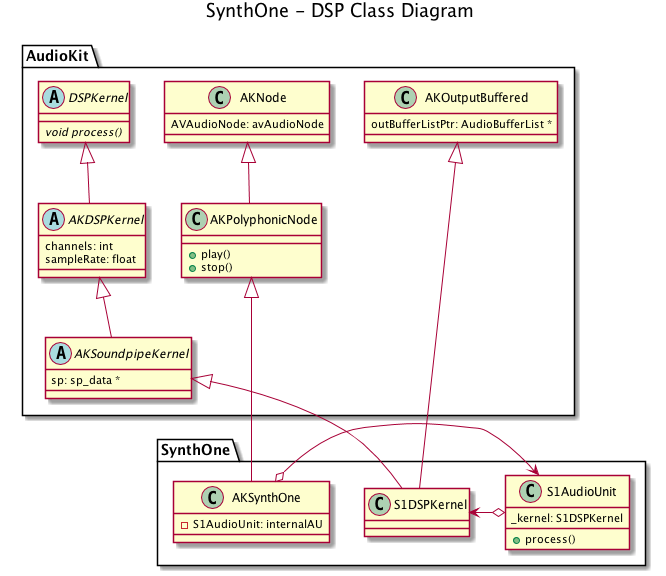

Huh, there’s a DSPKernel class. Yup, just as I discussed in my previous blog post, Writing an Audio Unit v3: Instrument, Apple’s FilterDemo contains a few very useful classes. The abstract DSPKernel C++ class is one of them. S1DSPKernel is a C++ class which inherits from DSPKernel. Yes, C++. I listed the reasons why Swift or Objective-C cannot be used in real time threads in that post if you’d like to review.

The FilterDemo implemented a state machine triggered by MIDI events. So does any synth to some extent. SynthOne does it with a C++ class named S1NoteState. This class keeps track of three states: on, off, and release. It does some setup with SoundPipe, the C library which does the actual DSP. I’m showing here just one thing – the frequency. Take a look and you’ll see quite a bit more.
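To make the three states concrete, here is a toy version of such a state machine. It is written in Swift purely for readability; the real S1NoteState is C++ and owns SoundPipe objects. The names SketchNoteStage and SketchNoteState, the amplitude field, and the linear fade in the release stage are all assumptions made for illustration.

```swift
// Hypothetical sketch of an on / off / release note state machine.
enum SketchNoteStage { case off, on, release }

struct SketchNoteState {
    private(set) var stage: SketchNoteStage = .off
    private(set) var frequency = 0.0
    private(set) var amplitude = 0.0
    // Assumed fade amount per call to run(); the real envelope is far richer.
    let releaseStep = 0.25

    // Note-on: remember the frequency and go to the "on" stage.
    mutating func startNote(frequency: Double) {
        self.frequency = frequency
        amplitude = 1.0
        stage = .on
    }

    // Note-off: a sounding note does not stop dead, it enters "release".
    mutating func stopNote() {
        if stage == .on { stage = .release }
    }

    // Called once per render pass: fade out while releasing, then switch off.
    mutating func run() {
        guard stage == .release else { return }
        amplitude = max(0.0, amplitude - releaseStep)
        if amplitude == 0.0 { stage = .off }
    }
}

var noteState = SketchNoteState()
noteState.startNote(frequency: 440.0)
noteState.stopNote()
```

The point of the separate release stage is that a note-off is a request, not an instant cut: the voice keeps rendering until its tail has died away.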

So, the end of this long chain has been reached and the S1NoteState object has its data in place.

But where’s the kaboom? There was supposed to be an earth-shattering kaboom!

Rendering

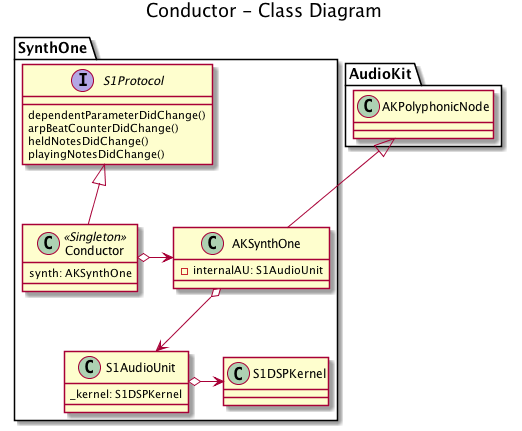

The S1NoteState object is ready now. Audio Units have a render block that the system calls on the real-time audio thread, once for every buffer of samples it needs. There needs to be something in the render block that reaches the S1NoteState’s run function.

Let’s see a UML sequence diagram.

The AUAudioUnit is S1AudioUnit (but the system sees just an AUAudioUnit). S1AudioUnit holds a reference to S1DSPKernel and just like FilterDemo in its render block, sets its buffers then calls the function to process events – cunningly named processWithEvents. The DSPKernel function then calls the abstract function process. In S1DSPKernel’s process, after a bit of frobnostication, the S1NoteState’s run function is called. It is here in the run function that the SoundPipe oscillators, filters, LFOs etc. are kicked in the butt and told to get to work, and so the output buffers are filled.
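Here is the same call chain as a toy you can run. Big caveat: the real kernel is C++ precisely because Swift does not belong on the render thread, so treat this Swift as a diagram in code form and nothing more. SketchKernel, SketchSynthKernel, and SketchVoice are hypothetical names, the events are reduced to a list of note-on frequencies, and a bare sine stands in for everything SoundPipe does.

```swift
import Foundation

// Stands in for S1NoteState: run() produces one sample per call.
struct SketchVoice {
    var frequency = 0.0
    private var phase = 0.0

    mutating func run(sampleRate: Double) -> Float {
        let sample = Float(sin(2.0 * Double.pi * phase))
        phase += frequency / sampleRate
        if phase >= 1.0 { phase -= 1.0 }
        return sample
    }
}

// Stands in for the abstract DSPKernel: handle events first, then ask the subclass to render.
class SketchKernel {
    func processWithEvents(noteOnFrequencies: [Double], frameCount: Int, output: inout [Float]) {
        for frequency in noteOnFrequencies { handleNoteOn(frequency: frequency) }
        process(frameCount: frameCount, output: &output)
    }
    func handleNoteOn(frequency: Double) {}
    func process(frameCount: Int, output: inout [Float]) {
        fatalError("subclasses must override process")
    }
}

// Stands in for S1DSPKernel: its process() reaches the voice's run() and fills the buffer.
final class SketchSynthKernel: SketchKernel {
    let sampleRate = 44_100.0
    private var voice = SketchVoice()

    override func handleNoteOn(frequency: Double) { voice.frequency = frequency }

    override func process(frameCount: Int, output: inout [Float]) {
        for frame in 0..<frameCount {
            output[frame] = voice.run(sampleRate: sampleRate)
        }
    }
}

// What the render block boils down to: hand the kernel a buffer and let it fill it.
var buffer = [Float](repeating: 0, count: 64)
let kernel = SketchSynthKernel()
kernel.processWithEvents(noteOnFrequencies: [441.0], frameCount: buffer.count, output: &buffer)
```

The base class owns the “events first, then render” ordering, and the subclass only supplies the actual sound. That split is what lets several kernels share the same plumbing.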

The Kernel

I did mention that S1DSPKernel inherits from DSPKernel. Well, indirectly. The whole story is in this class diagram.

As you can see, there are a few classes in between from AudioKit that each do a few useful things like maintaining the number of channels.

AKSynthOne is an AudioKit “node”, so it can be connected to a “graph” like any other node. Underneath is AVFoundation’s AVAudioNode. The AudioKit class maintains an AVAudioEngine and the AKNodes’ AVAudioNodes are connected to it. Usually.

But why?

Underneath

Let’s look at one of AudioKit’s playground examples. The first one – “Introduction and Hello World”.

```swift
import AudioKitPlaygrounds
import AudioKit

let oscillator = AKOscillator()

AudioKit.output = oscillator
try AudioKit.start()

oscillator.start()

sleep(1)
```

What does setting AudioKit.output to the oscillator do? Well, of course, the output of the oscillator goes to AudioKit’s output, duh. But what is underneath?

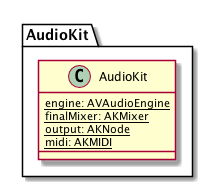

AudioKit has a static variable that points to an AVAudioEngine. It also has a static variable that points to an AKMixer. Actually, AudioKit is a de facto singleton since it has just static functions and variables.

The didSet observer on the output variable connects the output to the mixer, and then the mixer’s AVAudioNode to the engine’s output AVAudioNode.

```swift
output?.connect(to: finalMixer)
engine.connect(finalMixer.avAudioNode, to: engine.outputNode)
```
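If the combination of “all static” and “didSet” is unfamiliar, here is the pattern on its own, with no audio involved. SketchNode and SketchAudioKit are hypothetical names, and “connecting” here just records a name in an array. It is a sketch of the pattern, not of AudioKit’s actual implementation.

```swift
// Hypothetical node that only remembers what it was connected to.
final class SketchNode {
    let name: String
    private(set) var connections: [String] = []
    init(name: String) { self.name = name }
    func connect(to other: SketchNode) { connections.append(other.name) }
}

// A caseless enum with only static members behaves like a singleton without an instance.
enum SketchAudioKit {
    static let finalMixer = SketchNode(name: "finalMixer")
    static let engineOutput = SketchNode(name: "engineOutput")

    // Assigning the output is enough to wire the chain: node -> mixer -> engine output.
    static var output: SketchNode? {
        didSet {
            output?.connect(to: finalMixer)
            finalMixer.connect(to: engineOutput)
        }
    }
}

let sketchOscillator = SketchNode(name: "oscillator")
SketchAudioKit.output = sketchOscillator
```

The nice part of doing this in an observer is that the caller writes one assignment and never has to know that a mixer sits in between.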

So now, the AudioKit node that we created (AKOscillator) can be played through an AVAudioEngine. The call to AudioKit.start() calls start on the internal AVAudioEngine.

If you look inside the init functions of AKNodes – here is an excerpt from AKOscillator’s init – you will see that they contain AVAudioNodes which in turn contain AUAudioUnits.

```swift
AVAudioUnit._instantiate(with: _Self.ComponentDescription) { [weak self] avAudioUnit in
    guard let strongSelf = self else {
        AKLog("Error: self is nil")
        return
    }
    strongSelf.avAudioNode = avAudioUnit
    strongSelf.internalAU = avAudioUnit.auAudioUnit as? AKAudioUnitType
    strongSelf.internalAU?.setupWaveform(Int32(waveform.count))
    for (i, sample) in waveform.enumerated() {
        strongSelf.internalAU?.setWaveformValue(sample, at: UInt32(i))
    }
}
```

That final statement in the example, oscillator.start(), calls start on the audio unit internalAU.

Remember the “Pressing a keyboard key” sequence from above? AKSynthOne sends the startNote to an audio unit – S1AudioUnit. Where is that audio unit hosted? Yeah! In the AVAudioEngine!

If you poke through the classes in AudioKit, you will find quite a few audio units such as AKMorphingOscillatorAudioUnit. Each one has a C++ kernel that uses SoundPipe to do the DSP.

The Conductor

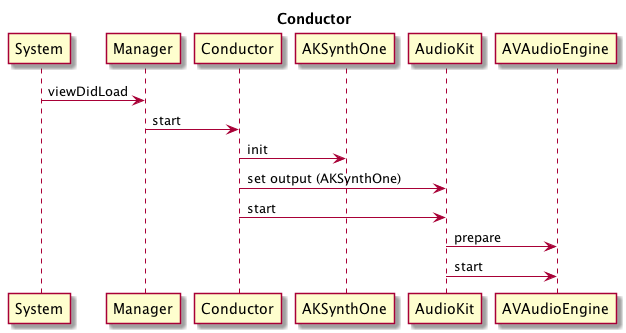

So, where does this happen in SynthOne? Ok, you read the heading. In the Conductor class.

The Conductor is a singleton that does many things – such as binding UI controls to AU parameters – and the engine setup is just one of them.
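The binding part is worth a small sketch, since it is the bit most people end up reimplementing. This is not the real Conductor. SketchConductor, SketchKnob, the string parameter keys, and the closure-based bind function are all assumptions of mine; the real class works with AU parameters and real UI controls.

```swift
// Hypothetical control that just stores the value it should display.
final class SketchKnob {
    var position = 0.0
}

// Hypothetical singleton that keeps parameter values and tells bound controls about changes.
final class SketchConductor {
    static let shared = SketchConductor()
    private init() {}

    private(set) var parameters: [String: Double] = [:]
    private var bindings: [String: [(Double) -> Void]] = [:]

    // Register a callback to run whenever the named parameter changes.
    func bind(_ parameter: String, onChange: @escaping (Double) -> Void) {
        bindings[parameter, default: []].append(onChange)
    }

    // Store the new value, then update every control bound to that parameter.
    func setValue(_ value: Double, for parameter: String) {
        parameters[parameter] = value
        bindings[parameter]?.forEach { $0(value) }
    }
}

let cutoffKnob = SketchKnob()
SketchConductor.shared.bind("cutoff") { cutoffKnob.position = $0 }
SketchConductor.shared.setValue(0.5, for: "cutoff")
```

Routing every change through one object means a knob on one panel and a slider on another can stay in step without knowing about each other.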

Summary

I said “brief tour”. I lied. 🙂

This is not everything about SynthOne of course. But, I hope you have a better idea of what’s going on inside.

By the way, that AVAudioEngine stuff? It’s not in the AUv3 extension because in those, the host is an app like AUM or Cubasis (non-exhaustive list; don’t sue me) and it’s not needed.