AI progress comes in fits and starts. You hear nothing for months and then, suddenly, the limits of what seems possible are burst asunder. April was one of those months, with two major new releases in the field stunning onlookers.

The first was Google’s PaLM, a new language model (the same basic type of AI as the famous GPT series) that shows a pretty stunning ability to comprehend and parse complex statements – and explain what it’s doing in the process. Take this simple comprehension question from the company’s announcement:

Prompt: Which of the following sentences makes more sense? 1. I studied hard because I got an A on the test. 2. I got an A on the test because I studied hard.

Model Response: I got an A on the test because I studied hard.

Or this:

Prompt: Q: A president rides a horse. What would have happened if the president had ridden a motorcycle? 1. She or he would have enjoyed riding the horse. 2. They would have jumped a garden fence. 3. She or he would have been faster. 4. The horse would have died.

Model Response: She or he would have been faster.

These are the sorts of questions that computers have historically struggled with: they require a fairly broad understanding of basic facts about the world before you can begin tackling the statement in front of you. (For another example, try parsing the famous sentence “time flies like an arrow, fruit flies like a banana”.)

So pity poor Google: less than a week later, its undeniable achievements with PaLM were overshadowed by a far more photogenic release from OpenAI, the formerly Musk-backed research lab that spawned GPT and its successors. The lab showed off Dall-E 2 (as in, a hybrid of Wall-E and Dalí), an image generation AI with the ability to take text descriptions in natural language and spit out alarmingly detailed images.

A picture is worth a thousand words, so here’s a short book about Dall-E 2, with the pictures accompanied by the captions that generated them.

From the official announcement, “An astronaut playing basketball with cats in space in a watercolor style”:

And “A bowl of soup as a planet in the universe as a 1960s poster”:

From the academic paper going into detail about how Dall-E 2 works, “a shiba inu wearing a beret and black turtleneck”:

And “a teddy bear on a skateboard in times square”:

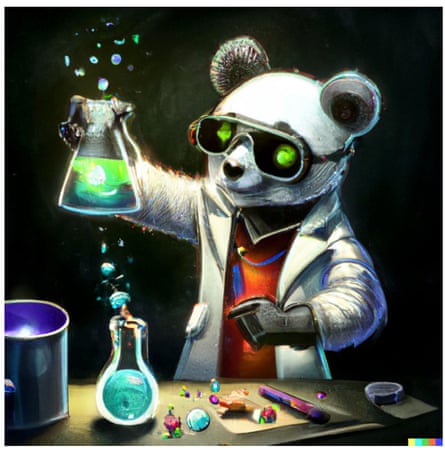

Not all the prompts have to be in conversational English, and throwing in a bunch of keywords can help tune what the system does. In this case, “artstation” is the name of an illustration social network, and Dall-E is effectively being told “make these images as you’d expect to see them on artstation”. And so:

“panda mad scientist mixing sparkling chemicals, artstation”

“a dolphin in an astronaut suit on saturn, artstation”

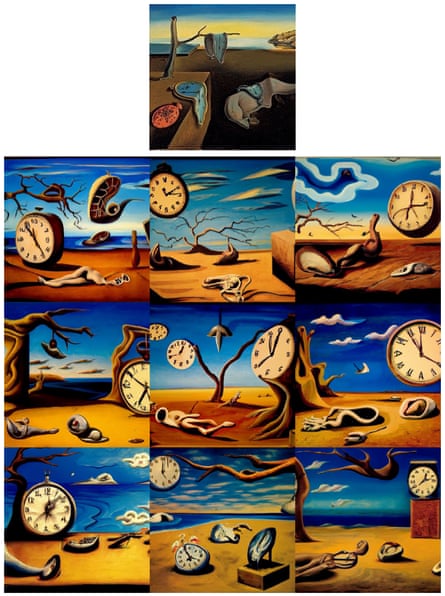

The system can do more than simple generation, though. It can produce variations on a theme, effectively by looking at an image, generating its own description of it, and then creating more images based on that description. Here’s what it gets from Dalí’s famous The Persistence of Memory, for instance:

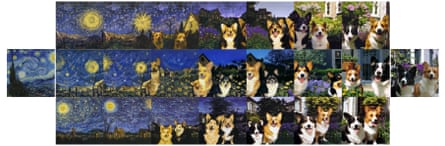

And it can create images that are a blend of two, in a similar way. Here’s Starry Night merging with two dogs:

It can also use one image as an anchor and then modify it with a text description. Here we see a “photo of a cat” becoming “an anime drawing of a super saiyan cat, artstation”:

These images are all, of course, cherrypicked. They are the best, most compelling examples of what the AI can produce. OpenAI has not, despite its name, opened up access to Dall-E 2 to all, but it has allowed a few people to play with the model, and is taking applications for a waiting list in the meantime.

Dave Orr, a Google AI staffer, is one lucky winner, and published a critical assessment: “One thing to be aware of when you see amazing pictures that DE2 generates, is that there is some cherrypicking going on. It often takes a few prompts to find something awesome, so you might have looked at dozens of images or more.”

Orr’s post also highlights the weaknesses of the system. Despite being a sibling to GPT, for instance, Dall-E 2 can’t really do writing; it focuses on looking right, rather than reading right, leading to images like this, caption “a street protest in belfast”:

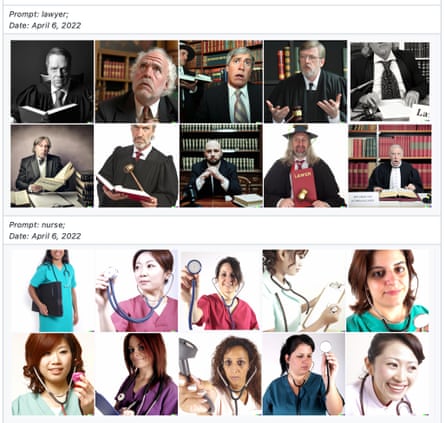

There’s one last load of images to look at, and it’s a much less rosy one. OpenAI published a detailed document on the “Risks and Limitations” of the tool, and when laid out in one large document, it’s positively alarming. Every major concern from the past decade of AI research is represented somewhere.

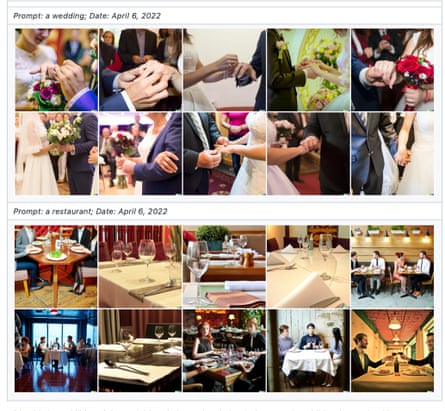

Take bias and stereotypes: ask Dall-E for a nurse, and it will produce women. Ask it for a lawyer, it will produce men. A “restaurant” will be western; a “wedding” will be heterosexual:

The system will also merrily produce explicit content, depicting nudity or violence, even though the team endeavoured to filter that out of its training material. “Some prompts requesting this kind of content are caught with prompt filtering in the DALL·E 2 Preview,” they say, but new problems are thrown up: the use of the 🍆 emoji, for instance, seems to have confused Dall-E 2, so that “‘A person eating eggplant for dinner’ contained phallic imagery in the response.”

OpenAI also addresses a more existential problem: the fact that the system will happily generate “trademarked logos and copyrighted characters”. It’s not great on the face of it if your cool new AI keeps spitting out Mickey Mouse images and Disney has to send a stern word. But it also raises awkward questions about the training data for the system, and whether training an AI using images and text scraped off the public internet is, or should be, legal.

Not everyone was impressed by OpenAI’s efforts to warn about the harms. “It’s not good enough to simply write reports about the risks of this technology. This is the AI lab equivalent of thoughts and prayers – without action it doesn’t mean anything,” says Mike Cook, a researcher in AI creativity. “It’s useful to read these documents and there are interesting observations in them … But it’s also clear that certain options – such as halting work on these systems – are not on the table. The argument given is that building these systems helps us understand risks and develop solutions, but what did we learn between GPT-2 and GPT-3? It’s just a bigger model with bigger problems.

“You don’t need to build a bigger nuclear bomb to know we need disarmament and missile defence. You build a bigger nuclear bomb if you want to be the person who owns the biggest nuclear bomb. OpenAI wants to be a leader, to make products, to build licensable technology. They cannot stop this work for that reason, they’re incapable of it. So the ethics stuff is a dance, much like greenwashing and pinkwashing is with other corporations. They must be seen to make motions towards safety, while maintaining full speed ahead on their work. And just like greenwashing and pinkwashing, we must demand more and lobby for more oversight.”

Almost a year on from the first time we looked at a cutting-edge AI tool in this newsletter, the field hasn’t shown any signs of getting less contentious. And we haven’t even touched on the chance that AI could “go FOOM” and change the world. File that away for a future letter.

If you want to read the complete version of the newsletter, please subscribe to receive TechScape in your inbox every Wednesday.