TL;WR

Welcome to Part 2 of the All About Automated Tests series. This one is going to be a little more pleasant than Part 1, as I try to demonstrate some of the obvious and not-so-obvious benefits of adding value to a product via automated tests.

Benefit 1 - Modularisation

In my opinion, the greatest benefit of introducing automated tests, particularly unit tests, is the modularisation of code, mostly because it generates other benefits in turn. More modular code might only be a by-product, but it's fantastic nonetheless.

Typically, in no/low test codebases you tend to see a lot of code which looks like this:

    public class OrderRequestService
    {
        private readonly IDatabaseContext _databaseContext;

        // The constructor name must match the class name.
        public OrderRequestService(IDatabaseContext databaseContext)
        {
            _databaseContext = databaseContext;
        }

        public Order CreateOrder(CreateOrderRequest createOrderRequest)
        {
            // Look the product up by its own id (synchronously, as the method is not async).
            var product = _databaseContext.Product.SingleOrDefault(x => x.Id == createOrderRequest.ProductId);

            if (product == null)
            {
                throw new ProductNotFoundException(createOrderRequest.ProductId);
            }

            if (product.StockCount < 1)
            {
                throw new ProductNotInStockException(createOrderRequest.ProductId);
            }

            var suppliers = _databaseContext.Supplier.Where(x => x.ProductId == createOrderRequest.ProductId);

            // Pick a single supplier; this throws if no supplier can deliver in time.
            var supplierWithShortestDeliveryTime = suppliers.First(x => x.CanBeSuppliedWithinSevenDays(DateTime.UtcNow));

            if (!supplierWithShortestDeliveryTime.IsActiveSupplier)
            {
                throw new SupplierNotActiveException(supplierWithShortestDeliveryTime.Id);
            }

            // And then about 20 other procedural statements

            var order = new Order()
            {
                Quantity = createOrderRequest.Quantity,
                ProductId = createOrderRequest.ProductId
            };

            return order;
        }
    }

I know this example is written in C# (therefore OOP) but this is almost completely language-agnostic. This seems harmless enough, but the more cognitive complexity you add to it, the harder it becomes to maintain and change existing functionality. It becomes even worse when you need to add tests retrospectively - your tests will end up being a bloated mess of setup code.

The sensible thing to do in this example, in order to make sure your tests are still readable, maintainable and quick to execute, is to separate the concerns out. Obtaining a product could be refactored out into a ProductAvailabilityService class, and likewise the interactions involving suppliers into a SupplierSelectionService class. This would then enable testing in isolation and, perhaps even more importantly, give you units of reusable code which would be immediately available to other parts of the application.
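As a rough sketch of that separation, assuming simple in-memory collections stand in for the database context: only the two service class names come from the paragraph above, while the Product and Supplier shapes, the method names and the DeliveryDays property are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified stand-ins for the entities in the earlier example.
public record Product(int Id, int StockCount);
public record Supplier(int Id, int ProductId, bool IsActiveSupplier, int DeliveryDays);

public class ProductNotFoundException : Exception
{
    public ProductNotFoundException(int productId) : base($"Product {productId} was not found.") { }
}

public class ProductNotInStockException : Exception
{
    public ProductNotInStockException(int productId) : base($"Product {productId} is not in stock.") { }
}

// Owns the "does this product exist and is it in stock?" concern.
public class ProductAvailabilityService
{
    private readonly IEnumerable<Product> _products;

    public ProductAvailabilityService(IEnumerable<Product> products) => _products = products;

    public Product GetAvailableProduct(int productId)
    {
        var product = _products.SingleOrDefault(x => x.Id == productId);
        if (product == null) throw new ProductNotFoundException(productId);
        if (product.StockCount < 1) throw new ProductNotInStockException(productId);
        return product;
    }
}

// Owns the "which supplier do we use?" concern.
public class SupplierSelectionService
{
    private readonly IEnumerable<Supplier> _suppliers;

    public SupplierSelectionService(IEnumerable<Supplier> suppliers) => _suppliers = suppliers;

    // Returns the active supplier with the shortest delivery time, or null if there is none.
    public Supplier? SelectSupplier(int productId) =>
        _suppliers
            .Where(x => x.ProductId == productId && x.IsActiveSupplier)
            .OrderBy(x => x.DeliveryDays)
            .FirstOrDefault();
}
```

Each class can now be tested with a handful of in-memory records, and the order service itself shrinks to orchestrating the two.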

The other cardinal sin within this example is the reliance on non-deterministic data (DateTime.UtcNow). This is ridiculously common within the industry and the cause of so many bugs in production, specifically when you start to deploy into different time zones. Refactoring out the reliance on environmental values such as the system clock not only makes your code more modular but also allows for specific, targeted testing of multiple cases.
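One common way to remove that reliance is to pass "now" in through an abstraction. The following is a minimal sketch under assumptions: IClock, SystemClock, FixedClock and DeliveryWindowChecker are hypothetical names, and the seven-day rule is only a guess at what CanBeSuppliedWithinSevenDays might mean.

```csharp
using System;

// Hypothetical abstraction over "now"; not part of the original example.
public interface IClock
{
    DateTime UtcNow { get; }
}

// Production implementation: defers to the real system clock.
public class SystemClock : IClock
{
    public DateTime UtcNow => DateTime.UtcNow;
}

// Test implementation: always returns the instant it was given.
public class FixedClock : IClock
{
    public FixedClock(DateTime utcNow) => UtcNow = utcNow;

    public DateTime UtcNow { get; }
}

public class DeliveryWindowChecker
{
    private readonly IClock _clock;

    public DeliveryWindowChecker(IClock clock) => _clock = clock;

    // True when the delivery date is not in the past and is at most seven days after "now".
    public bool CanBeSuppliedWithinSevenDays(DateTime deliveryDateUtc)
    {
        var now = _clock.UtcNow;
        return deliveryDateUtc >= now && deliveryDateUtc <= now.AddDays(7);
    }
}
```

With a FixedClock, a test can pin "now" to any instant and check the exact boundaries (seven days to the tick, one tick over, a date in the past) without waiting for the calendar to cooperate. Recent .NET versions also ship a built-in TimeProvider type that can play the same role.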

Although these are basic programming principles (SOLID, in this instance) which you should be following with or without tests, I find that adherence to them often comes as a by-product of writing code which is easily testable. It feels almost automatic, like you get better design by default, for free.

Benefit 2 - Context Switching Damage Management

This never, ever gets mentioned and is closely related to Benefit 1, but it makes complete sense if you think about it. If your code is more modular and/or you have a test suite as a safety mat, you generally shouldn't suffer too much from the damage of context switching (I'm not encouraging context switching at all, but it's a sad reality).

If you're making changes to an untested 400-line mega-function with tons and tons of return statements (I am not advocating this either) and you get that virtual tap on the shoulder or, even worse, a screenshot with absolutely no context on Slack/Teams, what is the likelihood that you're going to make a mistake in that function? Or forget something? I'd say it's definitely quite high, and I've seen it happen countless times. Is this going to be the same in a small, well-tested function or component? Probably not, meaning that when you do resume work you will be able to enter "the zone" quicker. Not only that, you'll have the cosy blanket of a failing test if you have made a mistake.

Benefit 3 - Intent Is Clear

Typically, well-written tests accurately describe the intent of the code, e.g. (typical unit test naming syntax):

ProcessOrder_WhenTheProductIsInStock_ThenOrderIsSubmitted

and

ProcessOrder_WhenTheProductIsOutOfStock_ThenProductOutOfStockExceptionIsThrown

or even (Gherkin-style):

Given: There is a product in stock

When: I order the product

Then: An order is submitted

You can clearly determine what is being tested in each of these scenarios; there's no real room for ambiguity. The behaviour is very well defined, so in the event of one of these tests failing, the maintainer will know that they've broken something. This, for me, is the best form of documentation and a thousand times better than auto-generated code comments or needless comments such as:

// Opens the filestream

var fileStream = new FileStream("some_file.txt", FileMode.Open);

There is a caveat to this benefit of course - it all depends on your quality of tests and specifically the naming of the test. The intent of the test should be clear - not forgetting you're testing outcomes not implementation details.
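To make that concrete, here is a small sketch of tests whose names state the intent and whose bodies assert only on the outcome. OrderProcessor is a hypothetical, deliberately tiny class, and the tests are written as plain methods without a framework to keep the example self-contained; in practice you would use something like xUnit or NUnit.

```csharp
using System;

public class ProductOutOfStockException : Exception { }

// Hypothetical processor used only to give the tests something to exercise.
public class OrderProcessor
{
    // Returns true when the order was submitted; throws when nothing is in stock.
    public bool ProcessOrder(int stockCount)
    {
        if (stockCount < 1) throw new ProductOutOfStockException();
        return true;
    }
}

public static class OrderProcessorTests
{
    public static void ProcessOrder_WhenTheProductIsInStock_ThenOrderIsSubmitted()
    {
        var submitted = new OrderProcessor().ProcessOrder(stockCount: 3);

        if (!submitted) throw new Exception("Expected the order to be submitted.");
    }

    public static void ProcessOrder_WhenTheProductIsOutOfStock_ThenProductOutOfStockExceptionIsThrown()
    {
        try
        {
            new OrderProcessor().ProcessOrder(stockCount: 0);
        }
        catch (ProductOutOfStockException)
        {
            return; // The expected outcome.
        }

        throw new Exception("Expected ProductOutOfStockException to be thrown.");
    }
}
```

Neither test knows how ProcessOrder reaches its result, so the internals can change freely as long as the named behaviour still holds.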

Benefit 4 - Bug/Regression Reduction

It's the most obvious benefit, but you will have far fewer bugs as a result of maintaining a mature test suite. Even taking the smallest type of test you can write (a unit test), the highest real coverage I've achieved was about 92-94% of an entire codebase, purely from unit tests. This is anecdotal, but when I joined said company the coverage was 0% and there was an almost daily meeting to discuss bugs, so you can probably say there were on average 5-10 bugs per week. Once around 80% coverage was achieved, that dropped to around 0-1 a month. You will quickly discover, as I did, that the biggest protection when modifying functionality is those tests running either locally or on build - when they were red, I knew I'd introduced a regression bug.

For full disclosure, this did go hand in hand with many process changes, including the introduction of a Scrum-like methodology, proper pull request review procedures and manual testing. But from first-hand experience, in the many instances in which we modified functionality in some way, we had a failing test as a result.

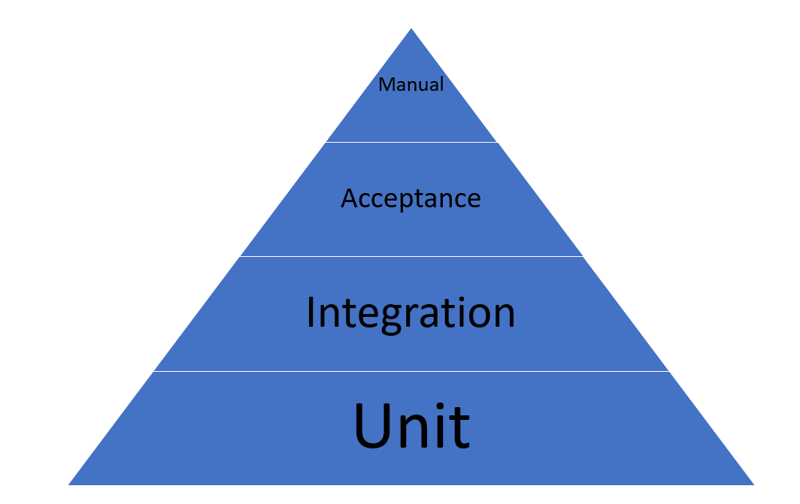

One very recent example of bug prevention occurred when I was implementing a password complexity function required as a result of a penetration test. Unfortunately, like many specifications, there was a missing requirement which had to be added retrospectively. There was quite a massive regex which checked various conditions of the specified password, and I had to add a requirement for sequential characters. I made my changes to the regex ("Well, that was easy to add," I said) and a build was started as a result of my PR. Lo and behold, I'd broken a test by making the regex more permissive, thus breaking the password requirements from the penetration test. This is a small example, but it's applicable to every single test type on the aforementioned test pyramid.
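The actual regex from that project isn't reproduced here, but the shape of the safety net can be sketched. Everything below is an assumption made for illustration: the rules (8+ characters, one lowercase letter, one uppercase letter, one digit, no run of three ascending characters), the PasswordPolicy class and the test names.

```csharp
using System;
using System.Text.RegularExpressions;

public static class PasswordPolicy
{
    // Assumed rules: 8+ characters with at least one lowercase letter,
    // one uppercase letter and one digit.
    private static readonly Regex Complexity =
        new Regex(@"^(?=.*[a-z])(?=.*[A-Z])(?=.*\d).{8,}$");

    public static bool IsValid(string password) =>
        Complexity.IsMatch(password) && !HasSequentialRun(password);

    // True when three consecutive characters each ascend by one, e.g. "abc" or "123".
    private static bool HasSequentialRun(string password)
    {
        for (var i = 0; i + 2 < password.Length; i++)
        {
            if (password[i + 1] == password[i] + 1 && password[i + 2] == password[i] + 2)
            {
                return true;
            }
        }

        return false;
    }
}

// Each test pins one existing requirement, so loosening the regex by accident turns a test red.
public static class PasswordPolicyTests
{
    public static void IsValid_WhenAllRequirementsAreMet_ThenPasswordIsAccepted() =>
        Expect(PasswordPolicy.IsValid("Tr0ub4dor9"), true);

    public static void IsValid_WhenAnUppercaseLetterIsMissing_ThenPasswordIsRejected() =>
        Expect(PasswordPolicy.IsValid("tr0ub4dor9"), false);

    public static void IsValid_WhenThePasswordContainsSequentialCharacters_ThenPasswordIsRejected() =>
        Expect(PasswordPolicy.IsValid("Xabc9Qw7z"), false);

    private static void Expect(bool actual, bool expected)
    {
        if (actual != expected) throw new Exception($"Expected {expected} but got {actual}.");
    }
}
```

Adding the sequential-character rule is the new work; the other two tests are what catch an accidental loosening of the existing requirements along the way.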

Benefit 5 - Refactoring Can Be Made Much Easier

If you're not refactoring code, either as part of a change or to address tech debt, then you'll quickly start to experience both product fragility (a small change in one area breaks multiple other areas of the application) and increased development costs from having to address multiple areas for one change (...well, the ones you remember...for the ones you don't, it's yet another bug).

In terms of refactoring with testing this is a big "can" because if you are sensible about how you write your tests (e.g. you test outcomes not implementations) then refactoring the internals of functions/classes/services should be very easy to do. I learned a very valuable lesson recently in which I kept having to go back whenever I refactored a class to change the unit tests. This is a sign that your unit tests are too fragile and also a sign that the class is too complex. Breaking up the class in this instance and testing outcomes not implementations will make refactoring a breeze. If you find yourself constantly having to change tests as the result of refactoring (NOT changing requirements) then you've probably identified a code smell.

I've often found that the likelihood that a test needs to be changed actually lowers the more you climb the pyramid (fewer changes for acceptance/E2E tests and the most changes for unit tests).

Benefit 6 - Execution Before Deployment

In a mature development environment, you should ideally use automated tests as a quality gate for shipping software. This should run on a per pull request basis whenever any change is going to be introduced into the codebase. It's not really relevant to this article, but perhaps you have a build and deploy pipeline which looks similar to this:

1. Test locally

2. Test on build server on pull request

3. Test on build server when merged into the Development environment

4. Test on build server when merged into the UAT environment

5. Test on build server when merged into the Pre-Prod environment

6. Manual tests by QAs

7. Deployed to production

8. Customer reports bug

Within the above process there are 5 separate stages in which code is executed via tests before it reaches production, 4 of them being machine-agnostic builds. This offers quite a bit of protection from the introduction of bugs into the codebase.

The other obvious benefit is how quickly you can ascertain whether your change has broken anything. For unit tests that will be Stage 1, which is really early in the pipeline. It's also not out of the question to be able to see failing integration or E2E tests at this stage, depending on your system, but if not they will be caught in Stage 2, 3 or 4.

Consider how this would play out in the same pipeline above without any automated testing:

1. Manual functional volatile test locally (potentially)

2. Build on pull request

3. Build when merged into the Development environment

4. Build when merged into the UAT environment

5. Build when merged into the Pre-Prod environment

6. Manual tests by QAs (potentially missing the bug)

7. Deployed to production

8. Customer reports bug

The best possible outcome is at Stage 1: the author of the change catches a bug because they just happened to have functionally tested it locally (noting that this is a volatile test; I doubt it will be functionally tested again other than at the time of modification).

The next possible stage is Stage 6. Using a QA to test something an automated test could have highlighted is a complete waste of their time, and then of your time as a developer if you have to go and fix it.

The worst stage is Stage 8. Using your customers as a replacement for automated tests is one of the worst things you can do and will result in product alienation and churn.

Summary

These are just some of the benefits I've experienced first-hand when introducing automated tests of any kind into an SDLC. I am always keen to add arrows to my unit testing quiver, so if there are any you can think of, please do let me know.
