Docker security, security monitoring and security tools are becoming hot topics in the modern IT world as the early-adoption fever turns into a mature ecosystem. Docker security is an unavoidable subject when we plan to change how we architect our infrastructure.

Docker comes bundled with some neat security safeguards by default:

- Docker containers are minimal: one or just a few running processes and only the strictly required software. Less software means a smaller probability of being affected by a vulnerability.

- Docker containers are task-specific: what should run in your containers is defined up front, including the path of the data directories, the required open ports, daemon configurations, mount points, etc. Any security-related anomaly is easier to detect than in multi-purpose systems. This design principle goes hand in hand with the microservices approach, greatly reducing the attack surface.

- Docker containers are isolated: both from the host system and from other containers, thanks to Linux kernel resource isolation features such as cgroups and namespaces. There is a notable pitfall here: the kernel itself is shared between the host and the containers. We will address that later on.

- Docker containers are reproducible: thanks to their declarative build systems, any admin can easily inspect how a container is built and fully understand every step. It's very unlikely that you end up with a legacy, patched-up system nobody really wants to configure from scratch again (does this ring a bell? ;) ).

There are, however, some specific parts of Docker-based architectures that are more prone to attacks. In this article we are going to cover 7 fundamental Docker security vulnerabilities and threats.

Each section will be divided into:

- Threat description: Attack vector and why it affects containers in particular.

- Docker security best practices: What you can do to prevent this kind of security threat.

- Proof of Concept Example(s): A simple but easily reproducible exercise to get some firsthand practice.

Docker vulnerabilities and threats to battle

Docker host and kernel security

Docker container breakout

Container image authenticity

Container resource abuse

Docker security vulnerabilities present in the static image

Docker credentials and secrets

Docker runtime security monitoring

Docker host and kernel security

Description: If an attacker compromises your host system, container isolation and security safeguards won't make much of a difference. Moreover, containers run on top of the host kernel by design. This is very efficient for reasons you probably already know, but from a security point of view it is a risk that needs to be mitigated: a kernel vulnerability exploited from inside one container affects the host and every other container on it.

Best Practices: How to “secure a Linux host” is a huge topic with plenty of literature available. If we just focus on the Docker context:

- Make sure your host and Docker engine configuration is secure (restricted and authenticated access, encrypted communication, etc.). We recommend using the Docker Bench for Security audit tool to check configuration best practices.

- Keep your base system reasonably up to date and subscribe to security newsfeeds for the operating system and any software you install on top, especially if it comes from third-party repositories, like the container orchestration platform you installed.

- Using minimal, container-centric host systems like CoreOS, Red Hat Atomic, RancherOS, etc., will reduce your attack surface and can bring helpful new features, like running system services in containers as well.

- You can enforce Mandatory Access Control (AppArmor, SELinux) and system call filtering (Seccomp) at the kernel level to prevent undesired operations, both on the host and in the containers.

Examples:

Seccomp lets you filter which actions the container can perform, particularly system calls. Think of it as a firewall for the kernel's system call interface.

Some operations are already blocked by Docker's defaults (the default Seccomp profile together with the default set of capabilities). Try this:

# docker run -it alpine sh

/ # whoami

root

/ # mount /dev/sda1 /tmp

mount: permission denied (are you root?)

Or:

/ # swapoff -a

swapoff: /dev/sda2: Operation not permitted

You can create custom Seccomp profiles for your container, for example disabling calls to chmod.

Let’s retrieve the default Docker Seccomp profile from:

https://raw.githubusercontent.com/moby/moby/master/profiles/seccomp/default.json

Editing the file, you will see a whitelist of allowed syscalls (~line 52); remove chmod, fchmod and fchmodat from it.
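If you prefer to script this edit rather than do it by hand, the Python sketch below removes the given syscalls from every rule whose action is SCMP_ACT_ALLOW. It assumes the layout of recent moby profiles, where each rule lists its syscalls under a `names` key; it also handles the older one-`name`-per-rule layout, but check the structure of the file you actually downloaded. The script name `strip_seccomp.py` is hypothetical.

```python
import json
import sys

# Syscalls removed from the allowlist, matching the manual edit described above.
BLOCKED = {"chmod", "fchmod", "fchmodat"}


def strip_syscalls(profile: dict, blocked: set) -> dict:
    """Return a copy of `profile` with `blocked` removed from every allow rule.

    Assumes rules shaped like {"names": [...], "action": "SCMP_ACT_ALLOW"}
    (recent moby layout) or {"name": "...", "action": ...} (older layout).
    Allow rules left without any syscall are dropped.
    """
    kept = []
    for rule in profile.get("syscalls", []):
        if rule.get("action") != "SCMP_ACT_ALLOW":
            kept.append(rule)  # leave errno/deny rules untouched
        elif "names" in rule:
            names = [n for n in rule["names"] if n not in blocked]
            if names:
                kept.append({**rule, "names": names})
        elif rule.get("name") not in blocked:
            kept.append(rule)
    return {**profile, "syscalls": kept}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 strip_seccomp.py default.json > custom.json
    with open(sys.argv[1]) as f:
        profile = json.load(f)
    json.dump(strip_syscalls(profile, BLOCKED), sys.stdout, indent=2)
```

Since the output goes to a new file, either copy custom.json over default.json or point `--security-opt seccomp=` at custom.json in the next command.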

Now, launch a new container using this profile and check that the restriction is enforced:

# docker container run --rm -it --security-opt seccomp=./default.json alpine sh

/ # chmod +r /usr

chmod: /usr: Operation not permitted

Docker container breakout

Description: The term “container breakout” denotes a Docker container that has bypassed isolation checks, accessing sensitive information from the host or gaining additional privileges. To prevent this, we want to reduce the default container privileges. For example, the Docker daemon runs as root by default, but you can enable a user namespace or drop some of the container's root capabilities.

Consider this previously exploited vulnerability, which relied on Docker's default configuration at the time:

“The proof of concept exploit relies on a kernel capability that allows a process to open any file in the host based on its inode. On most systems, the inode of the / (root) filesystem is 2. With this information and the kernel capability it is possible to walk the host’s filesystem tree until you find the object you wish to open and then extract sensitive information like passwords.”

Best Practices:

- Drop the capabilities your software does not require. Capabilities split root's all-or-nothing power into fine-grained privileges.

  - CAP_SYS_ADMIN is an especially nasty one in terms of security: it grants a wide range of root-level permissions, such as mounting filesystems, entering kernel namespaces, ioctl operations…

- Create an isolated user namespace to limit the maximum privileges of the containers over the host to the equivalent of a regular user. Avoid running containers as UID 0 if possible.

- If you need to run a privileged container, double-check that it comes from a trusted source (see Container image authenticity below).

- Keep an eye on dangerous mount points from the host: the Docker socket (/var/run/docker.sock), /proc, /dev, etc. When a container needs one of these special mounts for its core functionality, make sure you understand why, and limit which processes can access this privileged information. Sometimes exposing the filesystem read-only is enough; don't grant write access without questioning why. In any case, Docker uses copy-on-write, so changes made in one running container do not affect the base image that other containers might use.
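To keep an eye on these mount points across many containers, you could audit the output of `docker inspect`, which prints a JSON list of objects, each with a `Name` and a `Mounts` array whose entries carry `Source`, `Destination` and `RW` fields. The Python sketch below flags sensitive host paths and says whether they are writable. The list of sensitive prefixes is an illustrative assumption (it adds /sys and /etc to the paths named above); extend it for your environment. The script name is hypothetical.

```python
import json
import sys

# Illustrative host paths worth flagging; adjust to your environment.
SENSITIVE_PREFIXES = ("/var/run/docker.sock", "/run/docker.sock",
                      "/proc", "/sys", "/dev", "/etc")


def is_sensitive(source: str) -> bool:
    """True if `source` is the host root or lies under a sensitive prefix."""
    if source.rstrip("/") == "":
        return True  # the whole host filesystem is mounted
    return any(source == p or source.startswith(p + "/") for p in SENSITIVE_PREFIXES)


def audit_mounts(inspect_output: list) -> list:
    """Return (container, source, destination, writable) tuples for risky mounts."""
    findings = []
    for container in inspect_output:
        for mount in container.get("Mounts", []):
            source = mount.get("Source", "")
            if is_sensitive(source):
                findings.append((container.get("Name", "?"), source,
                                 mount.get("Destination", ""), bool(mount.get("RW"))))
    return findings


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: docker inspect $(docker ps -q) > inspect.json
    #        python3 audit_mounts.py inspect.json
    with open(sys.argv[1]) as f:
        for name, src, dst, rw in audit_mounts(json.load(f)):
            print(f"{name}: {src} -> {dst} ({'read-write' if rw else 'read-only'})")
```

Writable findings deserve the closest look, in line with the "don't give write access without questioning why" advice.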

Examples:

By default, the root account of a Docker container can create device files. You may want to restrict this:

# docker run --rm -it --cap-drop=MKNOD alpine sh

/ # mknod /dev/random2 c 1 8

mknod: /dev/random2: Operation not permitted

The root account overrides any file permissions by default. This is very easy to check: create a file as a regular user, chmod it to 600 (only the owner can read and write), become root, and you can read it anyway.

You may want to restrict this in your containers, especially if you have backend storage mounts with sensitive user data.

# docker run --rm -it --cap-drop=DAC_OVERRIDE alpine sh

Create a regular user named user, switch to it (for example with su user) and cd to its home directory. Then:

~ $ touch supersecretfile

~ $ chmod 600 supersecretfile

~ $ exit

~ # cat /home/user/supersecretfile

cat: can't open '/home/user/supersecretfile': Permission denied

Many security scanners and malware tools forge their own raw network packets from scratch. You can forbid that too:

# docker run --cap-drop=NET_RAW -it uzyexe/nmap -A localhost

Starting Nmap 7.12 ( https://nmap.org ) at 2017-08-16 10:13 GMT

Couldn't open a raw socket. Error: Operation not permitted (1)

Check out the complete list of capabilities here and drop anything your containerized application doesn’t need.
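To verify which capabilities a container actually ends up with, you can read the CapEff line of /proc/self/status from inside it (for example `docker run --rm --cap-drop=NET_RAW alpine grep CapEff /proc/self/status`) and decode the hexadecimal bitmask. The Python sketch below does the decoding; bit numbers follow the Linux capabilities(7) numbering, listed here up to CAP_CHECKPOINT_RESTORE (bit 40), and any higher bit is reported by number.

```python
# Capability names indexed by bit number, following capabilities(7).
CAP_NAMES = [
    "CHOWN", "DAC_OVERRIDE", "DAC_READ_SEARCH", "FOWNER", "FSETID", "KILL",
    "SETGID", "SETUID", "SETPCAP", "LINUX_IMMUTABLE", "NET_BIND_SERVICE",
    "NET_BROADCAST", "NET_ADMIN", "NET_RAW", "IPC_LOCK", "IPC_OWNER",
    "SYS_MODULE", "SYS_RAWIO", "SYS_CHROOT", "SYS_PTRACE", "SYS_PACCT",
    "SYS_ADMIN", "SYS_BOOT", "SYS_NICE", "SYS_RESOURCE", "SYS_TIME",
    "SYS_TTY_CONFIG", "MKNOD", "LEASE", "AUDIT_WRITE", "AUDIT_CONTROL",
    "SETFCAP", "MAC_OVERRIDE", "MAC_ADMIN", "SYSLOG", "WAKE_ALARM",
    "BLOCK_SUSPEND", "AUDIT_READ", "PERFMON", "BPF", "CHECKPOINT_RESTORE",
]


def decode_caps(hex_mask: str) -> list:
    """Decode a CapEff value such as '00000000a80425fb' into capability names."""
    mask = int(hex_mask, 16)
    names = []
    bit = 0
    while mask >> bit:  # stop once no higher bits are set
        if (mask >> bit) & 1:
            if bit < len(CAP_NAMES):
                names.append("CAP_" + CAP_NAMES[bit])
            else:
                names.append(f"unknown bit {bit}")
        bit += 1
    return names


if __name__ == "__main__":
    # A mask typically seen for Docker's default capability set; compare it
    # with the value your own containers report.
    for name in decode_caps("00000000a80425fb"):
        print(name)
```

Running the same grep with and without `--cap-drop=NET_RAW` and decoding both values should show CAP_NET_RAW disappearing from the list.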

By default, if you create a container without a user namespace, the process inside the container belongs to root from the point of view of the host.

# docker run -d -P nginx

# ps aux | grep nginx

root 18951 0.2 0.0 32416 4928 ? Ss 12:31 0:00 nginx: master process nginx -g daemon off;

But we can create a completely separate user namespace. Edit the /etc/docker/daemon.json file and add the following key (be careful not to break the JSON format):

"userns-remap": "default"

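Restart the Docker daemon. With the value default, Docker creates a user and group named dockremap and uses them for the remapping. You will notice this new namespace is empty: containers and images created before the change are not listed, because Docker keeps separate storage for the remapped namespace.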
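Hand-editing daemon.json is where the JSON format usually breaks. Before running the restart command, you could instead merge the key with a short script. This is a sketch that assumes /etc/docker/daemon.json is either missing or holds a single JSON object; it keeps a .bak copy of the old file and needs root to write it. The script name is hypothetical.

```python
import json
import os
import shutil
import sys


def enable_userns_remap(path: str, value: str = "default") -> dict:
    """Merge "userns-remap" into the daemon config at `path` and return it."""
    config = {}
    if os.path.exists(path):
        shutil.copy2(path, path + ".bak")  # keep the previous version around
        with open(path) as f:
            text = f.read().strip()
        if text:
            config = json.loads(text)  # fails loudly if the file is already broken
    config["userns-remap"] = value
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(config, f, indent=2)
        f.write("\n")
    os.replace(tmp, path)  # atomic rename, so the file is never left half-written
    return config


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: sudo python3 enable_userns.py /etc/docker/daemon.json
    print(json.dumps(enable_userns_remap(sys.argv[1]), indent=2))
```

Any existing settings (logging driver, registry mirrors, etc.) are preserved; only the userns-remap key is added or overwritten.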

# systemctl restart docker

# docker ps

Launch the nginx image again:

# docker run -d -P nginx

# ps aux | grep nginx

165536 19906 0.2 0.0 32416 5092 ? Ss 12:39 0:00 nginx: master process nginx -g daemon off;

Now the nginx process runs as host UID 165536 instead of root, because it lives in a different user namespace whose root is mapped to an unprivileged UID on the host. This increases the isolation of the containers.
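Where does 165536 come from? With userns-remap, container UIDs are mapped onto a subordinate range reserved for dockremap in /etc/subuid (GIDs use /etc/subgid), whose lines have the form user:start:count, and container UID 0 becomes the first UID of that range. The Python sketch below reproduces that arithmetic for a single range; the entry dockremap:165536:65536 is an assumption consistent with the output above, so check the actual line on your host.

```python
def parse_subid(line: str) -> tuple:
    """Parse an /etc/subuid or /etc/subgid line of the form user:start:count."""
    user, start, count = line.strip().split(":")
    return user, int(start), int(count)


def host_id(container_id: int, subid_line: str) -> int:
    """Map an ID inside a remapped container to the corresponding host ID."""
    _, start, count = parse_subid(subid_line)
    if not 0 <= container_id < count:
        raise ValueError(f"ID {container_id} is outside the mapped range of {count}")
    return start + container_id


if __name__ == "__main__":
    entry = "dockremap:165536:65536"  # assumed example entry from /etc/subuid
    print(host_id(0, entry))     # container root -> 165536
    print(host_id(1000, entry))  # a regular container user -> 166536
```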

Container image authenticity

Description: There are plenty of Docker images and repositories on the Internet doing all kinds of awesome and useful stuff, but if you are pulling images without using any trust and authenticity mechanism, you are basically running arbitrary software on your systems.

- Where did the image come from?

- Do you trust the image creator? Which security policies are they using?

- Do you have objective cryptographic proof that the author is actually that person?

- How do you know nobody has been tampering with the image after you pulled it?

Docker will let you pull and run anything you throw at it by default, so encapsulation won’t save you from this. Even if you only consume your own custom images, you want to make sure nobody inside the organization is able to tamper with an image. The solution usually boils down to the classical PKI-based chain of trust.

Best Practices:

- The regular Internet common sense: do not run unverified software, or software from sources you don’t explicitly trust.

- Deploy a container-centric trust server using one of the Docker registry servers available in our Docker Security Tools list.

- Enforce mandatory signature verification for any image that is going to be pulled or run on your systems.

Example:

Deploying a full-blown trust server is beyond the scope of this article, but you can start signing your images right away.

Get a Docker Hub account if you don’t have one already.

Create a directory containing the following trivial Dockerfile:

# cat Dockerfile

FROM alpine:latest

Build the image:

# docker build -t <youruser>/alpineunsigned .

Log into your Docker Hub account and push the image:

# docker login

[…]

# docker push <youruser>/alpineunsigned:latest

Enable Docker trust enforcement:

# export DOCKER_CONTENT_TRUST=1

Now try to retrieve the image you just uploaded:

# docker pull <youruser>/alpineunsigned

You should receive the following error message:

Using default tag: latest

Error: remote trust data does not exist for docker.io/<youruser>/alpineunsigned:

notary.docker.io does not have trust data for docker.io/<youruser>/alpineunsigned

Now that DOCKER_CONTENT_TRUST is enabled, build the image again under a new name. When you push it, it will be signed by default.

# docker build --disable-content-trust=false -t <youruser>/alpinesigned:latest .

Now you should be able to push and pull the signed image without any security warning. The first time you push a trusted image, Docker creates a root key for you; it also creates a repository key for the image. Both prompt for a user-defined passphrase.

Your private keys are stored in the ~/.docker/trust directory; safeguard them and back them up.

DOCKER_CONTENT_TRUST is just an environment variable and will die with your shell session, but trust validation should be implemented across the entire process: from building images, through hosting them in the registry, to running them on the nodes.
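Because the variable only lives as long as the shell that exported it, one way to keep enforcement in automation is to inject it explicitly into every Docker call your tooling makes. A minimal Python sketch, assuming your build or deploy scripts shell out to the docker CLI; the helper names are hypothetical.

```python
import os
import subprocess


def trusted_env(base=None) -> dict:
    """Copy of the environment with Docker Content Trust forced on."""
    env = dict(os.environ if base is None else base)
    env["DOCKER_CONTENT_TRUST"] = "1"  # overrides any "0" inherited from the caller
    return env


def docker(*args: str) -> subprocess.CompletedProcess:
    """Run a docker CLI command with content trust enforced; raise on failure."""
    return subprocess.run(["docker", *args], env=trusted_env(), check=True)


# Example (requires the docker CLI): pulling the unsigned image from the
# walkthrough above should make this raise subprocess.CalledProcessError.
# docker("pull", "<youruser>/alpineunsigned")
```

This is a convention for your own tooling rather than a hard guarantee: a caller can still pass `--disable-content-trust` to individual commands, so registry-side and node-side enforcement remain necessary.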

Container resource abuse

Description: On average, containers are much more numerous than virtual machines: they are lightweight, and you can spawn big clusters of them on modest hardware. That’s definitely an advantage, but it implies that a lot of software entities are competing for the host resources. Software bugs, design miscalculations or a deliberate malware attack can easily cause a Denial of Service if you don’t properly configure resource limits.

To add to the problem, there are several different resources to safeguard: CPU, main memory, storage capacity, network bandwidth, I/O bandwidth, swap… There are also less evident kernel resources, down to obscure ones such as user IDs (UIDs)!

Best Practices: Limits on these resources are disabled by default on most containerization systems, so configuring them before deploying to production is basically a must. Three fundamental steps:

- Use the resource limitation features bundled with the Linux kernel and/or the containerization solution.

- Try to replicate production loads in pre-production. Some people use synthetic stress tests; others choose to ‘replay’ actual production traffic. Load testing is vital to know where the physical limits are and what your normal range of operation is.

- Implement Docker monitoring and alerting. You don’t want to hit the wall when there is a resource abuse problem, malicious or not: set thresholds and get warned before it’s too late.
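The thresholds from the last point can start as a simple comparison of usage against the configured limit. The Python sketch below classifies a reading as ok, warning or critical; the 80% and 95% cut-offs are arbitrary assumptions, and in practice the numbers would come from your monitoring system (for example, the usage and limit columns reported by docker stats).

```python
def alert_level(usage: float, limit: float, warn: float = 0.80, crit: float = 0.95) -> str:
    """Classify resource usage relative to its limit.

    The default 80%/95% thresholds are illustrative; tune them from load tests.
    A non-positive limit means "no limit configured", which is worth flagging too.
    """
    if limit <= 0:
        return "unlimited"
    ratio = usage / limit
    if ratio >= crit:
        return "critical"
    if ratio >= warn:
        return "warning"
    return "ok"


if __name__ == "__main__":
    two_gib = 2 * 1024**3
    for used in (1.0 * 1024**3, 1.7 * 1024**3, 1.95 * 1024**3):
        print(f"{used / 1024**3:.2f} GiB of 2 GiB: {alert_level(used, two_gib)}")
```

Reporting "unlimited" separately reflects the point above: a container with no limit at all is a finding in itself.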

Examples:

Control groups, or cgroups, are a feature of the Linux kernel that allows you to limit the access processes and containers have to system resources. We can configure some limits directly from the Docker command line:

# docker run -it --memory=2G --memory-swap=3G ubuntu bash

This limits the container to 2 GB of main memory and 3 GB in total (main + swap). To check that this is working, we can run a load simulator, for example the stress program available in the Ubuntu repositories (install it inside the container first):

root@e05a311b401e:/# stress -m 4 --vm-bytes 8G

Code language: PHP (php)You will see a ‘FAILED’ notification from the stress output.

If you tail the syslog on the hosting machine, you will be able to read something similar to:

Aug 15 12:09:03 host kernel: [1340695.340552] Memory cgroup out of memory: Kill process 22607 (stress) score 210 or sacrifice child

Aug 15 12:09:03 host kernel: [1340695.340556] Killed process 22607 (stress) total-vm:8396092kB, anon-rss:363184kB, file-rss:176kB, shmem-rss:0kB

Using docker stats you can check current memory usage and limits. If you are using Kubernetes, each pod definition can reserve the resources your application needs to run properly and also define maximum limits, using requests and limits:

[...]
- name: wp
  image: wordpress
  resources:
    requests:
      memory: "64Mi"
      cpu: "250m"
    limits:
      memory: "128Mi"
      cpu: "500m"
[...]

Docker security vulnerabilities present in the static image

Description: Containers are isolated black boxes. If they are doing their work as expected, it’s easy to forget which software and which versions are running inside them. A container may be performing like a charm from the operational point of view while running version X.Y.Z of a web server that happens to suffer from a critical security flaw. The flaw was fixed upstream long ago, but not in your local image. This kind of problem can go unnoticed for a long time if you don’t take the appropriate measures.

Best Practices: Picturing containers as immutable atomic units is really nice for architecture design; from a security perspective, however, you need to inspect their contents regularly:

- Update and rebuild your images periodically to grab the newest security patches. Of course, you will also need a pre-production test bench to make sure these updates don’t break production.

  - Live-patching containers is usually considered bad practice; the pattern is to rebuild the entire image with each update. Docker has declarative, efficient, easy-to-understand build systems, so this is easier than it may sound at first.

  - Use software from a distributor that guarantees security updates. For anything you install manually outside the distro, you have to manage security patching yourself.

  - Docker and microservice-based approaches treat progressively rolling out updates without disrupting uptime as a fundamental requirement of their model.

  - User data is clearly separated from the images, making this whole process safer.

- Keep it simple. Minimal systems need less frequent updates. Remember the intro: less software and fewer moving parts mean a smaller attack surface and fewer updating headaches. Try to split your containers if they get too complex.

- Use a vulnerability scanner; there are plenty out there, both free and commercial. Try to stay up to date on the security issues of the software you use by subscribing to mailing lists, alert services, etc.

  - Integrate this vulnerability scanner as a mandatory step of your CI/CD pipeline. Automate where possible; don’t just check the images manually now and then.
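Making the scanner a mandatory CI/CD step usually means failing the build when findings reach a given severity. Scanners differ in their report formats, so the Python sketch below assumes a hypothetical, already-parsed list of findings with package, cve and severity fields; you would adapt the parsing to the scanner you actually use. A non-zero exit code is what most CI systems treat as a failed step.

```python
import sys

# Severity order used by the gate; map your scanner's labels onto these names.
SEVERITY_ORDER = ["negligible", "low", "medium", "high", "critical"]


def blocking_findings(findings: list, threshold: str = "high") -> list:
    """Return findings whose severity is at or above `threshold`.

    An unknown severity label raises ValueError, a safe default for a gate.
    """
    floor = SEVERITY_ORDER.index(threshold)
    return [f for f in findings
            if SEVERITY_ORDER.index(f["severity"].lower()) >= floor]


def gate(findings: list, threshold: str = "high") -> int:
    """Print blocking findings to stderr and return a CI exit code."""
    blocking = blocking_findings(findings, threshold)
    for f in blocking:
        print(f"{f['severity'].upper()}: {f['cve']} in {f['package']}", file=sys.stderr)
    return 1 if blocking else 0


if __name__ == "__main__":
    # Hypothetical findings; a real pipeline would parse the scanner's report.
    report = [
        {"package": "openssl", "cve": "CVE-EXAMPLE-1", "severity": "High"},
        {"package": "zlib", "cve": "CVE-EXAMPLE-2", "severity": "Low"},
    ]
    code = gate(report)
    print("exit code:", code)  # in CI, finish with sys.exit(code)
```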

Example:

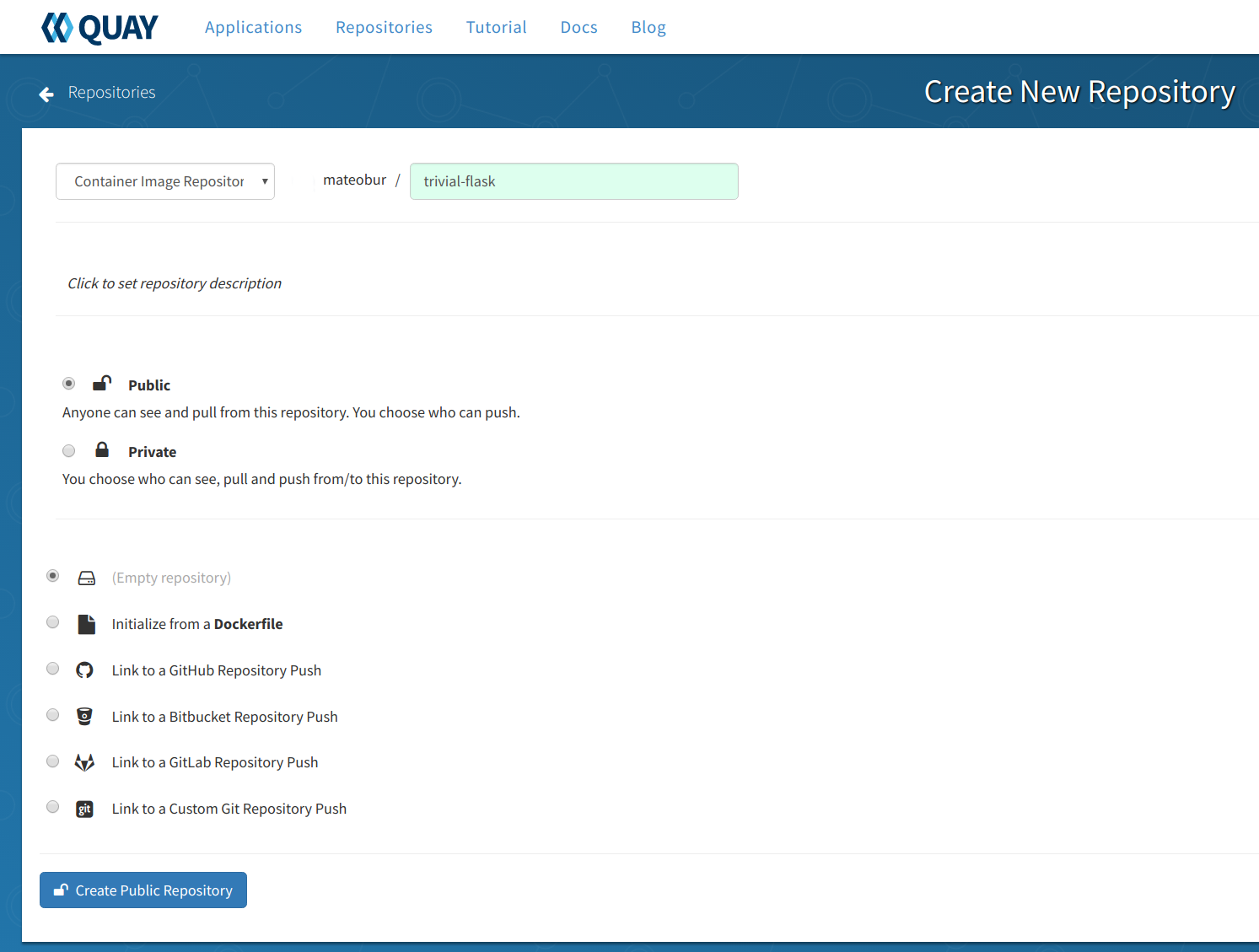

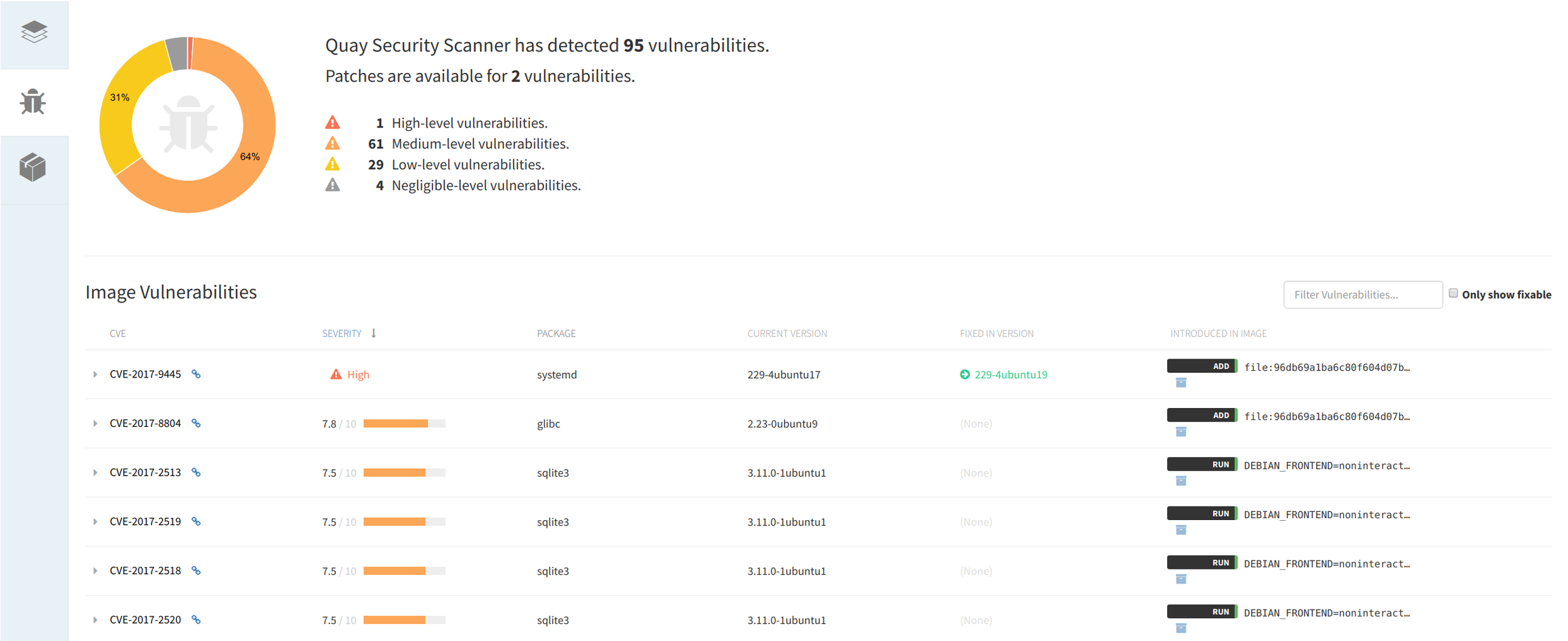

There are multiple Docker image registry services that offer image scanning. For this example we decided to use CoreOS Quay, which uses the open source Docker image security scanner Clair. Quay is a commercial platform, but some services are free to use. You can create a personal trial account following these instructions.

Once you have your account, go to Account Settings and set a new password (you need this to create repos).

Click on the + symbol at the top right and create a new public repo:

We go for an empty repository here, but you have several other options as you can see in the image above.

Now, from the command line, we log into the Quay registry and push a local image:

# docker login quay.io

# docker tag your_local_image quay.io/your_quay_user/your_quay_image:tag

# docker push quay.io/your_quay_user/your_quay_image:tag

Once the image is uploaded into the repo, you can click on its ID and inspect the image security scan, ordered by severity, with the associated CVE report link and the upstream patched package versions.

Docker credentials and secrets

Description: Your software needs sensitive information to run: user password hashes, server-side certificates, encryption keys, etc. The nature of containers makes this situation worse: you don’t just ‘set up a server’; there are many microservices, and they may be constantly created and destroyed. You need an automatic and secure process to share this sensitive information.

Best Practices:

- Do not use environment variables for secrets. This is a very common yet very insecure practice: environment variables are visible to every process in the container, as the example below shows, and to anyone who can run docker inspect on the host.

- Do not embed any secrets in the container image. Read this IBM post-mortem report: “The private key and the certificate were mistakenly left inside the container image.”

- Deploy credentials management software if your deployments get complex enough; we have reviewed several free and commercial options in our Docker security tools list. Do not attempt to create your own ‘secrets storage’ (curl-ing from a secrets server, mounting volumes, etc.) unless you really know what you are doing.

Examples:

First, let’s see how to capture an environment variable:

# docker run -it -e password='S3cr3tp4ssw0rd' alpine sh

/ # env | grep pass

password=S3cr3tp4ssw0rd

It’s that simple, even if you su to a regular user:

/ # su user

/ $ env | grep pass

password=S3cr3tp4ssw0rd

Nowadays, container orchestration systems offer some basic secret management. For example, Kubernetes has the Secret resource, and Docker Swarm has its own secrets feature, which we will quickly demonstrate here:

Initialize a new Docker Swarm (you may want to do this on a VM):

# docker swarm init --advertise-addr <your_advertise_addr>

Create a file with some random text, your secret:

# cat secret.txt

This is my secret

Create a new secret resource from this file:

# docker secret create somesecret secret.txt

Create a Docker Swarm service with access to this secret. You can modify the uid, gid, mode, etc.:

# docker service create --name nginx --secret source=somesecret,target=somesecret,mode=0400 nginx

Open a shell in the nginx container (for example with docker exec) and you will be able to use the secret:

root@3989dd5f7426:/# cat /run/secrets/somesecret

This is my secret

root@3989dd5f7426:/# ls -l /run/secrets/somesecret

-r-------- 1 root root 19 Aug 28 16:45 /run/secrets/somesecret

This is a minimal proof of concept, but at the very least your secrets are now properly stored and can be revoked or rotated from a central point of authority.
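On the application side, the secret shows up as a file under /run/secrets/, as the listing above shows, so the service should read it from disk rather than from its environment. A minimal Python sketch; the `secrets_dir` parameter is an illustrative assumption that makes local testing easier, and the function name is hypothetical.

```python
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Read a Docker Swarm secret mounted as a file, e.g. /run/secrets/somesecret."""
    if not name or "/" in name or name in (".", ".."):
        raise ValueError(f"invalid secret name: {name!r}")  # no path traversal
    path = Path(secrets_dir) / name
    if not path.is_file():
        # Fail loudly instead of silently falling back to an environment variable.
        raise FileNotFoundError(f"secret {name!r} not found at {path}")
    # Files written by editors or echo usually end with a newline; drop it.
    return path.read_text().rstrip("\n")


if __name__ == "__main__":
    try:
        # Print only the length: secrets should not end up in logs.
        print("secret length:", len(read_secret("somesecret")))
    except FileNotFoundError as exc:
        print(exc)
```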

Docker runtime security monitoring

Description: In the previous sections, we covered the static aspects of Docker security: vulnerable kernels, unreliable base images, capabilities granted or denied at launch time, etc. But what if, despite all this, a container is compromised at runtime and starts to show suspicious activity?

Best Practices:

- The static countermeasures described so far do not cover every attack vector. What if your own in-house application has a vulnerability? Or attackers are using a 0-day not detected by the scanner? Runtime security can be compared to anti-virus scanning on Windows: detect a breach that has already happened and prevent it from penetrating further.

- Do not use runtime protection as a replacement for any of the static, up-front security practices: attack prevention is always preferable to attack detection. Use it as an extra layer of peace of mind.

- Generous logs and events from your services and hosts, correctly stored, easily searchable and correlated with every change you make, will help a lot when you have to do a post-mortem analysis.

Example:

Sysdig Falco is an open source, behavioral monitoring software designed to detect anomalous activity. Sysdig Falco works as an intrusion detection system on any Linux host, although it is particularly useful when using Docker since it supports container-specific context like container.id, container.image, Kubernetes resources or namespaces for its rules.

Falco rules can trigger notifications on many kinds of anomalous activity. Let’s show a simple example: someone running an interactive shell in one of the production containers.

First, we will install Falco using the automatic installation script (not recommended for production environments; you may want to use a VM for this):

# curl -s https://s3.amazonaws.com/download.draios.com/stable/install-falco | sudo bash

# service falco start

Then we will run an interactive shell in an nginx container:

# docker run -d --name nginx nginx

# docker exec -it nginx bash

On the host machine, tail the /var/log/syslog file and you will be able to read:

Aug 15 21:25:31 host falco: 21:25:31.159081055: Debug Shell spawned by untrusted binary (user=root shell=sh parent=anacron cmdline=sh -c run-parts --report /etc/cron.weekly pcmdline=anacron -dsq)

Sysdig Falco doesn’t need to modify or instrument containers in any way. This is just a trivial example of Falco’s capabilities; check out these examples to learn more.
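If you enable Falco's JSON output (the json_output option in falco.yaml), each alert becomes one JSON object per line, with fields such as time, rule, priority and output. The Python sketch below filters such a stream by priority, for example to forward only Warning and above to an on-call channel. The field names reflect Falco's JSON output as we understand it, so verify them against the version you run; the script name and the events file are hypothetical.

```python
import json
import sys

# Falco priorities from most to least severe.
PRIORITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]


def filter_alerts(lines, min_priority: str = "warning"):
    """Yield parsed alerts whose priority is at least `min_priority`.

    Lines that are not JSON objects (startup messages, for instance) are skipped.
    """
    cutoff = PRIORITIES.index(min_priority.lower())
    for line in lines:
        try:
            alert = json.loads(line)
        except json.JSONDecodeError:
            continue
        if not isinstance(alert, dict):
            continue
        priority = str(alert.get("priority", "")).lower()
        if priority in PRIORITIES and PRIORITIES.index(priority) <= cutoff:
            yield alert


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 falco_filter.py events.json  (one JSON alert per line)
    with open(sys.argv[1]) as f:
        for alert in filter_alerts(f):
            print(f"[{alert['priority']}] {alert.get('rule')}: {alert.get('output')}")
```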

Conclusions

Docker itself is built with security in mind, and some of its inherent features can help you protect your system. Don’t get too confident, though: there is no magic bullet here other than keeping up with state-of-the-art Docker security practices, but there are a few container-specific security tools that can help you battle all these Docker vulnerabilities and threats.

Hopefully these simple examples have stirred up your interest in the matter!