In the context of websites and search engine optimization, the term pagination is used for content that is not displayed in full on a single page. Pagination describes the distribution of content across more than one URL, with links that let you move to the following and previous URLs. Problems with duplicate content often result from paginated shop or directory pages, since pages 2 and 3 are usually very similar to page 1 without offering much added value.

Paginated pages are important for the following website types:

- Product directories and category pages in e-commerce

- Search result lists

- Blogs

- Forums

- Websites that work with large databases, for example, company registers or recipes

In the case of documents that are divided over several pages for usability reasons, it is important to inform the search engines that the current URL is one page of a multi-page document, and which URLs are the pages before and after it.

What are the Wrong Implementations of Pagination in SEO?

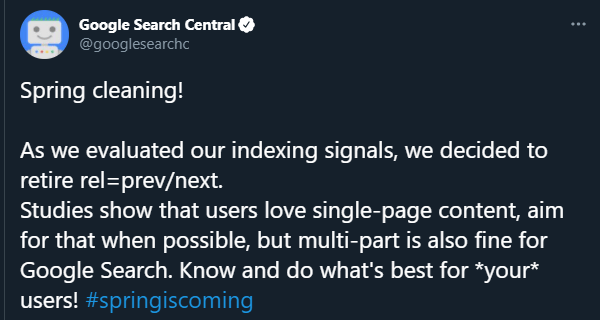

At the beginning of 2019, Google announced that the attributes rel="prev" and rel="next", which were inserted in the <head> area of the page, would no longer be taken into account. These were previously understood as a useful hint that helped search engines recognize paginated pages.

It should also be noted that Google had already stopped using rel="prev" and rel="next" four years before making this official statement to the SEO community.

In the past, there were different strategies for dealing with paginated pages. Most of these were based on the intention that only the first page of paginated pages should appear in the search engine’s index. In online shops with very few articles, one wanted to direct the search engine to the article detail pages and not “distract” with the category pages. In the case of online shops with a large number of articles and frequent product range changes, the category pages are often more important than the product detail pages.

The following mistakes are often made with pagination in search engine optimization:

- Putting a canonical tag on page 2 and the following pages that points to page 1. The canonical tag is mainly intended for pages with almost identical content, not for defining pagination.

- Putting a canonical tag that points to page 1 on the paginated pages and combining it with the noindex meta robots directive. This is even worse than the previous example: Google has expressly said that in this constellation the noindex directive is passed on to page 1. This means that not only is page 2 kept out of the index (as intended), but the first page does not appear in the search results either.

- Incorrect use of rel="prev" and rel="next". These belong in <link> elements in the <head> area of the HTML source code, not as attributes on the <a href> tags of the pagination links. You can do the latter, but it is not the approach Google recommended. The assumption is that the information in the <head> can be parsed much more easily and quickly than attributes in <a href> tags.

Why Canonical Tag shouldn’t be used for Pagination in SEO?

Another common mistake concerns the canonical tag on paginated pages. There is still a persistent rumor in SEO circles that apart from the first page of a product category, all other pages (2, 3, 4, etc.) must contain a canonical tag pointing to the first page. However, this overlooks the fact that a canonical tag is intended for pages with identical content and not for defining pagination. Shop pages with pagination do not contain duplicate content, as they show different products.

Pointing the canonical tag of the paginated pages to the first page in combination with the noindex meta tag is even worse. In this constellation, the noindex directive of the paginated pages is passed on to the first page. Not only are the paginated pages not indexed, but the first page is also not displayed in the search results. Since the first page is an important category page, the consequences are easy to imagine.
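The two misconfigurations described above can be detected automatically. The following is a minimal sketch in Python, using only the standard library; the class name `HeadSignals`, the function `audit_paginated_page`, and the example URLs are assumptions made for illustration, not part of any SEO tool.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collects the canonical URL and the meta robots directives of a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.robots = {
                part.strip().lower() for part in content.split(",") if part.strip()
            }


def audit_paginated_page(page_url, first_page_url, html):
    """Return a list of warnings for one component page (page 2 or later)."""
    signals = HeadSignals()
    signals.feed(html)
    warnings = []
    # A component page whose canonical targets page 1 is the first mistake.
    points_to_first = (
        page_url != first_page_url and signals.canonical == first_page_url
    )
    if points_to_first:
        warnings.append("canonical points to page 1 instead of the page itself")
    # Adding noindex on top of that is the second, more harmful mistake.
    if points_to_first and "noindex" in signals.robots:
        warnings.append("canonical to page 1 combined with noindex")
    return warnings
```

Run against the HTML of page 2 of a category, the function returns an empty list when the page carries a self-referencing canonical, and one or two warnings otherwise.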

Why Paginated Content should be included in Sitemaps for SEO?

Some SEOs habitually leave paginated pages out of the sitemap. The reasoning is that in an online shop the main page with the item listing is indexed and the paginated pages add little value in comparison. However, since paginated pages contain unique content and links for users and search engines, paginated content such as directory lists, category pages, or blog post lists should be included in the sitemaps.
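As an illustration, a sitemap that lists every component page of a category could be generated as follows. This is a sketch under assumptions: the function name `build_sitemap`, the `page` query parameter, and the example URL are hypothetical, and page 1 is assumed to live at the bare category URL.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(category_url, total_pages, page_param="page"):
    """Return sitemap XML that lists page 1 and every following component page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in range(1, total_pages + 1):
        # Assumption: page 1 is the bare category URL, later pages add ?page=N.
        loc = category_url if page == 1 else f"{category_url}?{page_param}={page}"
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap("https://www.example.com/category-x/", 3))
```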

Why Content Shouldn’t be Divided into Multiple Pages?

News sites and some blogs publish articles divided into multiple pages. According to Google, “content shouldn’t be served over different pages”, because this makes the content harder for search engines to understand and harms the user experience. Most publishers divide content over multiple pages mainly to gain more pageviews for ad revenue. But this will eventually harm organic search rankings, and it harms the website’s branding and reliability by annoying users.

Thus, to protect the integrity of the content, an article shouldn’t be divided into multiple pages.

Why Repetitive and Duplicate Content Should be Avoided for Pagination SEO?

In order to avoid duplicate content, any (SEO) text should only appear on the first component page. If it is also displayed on the other paginated pages, the website operator creates a large number of pages with the same content, which Google then downgrades as thin content. Likewise, any product, blog post, or other paginated content element should appear on only one page and not be repeated. Another way to avoid repetitive and duplicate content in pagination is to reduce the boilerplate content while increasing the number of elements per page. If a paginated page contains too few content elements, Google will start to “dilute” the prominence of the paginated content and focus on the boilerplate content instead.

Increasing the number of content elements per page also reduces the number of paginated pages, which shortens the “click exploration path”.
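The effect of the element count per page is simple arithmetic. The sketch below uses hypothetical numbers (2,400 products) and assumes a pagination in which only the next page is linked, so the last page sits one click deeper for every additional component page.

```python
import math


def component_page_count(total_items, items_per_page):
    """Number of paginated pages needed to list all items."""
    return math.ceil(total_items / items_per_page)


def next_only_click_depth(total_items, items_per_page):
    """Clicks from page 1 to the last page when only 'next' is linked."""
    return component_page_count(total_items, items_per_page) - 1


# Hypothetical shop category with 2,400 products.
print(component_page_count(2400, 24), next_only_click_depth(2400, 24))  # 100 99
print(component_page_count(2400, 96), next_only_click_depth(2400, 96))  # 25 24
```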

Why “Noindex, Follow” shouldn’t be used?

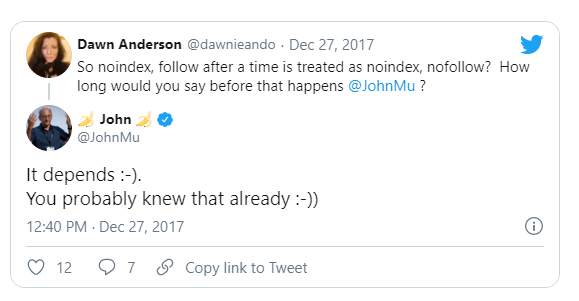

It is no longer a secret among SEOs: Google has confirmed that after a certain time the meta robots tag noindex, follow will be converted into a noindex, nofollow meta tag.

“But if we see the noindex there for longer than we think this page really doesn’t want to be used in search so we will remove it completely. And then we won’t follow the links anyway. So in noindex and follow is essentially kind of the same as a noindex, nofollow. There’s no really big difference there in the long run. ” Source: seroundtable.com

If your own pagination is set to noindex, follow, you must be aware that Google will stop following the links on the paginated pages after a certain time. The use of noindex, follow is therefore no longer appropriate.

If you don’t want to watch the video, you can read the transcript below.

So it’s kind of tricky with noindex. Which I think is somewhat of a misconception in general with an SEO community. In that with a noindex and follow it’s still the case that we see the noindex. And in the first step we say okay you don’t want this page shown in the search results. We’ll still keep it in our index, we just won’t show it and then we can follow those links. But if we see the noindex there for longer than we think this page really doesn’t want to be used in search so we will remove it completely. And then we won’t follow the links anyway. So in noindex and follow is essentially kind of the same as a noindex, nofollow. There’s no really big difference there in the long run.

John Mueller, Google Webmaster Trends Analyst

Why is a linear Pagination Bad for Users?

Large online shops often have more than 100 paginated pages. In this case, usability must also be a focus. It cannot realistically be assumed that users, let alone a bot, will actually reach all of these pages. One solution is to link middle and later paginated pages directly from the first page to make them more accessible. Googlebot in particular tends to reach all pages only when their number is in the low double digits.

In many areas, there is no consensus among SEOs when it comes to pagination, and two approaches are often recommended. We would like to introduce these two to you as different positions in the next two paragraphs.

SEO Arguments for Pagination

The SEO community holds different perspectives and opinions. The official guidelines and statements of the search engines are covered above. But to understand and learn SEO, a Holistic SEO should also know the opinions and arguments of the SEO community. Below, we have therefore curated different opinions and arguments from the SEO community on pagination.

What are the SEO Arguments Against Pagination?

In the SEO world, besides the standard pagination practices, there are other, more creative approaches. One of them is to let search engines discover the website through its product and service pages, by moving the internal links that are normally located on paginated category pages, such as product categories or blog post categories, into elements of those pages.

This ensures that the most relevant product, service, or article pages are crawled directly, rather than being reached through pages that are gradually buried in click depth. According to the same view, paginated pages should be set to noindex to save crawl budget. With this method, only the first page of the paginated content is indexed by Google, while the rest is not displayed in the search results. The following e-commerce websites use similar methods, such as a canonical tag pointing from the paginated pages to the first page or a noindex tag on the paginated pages.

- Zalando

- Otto

- About You (canonical tag is only set appropriately on aboutyou.de)

Pagination also influences crawl efficiency, so SEOs need to keep an eye on the crawl budget. For example, if a domain has more than 1,000,000 pages, Googlebot usually cannot or will not crawl all of them. It is therefore important to steer crawling accordingly. Otherwise, paginated component pages that are classified as weak content get crawled, while new or more important pages are no longer crawled and indexed. The SEO community raises a number of further arguments against pagination:

- With pagination, your own content is partially hidden behind hundreds of links. This is a clear signal to search engines that the content is less relevant. Why, then, should these pages ever rank well?

- If one of the component pages is temporarily unavailable during crawling, the Google crawler can no longer access and index the following pages.

- With a high number of pages, crawling through the paginated pages slows down considerably, and it easily happens that not all pages are included.

- Another point is how little the laboriously built paginated pages are used: analytics tools often show that hardly anyone has ever looked at the last pages, as the click depth is generally very high.

This calls the entire necessity of technical pagination from an SEO perspective into question. However, there is also a contrary position, which classifies pagination as relevant in its function of making websites accessible.

Note: All of the arguments against pagination in this section have already been addressed above. This section is included to show every perspective on pagination in the SEO community.

Position 2: Pagination makes products accessible to everyone

Pagination makes product pages accessible to search engine crawlers such as Googlebot. In many online shops, some products are not linked anywhere else in the shop except on the second or a later paginated page. The paginated pages are then the only way for Googlebot, Bingbot, or other crawlers to discover a large website’s products, services, or articles via internal links. If the component pages are blocked for search engines, or all pages after the first are set to noindex, nofollow, the products, articles, and services can no longer be reached by the crawler. This can lead to indexing problems with main content pages. To keep every page of a website accessible to crawlers, a reliable path to all product detail pages should stay crawlable and indexable. Since indexable pages also pass on more PageRank, this can help rankings as well.

The suggestions of the SEO opinion that supports pagination are listed below.

- Each component page has a self-referencing canonical tag

- The meta robots tag is set to index, follow

- The page titles and meta descriptions automatically contain the addition “(Page N)” (otherwise all meta titles & descriptions are duplicated)

- All component pages are fully accessible (HTTP code 200).
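The checklist above can be sketched as a small template function that produces the head tags of a component page. The function name `paginated_head`, the `page` parameter, and the example values are assumptions for illustration. The sketch also assumes that page 1 carries no “(Page N)” suffix and lives at the bare category URL, which the list above does not specify.

```python
from html import escape


def paginated_head(base_title, base_description, category_url, page, page_param="page"):
    """Build the <head> tags for one component page of a paginated series."""
    # Assumption: page 1 is the bare category URL, later pages add ?page=N.
    url = category_url if page == 1 else f"{category_url}?{page_param}={page}"
    # The suffix keeps titles and descriptions unique across component pages.
    suffix = "" if page == 1 else f" (Page {page})"
    return "\n".join([
        f"<title>{escape(base_title + suffix)}</title>",
        f'<meta name="description" content="{escape(base_description + suffix)}">',
        '<meta name="robots" content="index, follow">',
        f'<link rel="canonical" href="{escape(url)}">',  # self-referencing
    ])


print(paginated_head("Running Shoes", "Buy running shoes online.",
                     "https://www.example.com/shoes/", 3))
```

The HTTP status code 200 from the last list item is a server concern and is not covered by this sketch.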

Which Perspective is the right one for SEO?

In this section, Holistic SEO & Digital shares its own opinion.

In SEO, there are no fixed rules. That is why we always test, gather data with analytical thinking, and follow new events, updates, and SEO case studies. In my career, I have implemented both of these methods and perspectives, and I have achieved positive outcomes with each. But you should know when to use which one.

In some English Webmaster Hangouts, I have even asked John Mueller whether I can noindex the paginated URLs while moving the internal links to the product pages, and the answer was yes.

I have also sometimes used canonical tags on paginated content, but every SEO project has its own circumstances. I recommend sticking with the general and official SEO rules and guidelines of Google and Bing, but sometimes you should also try new things.

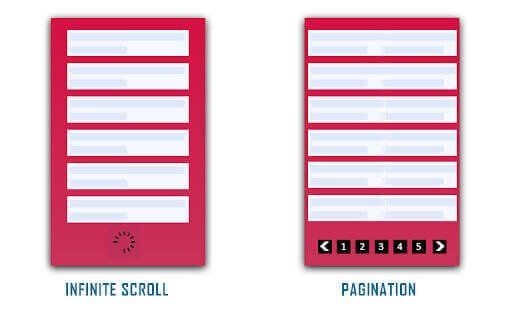

How to Implement Pagination with Infinite Scroll for SEO?

If you have not yet decided on pagination, for example because you are in the middle of a relaunch, now is the time to reconsider. There are viable alternatives to pagination. With infinite scrolling, for example, further products are loaded automatically as the user scrolls.

One of the important points when applying infinite scroll is the change of the URL. If the URL does not change as new content loads, Google will not see or index all relevant internal links and content. Another important point is to let the user know that more content is being loaded. Instead of suddenly encountering new content while scrolling, the user should get a notice or confirm the loading with a button such as “more products”, which is better for the user experience.

A Pagination SEO Experiment and Analysis for Crawl Budget by Matthew Henry

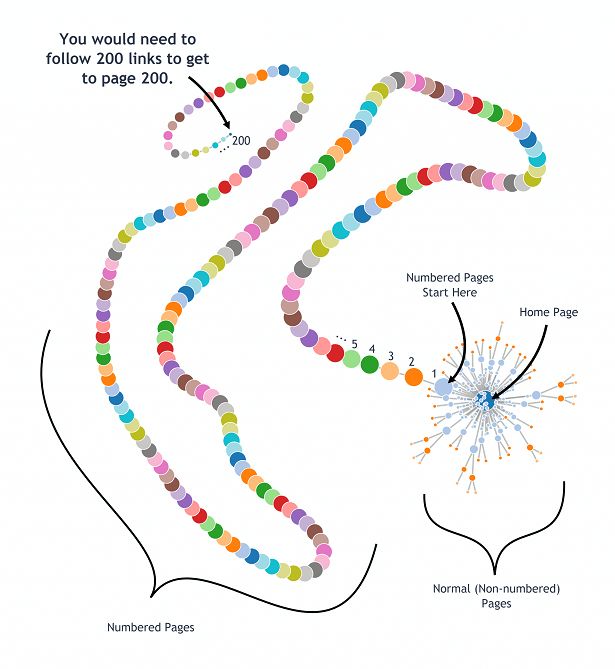

A small pagination experiment by Matthew Henry from portent.com shows the problem with pagination on large websites in more detail. Website visitors know from experience that a click on “2” or “next” at the end of a page takes them to the next page. This logic is a lot harder to teach search engines. By default, the web crawler follows internal links from the home page to the subpages.

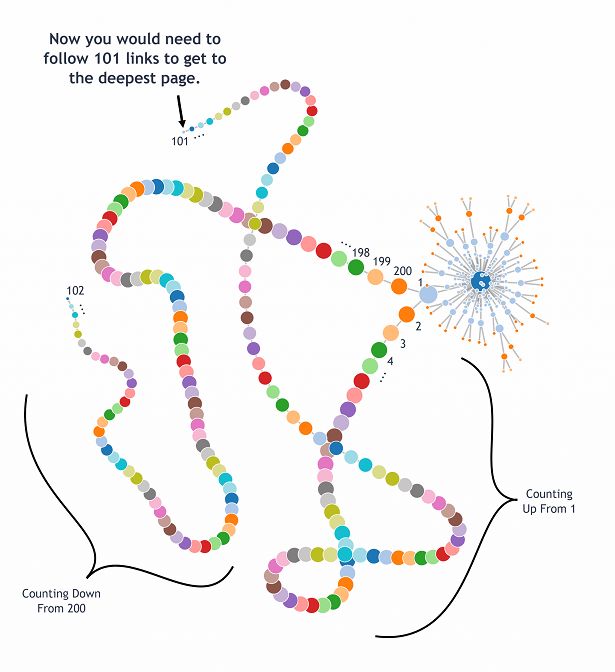

Suppose we have a web project with 200 paginated pages. It doesn’t matter whether it’s a blog, a webshop, or an online newspaper. For example, blog articles often contain a link to the next or previous article. If there were no overview of all blog pages, a user would have to click “Next” 199 times to get from the first to the last post on page 200.

That sounds awkward, but it is exactly how the web crawler works: it starts at page one and follows the link to page 2, then 3, and so on. It is a long way to page 200. This creates a so-called pagination tunnel for search engines, which has four major disadvantages:

- The content on the last few pages is hidden behind hundreds of links, which signals to search engines that it is less relevant. These pages therefore rank worse and worse.

- If a single page returns an error during crawling, perhaps due to a brief server error, the crawler can no longer reach any of the following pages.

- The web crawler cannot crawl this arrangement of pages in parallel, only linearly. This slows down the whole process and easily results in not all pages being captured.

- Last but not least, probably no user will ever see the last pages, as they would have to click through all the previous pages to do so.

For the experiment, Henry first suggests linking not only the next and previous pages but also the last page. The web crawler can then start from both page 1 and page 200 and ‘only’ has to follow 100 links to reach all pages. The pagination tunnel is cut in half. The result is similar if each page also links to the page after next. The crawling process is then also divided into two half-length tunnels, one through the even pages and one through the odd pages.

What Happens When You Combine These Two Paginated Methods?

If you can jump from page 1 not only to 2, 3, 4, etc. but also to page 50, this would shorten the crawler’s path and it would continue logarithmically instead of linearly. If you set a few links between pages 1 and 200 as “intermediate marks”, users and crawlers will get to the desired page much faster. Henry’s experiment suggests that the larger the web project, the more pages the pagination should be able to skip at once.

With 10,000 or even 100,000 paginated pages, intermediate links in the middle of the navigation are practically mandatory in order to shorten the tunnel. This speeds up the crawling process considerably and is more user-friendly.
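The tunnel lengths discussed in this experiment can be reproduced with a breadth-first search over the pagination links. The following Python sketch is not Henry's code. The four link schemes are simplified assumptions, and `power_of_two_jumps` is just one hypothetical way of setting “intermediate marks”.

```python
from collections import deque


def max_click_depth(total_pages, links_from):
    """Breadth-first search from page 1; returns the depth of the deepest page."""
    depth = {1: 0}
    queue = deque([1])
    while queue:
        page = queue.popleft()
        for target in links_from(page, total_pages):
            if 1 <= target <= total_pages and target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    if len(depth) != total_pages:
        raise ValueError("some pages are unreachable from page 1")
    return max(depth.values())


def next_only(page, total):
    """Each page links only to the next page (the full tunnel)."""
    return [page + 1]


def first_last(page, total):
    """Previous, next, first, and last page are linked."""
    return [1, total, page - 1, page + 1]


def skip_two(page, total):
    """Next page and the page after next are linked."""
    return [page + 1, page + 2]


def power_of_two_jumps(page, total):
    """Pages at distances 1, 2, 4, 8, ... in both directions are linked."""
    links, step = [], 1
    while step < total:
        links += [page - step, page + step]
        step *= 2
    return links


for scheme in (next_only, first_last, skip_two, power_of_two_jumps):
    print(scheme.__name__, max_click_depth(200, scheme))
```

For 200 pages, the first three schemes yield depths of 199, 100, and 100, matching the tunnel lengths described above, while the jump-based scheme needs only a handful of clicks.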

Different Methods to Paginate Pages Search Engine Friendly

The goal follows from the question: the content should land in the search engine index only once, since page 2 and the following pages are not suitable landing pages for SEO. The following options exist.

This section curates all of the possible methods. While reading or implementing them, keep in mind the arguments and the official search engine guidelines and statements above.

Method 1: Leave It as It is

In fact, this is a method that Google itself suggests. The argument here is that Google will recognize paginated content in most cases. Two problems have already been named indirectly: On the one hand, “most cases” are not necessarily your own domain. On the other hand, other search engines such as Bing should also be considered, which can certainly generate traffic.

Another problem that Google doesn’t mention here is the massive indexing of pages that shouldn’t be in the index at all: instead of just page 1, many times that number of pages is indexed.

Method 2: Use the Complete Overview Paginated Pages (View-All Pages)

One way of preventing paginated content from being indexed is to use complete overview pages, also known as view-all pages. There are two clearly different versions of the implementation.

Version 1: The paginated page uses a canonical to refer to a general overview.

<link rel="canonical" href="http://www.example.com/all-content" />

A canonical is only a recommendation for Googlebot. Google decides for itself whether to use the canonical. A direct link to the overall view itself would be easier, but not recommended because of the increased loading time. The internal linking therefore still leads to the paginated pages. Because of the canonical, which is located on the well-linked page, the power of the internal linking is partially lost. As a result of this poorer linking, the linked products may rank worse than before.

Version 2: The page internally links to the overall view, but initially only loads part of the articles in order to keep the loading times mentioned above low (“Infinite Scrolling”).

This type is technically more demanding and also more prone to errors. In addition, the indexing and ranking of articles that are not loaded immediately can be made more difficult. If this method is chosen, it must be technically ensured that Google can capture the reloaded links and images after scrolling.

Method 3: Canonical Tag on The First Page

The method of using a canonical from page 2, which refers back to page 1, can still be found frequently. Since page 2 lists different products than page 1, the contents differ greatly from one another. As a result, Google often ignores the canonical in such cases, as this only applies if the content is similar.

In addition, all link power is awarded to page 1. The internally linked page 2 receives little or no power, which makes it difficult for items listed on later pages to rank.

Method 4: Noindex, Follow

According to Google’s official statement, “noindex, follow” is treated like “noindex, nofollow” after a while. As mentioned earlier, URLs that are not indexed will not be crawled properly and will not pass on PageRank.

That said, in some SEO cases “noindex, follow” has been applied both to keep internal link networks discoverable and to improve crawl budget. However, JavaScript rendering is not applied to a “noindex” page. If your goal is to implement “noindex, follow”, make sure that internal links are not added with JavaScript.

Method 5: Using the robots.txt

At first glance, excluding paginated content using robots.txt appears to be a similar solution. Since most shops include page numbers in the paginated URLs (e.g. www.example.com/product-category?page=1), a single rule can cover all of them. More on this at robots.txt for SEOs.

In contrast to the above solution via “noindex, follow”, this tells the crawler not to request the paginated URLs at all. When you disallow the paginated content, you must make sure that no internal links point to the respective pages. Disallowing the paginated content means that Googlebot will not see its content, including the links on those pages.
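To see which URLs a single Disallow rule would cover, the pattern matching can be approximated in a few lines of Python. This is a simplified sketch: it only handles the `*` wildcard and a trailing `$`, ignores Allow rules and rule precedence, and the rule `/*?page=` is a hypothetical example derived from the URL pattern mentioned above.

```python
import re


def rule_to_regex(rule):
    """Translate a Disallow pattern with '*' and a trailing '$' into a regex."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape everything literally, then let each '*' match any characters.
    body = ".*".join(re.escape(part) for part in rule.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_disallowed(path_and_query, disallow_rules):
    """True if any Disallow pattern matches from the start of the path."""
    return any(rule_to_regex(rule).match(path_and_query) for rule in disallow_rules)


RULES = ["/*?page="]  # hypothetical rule for URLs like /product-category?page=2

print(is_disallowed("/product-category?page=2", RULES))             # True
print(is_disallowed("/product-category", RULES))                    # False
print(is_disallowed("/product-category?sort=price&page=2", RULES))  # False
```

The last call shows a typical gap: when the page parameter is not the first query parameter, this rule no longer matches, so a second pattern such as `/*&page=` would be needed.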

Method 6: Divide Content Into Fine-grained Categories for Pagination

In order to avoid pagination right from the start, it makes sense to break down the products into many smaller categories. Provided that the keyword mapping is correct, the categories then target keywords very well. However, the click depth increases, which is beneficial neither for visitors nor for SEO. In addition, this approach is very labor-intensive if the product portfolio is large, and categories can easily end up empty and orphaned over time.

Due to the high potential for additional rankings and the low short-term susceptibility to errors, as well as the fact that this measure can largely be carried out without developer support, this variant is often recommended. A solid taxonomy also helps in creating more granular, fine-grained categories.

Method 7: Deindexing Paginated Content with Google Search Console for Pagination SEO

Deindexing paginated content via Google Search Console’s URL Removal Tool is meant to clean index bloat out of Google’s search engine results pages. Like all of the pagination SEO methods here, it has positives and negatives in terms of communication with the search engine and SEO performance.

If the noindex tag is used for paginated content, Googlebot will eventually stop crawling these pages, rendering their JavaScript, and giving weight to their internal links. And if the paginated pages do not use a self-referencing canonical, they won’t be indexed, and the content and links on these pages won’t carry any weight.

But Daniel Heredia Mejias has another idea. According to him, an SEO can keep the self-referencing canonical, the link rel “next” and “prev” attributes, and the “index” signals on the paginated pages while removing those pages from Google’s index with the URL Removal Tool. That way, a paginated page might still be crawled and rendered by Googlebot, and all of its internal links and content can be seen and weighted by Google’s algorithms, while index bloat is avoided.

- In theory, this might work. But if a website removes its content via the Google Search Console URL Removal Tool while the paginated content still has lots of internal links and index-allowing, self-canonicalizing signals (including sitemaps), Google might not remove the pages from its SERP.

- Or, even if Google removes the paginated content from the SERP via the URL Removal Tool, it can add it back after a short time.

- In another situation, Google might see a mixed signal if a web page says “remove this page” manually but “index this page” algorithmically. This might be seen as a trust-harming situation.

- Lastly, Google might stop crawling and rendering the paginated URLs removed via the Google Search Console (GSC) URL Removal Tool, in which case the self-canonicalizing and indexing signals would lose their meaning.

Google might also do all of these things at the same time, repeatedly.

Since this is the most unusual approach to pagination SEO, proposed by Daniel Heredia Mejias, I have not yet performed any experiment for method 7. In the future, we will update this section with actual results.

Note: To remove multiple pages from Google Search Console’s URL Removal Tool, you can use the “Remove all URLs with this prefix” option. In this case, to remove all paginated content via GSC URL Removal Tool, the pagination URL parameter or prefix should be used.

Obsolete method: rel="next" / "prev"

For years, rel="next" / "prev" was recommended by Google. In spring 2019, however, Google said that it no longer uses these attributes.

How did rel="next" / "prev" work? Specifying rel="next" or rel="prev" in the respective link element explains the strong connection between the URLs to the search engine. The link elements are placed in the <head> area of the page. Page 1 links to page 2:

<link rel="next" href="http://www.example.com/Artikel-Teil2.html" />

Page 2 links back to page 1:

<link rel="prev" href="http://www.example.com/Artikel-Teil1.html" />

Conclusion: rel="next" and rel="prev" no longer have to be used. Websites that currently use them do not need to take action and can leave them in place. Bing, for example, still uses rel="next" / "prev" to understand the structure of a site and to discover pages.

Pagination SEO Methods from Online Stores

So there are numerous options, all of which have certain advantages and disadvantages. To determine what is possibly the best method, we have selected the top 10 online shops in 2013, according to Statista.

| Domain | SISTRIX Visibility | Method |
|---|---|---|
| Amazon.de | 2,119.59 | No |
| Otto.de | 216.88 | Noindex from page 2 |
| Zalando.de | 164.14 | Noindex from page 2 |
| Notebooksbilliger.de | 48.13 | Noindex from page 2 |
| Mediamarkt.de | 97.20 | rel="next" / "prev" |
| Lidl.de | 56.48 | rel="next" / "prev" |
| Bonprix.de | 38.46 | rel="next" / "prev" |
| Cyberport.de | 24.02 | rel="next" / "prev" |
| Conrad.de | 66.98 | Canonical on the first page ** |
| Alternate.de | 31.45 | Canonical on the first page |

In only one of the ten shops examined (Amazon.de) could no specific SEO treatment of pagination be determined. According to the table, three of the other online shops set all pages except the first one to “noindex”. Four shops continue to use rel="next" and rel="prev". The remaining two refer to the first page via canonical. Incidentally, a striking finding of this observation was that logarithmic pagination is apparently hardly used, even in large shops.

In light of this information, below you will find some basic pagination types for SEO.

Basic Pagination Type for SEO

This type always links the first page, the previous page, and the next page. The customer has to make a lot of clicks to reach the last page. As you can see in the picture, Amazon uses this variant. The last page is grayed out there and cannot be clicked.

First and Last Pages for Pagination

This pagination always shows the first five pagination pages. Here, too, the user needs a lot of clicks to get to the last page. None of the big shops used this method.

Neighbour Numbers for Pagination

This type always shows the first pagination page and the five neighbors to the current page.

Previous and Next Pages for SEO

We noticed a rather rare type of navigation in the Zalando shop. Only the previous and the next page are linked here. In contrast to the first basic pagination, the first page is not linked.

With logarithmic page navigation, pages are linked at intervals rather than one by one. The distance from the current page to each linked page increases and decreases at a certain logarithmic interval, so the paginated pages become more easily accessible to Googlebot and other search engine crawlers.
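To make the difference between the sparsest and the densest of these pagination types concrete, here is a sketch of the page numbers each would link from a given page. The function names are hypothetical, and the doubling step (1, 2, 4, 8, ...) is one assumed way of choosing logarithmic intervals, not a fixed standard.

```python
def previous_next_links(current, total):
    """Only the previous and the next page are linked."""
    return [p for p in (current - 1, current + 1) if 1 <= p <= total]


def logarithmic_links(current, total):
    """First, last, and pages at distances 1, 2, 4, 8, ... from the current page."""
    pages = {1, total}
    step = 1
    while step < total:
        pages.update({current - step, current + step})
        step *= 2  # assumed doubling interval
    return sorted(p for p in pages if 1 <= p <= total and p != current)


print(previous_next_links(50, 200))
# [49, 51]
print(logarithmic_links(50, 200))
# [1, 18, 34, 42, 46, 48, 49, 51, 52, 54, 58, 66, 82, 114, 178, 200]
```

With 200 pages, the logarithmic variant shows 16 links on page 50 instead of 2, but every other page is then only a few clicks away.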

Integration of parameters as part of the pagination

A practicable solution using canonicals is the use of additional parameters. A parameter with the relevant page number is attached to the actual page.

Examples for using parameters for pagination.

- https://www.example.com/category-x/ -> main page

- https://www.example.com/category-x?page=2 -> page 2, including parameters

Attaching parameters makes the paginated pages much easier to control. This used to be possible with the parameter handling in Google Search Console (a tool Google retired in 2022) and still works via the Disallow directive in robots.txt: the category sub-pages can easily be excluded, if that’s what you want. When choosing the pagination parameter for categories, name it in the language of the website, make sure it is understandable for search engines, and decide whether the resulting URLs should be indexed or not.
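A consistent URL scheme is easiest to keep when all pagination URLs come from one helper. The following is a minimal sketch using Python's standard `urllib.parse`; the function name `page_url` and the parameter name `page` are assumptions, and page 1 is assumed to map to the URL without a page parameter, as in the example above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_url(category_url, page, page_param="page"):
    """Return the URL of a component page, keeping other query parameters."""
    parts = urlsplit(category_url)
    # Drop any existing page parameter so it is never duplicated.
    query = [(k, v) for k, v in parse_qsl(parts.query) if k != page_param]
    if page > 1:
        query.append((page_param, str(page)))
    return urlunsplit(parts._replace(query=urlencode(query)))


print(page_url("https://www.example.com/category-x/", 1))
# https://www.example.com/category-x/
print(page_url("https://www.example.com/category-x/", 2))
# https://www.example.com/category-x/?page=2
```

Because page 1 never receives `?page=1`, the first page exists under exactly one URL, which avoids a duplicate of the main category page.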

Last Thoughts on Pagination and Holistic SEO

Pagination is one of the fundamental issues for SEO. When implementing pagination, it is important that page components are not repeated, that paginated pages do not contain duplicate or thin content, and that each paginated page has a self-referencing canonical, is not marked with noindex, and is included in the sitemap. Pagination types and models also affect a website’s PageRank distribution and crawl efficiency.

We have examined the different ideas and opinions within the SEO community alongside the official statements and guides of the search engines, and we have conveyed the good and bad aspects of these ideas impartially. In light of all this information, a Holistic SEO should know all these views and concepts with their good and bad sides and use the most appropriate one for the SEO project.

As new information emerges, our Pagination SEO Guideline will be updated.
