If there is one rule of computing, it is that it gets increasingly expensive and difficult to scale up performance within a single device or a single server node. And if there is another rule, it is that companies would rather throw hardware at a problem than rewrite their software.

This is the main reason why Arm processors like those in many of our tablets and smartphones have not taken over the datacenter, even a decade after the debate over wimpy versus brawny server nodes started off in earnest, and it is also why the Arm server chips that are contenders in the datacenter really don’t look that different from the Xeon, Epyc, and Power processors already there. The performance and price/performance of circuits scale with Moore’s Law improvements, so they get less expensive per unit of performance over time at a pretty nice clip even if it is slowing. But the people who write software get more expensive each year, at the rate of inflation as a baseline and even faster when you consider that there are so many companies chasing that programming talent.

But hope springs eternal in this IT world, and the microserver based on relatively wimpy processors and a minimalist design could get some play as compute becomes more disaggregated from storage and networks get flatter and faster.

We were reminded of our own enthusiasm for microservers and wimpy machines – servers usually based on a single processor that was designed more for a desktop or workstation or embedded controller than a traditional server – by the recent launch of very tiny server nodes in a very compact form factor by Arnouse Digital Device Corp. The company has created dense chassis crammed with single-node computers the size of a credit card. These were originally used as racks of PCs, but are now being tweaked for use as rack-mounted wimpy server nodes by the US military (the Air Force is kicking the tires, but the Army and the Navy have deployed them) and by hyperscalers, according to Michael Arnouse, chief executive officer and founder of the hardware company that bears his name.

We were on board with lean, mean, green machines back in the early 2000s, about the same time that Sun Microsystems was pushing AMD’s Opteron processors hard and talking a lot about the SWAP – space, watts, and performance – vectors of its Sunfire systems. We even went so far as to create an open source hardware project called Hardware Foundry – before Facebook did the Open Compute Project – that shared the microserver designs based on MiniITX boards and X86 clone processors. The need to make a living got in the way of that project, but we did put microservers into production for one of our websites and learned a lot about their limitations and benefits in the process. When you run a baby datacenter in a New York City apartment during a recession or two, you can’t help but learn a few things because staying in business was dependent on it.

The approach that Arnouse is taking peddling wimpy servers is not that much different from the BlueGene/Q massively parallel supercomputer, which was a couple of hundred cabinets of PowerPC A2 microservers linked by an optical 5D torus interconnect, or even the original Atom-based SeaMicro servers that eventually found a home inside of AMD. The X86 chip maker was not able to make a business out of microservers. Despite AMD having plenty of modestly powered and very energy efficient processors of its own, plus the “Freedom” torus interconnect that SeaMicro created to virtualize compute, storage, and networking, this microserver business did not take off.

The projections for sales of microservers five years ago, when the idea was really being tested out in the market, were very optimistic, and Facebook was at the front of the pack with its “Group Hug” microservers based on X86 and Arm processors and with a minimalist enclosure and midplane to link them all to each other and to the outside world. Looking back now, the projections were a bit on the ambitious side. IHS iSuppli Research, which was tracking microserver sales at the time, had a precise definition of what one was: a single board computer with a processor that has a power consumption of 45 watts or less. Based on that definition, IHS iSuppli reckoned that there were 19,000 microserver nodes sold in 2011, and projected that, with triple digit growth over a few years and 50 percent growth in the years after that, microservers would grow to be around 10 percent of the market, with 1.23 million units shipped by 2016.

As far as we know, that did not happen. (Smile.) Facebook did end up using the Group Hug microserver board, but only as an object storage controller within a super-dense disk array; it did not move an appreciable portion of its compute to the Group Hug machines. Facebook did reprise the microserver with the “Yosemite” systems, which sported beefier single socket server nodes and acceptably fast shared networking. These machines could perhaps have been called milliservers, and they are part of the Facebook server fleet.

But what Arnouse is talking about – and starting to get some traction in selling – is really a microserver, and the Moore’s Law improvements in compute, storage, and networking capacity compared to a decade or more ago mean that a server node the size of a credit card has a lot more oomph and is perhaps suitable for many workloads in the datacenter. And here is the thing that is different this time around: massive volumes of data mean that companies have to do more computing at the edge, where low cost and thermal efficiency are more important than single thread performance.

ADDC has a couple of different generations of machines. The SR-10 is an enclosure that holds ten of the microservers, and it looks like this:

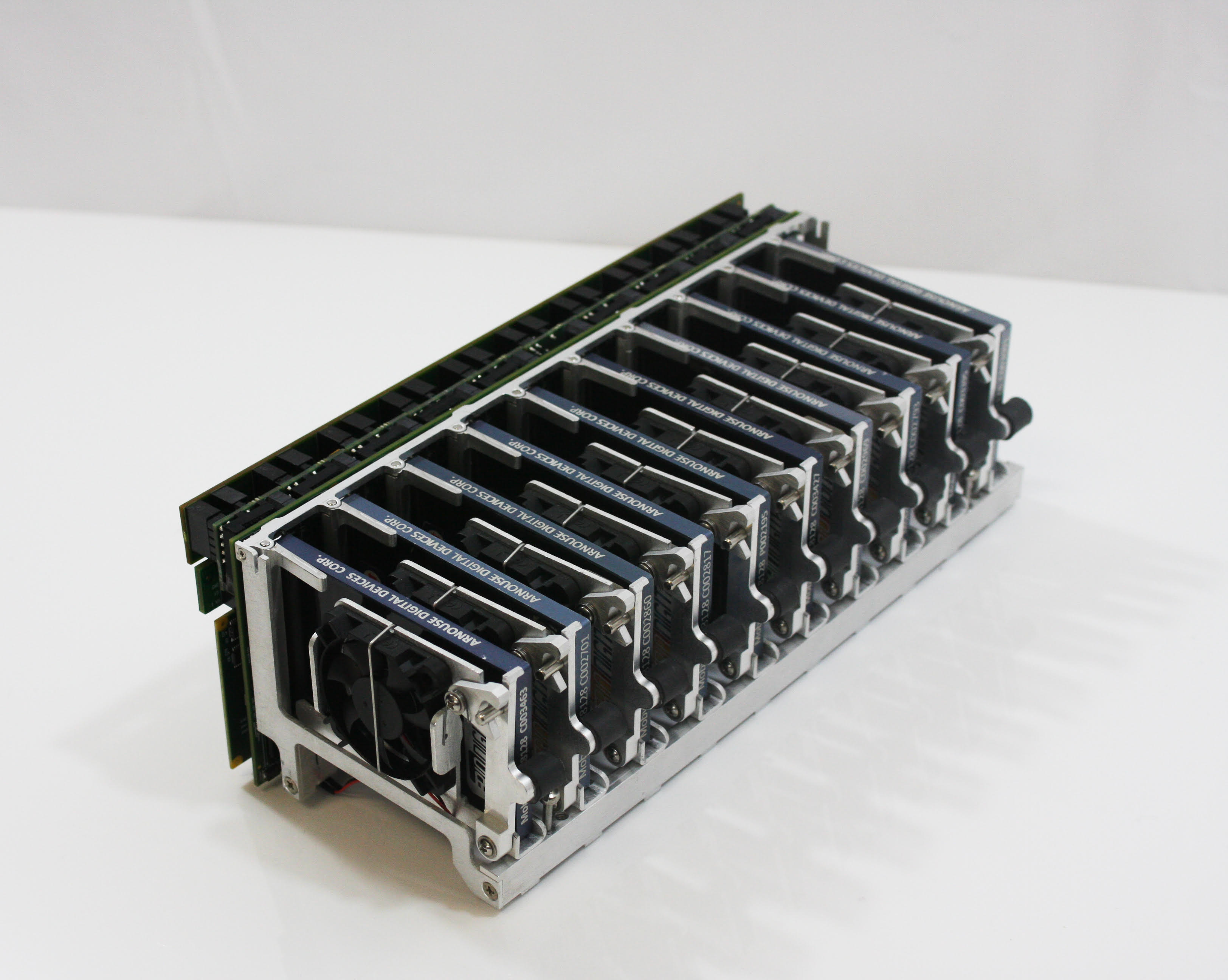

The SR-60 crams six of these sleds into a single enclosure, for a total of 60 machines in a 2U form factor:

The company even has an SR-90 enclosure, announced back in July and sold in volume to an unnamed hyperscaler – yes, one of the Super Eight or Magnificent Seven – for an undisclosed workload, which crams 90 server nodes in a very deep 2U enclosure. Here is the SR-90:

Arnouse has been selling card-based PCs since 2006, and decided in 2016 to rack up the devices and network them together to create what is in essence a cluster in a box to attack the datacenter. The PC 7 and PC 8 compute cards were based on AMD Athlon processors, and with the PC 9 cards from a year ago, the company shifted to an embedded version of the Xeon E3 processor with an 8 GB memory footprint. The PC 10 card, which is the current model, has a “Skylake” Core i7-7600U processor with four cores running at 2.8 GHz with turbo speed up to 3.9 GHz. Each node has anywhere from 2 GB to 16 GB of DDR4 main memory and up to 128 GB of flash storage. The impending PC 11 card has a quad core “Kaby Lake” Core i7-8650 that runs at 4.2 GHz and provides about 30 percent better thread performance per watt than a stock “Skylake” Xeon SP Gold processor, which has more cores and NUMA scalability to boot, but which also clocks much slower and runs a lot hotter. The point is, the compute density per rack is a lot higher with 60 or 90 servers using these quad-core chips than it is using a pair of Xeon SP chips with 12 or 18 cores in the same 2U form factor. This PC 11 compute card will also have a 16 GB memory footprint, plus 128 GB of flash, max.

The PC 10 and PC 11 nodes can run Windows Server 2008, Windows Server 2012, and Windows Server 2016 as well as Red Hat Enterprise Linux, SUSE Linux Enterprise Server, and Canonical Ubuntu Server.

The SR-60 has an integrated Ethernet switch that has 3 Gb/sec downlinks to the server nodes and a pair of 10 Gb/sec uplinks to the network for each ten-pack of nodes, which works out to 120 Gb/sec coming out of the enclosure; the SR-90 will deliver 180 Gb/sec coming out of its enclosure. That may not sound like all that much networking, but let’s do some math. If you take a pair of top bin Skylake Xeon SP chips with 28 cores each and put a 100 Gb/sec Ethernet card in the machine, that is 1.8 Gb/sec per core; if you use 25 Gb/sec or 50 Gb/sec downlinks, as is common in hyperscale datacenters, that works out to 450 Mb/sec to 890 Mb/sec of bandwidth per core. (We are rounding a little here.) Step down the core count to something like a more traditional pair of 18-core Xeon SPs with a 25 Gb/sec downlink, and you are at just under 700 Mb/sec per core. With a 3 Gb/sec downlink feeding four cores, each node in the SR-60 is delivering 750 Mb/sec per core. And the cores are pretty hefty. The issue with these card-sized server nodes is really that the memory footprint is kinda small on the PC 10 and PC 11 compute cards. But ADDC could fix that by shifting to an embedded AMD Epyc or Cavium ThunderX2 processor, neither of which has the caps that Intel artificially puts on memory footprints with the Core i7 or Xeon E3 processors.
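For readers who want to check the bandwidth math above, here is a minimal Python sketch. It assumes four cores per compute card and two sockets per Xeon SP machine, which is what the quoted figures imply; the function and variable names are ours, purely for illustration.

```python
# Per-core network bandwidth, using the link speeds and core counts quoted
# in the text. Names are illustrative; four cores per compute card and two
# sockets per Xeon SP server are assumptions implied by the article's figures.

def mbps_per_core(link_gbps: float, cores: int) -> float:
    """Bandwidth per core in Mb/sec when `cores` cores share one link."""
    return link_gbps * 1000 / cores

def enclosure_uplink_gbps(nodes: int) -> int:
    """Uplink capacity: a pair of 10 Gb/sec uplinks per ten-pack of nodes."""
    return (nodes // 10) * 2 * 10

configs = {
    "2 x 28-core Xeon SP, 100 Gb/sec": (100, 56),
    "2 x 28-core Xeon SP, 50 Gb/sec": (50, 56),
    "2 x 28-core Xeon SP, 25 Gb/sec": (25, 56),
    "2 x 18-core Xeon SP, 25 Gb/sec": (25, 36),
    "Compute card, 3 Gb/sec downlink": (3, 4),
}

for name, (gbps, cores) in configs.items():
    print(f"{name}: {mbps_per_core(gbps, cores):.0f} Mb/sec per core")

for nodes in (60, 90):
    print(f"SR-{nodes} uplinks: {enclosure_uplink_gbps(nodes)} Gb/sec")
```

One thing the sketch makes visible: the 750 Mb/sec figure is the downlink per core. Divide the SR-60’s 120 Gb/sec of uplink capacity across its 240 cores and you get 500 Mb/sec per core leaving the enclosure, so the comparison is tighter if most of the traffic heads out of the box.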

There is always a caveat with microservers. They only work in situations where companies need a fairly static and modest compute and storage footprint that will not need to scale up, as is possible in virtualized or containerized environments where there is a big chunk of raw compute, memory, storage, and networking that is sliced up, or in situations where the application is inherently parallel and therefore distributable across many modestly powered nodes. The wimpy node needs a processor with decent clock speed and memory bandwidth, but these Fast Arrays of Wimpy Nodes, as they were proposed by David Andersen at Carnegie Mellon University, can be useful provided the software can be compressed into these footprints.

There are limits, as Urs Hölzle, a Google Fellow and senior vice president of technical infrastructure at the search engine giant and public cloud provider, famously pointed out in the aftermath of the FAWN paper with his own thoughts. Because of latency issues and the desire to not gut code to make use of slower, more parallel arrays of compute, Hölzle concluded that wimpy cores (and therefore wimpy nodes made up of processors with a bunch of them) can only beat brawny cores (and therefore systems that deploy lots of them) when the speed of the wimpy core is “reasonably close” to that of the midrange brawny cores.

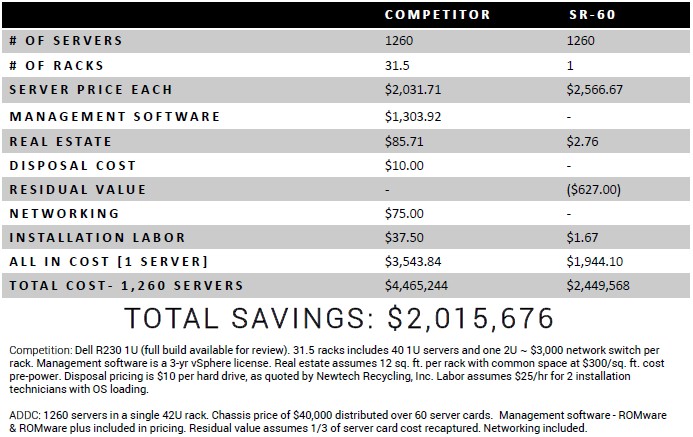

The same SWAP arguments hold up here in 2018 as they did back in the early 2000s, when the wimpy versus brawny argument really got going but we didn’t use that language as yet. Here is a node to node comparison that Arnouse cooked up, pitting 1,260 of its PC 10 servers in a rack of 21 of its SR-60 modules against 1,260 of Dell’s PowerEdge R230 servers:

The node to node comparison has the PowerEdge R230s equipped with four-core 2.7 GHz E3-1240 v6 processors and 16 GB of main memory and 2 TB 7.2K RPM disk drives and 1 Gb/sec Ethernet adapters. The SR-60 chassis costs $40,000 per loaded enclosure, by the way. It might be equally appropriate to compare a more mainstream PowerEdge server using a single Skylake Xeon D processor or a pair of Skylake Xeon SP processors with 18 cores each. That would bring the compute density back to something akin to normal in the datacenter on the PowerEdge side. But it may not change the aggregate performance profile of the two types of clusters – PowerEdge versus SR-60 – by that much. It only takes 140 two-socket PowerEdge nodes to deliver the 5,040 cores, and keeping the memory the same at 4 GB per core would yield a DDR4 memory footprint of 20 TB, or 144 GB per machine.
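The consolidation arithmetic in the paragraph above can be reproduced with a short Python sketch. It assumes four cores per PC 10 node and two 18-core sockets per PowerEdge, as the figures in the text imply; the variable names are ours.

```python
# Consolidation math: how many two-socket Xeon SP servers match the core
# count of a rack of SR-60 enclosures. Four cores per microserver node and
# 36 cores per PowerEdge are assumptions implied by the article's figures.

ENCLOSURES_PER_RACK = 21
NODES_PER_ENCLOSURE = 60
CORES_PER_MICROSERVER = 4
CORES_PER_POWEREDGE = 2 * 18
MEMORY_GB_PER_CORE = 4

microserver_nodes = ENCLOSURES_PER_RACK * NODES_PER_ENCLOSURE
total_cores = microserver_nodes * CORES_PER_MICROSERVER
poweredge_nodes = total_cores // CORES_PER_POWEREDGE
memory_gb_per_poweredge = CORES_PER_POWEREDGE * MEMORY_GB_PER_CORE
total_memory_tb = poweredge_nodes * memory_gb_per_poweredge / 1000

print(f"Microserver nodes: {microserver_nodes:,}")
print(f"Total cores:       {total_cores:,}")
print(f"PowerEdge nodes:   {poweredge_nodes}")
print(f"Memory per node:   {memory_gb_per_poweredge} GB")
print(f"Total memory:      {total_memory_tb:.2f} TB")
```

Swap in your own core counts and memory per core to see how the node count moves for a different brawny server.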

The issue is normalizing performance. The Skylake Xeon SPs with more cores per socket run a lot slower, but they have shared memory footprints that are a lot larger. And for some applications, particularly those that are memory capacity or memory bandwidth bound, this matters more than clock speed. A PowerEdge R640 with a pair of Xeon SP-6140 Gold processors with 18 cores each running at 2.3 GHz, 160 GB of memory, and 1.6 TB of flash costs $14,341 at Dell today, and 140 of them will run you just a hair over $2 million. That is a half million bucks less than the Dell servers shown above for the same cores and memory. If all of the other costs are proportional, then there should be a roughly 9X contraction in costs for management and a 4.7X contraction in space. The microserver still has advantages. But the gap may not be as large as this table implies.
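Here is a rough Python sketch of the pricing math above, using only the list price and node counts quoted in the text. The names are ours, and as with any list price comparison, volume discounts would change the answer.

```python
# Cluster cost at list price for the PowerEdge R640 configuration quoted
# in the text, plus the node-count ratio behind the management contraction.
# Names are illustrative; prices are the article's, not a current quote.

R640_LIST_PRICE_USD = 14_341
R640_NODES = 140
MICROSERVER_NODES = 1_260
TOTAL_CORES = 5_040

r640_cluster_cost = R640_LIST_PRICE_USD * R640_NODES
cost_per_core = r640_cluster_cost / TOTAL_CORES
node_ratio = MICROSERVER_NODES / R640_NODES

print(f"R640 cluster cost: ${r640_cluster_cost:,}")
print(f"Cost per core:     ${cost_per_core:,.2f}")
print(f"Node-count ratio:  {node_ratio:.0f}X")
```

The 9X management contraction falls straight out of the node counts. The space contraction depends on enclosure heights that are not spelled out in the text, so it is left out of the sketch.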

The point is, do your own math with your own workloads, keep an open mind about architectures, and check back in from time to time to see how things might have changed.

It’s interesting, but not really a revolutionary take on clustered computing. It’s, after all, an x86 server much like anything you could rack about 10 years ago at a price that’s not that attractive. Having lots of nodes is a good configuration for throughput, but anything on shared data will be a nightmare of RDMA, assuming it’s even feasible. The hardware design comes from the misguided idea of putting the whole computer on a card when all you care about is the persistent state that would *very* comfortably fit on the card and allow a much less constrained dock. It’s useful, but for *VERY* specific scenarios.

So, unless they pack A LOT of network bandwidth and Optane-based persistent storage, I’m not that much impressed.

Some price numbers in the table above look very strange:

– no residual value for commodity Dell servers and at the same time high residual value for unknown brand with non-standard CPU in a highly proprietary form factor

– ridiculous price of management software (what is it based on – list prices?).

Also, large Xeon or EPYC servers in the qtys discussed above will be priced MUCH lower than retail (i.e. while 1 Dell server may cost $14.3K 140 of those servers will most likely be priced well below 1.5M$). Upcoming 2×48-core and 2×64-core Zen2 servers are likely to reverse any price/performance advantage PC11-based servers may have now.

Also, PC10 and PC11 couldn’t even be considered servers (micro or whatever) due to a lack of ECC RAM.

Those really are notebook motherboards marked up 300%-400% or so.

Why anyone would buy that is rather mysterious.

And one doesn’t even have to wait until Zen2 CPUs are available to get comparable density and much better price/performance. For example, by using 5 Supermicro 8U blade enclosures each with 20 2-CPU blades (each blade with dual Xeon 6138 125W 20 cores/12x16GB RAM/4x 400 or 480GB NVMe/2×25+2×10 GbE) or with 10 4-CPU blades (each blade with quad Xeon 6138 125W 20 cores/24x16GB RAM/8x 400 or 480 GB NVMe/1×100 EDR+2×10 GbE) per rack for under 1M$ total (for 4,000 server cores with 2.7GHz sustained on all cores) vs claimed 2.45M$ for 5,040 notebook cores (no ECC and unknown sustained speed) – i.e. you get much better price/performance, more hardware (RAM/disk/network speed), and an expandable (RAM and SSD) and easier to manage cluster.

Hi Igor,

You make some great points here, but it’s worth noting that this article and your comments are aimed predominantly at the enterprise space. This technology and its application really come into their own when you want to push compute improvements to environments that are not conducive to a standard rack server deployment.

What is also missing here is the ease with which these cards can be rapidly deployed or upgraded in pre-installed chassis. The ability to quickly upgrade cards within the SR racks means that already congested rack environments do not require new rack space allocations or time to remove old systems and replace them with new ones. This eliminates time, cost and risk in the upgrade process.

Complete disclosure here, I am the exclusive re-seller for Canada, the EU, Iceland, Norway and Switzerland for ADDC products. I have sold this technology to military users in these territories, in both off the peg and bespoke formats.

If you would like to have a more in-depth technical discussion about this technology and its real world applications, I would be happy to have that conversation.