The controversial tech used to predict problems before they happen

Thursday 3 October 2019 11:50, UK

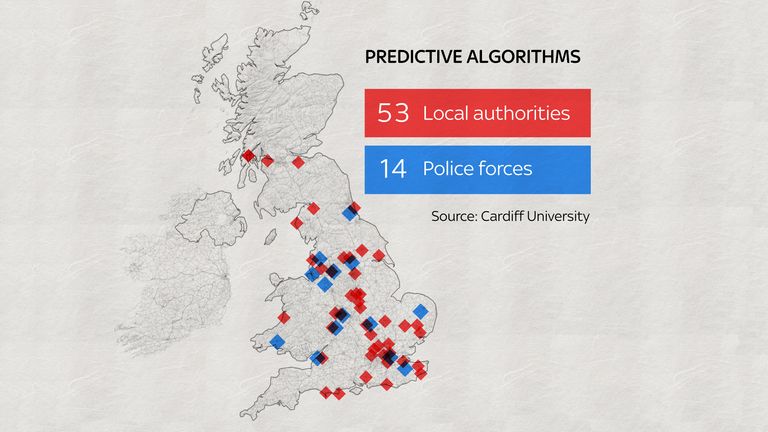

At least 53 councils are using computer models to detect problems before they happen, according to new research by Cardiff University and Sky News, which shows the scale of the controversial technology in UK public services.

So-called predictive algorithms are being used by councils for everything from traffic management to benefits sanctions.


The real figures could be much higher, as not every local authority responded fully to freedom of information requests.

Almost a third of the UK's 45 police forces are also using predictive algorithms.


Fourteen forces, including the UK's largest force, the Metropolitan Police, are using them for tasks such as providing guidance on which crimes should be investigated.

Advocates say these algorithms mean problems can be predicted and therefore prevented.

Critics say a lack of oversight means it is not clear exactly how the extensive personal data needed to build the algorithms is being used.


One local authority pioneering the use of predictive algorithms is Bristol City Council.

Its Bristol Integrated Analytics Hub takes in data on 54,000 local families, covering areas such as benefits, school attendance, crime, homelessness, teenage pregnancy and mental health, to predict which children could suffer domestic violence or sexual abuse, or go missing.

Gary Davies, head of early intervention and targeted services at Bristol City Council, told Sky News that the system works by comparing the data of at-risk children with that of children who have gone missing several times.

Each child is then given a score out of 100 and marked as high, medium or low risk, a designation used to flag cases to social workers for intervention.
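The council's description stops short of the model's internals. As a rough illustration only, the Python sketch below shows one simple way a comparison-based score of this kind could work: each indicator is weighted by how common it is among a reference group of children who went missing repeatedly, a child is scored out of 100 by how much of that weighted profile they match, and the score is banded. The feature names, the weighting scheme and the 70/40 band cut-offs are all hypothetical assumptions, not Bristol's actual method.

```python
# Hypothetical sketch: the real Bristol model, its inputs and thresholds are not public here.

# Assumed binary indicators (1 = present), loosely based on the kinds of data the hub ingests.
FEATURES = ["school_absence", "homelessness", "police_contact", "mental_health_referral"]


def feature_weights(reference_group: list[dict[str, int]]) -> dict[str, float]:
    """Weight each feature by how common it is among children who went missing repeatedly."""
    n = len(reference_group)
    return {f: sum(child[f] for child in reference_group) / n for f in FEATURES}


def risk_score(child: dict[str, int], weights: dict[str, float]) -> int:
    """Score 0-100: the weighted share of the reference profile this child matches."""
    total = sum(weights.values())
    if total == 0:
        return 0
    matched = sum(weights[f] for f in FEATURES if child[f])
    return round(100 * matched / total)


def risk_band(score: int) -> str:
    """Map a score to a band; the 70/40 cut-offs are assumptions for illustration."""
    if score >= 70:
        return "high"
    if score >= 40:
        return "medium"
    return "low"


if __name__ == "__main__":
    # Invented reference group of children who have gone missing several times.
    missing_often = [
        {"school_absence": 1, "homelessness": 1, "police_contact": 1, "mental_health_referral": 0},
        {"school_absence": 1, "homelessness": 0, "police_contact": 1, "mental_health_referral": 1},
    ]
    weights = feature_weights(missing_often)
    child = {"school_absence": 1, "homelessness": 0, "police_contact": 1, "mental_health_referral": 0}
    score = risk_score(child, weights)
    print(score, risk_band(score))  # prints "67 medium"
```

In this toy example the band only flags a case for a human to look at; nothing in the code decides what happens to the child, which mirrors the distinction Mr Davies draws below.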

The system works in the same way as the recommendation engines of Silicon Valley tech giants.

Amazon uses a predictive algorithm to suggest items to buy, based on a customer's previous purchases and viewing history. Facebook's suggested friends feature predicts who users might know but aren't connected with online.

Comparing the Bristol algorithm to Google's similar personalised search function, Mr Davies made it clear that the computer was not deciding which children would receive social care.

"What it isn't doing is saying computer says yes or no," he told Sky News. "It's not making decisions about you or your life."

Other public services echoed this view. Kent Police, which recently introduced a predictive algorithm to help decide which cases to follow up with further investigation, told Sky News that the system was only used to advise police officers.

The algorithm, called EBIT, is used to analyse a third of all crime in Kent.

Before Kent Police began using EBIT, it pursued around 75% of cases. Now it investigates 40%, although Kent Police stresses that the tool is 98% accurate.

However, data protection campaigners questioned whether, in practice, council workers would be able to ignore the recommendation of an officially sanctioned algorithm.

Jen Persson, founder of privacy non-profit defenddigitalme, said that algorithmic systems were extremely influential, even if they did not take the actual decision.

"People have a tendency to trust the computer," Ms Persson said. "Whilst it's advertised as being able to help you make a decision, in reality it replaces the human decision. You have that faith in the computer that it will always be right."

Joanna Redden, co-director of Cardiff University's Data Justice Lab, which conducted the study, warned that there was hardly any oversight in this area.

"Some of these systems come with a range of risks and can harm individuals but also society more generally by increasing unfairness and inequality.

"Despite this, these systems are being introduced without public consultation and without efforts to measure what the effects of these systems might be on those who get caught up in them.

"At a most basic level government bodies should be providing lists of where these systems are being introduced, how they are being used and how citizen data is being shared."

In 2018, a report by the Information Commissioner's Office into the Metropolitan Police's Gangs Matrix, which used a predictive algorithm to flag suspected gang members according to their perceived danger, found "serious" problems with the force's use of data.

The Metropolitan Police told Sky News that it "does not believe that the Gangs Matrix directly discriminates against any community and that it reflects the disproportionality of violent offenders and victims of violence that are also described in the report."