FPGA maker Xilinx has acquired Chinese deep learning chip startup DeePhi Tech for an undisclosed sum.

The Next Platform has been watching DeePhi closely over the last few years as it appeared to be building on two converging datacenter trends—FPGA acceleration and neural networks. Specifically, the startup was focused on using Xilinx FPGAs and its own custom software stack and pruning techniques for making inference extremely fast on reprogrammable silicon.

We first described the FPGA-based deep compression work DeePhi is known for following a chat in 2015 with one of the co-founders, Stanford’s Song Han. At that time he was still shopping his EIE compression technique to various hardware vendors. In fact, FPGAs did not enter the conversation until we caught up with DeePhi again in 2016. By this time, the company’s CEO, Song Yao, had a firm partnership with Xilinx in place and was vocal in saying that for dense, compressed neural networks, FPGAs would have an edge that CPUs, DSPs, and GPUs could not match for training and (as time went on) inference.

“FPGA-based deep learning accelerators meet most requirements,” Yao explained in 2016. “They have acceptable power and performance, they can support customized architecture and have high on-chip memory bandwidth and are very reliable.” The time-to-market proposition is less restrictive for FPGAs as well, since they are already produced. “It ‘simply’ becomes a challenge of programming them to meet the needs of fast-changing deep learning frameworks,” Yao said. “And while ASICs provide a truly targeted path for specific applications, FPGAs provide an equally solid platform for hardware and software co-design,” he added.

We talked to DeePhi yet again in 2017, by which point its deep-learning-on-FPGA strategy had evolved even further, warranting new investment and a certain focus on the inference side of the AI workload. Xilinx was one of the major investors in that funding round (undisclosed amount).

As we opined then, the Xilinx-DeePhi relationship goes beyond hardware and could give Xilinx a play in the inference market, which is still anyone’s game. As Xilinx distinguished engineer Ashish Sirasao told The Next Platform following the funding round, the hard-wired network pruning and compression techniques central to DeePhi’s approach have already been tuned for convolutional neural networks and long short-term memory (LSTM) models, but there is an increased drive toward meshing these two networks to do things like near real-time video translation and captioning. This inference job is computationally intensive, but Sirasao says DeePhi’s ability to do it in the 8-bit precision range across drastically reduced model sizes will be more important, and can be very competitive with CPUs and GPUs for this same dual-tasking.

“DeePhi has the technology to prune LSTM and convolutional neural networks in a multilayered way, making it possible to do image classification with natural language processing at the same time. We see a lot of momentum with people trying to merge these technologies and we want to make sure there is an absolutely optimized implementation of CNNs and LSTMs for these multilayer problems.” He adds that they have proven internally that going to 8-bit for these merged problems is where the efficiency is since the computation can be doubled. “This new wave in inference is what DeePhi is doing now; we are helping to create more proof points and engagements to drive research,” Sirasao explained.
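
To make the two ideas in play here concrete, the following is a minimal Python sketch of generic magnitude pruning followed by symmetric 8-bit quantization. It is not DeePhi’s implementation, which we have not seen; the function names, the `keep_fraction` parameter, and the sample weights are all hypothetical and chosen only for illustration.

```python
# Illustrative only: generic magnitude pruning and symmetric int8 quantization.
# All names and values here are assumptions, not DeePhi's actual method.

def prune_by_magnitude(weights, keep_fraction):
    """Keep the largest-magnitude weights and zero out the rest."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    keep = max(1, round(len(weights) * keep_fraction))
    # Rank positions by absolute value, largest first.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    kept = set(ranked[:keep])
    return [w if i in kept else 0.0 for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return [0] * len(weights), 1.0
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical weights for a single small layer.
weights = [0.9, -0.05, 0.02, -0.6, 0.01, 0.3, -0.004, 0.08]
pruned = prune_by_magnitude(weights, keep_fraction=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
```

In this toy case half of the weights become exact zeros that never need to be stored or multiplied, and the survivors fit in a single byte each, with a rounding error of at most half the scale factor.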

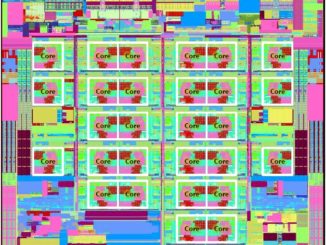

The articles linked here have far more detailed technical descriptions of the two platforms that spun out of the original work at Stanford by Song Han. These are the Aristotle and Descartes architectures. Aristotle is focused on video and image recognition tasks, but DeePhi contends the architecture is flexible and scalable for both servers and portable devices. Descartes is designed for compressed recurrent neural networks (RNNs), including LSTM. By taking advantage of sparsity, the DeePhi Descartes architecture can achieve over 2.5 TOPS on a KU060 FPGA at 300 MHz, allowing for very high performance speech recognition, natural language processing, and many other recognition tasks. For the curious, this is available on AWS on the FPGA-based F1 instances.

As Ravi Sunkavalli, senior director of IP engineering at Xilinx, added in 2017 post-funding, “if you have looked at the original Google TPU paper, one-third of the workloads they have are in the same area. Here also, the computations are very irregular. Some of the challenges DeePhi has addressed (and what FPGAs are good for) could transform this by allowing things like online deep compression while computing with special purpose logic.” He notes that when computations are irregular, DeePhi on an FPGA can take advantage of sparsity by doing custom sparse matrix multiplication techniques. “When parallelization is challenging in CPUs or GPUs with their notions of threads, they can get hard to use. With the DeePhi approach, there is a hardware load balancer, a custom memory data path with custom hardware and scheduling, so it is instead possible to use a parallelized pipeline architecture.” He says this is how DeePhi is proving its performance over CPU and GPU as well as energy efficiency.
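
For readers who want to see why sparse computation is irregular, here is a small Python sketch of a sparse matrix-vector multiply using the standard compressed sparse row (CSR) layout. This is a textbook software formulation, not DeePhi’s hardware data path or its load balancer; the helper names and the example matrix are hypothetical.

```python
# Illustrative only: textbook CSR sparse matrix-vector multiply.
# Helper names and data are assumptions, not DeePhi's design.

def to_csr(dense):
    """Convert a dense matrix (list of rows) to CSR arrays."""
    values, col_indices, row_ptr = [], [], [0]
    for row in dense:
        for col, value in enumerate(row):
            if value != 0:
                values.append(value)
                col_indices.append(col)
        # row_ptr[r + 1] marks where row r's non-zeros end.
        row_ptr.append(len(values))
    return values, col_indices, row_ptr


def csr_matvec(values, col_indices, row_ptr, x):
    """Multiply a CSR matrix by a dense vector, touching only non-zeros."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_indices[k]]
        y.append(acc)
    return y


# A pruned weight matrix in which each row keeps a different number of weights.
dense = [
    [0.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 3.0],
]
x = [1.0, 2.0, 3.0, 4.0]

values, col_indices, row_ptr = to_csr(dense)
y = csr_matvec(values, col_indices, row_ptr, x)
work_per_row = [row_ptr[r + 1] - row_ptr[r] for r in range(len(dense))]
```

Note that `work_per_row` differs from row to row. If each row were handed to its own fixed-width thread or lane, some would finish early and sit idle, which is the kind of imbalance a dedicated scheduler or load balancer is meant to smooth out.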

Following the acquisition this week, Salil Raje, executive vice president of the Software and IP Products Group at Xilinx, said, “We are thrilled to welcome DeePhi Tech to the Xilinx family and look forward to further building our leading engineering capabilities and enabling the adaptable and intelligent world. Talent and innovation are core to realizing our vision. Xilinx will continue to invest in DeePhi Tech to advance our shared goal of deploying accelerated machine learning applications in the cloud as well as at the edge.”

The DeePhi Tech team will continue to operate out of its offices in Beijing, adding to the more than 200 employees Xilinx has in China.
