Mind-Reading AI Optimizes Images Reconstructed from Your Brain Waves

The meme of mind-reading machines has been a recurring science-fiction staple for decades. Yet more than ever, that futuristic fantasy seems closer to reality, thanks to recent advances in the development of artificially intelligent, “deep” neural networks. Recent research has demonstrated how machines might be used to decode “blocks” of complex thoughts, reconstruct memories or even reconstruct videos as they are being watched in real time. But these reconstructions often have limitations: the images reproduced by these AI models might bear little resemblance to the original object, or the system might be constrained to identifying a predetermined set of objects.

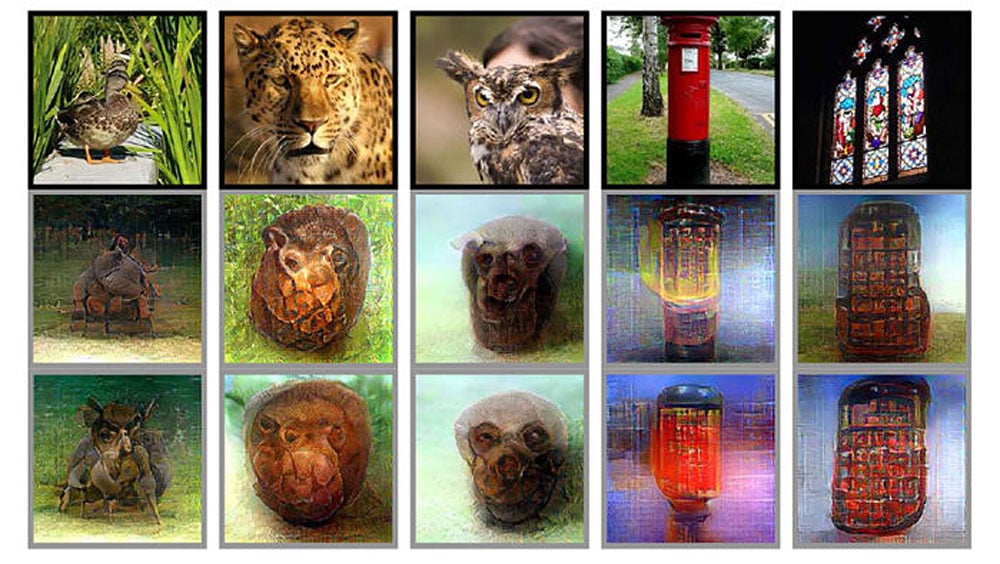

But these thought-decoding technologies are improving. A team of scientists based at Kyoto University in Japan has now developed a new method called “deep image reconstruction,” which uses a reconstruction algorithm capable of “decoding” a “hierarchy” of complex visual information, such as colors and shapes, from human brain activity. The team’s algorithm also optimizes the pixels of the decoded image so that it more closely resembles the actual object, working in combination with a multilayered deep neural network (DNN) that simulates the processes that occur when a human brain perceives an image. Watch this short video to see some of the experimental results:

In their paper published on bioRxiv, the researchers explain how their approach to this kind of AI-assisted translation of perceptual content better imitates the elaborate, hierarchical neural representations that are constructed in humans’ natural visual system.

“We have been studying methods to reconstruct or recreate an image a person is seeing just by looking at the person’s brain activity,” neuroscientist and paper senior author Yukiyasu Kamitani told CNBC. “Our previous method was to assume that an image consists of pixels or simple shapes. But it’s known that our brain processes visual information hierarchically extracting different levels of features or components of different complexities. These neural networks or AI model can be used as a proxy for the hierarchical structure of the human brain.”

The team used fMRI (functional magnetic resonance imaging) technology to gather brain activity data from subjects as they viewed real-life images of animals and other objects, as well as images of geometric shapes and letters. This raw data was then filtered through a deep neural network so that the decoding process would occur in a way that more closely matched what happens in the human brain when it perceives something.
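One way to picture the link between fMRI measurements and DNN features is a linear decoder that maps voxel patterns to feature values. The sketch below is only an illustration on synthetic data: the ridge regression, the array sizes and the function names `fit_ridge_decoder` and `decode_features` are assumptions made for this example, not details taken from the team's paper.

```python
import numpy as np

def fit_ridge_decoder(voxels, features, alpha=1.0):
    """Fit weights W so that voxels @ W approximates features (ridge regression)."""
    n_voxels = voxels.shape[1]
    # Closed-form ridge solution: (X^T X + alpha * I)^-1 X^T Y
    gram = voxels.T @ voxels + alpha * np.eye(n_voxels)
    return np.linalg.solve(gram, voxels.T @ features)

def decode_features(voxels, weights):
    """Predict DNN-style feature values from new voxel patterns."""
    return voxels @ weights

# Synthetic stand-in data (hypothetical sizes, not real fMRI recordings).
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, n_features = 200, 20, 50, 8
true_map = rng.normal(size=(n_voxels, n_features))

train_voxels = rng.normal(size=(n_train, n_voxels))
train_noise = 0.1 * rng.normal(size=(n_train, n_features))
train_features = train_voxels @ true_map + train_noise

test_voxels = rng.normal(size=(n_test, n_voxels))
test_features = test_voxels @ true_map

weights = fit_ridge_decoder(train_voxels, train_features, alpha=1.0)
decoded = decode_features(test_voxels, weights)

# Correlation between decoded and true features on held-out samples.
correlation = np.corrcoef(decoded.ravel(), test_features.ravel())[0, 1]
print(f"held-out correlation: {correlation:.3f}")
```

In this toy setting the decoder recovers the features almost perfectly because the synthetic data really is linear; real brain data would be far noisier.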

“We believe that a deep neural network is a good proxy for the brain’s hierarchical processing,” said Kamitani. “By using a DNN we can extract information from different levels of the brain’s visual system.”

This filtered data then acts as a template of sorts. To identify what image someone is seeing, the team then used a “decoder” — which is trained on fMRI data taken while subjects were viewing natural images — to repeatedly refine the decoded information further in hundreds of calculated passes (as seen in the video above), so that the image will come closer to the original, pixel by pixel.
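The repeated refinement described above can be pictured as gradient descent on the pixels themselves: start from a blank image and nudge it until its features match the decoded ones. The following is a minimal sketch under strong simplifying assumptions. A fixed random matrix (`feature_matrix`) stands in for the deep neural network, the image is a flat vector of 16 values, and the function name `reconstruct_image` is hypothetical.

```python
import numpy as np

def reconstruct_image(target_features, feature_matrix, n_steps=300):
    """Refine pixels by gradient descent so their features approach the target.

    feature_matrix is a linear stand-in for a DNN feature extractor.
    """
    n_pixels = feature_matrix.shape[1]
    image = np.zeros(n_pixels)  # start from a blank image
    # A step size of 1 / (largest singular value)^2 keeps the descent stable.
    step = 1.0 / np.linalg.norm(feature_matrix, 2) ** 2
    losses = []
    for _ in range(n_steps):
        residual = feature_matrix @ image - target_features
        losses.append(0.5 * float(residual @ residual))
        gradient = feature_matrix.T @ residual
        image -= step * gradient  # one refinement pass over all pixels
    return image, losses

# Synthetic example: a hidden "true" image and the features it produces.
rng = np.random.default_rng(1)
n_features, n_pixels = 64, 16
feature_matrix = rng.normal(size=(n_features, n_pixels))
true_image = rng.uniform(0.0, 1.0, size=n_pixels)
target_features = feature_matrix @ true_image

image, losses = reconstruct_image(target_features, feature_matrix)
print(f"first loss: {losses[0]:.3f}, last loss: {losses[-1]:.3e}")
```

With a real network the feature extractor is nonlinear and the gradient comes from backpropagation, but the loop has the same shape: compare features, compute a gradient, update the pixels.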

To create more realistic-looking images, the results were further enhanced with a deep generator network (DGN), an algorithm that better captures common dominant features (such as eyes, faces and textural patterns), which will then offer visual clues to what that particular object might be. With the help of a DGN to boost the image output, the team found that a neutral human evaluator could match the decoded image with the original 99 percent of the time.

The team also tested their system on perceptual data for geometric forms and alphabetical letters, which was a challenge because the system had initially been trained only on natural images. Nevertheless, the system was able to produce images that were recognizably close to the original shape or letter.

Next, the team attempted to decode the brain activity of subjects who were merely imagining the same sets of images, recalling them from memory. The resulting images are not all that clear, especially for natural images, as it can be difficult to recall exactly what the finer details of an image look like. “The brain is less activated,” Kamitani explained, so the visual clues that define an image are less apparent. The team found, however, that for shapes and letters, the deep generator network would produce a discernible image 83 percent of the time.

While such mind-reading technology is still in the early stages of development, the findings offer a fascinating window into a range of future possibilities. For instance, when paired with search algorithms, it might someday be possible to search for a particular image online or on your computer just by recalling that exact same image in your head. It could also be one way for patients with speech disorders to communicate with people around them. Mind-reading machines could also become invaluable for developing brain-machine interfaces (BMIs) that allow users to communicate by merely thinking a thought — an impressive prospect, indeed.