MIT Team Creates Video From Still Photo

CAMBRIDGE, MASS.— Scientists at MIT have used machine learning to create video from a single still shot.

“In our generation experiments, we show that our model can generate scenes with plausible motions,” Carl Vondrick, Hamed Pirsiavash and Antonio Torralba said in a paper to be presented at the Conference on Neural Information Processing Systems in Barcelona next week. “We conducted a psychophysical study where we asked over a hundred people to compare generated videos, and people preferred videos from our full model more often.”

The team started by having an algorithm “watch” 2 million random videos, about two years’ worth of footage, to learn scene dynamics and then use that knowledge to generate video.

“We use a large amount of unlabeled video to train our model. We downloaded over 2 million videos from Flickr by querying for popular Flickr tags as well as querying for common English words,” they said.

These videos were divided into two data sets: one unfiltered, and the other filtered by scene category, using four categories (golf courses, babies, beaches and train stations). The videos were motion-stabilized so that static backgrounds could be more easily distinguished from moving foreground objects.

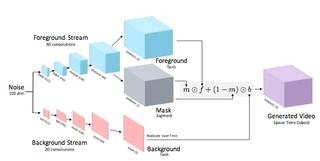

This allowed the researchers to build a two-stream video generation architecture (illustrated above) that produces a “foreground or [a] background model for each pixel location and timestamp,” an approach that mirrors the way video compression codecs “reuse” pixels in static parts of a scene.
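To make the two-stream idea concrete, here is a minimal sketch, assuming the streams are merged by a per-pixel, per-frame mask: where the mask is 1 the moving foreground is shown, and where it is 0 a single still background image, repeated across every frame, shows through. The function name `compose_video` and the random stand-in arrays are hypothetical; the neural networks that would actually produce the foreground, mask and background are omitted.

```python
import numpy as np

def compose_video(foreground, mask, background):
    """Blend a moving foreground with a static background, per pixel and per frame.

    foreground: (T, H, W, C) array, the moving-object stream
    mask:       (T, H, W, 1) array in [0, 1]; 1 means "show the foreground here"
    background: (H, W, C) array, one still image reused for every frame
    """
    # Repeat the static background across all T frames, much as a codec
    # reuses unchanged pixels instead of re-encoding them every frame.
    static = np.broadcast_to(background, foreground.shape)
    # Per-pixel blend: mask * foreground + (1 - mask) * background.
    return mask * foreground + (1.0 - mask) * static

# Hypothetical example at the article's output size: 32 frames of 64x64 RGB.
T, H, W, C = 32, 64, 64, 3
rng = np.random.default_rng(0)
fg = rng.random((T, H, W, C))   # stand-in for the foreground stream's output
bg = rng.random((H, W, C))      # stand-in for the background stream's output
m = np.zeros((T, H, W, 1))
m[:, 20:40, 20:40, :] = 1.0     # hypothetical square region treated as moving

video = compose_video(fg, m, bg)
print(video.shape)  # (32, 64, 64, 3)
```

Because the background is a single image, every pixel outside the masked region stays identical from frame to frame, so only the foreground stream has to model motion.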

The video generator produced 32-frame videos, a little more than one second long, at 64x64 resolution. These were fed to a discriminator network trained to discern “realistic scenes from synthetically generated scenes.” Its feedback in turn pushed the generator toward “plausible” motion, which Motherboard described as “far surpass[ing] previous work in the field.”
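The generator and discriminator described above form an adversarial pair. The sketch below shows the common binary cross-entropy form of that contest, assuming the discriminator outputs a probability that a clip is real; it uses the widely used "non-saturating" generator loss, and the authors' exact training setup may differ. The function names and probability values are illustrative only.

```python
import math

EPS = 1e-12  # guards against log(0)

def discriminator_loss(p_real, p_fake):
    """Binary cross-entropy for the discriminator.

    p_real: discriminator's probabilities that real clips are real
    p_fake: discriminator's probabilities that generated clips are real
    The discriminator wants p_real -> 1 and p_fake -> 0, which lowers this loss.
    """
    real_term = -sum(math.log(p + EPS) for p in p_real) / len(p_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in p_fake) / len(p_fake)
    return real_term + fake_term

def generator_loss(p_fake):
    """Non-saturating generator loss: the generator wants p_fake -> 1."""
    return -sum(math.log(p + EPS) for p in p_fake) / len(p_fake)

# A discriminator that separates real from generated clips has a low loss...
sharp = discriminator_loss([0.9, 0.95], [0.1, 0.05])
# ...while one fooled by convincing generated clips has a high loss.
fooled = discriminator_loss([0.6, 0.5], [0.7, 0.8])
print(sharp < fooled)  # True
```

Training alternates between the two losses: each discriminator update makes it harder to pass off unrealistic motion, and each generator update rewards clips the discriminator scores as real.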

Vondrick, a Ph.D. student at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), wrote the paper with Torralba, an MIT professor, and Pirsiavash, a former CSAIL postdoctoral researcher who is now a professor at the University of Maryland, Baltimore County, according to CSAIL.

See “Generating Videos With Scene Dynamics,” by Carl Vondrick, Hamed Pirsiavash and Antonio Torralba.