What Is a Design Review?

Design review: A usability-inspection method in which (usually) one reviewer examines a design to identify usability problems.

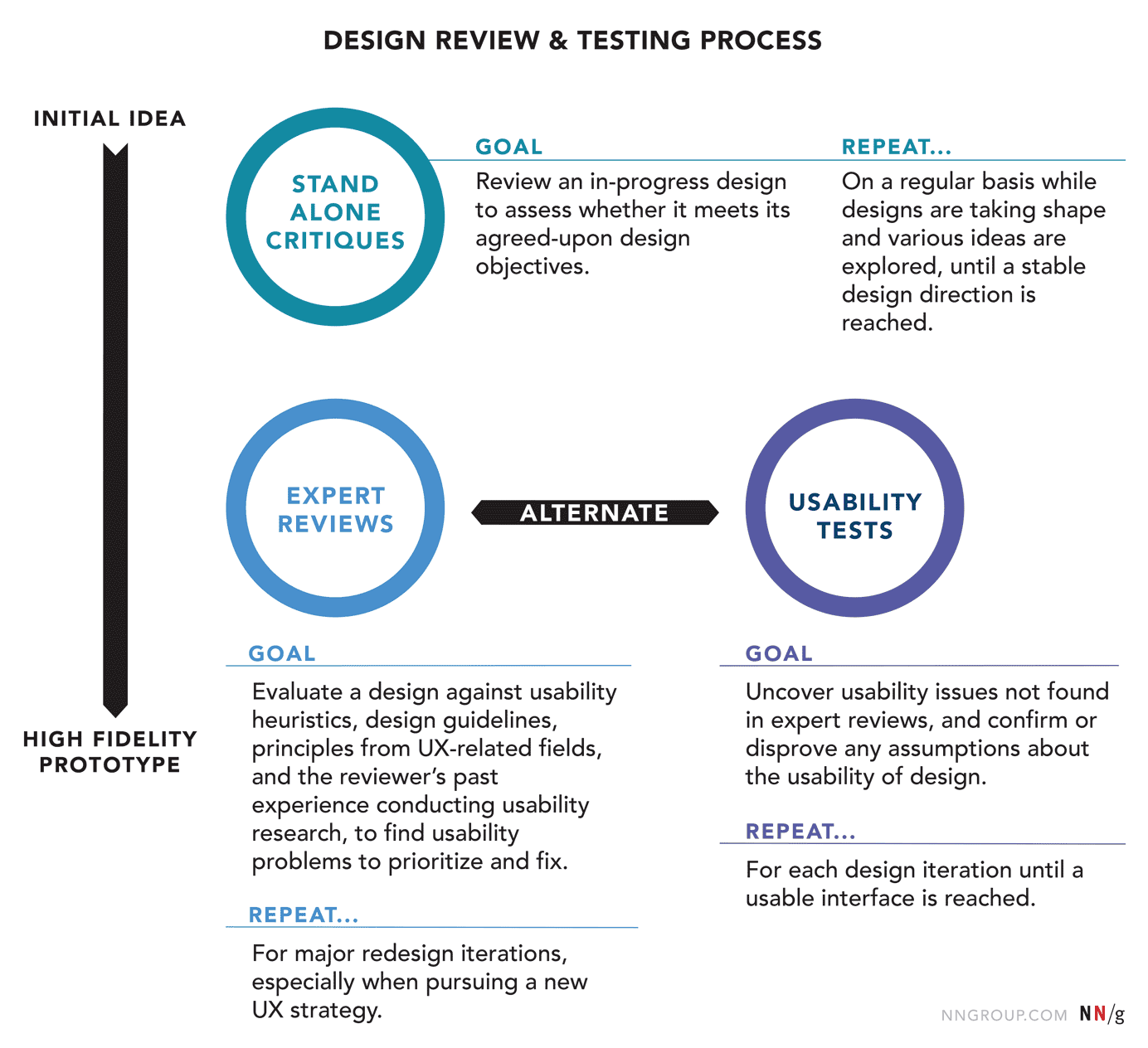

“Design review” is a broad term that encompasses several inspection methods. In each, the depth of inspection varies depending on who is doing the review and on the review’s goals. Common types of design reviews include:

- Heuristic evaluation: a type of design review in which the design is evaluated for compliance with a set of heuristics such as Jakob Nielsen’s 10 Usability Heuristics.

- Standalone design critique: a design review in which an in-progress design is analyzed (usually as a group conversation) to determine whether it meets its objectives and provides a good experience.

- Expert review: a design review in which a UX expert inspects a system (such as a website or application, or a section therein) to check for possible usability issues. The distinction between heuristic evaluations and expert reviews is blurry in many organizations, and it’s okay to think of an expert review as a more general version of a heuristic evaluation.

Design reviews can be conducted at all stages in the design cycle, provided that there is a prototype with a sufficient level of detail. In fact, since these reviews are based on inspection, as opposed to actual use by a real user, one can even review a set of specifications or other more abstract versions of a user interface that could not be tested with participants. Another advantage is that it’s possible to review an isolated segment of a design, such as a single dialog box or an exception-handling workflow. (In contrast, in user testing, you usually want to test broader tasks and avoid taking the study participant directly to the individual feature you’re currently working on.)

In this article we focus on expert reviews.

Expert Reviews

Expert reviews usually expand on heuristic evaluations by assessing the design not only for compliance with heuristics, but also against other known usability guidelines, principles of usability-related fields such as cognitive psychology and human-computer interaction, and the reviewer’s expertise and past experience in the field. The emphasis on the reviewer’s past experience and knowledge of usability principles is why this type of design review is often referred to as an expert review.

A written document is often the deliverable of an expert review, although sometimes the expert’s conclusions may be presented in a meeting instead. Written documents take more time to create and read, but contain detailed information and recommendations, and can serve as a reminder for the reasoning behind certain design changes. These documents can also rank the usability findings according to the problem’s severity or frequency.

Saying that an expert review should be done by a UX expert raises the question of who counts as a UX expert. There are no shortcuts: The only way to become a UX expert is by doing UX research and getting substantial exposure to real user behavior. If you design in a vacuum and never see how the target audience interacts with your design, you don’t build the kind of UX expertise that’s needed to review interfaces. We can’t specify a particular number of years’ experience as a requirement for expert reviewers, because some people gain knowledge faster than others, or have more opportunities to observe users. Let’s just say that you’re not an expert the very day you start your first UX job, and that gathering the depth and breadth of UX knowledge needed for an expert review takes some time.

Get a Fresh Perspective

Design reviews work best when the examiner not only has a deep knowledge of usability best practices and extensive experience conducting usability research, but also was not involved in creating the design to be reviewed. A fresh perspective is more likely to be unbiased and deliver candid feedback: an outsider is not emotionally invested in the design, is oblivious to any internal team politics, and can easily spot glaring issues that may stay hidden from someone who’s been staring at the same design for too long. (Side note: The reason people are bad at proofreading their own writing is that their mind sees what they intended to write, not what actually makes it onto the page! The same is true of our own designs: we understand what the design is intended to do, and so we can’t see clearly whether it truly accomplishes that goal.)

In contrast, when doing usability testing, designers can test their own designs if necessary, because the test participant provides that invaluable outside perspective. (However, to avoid priming or bias in the study results, it’s still better to have an impartial person conduct the test and stick the designer in the observation room, behind a mirror.)

Domain knowledge about the problem that your system attempts to solve can be helpful in understanding the goals and limitations of the system, but is not necessary to conduct a thorough, effective design review. It is more important that the reviewer has expertise in usability and user experience, to be able to identify probable UX issues. In fact, reviewers who are able to draw on usability experience from many different domains are ideal, because they have witnessed a vast variety of designs that work and don’t work in various contexts.

Components of an Expert Review

The core components of a design review are:

- List of usability strengths

To make reviews less “doom and gloom,” and ensure that good design elements are not marred in the redesign process, the review should include a list of strengths and a short explanation for each.

- List of usability problems, mapped to where they occur in the design

For each usability problem, it’s important to have a clear explanation: the heuristic or principle violated should be clearly cited and related to the design, so that any fix will address the underlying issue and the same mistake will be avoided elsewhere as well. Whenever possible, the discussion should also include a link to an article or some other source of additional information, as a reference in case designers or other stakeholders want to read more.

If a problem does not necessarily violate a classic guideline or principle, but instead stems from other usability research (either the reviewer’s experience or another trusted source), the reviewer should explain clearly why the design represents a problem. (For example, “Users who are asked to confirm each choice that they make become habituated to these confirmation screens and respond automatically without paying attention to the text on the screen, which may result in slips.”)

Grounding the usability problem in a violation of existing UX knowledge is essential to elevate the expert review above the opinion wars that are rife in many development projects. A design element should never be designated a usability problem because the reviewer personally doesn’t like it or would be happier using a different design. As always in UX, you are not the user, and that applies to both designer and reviewer. All usability problems noted in the expert review must be accompanied by objective explanations, not subjective criticisms.

- Severity ratings for each usability problem

Including a severity rating for each issue discovered is key to making the findings actionable and helping designers prioritize the redesign work. At Nielsen Norman Group, we often use a simple 3-point severity scale for each problem: High, Medium, or Low.

- Recommendations for fixing each usability problem

Another key element of an actionable usability finding is a clear recommendation for how to address the issue. Often, once the issue is noticed and the underlying reason for it is understood, the fix will be obvious. In other cases, the recommendation may be to investigate further: to pay attention to the design element during upcoming usability studies to determine whether it truly causes any usability issues, or to consider conducting some other type of user research to get a clearer answer.

- Examples of best practices to guide improvements

A real example of a good design can be more illuminating than a thousand words. Whenever possible, support your recommendations with examples of other sites addressing the same issue. Providing multiple examples of sites solving the same issue prevents the conclusion that there is any single best way to design the solution.
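For teams that track findings in a spreadsheet or script rather than a prose document, the components above could be captured in a small record structure. The following is only a minimal sketch: the field names, the numeric values behind the High/Medium/Low scale, and the sample findings are all hypothetical and are not a prescribed report format.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # 3-point scale from the text; the numeric values are an assumption
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Finding:
    location: str        # where in the design the problem occurs
    problem: str         # objective description of the issue
    principle: str       # heuristic or principle that is violated
    severity: Severity
    recommendation: str  # suggested fix, or a note to investigate further


def prioritize(findings):
    """Return findings ordered from most to least severe.

    sorted() is stable, so findings with equal severity keep their input order.
    """
    return sorted(findings, key=lambda f: -f.severity)


# Hypothetical sample findings, for illustration only
findings = [
    Finding("Settings page", "Labels mix title case and sentence case",
            "Consistency and standards", Severity.LOW,
            "Pick one capitalization style and apply it throughout"),
    Finding("Checkout", "Every selection triggers a confirmation dialog",
            "Error prevention", Severity.HIGH,
            "Confirm only destructive or irreversible actions"),
    Finding("Search results", "No indication that filters are active",
            "Visibility of system status", Severity.MEDIUM,
            "Show applied filters above the result list"),
]

for f in prioritize(findings):
    print(f"[{f.severity.name}] {f.location}: {f.problem} -> {f.recommendation}")
```

Sorting by severity is just one way to order the list; a team might equally group findings by screen or by the principle violated.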

Expert Reviews vs. Usability Testing

The types of usability issues found in an expert review differ from those that would be uncovered during a usability study, which is why combining these methods results in the best overall design. An expert review can identify minor issues that would be difficult to observe or measure in a small qualitative study: inconsistencies in font usage throughout the interface, a color that doesn’t follow brand guidelines, or the use of center-aligned text instead of left-aligned. Larger issues that go against best design practices would also get caught in the expert review, so they can be addressed before any time is spent with real participants.

On the other hand, usability testing may uncover issues that an expert may not have thought of, for example because the real audience has very specific knowledge or needs.

An expert review works well as part of the planning process for an upcoming usability study on that design. Evaluating the design, especially if it reflects a completely new strategy for your user experience, can identify areas of concern to focus on in the study and also catch “obvious” issues that should be fixed before usability testing. Solving those issues gets them out of the way, so the study can uncover unpredictable findings.

Luckily, it’s not a question of A or B here. We can do both A and B: subject the same project to as many rounds of usability evaluation as time allows, including some rounds of expert reviews and some rounds of usability testing.

When to Do an Expert Review

Expert reviews can be done at any stage in the design cycle if the team has access to a UX expert. However, because the expert’s time can be quite precious, in many organizations expert reviews are used before a major redesign project, to identify significant strengths and weaknesses of the current live design. Even when no major redesign is planned, an expert review should be conducted every 2 to 5 years, outside of the main design lifecycle, as a step back from current design concerns and for assurance that the design still serves users’ needs appropriately, in a usable way.

Sometimes, the expert review is considered the final milestone of a project phase, where the design (or completely developed project) is measured against its original objectives and, if successful, triggers the next phase: development or launch. This type of process works only if there were previous iterations of design critiques and prototype usability testing. Otherwise, saving the expert review until the end of the process causes a lot of rework if major issues are identified!

For this reason, we recommend conducting some type of design review iteratively during the creative phase, when the designs are still flexible enough to be easily modified. Even though you may not be able to afford a full expert review on every design iteration, you may still be able to alternate design critiques with user testing and occasional expert reviews.

Conclusion

Expert reviews are a valuable method for uncovering usability issues, complementary to usability testing. They are often performed by a person with expertise in usability and principles of human behavior, and they result in a list of usability issues and strengths, together with recommendations for fixing those issues.
