Future of AI Part 5: The Cutting Edge of AI

By Imtiaz Adam, @deeplearn007, MSc Computer Science, MBA, Sloan Fellow in Strategy. Director Machine Learning, 5G and Digital Strategy DLS.

This article is the final one in the series on the future of AI. The focus of the series has been on how AI technology will impact our lives over the course of the 2020s and beyond. Digital Transformation, Industry 4.0 and 5G have been the key themes.

Part 1 focussed on the period to 2025;

Part 2 placed greater emphasis on the period after 2025;

Part 3 focused mostly on how AI and 5G will transform the healthcare sector and was kindly published by KDnuggets;

Part 4 considered the potential of AI and 5G to enable sustainable economic growth, the future of work and job creation;

Part 5 will focus on the real impact that AI is likely to make, the types of AI along with a discussion about Artificial General Intelligence (AGI) and the barriers to achieving it. The final section will summarise the main cutting edge techniques of AI today along with a short glossary of terms used within AI. I am an optimist and strongly believe that AI will make a transformational and positive contribution to the world over the course of this decade.

For the purposes of this article I will refer to AI as including Machine Learning and Deep Learning within the term.

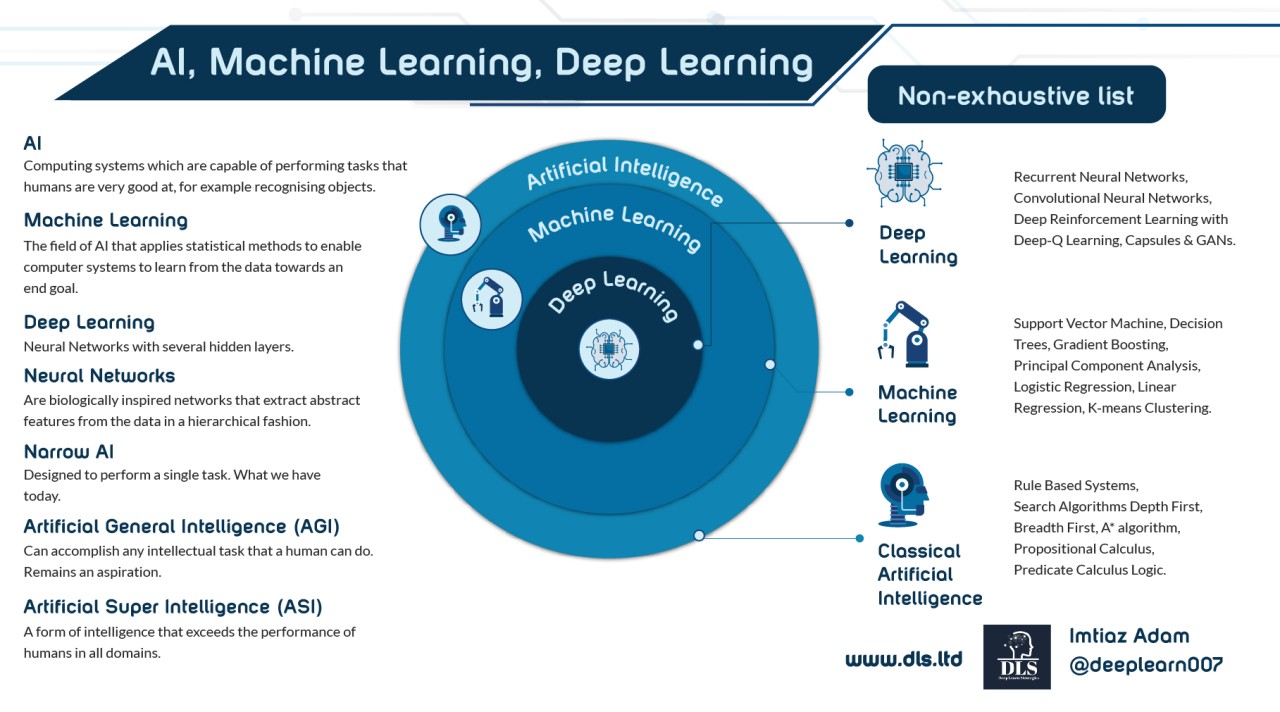

Source for image below Geospatial World What is the difference between AI, Machine Learning and Deep Learning?

Why do we need AI? Data and Digital Transformation

The growth in big data results in both challenges and opportunities for organisations. The opportunities include an enhanced understanding of the customer and personalised marketing, in turn resulting in efficiency gains and reduced wastage, as well as improvements in healthcare and financial services offerings. However, the volume of data is truly vast, and transmitting it over the networks is a challenge. In order for organisations to make sense of the data and to reduce latency in the next generation of applications, computing needs to be situated closer to the user, at what is referred to as the edge (of the network). The advantage of Edge Computing is that it will allow firms to undertake near real-time analytics, with improved user experiences and lower costs, as the volume of data transmitted back and forth between the cloud and the device is reduced. In turn, AI (for example Deep Learning on connected cameras, drones or autonomous vehicles) will increasingly be inferencing on the edge, meaning that AI will increasingly be embedded within the devices around us. AI will be at the heart of the 5G revolution and key to enabling the edge to deliver enhanced customer experiences as well as an intelligent Internet of Things in Industry 4.0.

The convergence of AI, 5G and Edge Computing, alongside Augmented Reality and Mixed Reality, will enable exciting new innovations in customer experience, personalisation of services and cleaner economic development in the 2020s. Beyond that, 6G combined with AI will result in the Internet of Everything (IoE), connecting anything that can be connected.

Source for Figure 1 below Giordani et al. Towards 6G Networks: Use Cases and Technologies

There are those (see next section) who believe that 5G will in fact enable many of the disruptive technologies that Giordani et al. show under the 6G arrow in the image above, and that 6G will continue the technology revolution that 5G enables. However, I believe that a timeline of 2025 and beyond is more probable for certain technologies, such as more advanced autonomous vehicles (with levels 4 or 5 of autonomy), to make an impact and scale, as this will require sufficient scaling of 5G networks along with appropriate regulatory and legal frameworks. Whilst manufacturers hope to have level 4 vehicles on the road by 2022, we have seen that they have tended to be overoptimistic.

Augmented Reality (AR) and Mixed Reality (MR) will make a bigger impact from 2022 onwards as telecoms companies roll out dedicated 5G networks. AI will be the core technology working alongside the other technologies that 5G will enable. For a good overview of how AI works alongside AR, see the article by Daniel Rothman, "Enhancing AR with Machine Learning". Daniel Rothman states that "Apple’s Measure is one of my favorite AR apps. It’s a simple and reliable way of taking physical measurements with your smartphone and it demonstrates how robust AR tracking has become."

Source for Image below Daniel Rothman "Enhancing AR with Machine Learning". Apple’s Measure in action. The results are accurate within ±1 cm!

Digital Transformation in the 2020s

Before we reach the era of 6G around 2030, there will be huge opportunities for the deployment of Machine Learning and Deep Learning at scale across the network and into devices. Loughlin et al., in "The Disruptions of 5G on Data-driven Technologies and Applications", emphasise the role and opportunities for Machine Learning and Deep Learning in the 5G era. This is also the logic behind my view that we are not on the verge of a new AI Winter: the rollout of 5G networks is about to generate vast amounts of data.

Source for image below: Loughlin et al. 5G Use Cases "The Disruptions of 5G on Data-driven Technologies and Applications"

Loughlin et al. state "The increased number of interconnected devices and the accompanying sensors will generate a tremendous amount of data on a daily basis. At the same time, there is a surging demand for personalized services on mobile devices to enhance user experience. For example, companies may want to provide real-time personalized recommendations to users. The unprecedented amount of data residing in the edge devices is the key to build personalized Machine/Deep Learning models for enhanced user experience."

An example of the positive impact of AI for humanity is provided by Fergus Walsh who reported that "AI 'outperforms' doctors diagnosing breast cancer" with the following statement "Artificial Intelligence is more accurate than doctors in diagnosing breast cancer from mammograms, a study in the journal Nature suggests."

"An international team, including researchers from Google Health and Imperial College London, designed and trained a computer model on X-ray images from nearly 29,000 women."

"The algorithm outperformed six radiologists in reading mammograms."

"AI was still as good as two doctors working together."

"Unlike humans, AI is tireless. Experts say it could improve detection."

In spite of the positive impacts of AI in areas such as Healthcare and the potential for AI in areas of Fintech to enable financial inclusion, the concept of AI generates fear or anxiety for many.

The popular perception of AI in the public domain and mass media is a version of AI popularised in Hollywood movies and novels. This is a form of AI that can match (or in some cases even exceed) human capabilities.

Spoiler alert: for anyone who has not seen Terminator, Ex Machina, I, Robot or 2001: A Space Odyssey, I suggest that you skip the next section on AI in the movies.

AI in the movies and resulting popular perception

In the Terminator movie Skynet emerges as an Artificial General Intelligence that "...began a nuclear war which destroyed most of the human population, and initiated a program of genocide against the survivors. Skynet used its resources to gather a slave labor force from surviving humans."

In Ex Machina, a female humanoid robot named Ava is assessed to test whether she can successfully pass the Turing test, and in the process she outmanoeuvres the humans who are interrogating her by using a combination of aggression and cunning intelligence.

In I, Robot, the AI system VIKI (Virtual Interactive Kinetic Intelligence) is a villain that intends to take control of humans for their own benefit.

And in 2001: A Space Odyssey, HAL 9000 (Heuristically Programmed Algorithmic Computer) is a sentient Artificial General Intelligence that was listed as the 13th-greatest film villain in the AFI's 100 Years...100 Heroes & Villains.

Some forms of advanced AI appear more heroic or friendly, for example KITT in Knight Rider, an AI electronic computer module in the body of a highly advanced, very mobile, robotic automobile, and J.A.R.V.I.S. (Just A Rather Very Intelligent System), featured in the Marvel Cinematic Universe. In its current state, Voice AI technology does not come close to the likes of KITT or J.A.R.V.I.S. Whilst there are incremental improvements over time in the likes of Alexa, voice technology and chatbots still cannot give the customer a human-level conversation. The fact remains that we have not yet passed the Turing test.

The Turing Test

Wikipedia explains "The Turing test, developed by Alan Turing in 1950, is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. Turing proposed that a human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses."

"By extrapolating an exponential growth of technology over several decades, futurist Ray Kurzweil predicted that Turing test-capable computers would be manufactured in the near future. In 1990, he set the year around 2020. By 2005, he had revised his estimate to 2029."

There have been claims that the Turing test was passed in 2014 at an event arranged by the University of Reading, but researchers responded with criticism. For example, "It's nonsense," Prof Stevan Harnad told the Guardian newspaper. "We have not passed the Turing test. We are not even close."

Hugh Loebner, creator of another Turing Test competition, has also criticised the University of Reading's experiment for only lasting five minutes.

Paula Adwin, in "What’s the Turing Test and Which AI Passes It?", provides an intuitive explanation of the Turing test and states that "While there have been two well-known computer programs or chatbots, claiming to have passed the Turing Test, the reality is that no AI has been able to pass it since it was introduced."

"Turing, himself, thought that by the year 2000 computer systems would be able to pass the test with flying colors. Looks like technology might be lagging a little."

In 2018 Alphabet's CEO claimed that the Turing test had been passed, but Artem Opperham, in an article entitled "Did Google Duplex beat the Turing Test? Yes and No", gives two reasons why it did not: first, the person knew that it was a machine; second, and more importantly, the subject matter was narrow, whereas to truly pass the Turing test a machine should be able to answer any question.

"There is no way possible that we will not have a general conversational AI in the next 10 years that can speak to any human in any language about every possible topic."

AI Replacing Humans

Overall, AI is often viewed with concern. For example, the WEF predicted in 2016 that five million jobs would be lost due to AI by 2020, and forecast that "Employment outlook is net positive in only five of the 15 countries covered."

The WEF later published a report predicting that AI will create 58 million jobs by 2022. There are those within the AI community, myself included, who believe that the type of AI we have today can be used to help generate new business and employment opportunities by augmenting humans and making sense of the flood of data that we are creating with digital technology.

For example, Daniel Thomas, in an article entitled "Automation is not the future, human augmentation is", quotes Paul Reader, CEO of Mind Foundry, an AI startup spun out of the University of Oxford’s Machine Learning Research Group, as stating “Throughout history innovations have come along like electricity and steam, and they do displace jobs. But it is about how we shape those innovations. Any tech can be used for good or for evil, and we want to use it for good.”

Source for Image Below Accenture, Daniel Thomas, Raconteur Automation is not the future, human augmentation is

Sophia the Robot (image below) is an impressive piece of robotics engineering, but it is not a form of advanced Artificial General Intelligence (see below for details of this type of AI) that can match human intelligence. The article from Jaden Urbi entitled "The complicated truth about Sophia the robot — an almost human robot or a PR stunt" discusses this controversy.

In a Facebook post, leading AI researcher Yann LeCun said Hanson’s staff members were human puppeteers who are deliberately deceiving the public.

"In the grand scheme of things, a sentient being, or AGI, is the goal of some developers. But nobody is there yet." This article will explain what some of the barriers are and what AI is likely to enable us to do in the current decade (2020-2029).

Image above: Sophia the Robot, Hanson Robotics. Editorial credit: Anton Gvozdikov / Shutterstock.com

It is understandable that the general public have anxieties about AI, given the lack of understanding of the technology in the wider domain and perhaps the need for more of the Data Science community to explain the subject in a manner that the wider public, including many journalists and some of the social media influencer community, can understand. I hope that this article will play a role in helping the business community and the wider public to understand the complexities of AI, in particular the challenges of Artificial General Intelligence, and how we can take advantage of the opportunities that 5G will provide to use AI to enhance our economies and everyday lives.

The Reality of AI in the 2020s

The 2020s are going to be a decade in which AI and 5G technology, working alongside each other, will result in a world where the physical and digital connect in the form of intelligent connected devices, with AI inferencing on the device itself. This intelligence will enable substantial advances in healthcare, financial services (banking and insurance), retail, marketing and manufacturing, as well as environmental benefits.

Whilst there will be continued and exciting research advancements in the field of AI, it is highly unlikely that Artificial General Intelligence (see below for definitions) will arrive in the 2020s. Instead, it will be a period in which we use AI technology alongside 5G as the key to the Digital Transformation of every sector of the economy, resulting in mass personalisation at scale for healthcare, retail, financial services and other sectors. The Industry 4.0 revolution that AI and 5G will usher in across the 2020s will result in cleaner economic development, with the creation of new jobs, economic growth and exciting new business opportunities.

Use case examples

CB Insights published an article entitled "What Is 5G? Understanding The Next-Gen Wireless System Set To Enable Our Connected Future", which explained that the widespread adoption of 5G would have transformative effects on industry for three main reasons:

- Reduced latency allowing for larger data streams to be transmitted more rapidly;

- Enhanced reliability allowing for improved data transmission;

- Enhanced flexibility of 5G over Wi-Fi, resulting in support for a greater range of devices, sensors, and wearables.

Source for image below: CB Insights, What Is 5G? Understanding The Next-Gen Wireless System Set To Enable Our Connected Future

Healthcare

Alan Conboy in "The Future of Healthcare Starts With Edge Computing" states that "In the US, edge computing deployment could propel the reach and availability of cancer screening centers and ‘pop-up’ clinics. It also will give doctors and healthcare professionals access to more immediate and actionable patient monitoring using IoT connectivity within cardiac pacemakers, defibrillators and even sensors in insulin pumps."

Fintech

5G alongside AI will enable seamless and frictionless customer experiences in retail, payments and banking.

Jamie Carter, author of "Why FinTech needs 5G", states that what FinTech "will certainly benefit from is 5G's primary use-case; connecting more devices at low power, at low cost, and with high reliability. This should lead to a surge in the number of connected devices, from smartphones, wearables and home appliances to sensors on all kinds of objects, public infrastructure, and even clothes."

"This is the Internet of Things (IoT), and 5G is expected to supercharge its development."

What is Artificial Intelligence (AI)

AI deals with the area of developing computing systems which are capable of performing tasks that humans are very good at, for example recognising objects, recognising and making sense of speech, and decision making in a constrained environment.

Classical Artificial Intelligence: algorithms and approaches including rules-based systems, uninformed search algorithms (breadth-first, depth-first and uniform-cost search) and informed search such as the A and A* algorithms. These laid a strong foundation for the more advanced approaches of today that are better suited to large search spaces and big data sets. Classical AI also drew on logic, involving propositional and predicate calculus. Whilst such approaches are suitable for deterministic scenarios, the problems encountered in the real world are often better suited to probabilistic approaches. Classical AI went through two AI winters: the first in the 1970s, following the publication of the Lighthill report and DARPA's funding cutbacks, and the second in the late 1980s, with a loss of confidence in expert systems.
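To make the difference between uninformed and informed search concrete, here is a minimal Python sketch of uniform-cost search and A* (the open grid, unit step costs, start and goal cells, and the Manhattan-distance heuristic are illustrative assumptions, not taken from the sources above). Both use the same priority queue; uniform-cost search orders nodes by path cost g alone, while A* orders them by g + h, where h is an admissible estimate of the remaining cost.

```python
import heapq

SIZE = 11  # hypothetical open 11 x 11 grid; every move costs 1


def neighbours(cell):
    """4-connected moves that stay inside the grid."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < SIZE and 0 <= nc < SIZE:
            yield (nr, nc)


def search(start, goal, heuristic):
    """Best-first search ordered by g + h.

    With heuristic = 0 this is uniform-cost search; with an admissible
    heuristic it is A*. Returns (path cost, number of nodes expanded).
    """
    frontier = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    best_g = {start: 0}
    expanded = 0
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if g > best_g[cell]:
            continue  # a cheaper route to this cell was already found
        expanded += 1
        if cell == goal:
            return g, expanded
        for nxt in neighbours(cell):
            new_g = g + 1
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                heapq.heappush(frontier, (new_g + heuristic(nxt), new_g, nxt))
    return None, expanded


start, goal = (5, 5), (5, 10)


def manhattan(cell):
    """Admissible heuristic for unit-cost 4-connected moves."""
    return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])


ucs_cost, ucs_expanded = search(start, goal, lambda cell: 0)
astar_cost, astar_expanded = search(start, goal, manhattan)
print(f"uniform-cost: cost={ucs_cost}, expanded={ucs_expanded}")
print(f"A*:           cost={astar_cost}, expanded={astar_expanded}")
```

Under these assumptions both searches return the same optimal path cost, but A* expands far fewer nodes because the heuristic steers it towards the goal, which is why informed search copes better with larger search spaces.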

Source for image below Sebastian Schuchmann History of the first AI Winter (timeline for both winters shown below)

I have set out above why I strongly believe that we are not on the verge of another AI winter: demand for Machine Learning and Deep Learning will keep growing as the Vs of big data (Velocity, Volume, Value, Variety and Veracity) increase, in particular Volume, with Edge Computing and the IoT set for rapid growth in the 2020s.

An Overview of AI: Types of AI

Narrow AI (or Artificial Narrow Intelligence, ANI): the field of AI where the machine is designed to perform a single task and the machine gets very good at performing that particular task. However, once the machine is trained, it does not generalise to unseen domains. This is the form of AI that we have today, for example Google Translate.

Examples of ANI include the Machine Learning and Deep Learning algorithms in use today.

Machine Learning is defined as the field of AI that applies statistical methods to enable computer systems to learn from the data towards an end goal. The term was introduced by Arthur Samuel in 1959. Deep Learning refers to the field of Neural Networks with several hidden layers. Such a Neural Network is often referred to as a Deep Neural Network.
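As a minimal illustration of a computer system "learning from data" with a statistical method, the sketch below fits a straight line by ordinary least squares with NumPy. The data are synthetic and purely hypothetical (generated from y = 3x + 1); real Machine Learning models have far more parameters, but the principle of choosing parameters that minimise an error measure on training data, then using them on new inputs, is the same.

```python
import numpy as np

# Hypothetical training data generated from y = 3x + 1 (no noise, for clarity).
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 3.0 * x + 1.0

# Design matrix with a column of ones so the model can learn an intercept.
X = np.column_stack([x, np.ones_like(x)])

# Least squares: pick the parameters that minimise the sum of squared errors.
(slope, intercept), *_ = np.linalg.lstsq(X, y, rcond=None)

# The learned model can now make a prediction for an input it has not seen.
prediction = slope * 10.0 + intercept
print(round(slope, 3), round(intercept, 3), round(prediction, 3))
```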

Artificial General Intelligence (AGI): a form of AI that can accomplish any intellectual task that a human being can do. It is often envisaged as being conscious and making decisions in a similar way to humans. AGI remains an aspiration at this moment in time, with forecasts of its arrival ranging from 2030 to 2060, or even never. It may arrive within the next 20 or so years, but there are challenges relating to hardware, the energy consumption required by today’s powerful machines, and the need to solve catastrophic forgetting, which affects even the most advanced Deep Learning algorithms of today.

Image Source below: Vasant Kumar What is Artificial Intelligence?

Note that there are those who believe that AGI will be conscious: for example, Simon Stringer implies that AGI will be conscious, and Peter Voss believes that AGI will have self-awareness but may perceive the world in a different manner from humans.

Artificial Super Intelligence (ASI): a form of intelligence that exceeds the performance of humans in all domains (as defined by Nick Bostrom). This includes aspects such as general wisdom, problem solving and creativity.

The Singularity is defined in Wikipedia with reference to the work of Ray Kurzweil, "The Singularity Is Near: When Humans Transcend Biology", in which "Kurzweil describes his law of accelerating returns which predicts an exponential increase in technologies like computers, genetics, nanotechnology, robotics and Artificial Intelligence. Once the Singularity has been reached, Kurzweil says that machine intelligence will be infinitely more powerful than all human intelligence combined. Afterwards he predicts intelligence will radiate outward from the planet until it saturates the universe. The Singularity is also the point at which machines intelligence and humans would merge."

AI in the 2020s & The Challenges for Achieving AGI

The next decade, 2020-2029, is going to be about narrow AI, albeit one in which narrow AI makes its way into the devices all around us. Research into AGI will continue and we will make continuous incremental advances through the decade.

Views of leading experts in the field on the arrival of AGI vary. It may be that in a highly optimistic scenario AGI arrives in 2030, but more likely it will take a few decades.

- Ray Kurzweil, Google’s director of engineering predicts the arrival of the Singularity sometime before 2045;

- Louis Rosenberg believes AGI could arrive as early as 2030;

- Jürgen Schmidhuber, pioneering researcher in AI and father of the LSTM, believes that AGI will arrive around 2050.

In May 2017, 352 AI experts who published at the 2015 NIPS and ICML conferences were surveyed. Based on the survey results, the experts estimate that there is a 50% chance that AGI will occur by 2060.

Dylan Azulay authored "When Will We Reach the Singularity? – A Timeline Consensus from AI Researchers", which gathered responses from 32 PhD researchers in the AI field who were asked for their estimates of the arrival of the Singularity.

The following observation summarises the divergence of opinion on the subject "It’s interesting to note that our number one response, 2036-2060, was followed by likely never as the second most popular response."

The forecasts from even the leading experts remain estimates. In order to attain AGI, a great many barriers need to be overcome, which will take many research breakthroughs.

Deep Learning Neural Networks generated excitement in the 2010s, in particular from 2012 onwards with the success of AlexNet, with Convolutional Neural Networks (CNNs) outperforming radiologists in diagnosing diseases from medical images, and with AlphaGo, which combined Deep Reinforcement Learning with Monte Carlo Tree Search, beating world Go champion Lee Sedol.

Source Image below BBC: AI Google's AlphaGo beats Go master Lee Sedol

A core fundamental of Deep Neural Networks is the Backpropagation Algorithm. The method entails fine-tuning the weights of a Neural Network with regard to the error obtained in the previous iteration (a complete pass over the training data is known as an epoch). Effective training of the Neural Network results in weights that reduce the error rate, in turn resulting in a more reliable model that generalises better.

Backpropagation is short for "backward propagation of errors". It is a standard method of training Artificial Neural Networks. The method calculates the gradient of a loss function with respect to all the weights in the network, which gradient descent then uses to update those weights.
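The sketch below shows the idea in NumPy for a tiny network with one hidden layer trained on the XOR problem. The architecture, learning rate, number of steps and function names are illustrative assumptions, not taken from the sources cited here. The backward pass applies the chain rule layer by layer, from the output back towards the input; its result is checked against a finite-difference estimate of the same gradient before being used for plain gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: XOR, which a model without a hidden layer cannot fit exactly.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

# One hidden layer of 8 tanh units (an illustrative choice).
params = {
    "W1": rng.normal(0.0, 1.0, size=(2, 8)), "b1": np.zeros(8),
    "W2": rng.normal(0.0, 1.0, size=(8, 1)), "b2": np.zeros(1),
}


def forward(p, X):
    h = np.tanh(X @ p["W1"] + p["b1"])  # hidden layer activations
    out = h @ p["W2"] + p["b2"]          # linear output layer
    return h, out


def loss(p, X, y):
    _, out = forward(p, X)
    return float(np.mean((out - y) ** 2))  # mean squared error


def backprop(p, X, y):
    """Gradient of the loss with respect to every weight, via the chain rule."""
    h, out = forward(p, X)
    d_out = 2.0 * (out - y) / len(X)   # dL/d(out)
    grads = {"W2": h.T @ d_out, "b2": d_out.sum(axis=0)}
    d_h = d_out @ p["W2"].T            # error propagated back to the hidden layer
    d_pre = d_h * (1.0 - h ** 2)       # through tanh, since tanh'(z) = 1 - tanh(z)^2
    grads["W1"] = X.T @ d_pre
    grads["b1"] = d_pre.sum(axis=0)
    return grads


def numerical_grad(p, X, y, name, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    g = np.zeros_like(p[name])
    for idx in np.ndindex(p[name].shape):
        original = p[name][idx]
        p[name][idx] = original + eps
        up = loss(p, X, y)
        p[name][idx] = original - eps
        down = loss(p, X, y)
        p[name][idx] = original
        g[idx] = (up - down) / (2 * eps)
    return g


analytic = backprop(params, X, y)
max_err = max(float(np.max(np.abs(analytic[k] - numerical_grad(params, X, y, k)))) for k in params)

# Gradient descent: repeatedly nudge each weight against its gradient.
initial_loss = loss(params, X, y)
for _ in range(3000):
    grads = backprop(params, X, y)
    for k in params:
        params[k] -= 0.05 * grads[k]
final_loss = loss(params, X, y)
print(f"max |backprop - numerical| = {max_err:.2e}")
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

The finite-difference check is far too slow for real networks, which is precisely why backpropagation matters: it obtains every gradient at roughly the cost of one extra pass through the network.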

See the paper authored by Rumelhart, Hinton and Williams entitled "Learning representations by back-propagating errors" for more details on Backpropagation.

Source for Image Below: Guru 99, Back Propagation Neural Network: Explained With Simple Example

Backpropagation lies at the heart of Deep Neural Networks.

For those who want a deeper understanding of the process with worked examples I recommend taking a look at an article by Ritong Yen "A Step-by-Step Implementation of Gradient Descent and Backpropagation."

However, even the most powerful Deep Neural Networks that we have today face a challenge in true generalisation (for the sake of simplicity, consider this as multitasking): when a network is trained on a new task, it tends to forget how to successfully perform the task that it was previously taught. This is known as catastrophic forgetting (or catastrophic memory loss).

Researchers have attempted to overcome this problem. For example, Thomas Macauley reported on a Telefónica research team in Barcelona whose approach consists of two separate parameters: one compacts the information that the Neural Network requires into the fewest neurons possible without compromising its accuracy, whilst the second protects the units that were essential to complete past tasks. However, the article notes that the "Telefónica system alone will not cure catastrophic forgetting." The team leader, Sera, is reported to think that further compression is possible, but believes that the ultimate solution would be an entirely new method of remembering that no longer relies on Backpropagation.

"I would say that the key thing would be to find a training algorithm that's maybe more biologically inspired and that is not based on erasing past information," he says. "But that is a huge thing."
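Catastrophic forgetting, and the idea of protecting the weights that mattered for an earlier task, can be illustrated with a deliberately tiny example. The sketch below is not the Telefónica method: it swaps in an Elastic Weight Consolidation (EWC) style quadratic penalty, a different and widely cited technique (Kirkpatrick et al., 2017), applied to a hypothetical two-weight linear model. The tasks, penalty strength and learning rate are illustrative assumptions. Training on task B after task A without protection moves the weight that task A relies on; with the penalty, weighted by a diagonal importance estimate for task A, task B is learned through the other weight instead.

```python
import numpy as np

# Two hypothetical toy tasks for a linear model y_hat = X @ w with two weights.
X_a = np.array([[1.0, 0.0], [2.0, 0.0], [-1.0, 0.0]])
y_a = 2.0 * X_a[:, 0]            # task A is solved when w[0] == 2
X_b = np.array([[1.0, 1.0], [2.0, 2.0], [-1.0, -1.0]])
y_b = -1.0 * X_b[:, 0]           # task B is solved when w[0] + w[1] == -1


def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))


def train(w, X, y, steps=2000, lr=0.02, anchor=None, importance=None, lam=0.0):
    """Gradient descent on MSE, optionally with an EWC-style quadratic penalty."""
    w = w.copy()
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        if anchor is not None:
            # Gradient of (lam / 2) * sum(importance * (w - anchor) ** 2)
            grad = grad + lam * importance * (w - anchor)
        w -= lr * grad
    return w


w_a = train(np.zeros(2), X_a, y_a)

# Sequential training without protection: task B overwrites the weight task A needs.
w_plain = train(w_a, X_b, y_b)

# Diagonal importance for task A: for linear least squares this is proportional
# to the mean squared input per weight (a diagonal Fisher approximation).
importance_a = np.mean(X_a ** 2, axis=0)
w_ewc = train(w_a, X_b, y_b, anchor=w_a, importance=importance_a, lam=10.0)

for name, w in [("after A", w_a), ("plain A->B", w_plain), ("penalised A->B", w_ewc)]:
    print(f"{name:15s} w={np.round(w, 3)}  loss A={mse(w, X_a, y_a):.4f}  loss B={mse(w, X_b, y_b):.4f}")
```

In this toy case both tasks can be solved at once because the model has a spare direction in weight space; in real networks the trade-off is rarely this clean, which is consistent with the view quoted above that such techniques alone will not cure catastrophic forgetting.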

Moving away from Backpropagation, Towards Biology & Understanding The Human Brain

Dr Geoffrey Hinton has suggested that the research community should ditch Backpropagation and focus on unsupervised learning instead: “I don’t think [Backpropagation] is how the brain works. We clearly don’t need all the labeled data.”

A good overview of the application and challenges of Backpropagation is provided by Carlos Pervez in "Why we should be Deeply Suspicious of BackPropagation".

Interesting research from Bellec et al. entitled "Biologically inspired alternatives to Backpropagation through time for learning in Recurrent Neural Nets" argued that "The gold standard for learning in Recurrent Neural Networks in Machine Learning is Backpropagation through time (BPTT), which implements stochastic gradient descent with regard to a given loss function. But BPTT is unrealistic from a biological perspective, since it requires a transmission of error signals backwards in time and in space, i.e., from post- to presynaptic neurons. We show that an online merging of locally available information during a computation with suitable top-down learning signals in real-time provides highly capable approximations to BPTT"

According to an article by Synced, "Does Deep Learning Still Need Backpropagation?", researchers from the Victoria University of Wellington School of Engineering and Computer Science have introduced the HSIC (Hilbert-Schmidt Independence Criterion) bottleneck as an alternative to Backpropagation for finding good representations.

The research team also conducted experiments on the MNIST, FashionMNIST and CIFAR-10 datasets for classic classification problems. Some of the results are shown below:

The paper by Ma et al. is entitled "The HSIC Bottleneck: Deep Learning Without Back-Propagation".
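HSIC itself is a kernel-based measure of statistical dependence between two sets of variables (Gretton et al., 2005). Roughly speaking, the HSIC bottleneck trains each hidden representation Z to have low HSIC with the input X and high HSIC with the labels Y, i.e. to minimise HSIC(Z, X) - β·HSIC(Z, Y). The minimal NumPy sketch below only computes the biased empirical estimator, tr(KHLH)/(n-1)^2 with Gaussian kernels, to show that it is larger for dependent variables than for independent ones; the kernel width, sample size and data are illustrative assumptions, and the paper's exact formulation (kernel choices, normalisation) may differ.

```python
import numpy as np


def gaussian_gram(Z, sigma=1.0):
    """Gram matrix K[i, j] = exp(-||z_i - z_j||^2 / (2 sigma^2))."""
    sq = np.sum(Z ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * sigma ** 2))


def hsic(A, B, sigma=1.0):
    """Biased empirical HSIC: tr(K H L H) / (n - 1)^2."""
    n = A.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n  # centring matrix
    K = gaussian_gram(A, sigma)
    L = gaussian_gram(B, sigma)
    return float(np.trace(K @ H @ L @ H)) / (n - 1) ** 2


rng = np.random.default_rng(0)
x = rng.normal(size=(200, 1))
dependent = np.sin(2.0 * x) + 0.1 * rng.normal(size=(200, 1))  # nonlinear function of x
independent = rng.normal(size=(200, 1))                         # unrelated to x

print(f"HSIC(x, sin(2x) + noise) = {hsic(x, dependent):.4f}")
print(f"HSIC(x, independent)     = {hsic(x, independent):.4f}")
```

Because HSIC is computed from pairwise kernel values, it can be evaluated layer by layer without propagating error signals backwards through the whole network, which is part of the appeal the authors claim for the method.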

Ram Sagar, in "Is Deep Learning without Backpropagation possible", explains that:

The authors claim in their paper that this method:

- Facilitates parallel processing and requires significantly fewer operations;

- Does not suffer from exploding or vanishing gradients;

- Is biologically more plausible than backpropagation as there is no requirement for symmetric feedback;

- Provides a performance on the MNIST/FashionMNIST/CIFAR10 classification comparable to backpropagation;

- Appending a single layer trained with SGD (without backpropagation) results in state-of-the-art performance.

Ram Sagar observes that "The successful demonstration of HSIC as a method is an indication of the growing research in exploration of Deep Learning fundamentals from an information theoretical perspective."

HSIC represents a fascinating research initiative as an alternative to Backpropagation. However, it will require review by others, with testing and validation at scale on different (and potentially more complex) data sets for performance and replicability, to assess its potential to move beyond Backpropagation. Until then, Backpropagation will remain the key algorithm used in Deep Learning.

Computer Hardware restrictions for AGI

There are also challenges on the hardware side. Leah Davidson in an article entitled Narrow vs. General AI: What’s Next for Artificial Intelligence? explains that in order "To reach AGI, computer hardware needs to increase in computational power to perform more total calculations per second (cps). Tianhe-2, a supercomputer created by China’s National University of Defense Technology, currently holds the record for cps at 33.86 petaflops (quadrillions of cps). Although that sounds impressive, the human brain is estimated to be capable of one exaflop (a billion billion cps). Technology still needs to catch up. "
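Taking the quoted figures at face value, and using 1 petaflop = 10^15 and 1 exaflop = 10^18 calculations per second, the size of the gap is a simple ratio:

$$
\frac{1\ \text{exaflop}}{33.86\ \text{petaflops}} = \frac{10^{18}}{33.86 \times 10^{15}} = \frac{1000}{33.86} \approx 29.5
$$

On these estimates, the human brain would still have roughly 30 times the raw throughput of Tianhe-2.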

Will Quantum Computing play a role in accelerating the arrival of AGI?

Quantum Computing has been heralded by some as a means of dramatically speeding up computation, enabling hardware capabilities to accelerate well beyond current levels.

Michael Baxter, in "Is Artificial General Intelligence possible? If so, when?", explains that "A key part of the narrative of Artificial General Intelligence is Moore’s Law — named after Intel co-founder Gordon Moore, who predicted a doubling in the number of transistors on integrated circuits every two years. Today, Moore’s Law is generally assumed to mean computers doubling in speed every 18 months."

"At a Moore’s Law trajectory, within 20 years computers would be 8,000 times faster than present, and within 30 years, one million times faster."

"And although cynics might suggest Moore’s Law as defined by Gordon Moore is slowing, other technologies, such as Photonics, molecular computing and quantum computers could see a much faster rate of growth. Take as an example, Rose’s Law. This suggests that the number of qubits in a quantum computer could double every year. Some experts predict quantum computers doubling in power every six months — if correct, within 20 years, quantum computers will be a trillion times more powerful than present."
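The figures in this quotation all come from compounding: if capability doubles every T months, then after t years it has grown by a factor of 2^(12t/T). A short Python sketch reproduces them (the function name and scenario list are illustrative, not from the article). Note that a strict 18-month doubling over 20 years gives 13.3 doublings, about 10,000 times; the "8,000 times" figure corresponds to 13 doublings (2^13 = 8,192), so the quoted numbers are approximate, while the 30-year and six-month figures match closely.

```python
def growth_factor(years, doubling_months):
    """Growth after `years` if capability doubles every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


scenarios = [
    ("Moore's Law, 18-month doubling, 20 years", 20, 18),
    ("Moore's Law, 18-month doubling, 30 years", 30, 18),
    ("Rose's Law (qubit count), 12-month doubling, 20 years", 20, 12),
    ("6-month doubling, 20 years", 20, 6),
]
for label, years, months in scenarios:
    print(f"{label}: ~{growth_factor(years, months):,.0f}x")
```

The exponent is what matters: halving the doubling period from 12 to 6 months squares the 20-year growth factor, from about a million to about a trillion.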

A key landmark for Quantum Computing is quantum supremacy defined as "...the goal of demonstrating that a programmable quantum device can solve a problem that classical computers practically cannot (irrespective of the usefulness of the problem)."

Google claimed to have achieved Quantum Supremacy in 2019; however, a team from IBM was reported to have challenged the claim.

Emily Conover authored an article entitled Google claimed quantum supremacy in 2019 — and sparked controversy stating that "Google researchers reported a demonstration of Quantum Computing’s power in the Oct. 24 Nature. Sycamore took only 200 seconds to perform a calculation that the researchers estimated would have taken a state-of-the-art supercomputer 10,000 years to compute (SN Online: 10/23/19). But IBM hit back with a paper suggesting an improved supercomputing technique that could theoretically perform the task in just 2.5 days. That’s still a serious chunk of computing time on the world’s most powerful computer, but not unattainable."

"Whether it’s here or just around the corner, quantum supremacy has been compared to the Wright brothers’ first flight at Kitty Hawk, N.C., in 1903. Airplanes became a reality but weren’t practically useful — yet the milestone still made the history books. In the same vein, Quantum Computers haven’t yet achieved their revolutionary potential.

"Quantum supremacy is “not going to change the world overnight. We have to be patient,” theoretical physicist John Preskill of Caltech says. But for now, he says: “Let’s celebrate.”"

It may be that the 2020s will be a decade of continued research breakthroughs in Quantum Computing, but it may be the end of the decade before Quantum Computing really makes a practical impact in the real world.
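To put the Sycamore figures quoted above on a common scale (200 seconds on Sycamore, an estimated 10,000 years on a classical supercomputer, and IBM's proposed 2.5 days), convert everything to seconds, assuming 365.25 days per year:

$$
\frac{10^{4} \times 365.25 \times 86{,}400\ \text{s}}{200\ \text{s}} = \frac{3.156 \times 10^{11}\ \text{s}}{200\ \text{s}} \approx 1.6 \times 10^{9}
\qquad\text{versus}\qquad
\frac{2.5 \times 86{,}400\ \text{s}}{200\ \text{s}} = \frac{216{,}000\ \text{s}}{200\ \text{s}} = 1{,}080
$$

If IBM's estimate holds, Sycamore's advantage on this task shrinks from roughly a billion-fold to roughly a thousand-fold: still large, but no longer out of reach for a classical machine.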

Research developments in the lab will continue to make headlines over the next few years. For example, researchers at Princeton University, in an announcement entitled "In leap for quantum computing, silicon quantum bits establish a long-distance relationship", explained how they had demonstrated that two quantum-computing components, known as silicon "spin" qubits, can interact even when spaced relatively far apart on a computer chip.

David Nield, reporting on a separate experiment in "Physicists Just Achieved The First-Ever Quantum Teleportation Between Computer Chips", explains that "Put simply, this breakthrough means that information was passed between the chips not by physical electronic connections, but through quantum entanglement – by linking two particles across a gap using the principles of quantum physics."

"We don't yet understand everything about quantum entanglement (it's the same phenomenon Albert Einstein famously called "spooky action"), but being able to use it to send information between computer chips is significant, even if so far we're confined to a tightly controlled lab environment."

Philipp Gerbert and Frank Rueb of BCG authored "The Next Decade in Quantum Computing—and How to Play", stating that some experts "...warn of a potential quantum winter, in which some exaggerated excitement cools and the buzz moves to other things." Perhaps Quantum Computing will play more of a practical role in advancing computational power for AI in the 2030s than in the 2020s, with the 2020s being the decade of continued scientific research breakthroughs and incremental advancements in Quantum Computing.

Efficiency of the human brain

Energy efficiency is also a challenge for computing hardware. Frits van Paasschen, in "The Human Brain vs. Computers. Should we fear Artificial Intelligence?", noted that "Brains are also about 100,000 times more energy-efficient than computers, but that will change as technology advances." Biology also offers remarkable storage density and durability. Frits van Paasschen notes that "DNA can hold more data in a smaller space than any of today’s digital memories. According to one estimate, all of the information on every computer in 2015 coded onto DNA could ‘fit in the back of an SUV.’" He adds that "The essence of memory, of course, lies in its durability...and hard drives decompose after 20 or 30 years. However, scientists have sequenced 30,000-year-old Neanderthal DNA."

A human can get through a day of mental work on a few glasses of water and a couple of apples and bananas. However, the most powerful algorithms of today require enormous computing power, and hence energy, to compete with the human brain. For example, Sam Shead, in an article entitled "Here's how much computing power Google DeepMind needed to beat Lee Sedol at Go", stated that "Google DeepMind may have made history by beating the world champion of Chinese board game Go on Wednesday but it needed an awful lot of computing power to do it."

"Altogether, DeepMind used 1,202 CPUs and 176 GPUs, according to a paper in Nature that was published by several of DeepMind's employees in January."

Hence the human brain still has significant advantages that have evolved over millions of years, and so we should perhaps not be surprised that AGI will take time to achieve. As Dr Anna Becker, PhD in AI and CEO of Endotech.io, has noted, we still need to discover a great deal more about the human brain before we attain AGI.

Transfer Learning

Niklas Donges, in "What is Transfer Learning? Exploring the popular Deep Learning approach", defines Transfer Learning as "...the reuse of a pre-trained model on a new problem. It's currently very popular in Deep Learning because it can train Deep Neural Networks with comparatively little data. This is very useful since most real-world problems typically do not have millions of labeled data points to train such complex models."

"According to DeepMind CEO Demis Hassabis, Transfer Learning is also one of the most promising techniques that could lead to AGI someday."

Dipanjan Sarkar, in "A Comprehensive Hands-on Guide to Transfer Learning with Real-World Applications in Deep Learning", explained that "Humans have an inherent ability to transfer knowledge across tasks. What we acquire as knowledge while learning about one task, we utilize in the same way to solve related tasks. The more related the tasks, the easier it is for us to transfer, or cross-utilize our knowledge."

"Conventional Machine Learning and Deep Learning algorithms, so far, have been traditionally designed to work in isolation. These algorithms are trained to solve specific tasks. The models have to be rebuilt from scratch once the feature-space distribution changes. Transfer learning is the idea of overcoming the isolated learning paradigm and utilizing knowledge acquired for one task to solve related ones."

Scott Martin, in "What Is Transfer Learning?" on NVIDIA Blogs, explains: "Take image recognition. Let’s say that you want to identify horses, but there aren’t any publicly available algorithms that do an adequate job. With transfer learning, you begin with an existing Convolutional Neural Network commonly used for image recognition of other animals, and you tweak it to train with horses.

Here’s how it works: First, you delete what’s known as the “loss output” layer, which is the final layer used to make predictions, and replace it with a new loss output layer for horse prediction. This loss output layer is a fine-tuning node for determining how training penalizes deviations from the labeled data and the predicted output.

Next, you would take your smaller dataset for horses and train it on the entire 50-layer neural network or the last few layers or just the loss layer alone. By applying these transfer learning techniques, your output on the new CNN will be horse identification."
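
The recipe in the NVIDIA description can be sketched in plain Python: keep a pretrained network, swap in a new output layer, and train on a small new dataset. Everything here is a hypothetical, deliberately tiny stand-in:

- the fixed weights `PRETRAINED_W` play the role of a pretrained CNN;
- four-number inputs play the role of images;
- a logistic-regression head plays the role of the new horse-prediction layer.

It illustrates the "train just the last layer" option. Fine-tuning more layers would also update the pretrained weights.

```python
import math

# Hypothetical "pretrained" weights standing in for a network trained on a large
# dataset (e.g. other animals). They are reused as-is and never updated below.
PRETRAINED_W = [
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.5],
    [0.5, 0.5, 0.0, 0.0],
]

def frozen_features(x):
    """Frozen feature extractor: ReLU(W x) with the pretrained weights."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_new_head(data, epochs=500, lr=0.1):
    """Train only a new logistic-regression output layer on the frozen features."""
    weights, bias = [0.0] * len(PRETRAINED_W), 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            p = sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            weights = [w - lr * err * fi for w, fi in zip(weights, f)]
            bias -= lr * err
    return weights, bias

def predict(head, x):
    """Probability that x belongs to the new class ("horse")."""
    weights, bias = head
    f = frozen_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias)

# Tiny hypothetical labelled set: 1 = "horse", 0 = "not horse".
horse_data = [
    ([1.0, 1.0, 0.0, 0.0], 1),
    ([0.9, 0.8, 0.1, 0.0], 1),
    ([0.0, 0.1, 1.0, 1.0], 0),
    ([0.1, 0.0, 0.9, 0.8], 0),
]
head = train_new_head(horse_data)
print(round(predict(head, [0.95, 0.9, 0.0, 0.1]), 3))
```

Only the head's handful of weights are learned, so four labelled examples are enough here. That same economy is what lets transfer learning work with comparatively little data in practice.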

Matt Turck, in "Frontier AI: How far are we from Artificial “General” Intelligence, really?", explains that "For transfer learning to lead to AGI, the AI would need to be able to do Transfer Learning across increasingly far apart tasks and domains, which would require increasing abstraction."

"Transfer Learning...works well when the tasks are closely related, but becomes much more complex beyond that."

PathNet: a step towards AGI

Théo Szymkowiak authored "DeepMind just published a mind blowing paper: PathNet", in which the author observed that "Since scientists started building and training Neural Networks, Transfer Learning has been the main bottleneck. Transfer Learning is the ability of an AI to learn from different tasks and apply its pre-learned knowledge to a completely new task. It is implicit that with this precedent knowledge, the AI will perform better and train faster than de novo Neural Networks on the new task."

DeepMind is working towards solving this with PathNet, a network of neural networks trained using both stochastic gradient descent and a genetic selection method.

"Transfer learning: After learning a task, the network fixes all parameters on the optimal path. All other parameters must be reinitialized, otherwise PathNet will perform poorly on a new task."

"Using A3C, the optimal path of the previous task is not modified by the back-propagation pass on the PathNet for a new task. This can be viewed as a safety net to not erase previous knowledge."

The full paper by Fernando et al. is "PathNet: Evolution Channels Gradient Descent in Super Neural Networks".
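
The evolutionary half of PathNet can be sketched, in much simplified form, as a tournament over "paths" through a grid of modules. This sketch loosely follows the description above, under stated assumptions:

- the sizes are illustrative;
- a path is just a list of module indices per layer;
- `toy_fitness`, with its hidden `TARGET`, is a hypothetical stand-in for task performance.

In the paper, a path's fitness comes from training its modules with gradient descent or A3C and measuring the result. That step is omitted here.

```python
import random

# Illustrative sizes: LAYERS layers of MODULES candidate modules, with each path
# using ACTIVE modules per layer (mutation may occasionally make two coincide).
LAYERS, MODULES, ACTIVE = 3, 10, 3

# Hypothetical stand-in for task performance: how many of a path's modules lie
# in a hidden "good" path. PathNet instead trains the path and measures reward.
TARGET = [{0, 1, 2}, {3, 4, 5}, {6, 7, 8}]

def toy_fitness(path):
    return sum(len(set(layer) & target) for layer, target in zip(path, TARGET))

def random_path(rng):
    """A genotype: for each layer, the indices of the modules the path uses."""
    return [rng.sample(range(MODULES), ACTIVE) for _ in range(LAYERS)]

def mutate(path, rng):
    """Shift each index by an integer in [-2, 2] with probability 1/(LAYERS*ACTIVE)."""
    p = 1.0 / (LAYERS * ACTIVE)
    return [[(m + rng.randint(-2, 2)) % MODULES if rng.random() < p else m
             for m in layer]
            for layer in path]

def evolve_paths(fitness, population=16, tournaments=2000, seed=0):
    """Binary tournaments: the loser is overwritten by a mutated copy of the winner."""
    rng = random.Random(seed)
    pop = [random_path(rng) for _ in range(population)]
    for _ in range(tournaments):
        i, j = rng.sample(range(population), 2)
        if fitness(pop[i]) < fitness(pop[j]):
            i, j = j, i  # ensure pop[i] is the winner
        pop[j] = mutate(pop[i], rng)
    return max(pop, key=fitness)

best = evolve_paths(toy_fitness)
# Once a task is learned, the modules on the best path are frozen; in PathNet the
# remaining modules would be reinitialised before training on the next task.
frozen_modules = {(layer, m) for layer, modules in enumerate(best) for m in modules}
print(toy_fitness(best), sorted(frozen_modules))
```

Freezing `frozen_modules` before the next task corresponds to what the quoted passage calls a safety net. Later training can reuse those modules but not overwrite them.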

Fascinating research work into Deep Reinforcement Learning and Transfer Learning has been published by:

- Barreto et al. "Transfer in Deep Reinforcement Learning Using Successor Features and Generalised Policy Improvement"

- Ada et al. "Generalization in Transfer Learning"

- Hu and Montana "Skill Transfer in Deep Reinforcement Learning under Morphological Heterogeneity"

- Ammanabrolu and Riedl "Transfer in Deep Reinforcement Learning using Knowledge Graphs"

Matt Turck also notes that "Recursive Cortical Networks (RCN) are yet another promising approach. Developed by Silicon Valley startup Vicarious, RCN were recently used to solve text-based CAPTCHAs with a high accuracy rate using significantly less data than its counterparts — 300x less in the case of a scene text recognition benchmark." For more details see the paper published by George et al. entitled "A generative vision model that trains with high data efficiency and breaks text-based CAPTCHAs."

Furthermore, Matt Turck acknowledges the research work of Josh Tenenbaum at MIT as showing promising results, along with hybrid AI approaches that combine different techniques, for example Deep Learning combined with Logic. This research is interesting because Deep Learning has been criticised for currently requiring large data sets to produce consistent, reproducible results in both in-sample and out-of-sample testing, whereas a human child can learn to tell a dog from a cat from a much smaller set of examples. This need for large data sets has been a barrier to scaling Deep Learning into other areas of the economy, including businesses (and areas of healthcare) that have smaller data sets. Overcoming it will be of increasing importance in the eras of 5G and 6G, when AI will increasingly sit on the edge (devices around us such as autonomous cars and robots). As noted above, Transfer Learning has been helping in this area, but it is still at a relatively early stage and remains an area of research and development.

An article in MIT Technology Review by Will Knight reported that "Two rival AI approaches combine to let machines learn about the world like a child".

The article noted that Deep Learning alongside symbolic reasoning enabled a program that learned in a very humanlike manner.

The paper, "The Neuro-Symbolic Concept Learner: Interpreting Scenes, Words, and Sentences From Natural Supervision", is a joint paper between MIT CSAIL, the MIT Department of Brain and Cognitive Sciences, the MIT-IBM Watson AI Lab and Google DeepMind.

Will Knight in the Technology Review observed that:

"More practically, it could also unlock new applications of AI because the new technology requires far less training data. Robot systems, for example, could finally learn on the fly, rather than spend significant time training for each unique environment they’re in."

“This is really exciting because it’s going to get us past this dependency on huge amounts of labeled data,” says David Cox, the scientist who leads the MIT-IBM Watson AI lab.

Ben Dickson also comments on the same research in an article entitled "Architects of Intelligence: A reflection on the now and future of AI", observing that "Tenenbaum recently headed a team of researchers who developed the Neuro-symbolic Concept Learner. The NSCL is a hybrid AI model that combines neural nets and symbolic AI to solve problems. The results of the researchers’ work show that NSCL can learn new tasks with much less data than pure neural network–based models require. Hybrid AI models are also explainable as opposed to being opaque black boxes."
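
The division of labour in a hybrid model like the NSCL can be illustrated with a toy example. A neural perception module turns an image into a structured list of objects. A symbolic executor then answers a question by running a small program over that list.

In this sketch, the "neural" output `scene` and the parsed `program` are hard-coded assumptions. The operation names are illustrative rather than the NSCL's actual program language. Each reasoning step is an explicit operation, so the path to an answer can be inspected. That is the sense in which such hybrids are described as explainable.

```python
# Hypothetical output of a neural perception module: one record per detected
# object. In the NSCL these attributes are learned; here they are hard-coded.
scene = [
    {"shape": "cube", "color": "red", "size": "large"},
    {"shape": "sphere", "color": "red", "size": "small"},
    {"shape": "cube", "color": "blue", "size": "small"},
]

def filter_objects(objects, attribute, value):
    return [o for o in objects if o[attribute] == value]

def execute(program, objects):
    """Run a symbolic program, given as a list of (operation, *arguments) steps."""
    result = objects
    for op, *args in program:
        if op == "filter":
            result = filter_objects(result, *args)
        elif op == "count":
            result = len(result)
        elif op == "exists":
            result = len(result) > 0
        else:
            raise ValueError(f"unknown operation: {op}")
    return result

# "How many red cubes are there?", parsed (here by hand) into a program.
program = [("filter", "color", "red"), ("filter", "shape", "cube"), ("count",)]
print(execute(program, scene))  # 1
```
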

"But not everyone is a fan of hybrid AI models."

“Note that your brain is all Neural Networks. We have to come up with different architectures and different training frameworks that can do the kinds of things that classical AI was trying to do, like reasoning, inferring an explanation for what you’re seeing and planning,” Yoshua Bengio says.

Geoffrey Hinton, another Deep Learning pioneer, is also critical of hybrid approaches. In his interview with Martin Ford, he compares hybrid AI to combining electric motors and internal combustion engines. “That’s how people in conventional AI are thinking. They have to admit that Deep Learning is doing amazing things, and they want to use Deep Learning as a kind of low-level servant to provide them with what they need to make their symbolic reasoning work,” Hinton says. “It’s just an attempt to hang on to the view they already have, without really comprehending that they’re being swept away.”

The above shows that there is an ongoing dispute in the research community about the best way to move AI towards general capabilities that match human levels of ability.

We can summarise from the above that whilst there is a great deal of research work and constant incremental advances, it is going to take time to attain AGI.

Some fear that the future arrival of AGI will leave humans redundant. Others, myself included, believe that we will use AI to augment ourselves and that, by the time AGI arrives, we will be smarter and more enlightened.

Furthermore, one may argue that if the intention of AGI is to develop AI that can match the capabilities of the human brain, then surely we need to understand more about the human brain.

Using Deep Learning to improve our understanding of the human brain

Nathan Collins, in an article entitled "Deep Learning comes full circle", explains how Deep Learning is being applied to help us understand our brains better, stating that "Although not explicitly designed to do so, certain Artificial Intelligence systems seem to mimic our brains’ inner workings more closely than previously thought, suggesting that both AI and our minds have converged on the same approach to solving problems. If so, simply watching AI at work could help researchers unlock some of the deepest mysteries of the brain."

“There’s a real connection there,” said Daniel Yamins, assistant professor of psychology. "Now, Yamins, who is also a faculty scholar of the Stanford Neurosciences Institute and a member of Stanford Bio-X, and his lab are building on that connection to produce better theories of the brain – how it perceives the world, how it shifts efficiently from one task to the next and perhaps, one day, how it thinks."

"Then came a surprise. In 2012, AI researchers showed that a Deep Learning Neural Network could learn to identify objects in pictures as well as a human being, which got neuroscientists wondering: How did Deep Learning do it?"

"The same way the brain does, as it turns out. In 2014, Yamins and colleagues showed that a deep learning system that had learned to identify objects in pictures – nearly as well as humans could – did so in a way that closely mimicked the way the brain processes vision. In fact, the computations the deep learning system performed matched activity in the brain’s vision-processing circuits substantially better than any other model of those circuits."

Another example of interesting research relates to Neuromorphic chips. Ben Dickson in "What is neuromorphic computing?" states that "The structure of neuromorphic computers makes them much more efficient at training and running neural networks. They can run AI models at a faster speed than equivalent CPUs and GPUs while consuming less power. This is important since power consumption is already one of AI’s essential challenges."

"The smaller size and low power consumption of neuromorphic computers make them suitable for use cases that require to run AI algorithms at the edge as opposed to the cloud."

Peter Morgan in an article entitled "Deep Learning and Neuromorphic Chips" explains that "Neuromorphic chips attempt to model in silicon the massively parallel way the brain processes information as billions of neurons and trillions of synapses respond to sensory inputs such as visual and auditory stimuli. Those neurons also change how they connect with each other in response to changing images, sounds, and the like. This is the process we call learning and memories are believed to be held in the trillions of synaptic connections."

"The Human Brain Project, a European lead multibillion dollar project to simulate a human brain, has incorporated Steve Furber’s group from the University of Manchester’s neuromorphic chip design into their research efforts. SpiNNaker has so far been able to accomplish the somewhat impressive feat of simulating a billion neurons with analogue spike trains in hardware. Once this hardware system scales up to 80 billion neurons we will have in effect the first artificial human brain, a momentous and historical event."

"Darwin is an effort originating out of two universities in China. The successful development of Darwin demonstrates the feasibility of real-time execution of Spiking Neural Networks in resource-constrained embedded systems. Finally, IARPA, a research arm of the US Intelligence Department, has several projects ongoing involving biologically inspired AI and reverse engineering the brain. One such project is MICrONS or Machine Intelligence from Cortical Networks which “seeks to revolutionize machine learning by reverse-engineering the algorithms of the brain.” The program is expressly designed as a dialogue between data science and neuroscience with the goal to advance theories of neural computation."

As Peter Morgan observes, this is an active area of research and development, and it remains to be seen whether we will actually reverse engineer the human brain using this technique. At the very least, one would expect neuromorphic computing to lead to exciting opportunities within the business and healthcare communities in the 2020s.
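
Spiking Neural Networks, such as those run on Darwin and SpiNNaker, communicate through discrete spikes rather than the continuous activations of conventional Deep Learning. The sketch below implements the textbook leaky integrate-and-fire neuron. This is a common building block, not necessarily the neuron model that any particular chip uses, and all parameter values are illustrative. It shows the basic behaviour: the neuron integrates input and leaks charge back towards rest. It fires and resets only when its potential crosses a threshold.

```python
def simulate_lif(input_current, steps=100, dt=1.0, tau=10.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Leaky integrate-and-fire neuron, integrated with Euler steps.

    Returns the list of time steps at which the neuron emitted a spike.
    """
    v = v_rest
    spike_times = []
    for t in range(steps):
        # The potential leaks back towards rest while integrating the input current.
        v += (dt / tau) * (-(v - v_rest) + resistance * input_current)
        if v >= v_threshold:
            spike_times.append(t)  # fire a spike...
            v = v_reset            # ...and reset the membrane potential
    return spike_times

print(simulate_lif(0.5))       # []: too weak to ever reach the threshold
print(simulate_lif(2.0)[:3])   # [6, 13, 20]
print(len(simulate_lif(3.0)))  # stronger input gives more frequent spikes
```

Weak input never produces a spike, while stronger input produces more frequent ones, so information is carried by spike timing and rate. Hardware that does work only when spikes occur is often cited as one reason neuromorphic designs can be power-efficient.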

Baely Almonte, in an article entitled "Engineering professor uses Machine Learning to help people with paralysis regain independence", describes how a brain-computer interface that uses Machine Learning to translate brain patterns into instructions for a personal computer, or even a powered wheelchair, can be used to understand the specific tasks that a person wants to achieve.

An article by Zoë Corbyn entitled "Are brain implants the future of thinking?" notes that "Brain-computer interface technology is moving fast and Silicon Valley is moving in. Will we all soon be typing with our minds?" The article states that "But many experts anticipate that the technology will be available for people with impairments or disabilities within five or 10 years. For non-medical use, the timeframe is greater – perhaps 20 years."

The Future is Humans Augmenting ourselves with AI

Along the way, the arrival of 6G around 2030, which Marcus Weldon of Nokia Bell Labs claims "...will be a sixth sense experience for humans and machines", where biology meets AI, and the potential for Quantum Computing to take off in the real world in the 2030s may together accelerate the pathway towards stronger AI in the 2030s and beyond.

However, to reduce the fears and anxieties of many out there, this article should make clear that given the challenges of achieving AGI, it is not going to magically appear whilst a teenager is playing around on their gaming computer. The computational complexities and other design challenges for AGI are going to take time to resolve and the period of the 2020s is going to be about maximising the benefits of cutting edge AI techniques across the sectors of the economy.

Therefore, whilst we will continue to hear about breakthroughs of Deep Learning algorithms outperforming humans at specific tasks in 2020 and across the decade, we are unlikely to see a single AI system outperforming humans across a broad range of tasks in the foreseeable future. For more information see the article by Kyle Wiggers "Geoffrey Hinton and Demis Hassabis: AGI is nowhere close to being a reality."

Some fear the consequences of the eventual arrival of AGI and ASI will be highly negative for humanity with jobs at risk and humanity itself threatened as noted in the infographic below.

Source for infographic below: Lawtomated, AI for Legal: ANI, AGI and ASI

Whilst I personally do believe that AGI will arrive someday in the future (albeit not in the 2020s), I don't believe that it will occur in a vacuum or, perhaps better stated, in isolation from humans also learning to use advanced AI to enhance ourselves.

The work being undertaken by the likes of the Stanford Neurosciences Institute into using AI to further understand how our own brains work, along with the research and development into neuromorphic chips and brain-computer interfaces, means that AI will not advance in isolation. Our understanding of our own brains will advance too, as will our ability to augment ourselves with advanced AI, and hence there will be a partnership between AI and humans in the future rather than AGI or ASI simply replacing humans. That said, we will need to become more enlightened and aware of the risks (control by others). Perhaps the real risk with AI is not so much AGI and ASI replacing humans but rather particular people seeking to use technology to influence (perhaps even control) the actions of others.

As a society we are already engaged in a debate about the influence of algorithm-driven social media and the impact of the Cambridge Analytica scandal involving Facebook, with our politicians still playing catch-up in understanding modern technology. Our ability to handle the challenges that face us today, rather than focusing anxiety on technologies that are yet to arrive, will in fact be key to creating the foundations for how we handle the eventual arrival of AGI and use advanced AI to further develop humanity. As things stand today, and for the foreseeable future, humanity faces genuine dangers from extreme weather events, climate change, and the challenge of feeding a world whose population is forecast to grow from 7.7 billion to 11 billion people this century.

Calos Miskins notes in "How the new era of 5G connectivity will enhance farming" that "...by 2050 there will be over 9 billion people on the planet and food production needs to increase by 60% to sustain that. So, how are we going to feed everyone? How are we going to sustain the planet and ensure a stable future for the upcoming generations?" and argues that we will need to use AI and 5G to further drive AgTech solutions to meet the challenges that lie ahead.

Perhaps, from a philosophical perspective, it may be better that we are not on the verge of introducing AGI into the world today. If AGI were to learn from our behaviour in recent times, consider the experience of the chatbot Tay. Tay was a chatbot that Microsoft engineers placed on Twitter to learn from humans, and it almost immediately began using offensive language; as Rachel Metz put it in the title of her article, "Microsoft’s neo-Nazi sexbot was a great lesson for makers of AI assistants."

Furthermore, perhaps we are not ready for the huge economic transition that AGI may bring, at a time when the aftermath of the Great Recession is still felt in certain sectors of the global economy, with recovery only recently occurring, and when its effects on global politics and societies are still playing out.

Perhaps, at this moment in time, it is better that we have ANI, given the immediate problem we face in coping with and making sense of the deluge of data that the digital economy is generating and that 5G will generate in the next decade.

Furthermore, as we use Deep Learning to learn more about our own brains and transition to Industry 4.0 in the 2020s, we will have time to use ANI to augment our own capabilities and to apply AI to the challenges we face in terms of economic growth and cleaner technologies. We will need AI technology to help solve the problems we face in relation to the environment and to find innovative ways to feed a growing world population. AI will also play a key role in addressing the healthcare challenges that we face around the world.

In the 2020s, the cutting edge of AI is going to occur increasingly at the edge (embedded, on device), resulting in exciting new opportunities across businesses, education (virtual tutors and remote classrooms) and the healthcare sector, rather than AGI / ASI replacing us.

The section below provides a high-level overview of examples of state-of-the-art AI techniques today, along with a glossary of the types of Machine Learning.

Examples of State of the Art AI Today

AI research had an exciting decade in the 2010s, and recent work has continued to produce developments that may in turn lead to innovative products and services in real-world applications such as the entertainment sector (including gaming), healthcare, finance, art, manufacturing and robotics. The section below outlines some of the cutting edge techniques used within the AI community today that we should expect to hear more about during the 2020s.

GANs

Generative Adversarial Networks (GANs) were invented by Ian Goodfellow and his colleagues in 2014, reportedly after an argument in a bar. A GAN pairs two Deep Neural Networks, a generator and a discriminator. In image GANs such as DCGAN, the generator typically creates images using transposed convolutional (often called "deconvolutional") layers, while the discriminator is a CNN that classifies images as real or fake.

The generator continuously generates data while the discriminator learns to distinguish fake data from real data. As training progresses, the generator gets better at producing fake data that looks real, while the discriminator gets better at telling the two apart, which in turn pushes the generator to improve further. Once trained, the generator can be used on its own to produce realistic data; for example, a GAN trained on faces can generate very realistic images of faces that do not exist. GANs are viewed as one of the most exciting areas of AI today, with applications in healthcare and the entertainment sector. They are the technology underlying FaceApp, and the darker side of GANs is evident in deepfakes and the potential for fake news. GANs also have potential implications for Cyber Security, as noted by Brad Harris in an article entitled "Generative Adversarial Networks and Cybersecurity: Part 2".
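
The adversarial loop described above can be sketched in a deliberately tiny one-dimensional setting. This is an illustration under stated assumptions, not an image GAN:

- the "real" data are samples from a normal distribution with mean 4;
- the generator is a hypothetical linear map G(z) = a*z + b;
- the discriminator is a logistic regression D(x) = sigmoid(w*x + c).

The gradients are derived by hand. The losses are the standard binary cross-entropy for the discriminator and the widely used "non-saturating" loss for the generator.

```python
import math
import random

def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D should output 1 for real samples and 0 for fakes."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D calls its samples real."""
    return -math.log(d_fake)

def train_toy_gan(steps=5000, lr=0.01, real_mean=4.0, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator G(z) = a*z + b
    w, c = 0.1, 0.0  # discriminator D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        x_real = rng.gauss(real_mean, 1.0)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b

        # Discriminator step: gradient descent on discriminator_loss w.r.t. w and c.
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: gradient descent on generator_loss through the updated D.
        d_fake = sigmoid(w * x_fake + c)
        dloss_dx = -(1.0 - d_fake) * w  # d generator_loss / d x_fake
        a -= lr * dloss_dx * z
        b -= lr * dloss_dx
    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real data mean 4.0)")
```

Plain GAN training can oscillate rather than settle, so the sketch makes no promise about convergence. A linear discriminator can tell the two distributions apart only by their means, so the offset b is the parameter that receives a useful learning signal. Real GANs use deep networks on both sides so that much richer differences can be detected and reproduced.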

Source for Image below Sarvasv Kupati A Brief Introduction To GANs

Edmond de Belamy is a portrait created with a Generative Adversarial Network in 2018 by the Paris-based arts collective Obvious; it sold for $432,500 at Christie's in October 2018.

Image below Edmond de Belamy

Ajay Uppili Arasanipalai authored "Generative Adversarial Networks - The Story So Far", which summarises the journey so far with GANs:

- GAN: Generative Adversarial Networks

- DCGAN: Deep Convolutional Generative Adversarial Network

- CGAN: Conditional Generative Adversarial Network

- CycleGAN

- CoGAN: Coupled Generative Adversarial Networks

- ProGAN: Progressive growing of Generative Adversarial Networks

- WGAN: Wasserstein Generative Adversarial Networks

- SAGAN: Self-Attention Generative Adversarial Networks

- BigGAN: Big Generative Adversarial Networks

- StyleGAN: Style-based Generative Adversarial Networks

Exciting research into GANs continues. Recent papers of interest include Mukherjee et al., "Protecting GANs against privacy attacks by preventing overfitting", which developed a new GAN architecture that the authors termed "privGAN", with potential applications to Cryptography and Security. In addition, in the research paper "Harnessing GANs for Zero-Shot Learning of New Classes in Visual Speech Recognition", Kumar et al. apply GANs to Visual Speech Recognition (VSR) and report that this increases the accuracy of the VSR system by a significant margin of 27%. Furthermore, the authors claim that their approach can handle speaker-independent out-of-vocabulary phrases and can seamlessly generate videos for a new language (Hindi) using English training data. The authors further claim that this is the first work to demonstrate empirically that GANs can be used to generate unseen classes of training samples in the VSR domain, thereby facilitating zero-shot learning.

Source for Image Below: Kumar et al. "Harnessing GANs for Zero-Shot Learning of New Classes in Visual Speech Recognition", Overall pipeline demonstrated with the generator G as the TC-GAN architecture

Deep Reinforcement Learning (DRL)

Deep Reinforcement Learning combines Reinforcement Learning with Deep Learning. DRL algorithms model an agent that learns to interact with an environment in the best way possible. The agent continuously takes actions with its goal in mind, and the environment rewards or penalises the agent for good or bad actions respectively. In this way, the agent learns to behave optimally so as to achieve its goal. AlphaGo from DeepMind is one of the best-known examples: the agent learned to play the game of Go and went on to defeat the top professional Lee Sedol in 2016.
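
The action-reward loop can be made concrete with a small sketch. To stay self-contained, it uses tabular Q-learning rather than a deep network. This is the Reinforcement Learning core that DRL methods such as DeepMind's DQN scale up by replacing the table of values with a deep neural network. The five-state corridor, its rewards and the hyperparameters are hypothetical choices for illustration.

```python
import random

N_STATES = 5        # states 0..4 along a corridor; state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right

def step(state, action):
    """Hypothetical environment: +1 for reaching the goal, -0.01 for every other move."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, occasionally explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Value of the best action from the next state (zero once the episode ends).
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Nudge the estimate towards reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}: always move right, towards the goal
```
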

It is a very exciting area of AI, with François-Lavet et al., in a paper entitled "An introduction to Deep Reinforcement Learning", explaining that "This field of research has been able to solve a wide range of complex decision-making tasks that were previously out of reach for a machine. Thus, DRL opens up many new applications in domains such as healthcare, robotics, smart grids, finance, and many more."

Another prominent example of DRL is AlphaGo Zero, which learned to play Go purely through self-play, without learning from human games.

Heess et al. from the DeepMind team published "Emergence of Locomotion Behaviours in Rich Environments", which set out how DeepMind's AI used DRL to teach itself to walk.

"Everything the stick figure is doing in this video is self-taught. The jumping, the limboing, the leaping — all of these are behaviors that the computer has devised itself as the best way of getting from A to B. All DeepMind’s programmers have done is give the agent a set of virtual sensors (so it can tell whether it’s upright or not, for example) and then incentivize to move forward. The computer works the rest out for itself, using trial and error to come up with different ways of moving."

An article by Open Data Science entitled Best Deep Reinforcement Learning Research of 2019 So Far provides an overview of some of the leading research in DRL in 2019. Examples of fascinating research in the field include Chakraborty et al. with Capturing Financial markets to apply Deep Reinforcement Learning, Sehgal et al. with Deep Reinforcement Learning using Genetic Algorithm for Parameter Optimization and Jiang and Luo who authored Neural Logic Reinforcement Learning.

Active research into DRL that may impact our daily lives during the 2020s is ongoing, for example Cai et al. produced "High-speed Autonomous Drifting with Deep Reinforcement Learning", and Capasso et al. "Intelligent Roundabout Insertion using Deep Reinforcement Learning." Moreover, Genc et al. (a team from Amazon), produced a paper entitled "Zero-Shot Reinforcement Learning with Deep Attention Convolutional Neural Networks".

It is anticipated that Multi-agent DRL will play a key role in the next decade with autonomous vehicles and robotics.

One challenge that Reinforcement Learning algorithms face arises when rewards are sparse or infrequent, so that the algorithm receives insufficient feedback to make progress towards its goal. Furthermore, rewards may incentivise gains in the short term at the expense of long-term progress, a situation known as deceptive rewards, which Matthew Hutson describes as leaving algorithms trapped in dead ends and DRL stuck in a rut.

Parisi et al., in "Long-Term Visitation Value for Deep Exploration in Sparse Reward Reinforcement Learning", observe that "Reinforcement Learning with sparse rewards is still an open challenge." They report that their approach outperforms existing methods in environments with sparse rewards, using tabular environments to benchmark exploration.
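
One generic family of remedies for sparse rewards adds an intrinsic exploration bonus that favours rarely visited states. This is separate from the long-term visitation value that Parisi et al. propose. The toy sketch below uses hypothetical settings: a corridor whose only reward sits at the far end. A walker that follows a count-based bonus sweeps straight to the goal, whereas an undirected random walk typically needs far more steps. It illustrates directed exploration in general and is not an implementation of their method.

```python
import math
import random
from collections import Counter

def exploration_bonus(count, beta=1.0):
    """Count-based intrinsic reward beta / sqrt(count + 1): unvisited states score highest."""
    return beta / math.sqrt(count + 1)

def steps_to_goal(length, use_bonus, seed=0, max_steps=100_000):
    """Steps needed to reach the only rewarding state, at the far end of a corridor.

    The extrinsic reward is sparse: nothing until state length - 1. Without the
    bonus the walker moves at random; with it, the walker moves to whichever
    neighbour currently has the larger exploration bonus (ties broken at random).
    """
    rng = random.Random(seed)
    visits = Counter({0: 1})
    state = 0
    for t in range(1, max_steps + 1):
        neighbours = [max(state - 1, 0), min(state + 1, length - 1)]
        if use_bonus:
            rng.shuffle(neighbours)
            state = max(neighbours, key=lambda s: exploration_bonus(visits[s]))
        else:
            state = rng.choice(neighbours)
        visits[state] += 1
        if state == length - 1:
            return t
    return max_steps

print(steps_to_goal(30, use_bonus=True))   # 29: a straight sweep
print(steps_to_goal(30, use_bonus=False))  # typically far more
```
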

Neuroevolution

Neuroevolution, or neuro-evolution, is defined in Wikipedia as "a form of AI that uses evolutionary algorithms to generate Artificial Neural Networks (ANN), parameters, topology and rules. It is most commonly applied in artificial life, general game playing and evolutionary robotics."

Matthew Hutson in "Computers Evolve a New Path Toward Human Intelligence" explains that "In Neuroevolution, you start by assigning random values to the weights between layers. This randomness means the network won’t be very good at its job. But from this sorry state, you then create a set of random mutations — offspring neural networks with slightly different weights — and evaluate their abilities. You keep the best ones, produce more offspring, and repeat. (More advanced neuroevolution strategies will also introduce mutations in the number and arrangement of neurons and connections.) Neuroevolution is a meta-algorithm, an algorithm for designing algorithms. And eventually, the algorithms get pretty good at their job."
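
The mutate, evaluate and select loop in Hutson's description can be sketched in a few lines. The sketch makes the following assumptions:

- a toy task: fitting the line y = 2x - 1 from 21 points;
- a one-neuron "network" whose only weights are a slope and a bias;
- Gaussian weight mutations;
- simple truncation selection.

It evolves weights only, not the number and arrangement of neurons. None of these choices come from the article.

```python
import random

def make_dataset():
    """Hypothetical task: 21 points on the line y = 2x - 1 for x in [-1, 1]."""
    return [(x / 10, 2 * (x / 10) - 1) for x in range(-10, 11)]

def net_output(weights, x):
    """A one-neuron 'network' with a linear activation; weights = (slope, bias)."""
    w, b = weights
    return w * x + b

def error(weights, data):
    """Mean squared error: lower is fitter."""
    return sum((net_output(weights, x) - y) ** 2 for x, y in data) / len(data)

def neuroevolve(data, population=20, parents=5, generations=100, sigma=0.1, seed=0):
    rng = random.Random(seed)
    # Random initial weights: these networks start out poor at the task.
    pop = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(population)]
    for _ in range(generations):
        # Evaluate every network and keep the best ones unchanged.
        pop.sort(key=lambda weights: error(weights, data))
        elite = pop[:parents]
        # Offspring: copies of randomly chosen elite networks with small Gaussian
        # mutations to each weight.
        children = [tuple(v + rng.gauss(0.0, sigma) for v in rng.choice(elite))
                    for _ in range(population - parents)]
        pop = elite + children
    return min(pop, key=lambda weights: error(weights, data))

data = make_dataset()
best = neuroevolve(data)
print(best, error(best, data))
```

No gradients are computed anywhere: the only feedback is each network's overall error. That is why the same loop still works when a gradient is unavailable, at the cost of evaluating many candidate networks.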

Matthew Hutson also discusses AI researcher Kenneth Stanley's work on the steppingstone principle, explaining that "The steppingstone principle goes beyond traditional evolutionary approaches. Instead of optimizing for a specific goal, it embraces creative exploration of all possible solutions."

"The steppingstone’s potential can be seen by analogy with biological evolution. In nature, the tree of life has no overarching goal, and features used for one function might find themselves enlisted for something completely different. Feathers, for example, likely evolved for insulation and only later became handy for flight."

Hutson also notes that AI researcher Jeff Clune published "AI-GAs: AI-generating algorithms, an alternate paradigm for producing general Artificial Intelligence", writing that "Clune argues that open-ended discovery is likely the fastest path toward artificial general intelligence — machines with nearly all the capabilities of humans. Most of the AI field is focused on manually designing all the building blocks of an intelligent machine, such as different types of neural network architectures and learning processes. But it’s unclear how these might eventually get bundled together into a general intelligence."

"Instead, Clune thinks more attention should be paid to AI that designs AI. Algorithms will design or evolve both the Neural Networks and the environments in which they learn." One such approach is the POET algorithm (Paired Open-Ended Trailblazer).

Evolutionary algorithms can help with the sparse rewards that Reinforcement Learning may encounter, but they may require substantial processing time.
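Stanley's steppingstone idea has a concrete algorithmic counterpart in novelty search (Lehman and Stanley). Novelty search selects individuals for how different their behaviour is from behaviours already seen, rather than for progress towards a goal. It was motivated partly by deceptive objectives like those mentioned earlier. The sketch below is a heavily simplified version under stated assumptions:

- genomes are hypothetical 2-D vectors;
- `behaviour` is an invented mapping to a 2-D outcome (think of where a robot ends up);
- only the single most novel behaviour of each generation is added to the archive.

```python
import math
import random


def behaviour(genome):
    """Hypothetical mapping from a genome to a 2-D behaviour, e.g. where a robot ends up."""
    return (math.tanh(genome[0]), math.tanh(genome[1]))


def novelty(b, others, k=5):
    """Mean distance from behaviour b to its k nearest neighbours among `others` (non-empty)."""
    nearest = sorted(math.dist(b, o) for o in others)[:k]
    return sum(nearest) / len(nearest)


def novelty_search(generations=30, pop_size=20, n_parents=5, sigma=0.3, seed=0):
    rng = random.Random(seed)
    population = [(rng.gauss(0.0, 0.1), rng.gauss(0.0, 0.1)) for _ in range(pop_size)]
    archive = []  # behaviours remembered from earlier generations
    for _ in range(generations):
        behaviours = [behaviour(g) for g in population]
        # Score each individual by how far its behaviour is from the rest of the
        # population and from the archive. There is no task objective anywhere.
        scores = [
            novelty(b, behaviours[:i] + behaviours[i + 1:] + archive)
            for i, b in enumerate(behaviours)
        ]
        ranked = sorted(range(pop_size), key=lambda i: scores[i], reverse=True)
        archive.append(behaviours[ranked[0]])  # simplification: archive the most novel one
        parents = [population[i] for i in ranked[:n_parents]]
        population = [
            tuple(v + rng.gauss(0.0, sigma) for v in rng.choice(parents))
            for _ in range(pop_size)
        ]
    return archive


archive = novelty_search()
print(len(archive), "behaviours archived")
```

There is no objective anywhere in the loop. The archive of past behaviours is what pushes the search towards unexplored regions.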

In an article entitled "Intel AI researchers combine Reinforcement Learning methods to teach 3D humanoid how to walk", Khari Johnson reports that Collaborative Evolutionary Reinforcement Learning (CERL) can achieve better performance than either gradient-based or evolutionary Reinforcement Learning algorithms achieve on their own.

Such systems may be applied to things like robotic control, systems governing autonomous vehicle function, and other complex AI tasks.

Capsules

In 2017 Hinton and his team (Sara Sabour, Nicholas Frosst and Geoffrey Hinton, "Dynamic Routing Between Capsules") introduced a dynamic routing mechanism for capsule networks. The approach was reported to reduce error rates on MNIST and to reduce the training set sizes needed. Its results on highly overlapping digits were reported to be considerably better than those of a CNN.

A Capsule Neural Network (CapsNet) is a type of Artificial Neural Network (ANN) designed to model hierarchical relationships better. The approach is an attempt to mimic biological neural organisation more closely.

Source for Image Below: Capsules, Aurélien Géron

The idea is to add structures called “capsules” to a Convolutional Neural Network (CNN), and to reuse output from several of those capsules to form more stable (with respect to various perturbations) representations for higher order capsules.

CapsNets address the "Picasso problem" in image recognition: images that have all the right parts but not in the correct spatial relationship (e.g., a "face" in which the positions of the mouth and one eye are switched). For image recognition, CapsNets exploit the fact that viewpoint changes have nonlinear effects at the pixel level but linear effects at the part/object level. This can be compared to inverting the rendering of an object made of multiple parts.
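The core of the 2017 dynamic routing paper is "routing by agreement". Each lower-level capsule sends its prediction to the higher-level capsules whose outputs agree with it. The sketch below follows that iterative procedure in plain Python on toy inputs. It assumes the prediction vectors `u_hat` are already given. In a real CapsNet they come from learned transformation matrices applied to lower capsule outputs, which are omitted here. Function names are illustrative.

```python
import math


def squash(s):
    """Capsule non-linearity: keeps the direction of s and maps its length into [0, 1)."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0] * len(s)
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def dynamic_routing(u_hat, iterations=3):
    """Routing by agreement.

    u_hat[i][j] is lower capsule i's prediction vector for higher capsule j.
    Returns the higher-level output vectors v and the final coupling coefficients c.
    """
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits, initially uniform
    for _ in range(iterations):
        # Coupling coefficients: softmax of each lower capsule's logits over higher capsules.
        c = []
        for i in range(n_in):
            exps = [math.exp(logit) for logit in b[i]]
            total = sum(exps)
            c.append([e / total for e in exps])
        # Each higher capsule squashes the coupling-weighted sum of its predictions.
        v = [
            squash([sum(c[i][j] * u_hat[i][j][d] for i in range(n_in)) for d in range(dim)])
            for j in range(n_out)
        ]
        # Agreement (dot product) between a prediction and the output raises the logit.
        for i in range(n_in):
            for j in range(n_out):
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v, c


# Toy input: all three lower capsules agree about higher capsule 0, but not about capsule 1.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
v, c = dynamic_routing(u_hat)
print([round(math.hypot(*vec), 3) for vec in v])  # capsule 0's output is the longer one
```

In this example, routing increases each lower capsule's coupling to the capsule it agrees with. In a CapsNet, the length of an output vector is read as the probability that the entity it represents is present.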

In 2019 Hinton, Sara Sabour and Yee Whye Teh, together with researchers from the Oxford Robotics Institute, published a paper entitled Stacked Capsule Autoencoders.

Kyle Wiggers, in "AI capsule system classifies digits with state-of-the-art accuracy", stated that "the coauthors of the study say that the SCAE’s design enables it to register industry-leading results for unsupervised image classification on two open source data sets, the SVHN (which contains images of small cropped digits) and the MNIST (handwritten digits). Once the SCAE was fed images from each and the resulting clusters were assigned labels, it achieved 55% accuracy on SVHN and 98.7% accuracy on MNIST, which were further improved to 67% and 99%, respectively."

Smarter Training of Neural Networks

Jonathan Frankle and Michael Carbin of MIT CSAIL published "The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks".
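The procedure at the heart of Frankle and Carbin's paper has four steps:

1. Train a network.
2. Prune the smallest-magnitude weights.
3. Reset the surviving weights to their original initial values.
4. Retrain the resulting sparse subnetwork.

The paper repeats this iteratively. The sketch below runs one round of the procedure on a deliberately tiny, hypothetical model: a linear regression in which only two of eight input features matter, trained by plain gradient descent. It is a toy illustration of the mechanics, not a reproduction of the paper's experiments.

```python
import random


def predict(w, row):
    return sum(wi * xi for wi, xi in zip(w, row))


def mse(w, X, y):
    return sum((predict(w, row) - t) ** 2 for row, t in zip(X, y)) / len(X)


def train(w, mask, X, y, lr=0.1, steps=500):
    """Gradient descent on mean squared error; masked-out weights are held at zero."""
    w = [wi * mi for wi, mi in zip(w, mask)]
    n = len(X)
    for _ in range(steps):
        errors = [predict(w, row) - t for row, t in zip(X, y)]
        grad = [2.0 / n * sum(e * row[k] for e, row in zip(errors, X)) for k in range(len(w))]
        w = [(wi - lr * g) * mi for wi, g, mi in zip(w, grad, mask)]
    return w


def lottery_ticket_round(n_features=8, n_samples=50, keep=2, seed=0):
    rng = random.Random(seed)
    true_w = [2.0, -3.0] + [0.0] * (n_features - 2)  # only the first two features matter
    X = [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_samples)]
    y = [predict(true_w, row) for row in X]
    w_init = [rng.gauss(0.0, 1.0) for _ in range(n_features)]  # remember the initialisation
    # 1. Train the dense model.
    dense = train(w_init, [1] * n_features, X, y)
    # 2. Prune: keep only the `keep` largest-magnitude trained weights.
    top = sorted(range(n_features), key=lambda k: abs(dense[k]), reverse=True)[:keep]
    mask = [1 if k in top else 0 for k in range(n_features)]
    # 3. Rewind the survivors to their ORIGINAL initial values and retrain the sparse model.
    ticket = train(w_init, mask, X, y)
    return mask, ticket, mse(ticket, X, y)


mask, ticket, loss = lottery_ticket_round()
print(mask)  # [1, 1, 0, 0, 0, 0, 0, 0]
```

On this toy problem the mask keeps exactly the two informative weights. The rewound sparse model then retrains to near-zero error with a quarter of the parameters.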

Adam Conner-Simons, in "Smarter training of Neural Networks", provided an incisive summary of the paper, noting that "In a new paper, researchers from MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) have shown that Neural Networks contain subnetworks that are up to 10 times smaller, yet capable of being trained to make equally accurate predictions - and sometimes can learn to do so even faster than the originals."

"MIT professor Michael Carbin says that his team’s findings suggest that, if we can determine precisely which part of the original network is relevant to the final prediction, scientists might one day be able to skip this expensive process altogether. Such a revelation has the potential to save hours of work and make it easier for meaningful models to be created by individual programmers and not just huge tech companies."

I believe that the techniques and research areas set out in this section will continue to be the main drivers of AI over the next five years, albeit increasingly deployed on the edge.

Glossary of Terms for AI, Machine Learning and Deep Learning

For a wider overview of the terms used in AI see the article "An Introduction to AI" also published in KDnuggets.

Artificial Intelligence (AI) is the field concerned with developing computing systems capable of performing tasks that humans are very good at. Examples include recognising objects, recognising and making sense of speech, and making decisions in a constrained environment.

Machine Learning is defined as the field of AI that applies statistical methods to enable computer systems to learn from data towards an end goal. The term was introduced by Arthur Samuel in 1959.

Supervised Learning: a learning algorithm that works with data that is labelled (annotated). For example, learning to classify fruit from images labelled as apple, orange, lemon, etc.
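A minimal supervised learner is a 1-nearest-neighbour classifier, which predicts the label of the closest labelled example. The fruit data below is invented for illustration. In place of images it uses two hypothetical numeric features: weight in grams and skin hue in degrees.

```python
import math

# Hypothetical labelled training data: (weight in grams, skin hue in degrees) -> fruit.
TRAINING = [
    ((150.0, 10.0), "apple"),
    ((170.0, 15.0), "apple"),
    ((140.0, 30.0), "orange"),
    ((160.0, 35.0), "orange"),
    ((60.0, 55.0), "lemon"),
    ((70.0, 60.0), "lemon"),
]


def classify(features):
    """1-nearest-neighbour: return the label of the closest labelled example."""
    _, label = min(TRAINING, key=lambda example: math.dist(example[0], features))
    return label


print(classify((155.0, 12.0)))  # apple
```

In practice, features on very different scales would normally be standardised first. Here the weight feature dominates the distance.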

Unsupervised Learning: a learning algorithm that discovers patterns hidden in data that is not labelled (annotated). An example is segmenting customers into different clusters.
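Customer segmentation is often done with k-means clustering. The algorithm alternates between two steps: assigning each point to its nearest centroid, and moving each centroid to the mean of its points. The sketch below is plain Lloyd's algorithm. The customer figures (annual spend in thousands, visits per month) are hypothetical. Initialising with the first k points is a simplification; libraries typically use smarter schemes such as k-means++.

```python
import math

# Hypothetical customers: (annual spend in thousands, store visits per month).
CUSTOMERS = [(1.0, 2.0), (30.0, 20.0), (1.5, 1.8), (29.0, 22.0), (2.0, 2.2), (31.0, 21.0)]


def kmeans(points, k, iterations=10):
    """Lloyd's algorithm, initialised with the first k points (a simplification)."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members (keep it if empty).
        for c, members in enumerate(clusters):
            if members:
                centroids[c] = tuple(sum(coords) / len(members) for coords in zip(*members))
    labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
    return centroids, labels


centroids, labels = kmeans(CUSTOMERS, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

The two segments that emerge are low-spend, infrequent customers (centroid about (1.5, 2.0)) and high-spend, frequent ones (centroid about (30.0, 21.0)). No labels were supplied to find them.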

Semi-Supervised Learning: a learning approach used when only a small fraction of the data is labelled.
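One common semi-supervised technique is self-training, also called pseudo-labelling. You fit a model on the few labelled points, use it to label the unlabelled points, and then refit on both. The sketch below does this with a nearest-centroid classifier on invented 1-D data. The function name and the data are illustrative assumptions.

```python
def self_train(labelled, unlabelled, rounds=3):
    """Self-training with a nearest-centroid classifier on 1-D data.

    labelled: list of (x, label) pairs; unlabelled: list of x values.
    """
    data = list(labelled)
    for _ in range(rounds):
        # Fit: one centroid (mean) per class from the currently labelled data.
        centroids = {}
        for label in {y for _, y in data}:
            xs = [x for x, y in data if y == label]
            centroids[label] = sum(xs) / len(xs)
        # Pseudo-label each unlabelled point with its nearest centroid's class,
        # then refit next round on the true labels plus these pseudo-labels.
        pseudo = [(x, min(centroids, key=lambda lab: abs(x - centroids[lab]))) for x in unlabelled]
        data = list(labelled) + pseudo
    return centroids, pseudo


centroids, pseudo = self_train([(0.0, "A"), (10.0, "B")], [1.0, 2.0, 8.0, 9.0])
print(centroids["A"], centroids["B"])  # 1.0 9.0
```

With only one labelled point per class, the class centroids start at 0 and 10. After the unlabelled points are pseudo-labelled and folded in, the centroids move to 1.0 and 9.0, towards where the data actually lies, without any extra annotation.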

Reinforcement Learning: an area that deals with modelling agents in an environment that rewards the agent for taking the right decisions. An example is an agent playing chess against a human being. The agent is rewarded when it makes a good move and penalised when it makes a bad one. Once trained, the agent can compete with a human being in a real match.

Active Learning: a form of semi-supervised learning. The main hypothesis in active learning is this: if a learning algorithm can choose the data it wants to learn from, it can perform better than traditional methods while using substantially less training data.
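A classic illustration of the active learning hypothesis is learning a 1-D threshold. Everything below an unknown cut-off is class 0, and everything at or above it is class 1. Suppose the learner always queries the point whose label is most uncertain given the labels seen so far. Here that is the middle of the unresolved range, and the learner needs only about log2(n) labels instead of labelling all n points. The sketch below assumes such a threshold exists. `oracle` stands in for a hypothetical human annotator.

```python
def active_learn_threshold(pool, oracle):
    """Learn a 1-D threshold classifier (label 1 iff x >= threshold) with few label queries.

    Assumes a single unknown threshold exists. `oracle(x)` returns the true label of x.
    """
    points = sorted(pool)
    lo, hi = 0, len(points)  # the first positive point lies somewhere in points[lo:hi]
    queries = 0
    while lo < hi:
        # The midpoint is the most uncertain point: the thresholds still consistent
        # with the labels seen so far split roughly evenly on either side of it.
        mid = (lo + hi) // 2
        queries += 1
        if oracle(points[mid]) == 1:
            hi = mid      # threshold is at or before points[mid]
        else:
            lo = mid + 1  # threshold is after points[mid]
    threshold = points[lo] if lo < len(points) else float("inf")
    return threshold, queries


threshold, queries = active_learn_threshold(range(1000), lambda x: int(x >= 637))
print(threshold, queries)  # threshold 637, found with at most 10 queries
```

Passively labelling the whole pool would cost 1,000 labels. Choosing the queries cuts this to at most 10.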

Deep Learning refers to the field of Neural Networks with several hidden layers. Such a neural network is often referred to as a Deep Neural Network.

Source for Image Below Ray Bernard Deep Learning to the Rescue, RBC, Inc

Neural Networks (also referred to as Artificial Neural Networks): biologically inspired networks that extract abstract features from the data in a hierarchical fashion. Neural Networks fell out of favour during parts of the 1980s and 1990s. Geoff Hinton was among those who continued to champion them, and he was derided by much of the classical AI community at the time. A key moment in the history of Deep Neural Networks (defined above) came in 2012. That year a team from the University of Toronto (Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton) entered the AlexNet network in the ImageNet competition. Their Neural Network reduced the error rate significantly compared with previous approaches that relied on hand-engineered features.

Hinton, Yoshua Bengio and Yann LeCun received the 2018 Turing Award for their work on Deep Learning. The three are referred to by some as the "Godfathers of AI".

Some of the main types of Deep Neural Network used today are:

Convolutional Neural Network (CNN): a type of neural network that uses convolutions to extract patterns from the input data in a hierarchical manner. CNNs are mainly used for data with spatial relationships, such as images. Convolution operations slide a kernel over the image to extract features that are relevant to the task.

Image Source Mathworks What are Convolutional Neural Networks?
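The sliding-kernel operation can be written directly. The sketch below computes a "valid" 2-D convolution followed by a ReLU. Technically the operation is a cross-correlation, which is what most deep learning libraries implement under the name convolution. The hand-picked vertical-edge kernel is an assumption for illustration. In a CNN, the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation): slide the kernel over every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


image = [[0, 0, 1, 1] for _ in range(4)]  # dark left half, bright right half
kernel = [[-1, 1], [-1, 1]]               # responds to dark-to-bright vertical edges
print(relu(conv2d(image, kernel)))        # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, where the intensity changes. Stacking such layers lets a CNN build edges into textures, parts and objects.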

Recurrent Neural Network (RNN): Recurrent Neural Networks, and in particular those built from Long Short-Term Memory cells (LSTMs), are used to process sequential data. Time series such as stock market data, speech, sensor signals and energy data have temporal dependencies. LSTMs are a type of RNN whose gating mechanism alleviates the vanishing gradient problem. This gives them the ability to retain information over both short and long spans of a sequence.

Image Source Colah's Blog Understanding LSTM Networks
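One time step of an LSTM cell can be sketched as follows. It uses the standard input, forget and output gates and a candidate cell update. Plain Python lists stand in for matrices. The `params` layout (a `(W, U, b)` triple per gate) is an assumption of this sketch rather than any library's format.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step on plain lists.

    params maps each gate name ('i', 'f', 'o', 'g') to a (W, U, b) triple:
    W is hidden x input, U is hidden x hidden, b has one entry per hidden unit.
    """
    def pre_activation(gate):
        W, U, b = params[gate]
        return [
            sum(w * xi for w, xi in zip(W[k], x))
            + sum(u * hk for u, hk in zip(U[k], h_prev))
            + b[k]
            for k in range(len(b))
        ]

    i = [sigmoid(z) for z in pre_activation("i")]    # input gate: how much new content to write
    f = [sigmoid(z) for z in pre_activation("f")]    # forget gate: how much old memory to keep
    o = [sigmoid(z) for z in pre_activation("o")]    # output gate: how much memory to expose
    g = [math.tanh(z) for z in pre_activation("g")]  # candidate content
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]  # additive memory update
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


# Toy run: hidden size 2, input size 1, all weights zero.
zero = {gate: ([[0.0], [0.0]], [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]) for gate in "ifog"}
h, c = lstm_step([1.0], [0.0, 0.0], [2.0, -4.0], zero)
print(c)  # [1.0, -2.0]: every gate outputs 0.5, so the old memory is halved
```

The cell state is updated additively (`c = f * c_prev + i * g`). This is the part of the design usually credited with letting gradients flow over long sequences.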

Restricted Boltzmann Machine (RBM): a stochastic neural network with one visible layer and one hidden layer. There are no connections between units within the same layer, hence "restricted". RBMs learn a probabilistic representation of the input and are trained using an approach named Contrastive Divergence. Once trained, the hidden layer provides a latent representation of the input.

Deep Belief Network: a stack of Restricted Boltzmann Machines in which the hidden layer of each RBM serves as the visible layer for the next. The network is trained greedily, layer by layer, with an unsupervised approach: each layer is trained before further layers are added. The resulting model can probabilistically reconstruct its input.

Variational Autoencoders (VAE): a probabilistic variant of autoencoders used for learning a latent representation of the input. A VAE consists of an encoder and a decoder. It is trained with a loss function that combines a reconstruction term with a regularisation term (a KL divergence). VAEs perform approximate (variational) inference in a latent Gaussian model.

Source Image Below keitakurita An Intuitive Explanation of Variational Autoencoders (VAEs Part 1)
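Two pieces make VAEs trainable with gradient descent:

- the reparameterisation trick, which writes a sample as z = mu + sigma * eps so that gradients can flow through mu and sigma;
- a closed-form KL divergence between the encoder's diagonal Gaussian and a standard normal prior.

The sketch below shows both. Using squared error as the reconstruction term is a simplifying assumption; it corresponds to a Gaussian likelihood up to constants and scaling. The encoder and decoder networks themselves are omitted.

```python
import math
import random


def reparameterise(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), where sigma = exp(log_var / 2).

    The randomness lives in eps, so z is a differentiable function of mu and log_var.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def vae_loss(x, x_reconstructed, mu, log_var):
    """Negative ELBO, assuming a squared-error reconstruction term."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_reconstructed))
    return reconstruction + kl_to_standard_normal(mu, log_var)


z = reparameterise([0.0, 1.0], [0.0, -2.0], random.Random(0))
print(kl_to_standard_normal([1.0], [0.0]))  # 0.5
```

An encoder output of mu = [1.0], log_var = [0.0] incurs a KL penalty of 0.5. An output of mu = 0, log_var = 0 matches the prior exactly and incurs none. This penalty keeps the latent space close to the prior, which is what makes sampling new data from it meaningful.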

Generative Adversarial Networks (GANs): considered in more detail above, GANs are a framework in which two networks, a generator and a discriminator, are trained against each other. For images, both are usually CNNs. The generator creates images with a deconvolutional (transposed convolutional) network, and the discriminator is a CNN that classifies images as real or fake.

The generator continuously generates data while the discriminator learns to distinguish fake data from real data. As training progresses, the generator becomes better at producing fake data that looks real. At the same time, the discriminator becomes better at telling the two apart, which in turn pushes the generator to improve. Once trained, the generator can produce realistic synthetic data. For example, a GAN trained on faces can generate very realistic images of faces that do not exist.
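The adversarial game is driven by two loss functions computed from the discriminator's probability outputs. The sketch below shows the standard binary cross-entropy loss for the discriminator and the "non-saturating" generator loss proposed in the original GAN paper by Goodfellow et al. The generator and discriminator networks, and their optimisers, are omitted.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push D(x) towards 1 on real data and D(G(z)) towards 0 on fakes.

    d_real, d_fake: discriminator probabilities for a batch of real and generated samples.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating loss for G: push D(G(z)) towards 1, i.e. fool the discriminator."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


print(round(discriminator_loss([0.5], [0.5]), 3))  # 1.386 (= 2 ln 2)
print(round(generator_loss([0.5]), 3))             # 0.693 (= ln 2)
```

The two objectives pull in opposite directions on the same quantity, D(G(z)). When the discriminator cannot tell real from fake and outputs 0.5 everywhere, both losses settle at the values printed above.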

Deep Reinforcement Learning: covered in more detail above, Deep Reinforcement Learning algorithms model an agent that learns to interact with an environment in an optimal way. The agent takes actions with its goal in mind, and the environment rewards or penalises it for good or bad actions respectively. In this way, the agent learns the behaviour that best achieves its goal. AlphaGo from DeepMind is one of the best-known examples. It learned to play the game of Go and in 2016 defeated Lee Sedol, one of the world's strongest professional players.

Image Source Mao et al. Resource Management with Deep Reinforcement Learning

Capsules: covered above, and still an active area of research. The representations a CNN learns are often hard to interpret. A capsule network, by contrast, is designed to extract specific kinds of representation from the input. For example, it preserves the hierarchical pose relationships between object parts. Another claimed advantage is that capsule networks can learn these representations from a fraction of the data a CNN would require.
