Jibo vs Echo will be decided with developers' hearts

Forget Alexa: when it comes to digital companionship, Jibo is aiming to make Amazon's connected speaker look like a faint Echo. The handiwork of a team of roboticists, animators, and speech recognition specialists, Jibo raised millions with its promise of social robotics; now the "family robot" is ready to embrace whatever new abilities third-party coders can come up with. I caught up with Jonathan Ross, Head of SDK Development at Jibo, for an exclusive preview of the new developer toolkit ahead of its public demonstration at SXSW this weekend.

I'd watched videos of Jibo in action and seen a non-functional shell in person, but this was my first time interacting with the robot for real. The unit Ross brought along had no standalone abilities; instead, it was there to physically demonstrate the apps – or, in Jibo-speak, "skills" – cooked up in the SDK on Ross' laptop.

It doesn't take much, in my experience, for a robot – or robotic device – to give at least the vague impression that it's present with you and attentive. Amazon's Echo, for instance, achieves that to a surprising degree simply with the use of its microphones, LEDs, and some sound-location calculations: that way, it can "point" its lights in the direction you're speaking from, making it seem more attentive in the process.
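
Amazon hasn't detailed its exact method, and the Echo uses a whole array of microphones, but the basic idea behind "pointing" at a voice can be sketched with just two: find the delay at which one microphone's signal best lines up with the other's (cross-correlation), then turn that time difference of arrival into an angle using sin θ = c·Δt / d. The TypeScript below is a minimal, hypothetical illustration; the function names, the 343 m/s speed of sound, and the brute-force correlation are assumptions chosen for clarity, not Amazon's implementation.

```typescript
// Hypothetical two-microphone direction-of-arrival sketch (not Amazon's code).

// Brute-force cross-correlation: returns the lag (in samples) at which
// signal b best lines up with signal a. A positive lag means b is a
// delayed copy of a, i.e. the sound reached microphone a first.
function bestLag(a: number[], b: number[], maxLag: number): number {
  let best = 0;
  let bestScore = -Infinity;
  for (let lag = -maxLag; lag <= maxLag; lag++) {
    let score = 0;
    for (let i = 0; i < a.length; i++) {
      const j = i + lag;
      if (j >= 0 && j < b.length) score += a[i] * b[j];
    }
    if (score > bestScore) {
      bestScore = score;
      best = lag;
    }
  }
  return best;
}

// Converts a lag into a bearing in degrees: 0 is straight ahead
// (broadside to the mic pair), positive angles point toward microphone a.
// Uses sin(theta) = c * dt / d.
function bearingDegrees(
  lagSamples: number,
  sampleRate: number,
  micSpacingM: number,
  speedOfSound = 343, // m/s in air at roughly room temperature
): number {
  const delaySeconds = lagSamples / sampleRate;
  // Noise can push the ratio slightly outside [-1, 1]; clamp before asin.
  const ratio = Math.max(-1, Math.min(1, (speedOfSound * delaySeconds) / micSpacingM));
  return (Math.asin(ratio) * 180) / Math.PI;
}
```

A single pair of microphones can't tell front from back, which is one reason real devices use more of them, along with more noise-robust estimators such as GCC-PHAT.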

Jibo, though, goes several stages beyond that. For a start it supports physical movement: the chest section can rotate, as can the head. Because of the angles at which each section meets, those rotations tilt the robot rather than just pivoting it and, when you factor in the smoothness of the servos, the result is a sinuous grace that helps belie the fact that Jibo is an automaton.

That gives Jibo an edge when it comes to everyday acceptance, Ross suggests, particularly when things go wrong. Because of how Jibo behaves, people are more forgiving of its mistakes than they are, say, when Siri struggles to understand you or has to resort to a web search. That behavior might mean showing signs of coyness, or being bashful.

When a robot glances down at the ground, its normally bouncing eye dipped sheepishly, and apologizes for not being able to help, you'd have to be the sort of person who shouts at kittens to stay mad at it.

You might expect, therefore, that sort of behavior to be challenging to work with. In reality, Ross explained to me, the SDK is targeted at as broad a pool of potential developers as possible, and the Jibo team has worked hard to make it as straightforward as they can to jump in and create skills for the robot.

Skills are therefore HTML web apps, but while web app coders are an obvious target, Jibo is also courting game developers. In fact, says Ross, whose background includes game production, animation in games translates very readily to physical motors. In that respect, Jibo is a lot like a non-playable character.

Jibo supports two kinds of movement. Static movements can be customized by each skill developer, but procedural movements, which use the company's own natural kinesthetic actions, are easier to implement.

Developed by a team that includes former Disney animators, these actions are what help Jibo appear more organic. It's the difference between a robotic arm on a production line lunging out and jabbing at a weld-point on a car, and Jibo gently rotating to glance at you when you walk into its field of view.

The end position is just as accurate in both cases, but Jibo's movements include gentle acceleration and deceleration at the beginning and end; the potential for a slight overshoot that mimics our own muscle control; and the eye glancing first, with the head catching up a split-second later. Movements can vary depending on the task: a video-calling app might demand controlled, precise pans and tilts to keep you cleanly in frame, whereas more casual skills could have Jibo glance around lazily, moving only his eye rather than his whole head.
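
Those movement qualities map neatly onto standard animation easing curves. In the hedged sketch below (the curves, parameter values, and the `glancePose` helper are illustrative assumptions, not Jibo's SDK), an ease-in-out curve gives the eye a gentle start and stop, while the head uses the widely used "easeOutBack" curve, which decelerates into the target with a small overshoot before settling, and starts only after a short delay so the eye leads.

```typescript
// Illustrative easing curves; not Jibo's actual motion code.

// Ease-in-out cubic: starts and ends with zero velocity.
function easeInOutCubic(t: number): number {
  return t < 0.5 ? 4 * t * t * t : 1 - Math.pow(-2 * t + 2, 3) / 2;
}

// "easeOutBack": decelerates into the target, overshooting slightly
// before settling. overshoot = 0 gives a plain ease-out cubic.
function easeOutBack(t: number, overshoot = 1.70158): number {
  const c3 = overshoot + 1;
  return 1 + c3 * Math.pow(t - 1, 3) + overshoot * Math.pow(t - 1, 2);
}

function clamp01(x: number): number {
  return Math.min(1, Math.max(0, x));
}

// Hypothetical glance: the eye (treated as an angle for simplicity) moves
// straight away; the head starts headDelayMs later and overshoots slightly.
function glancePose(
  fromDeg: number,
  toDeg: number,
  elapsedMs: number,
  eyeDurationMs: number,
  headDurationMs: number,
  headDelayMs: number,
): { eyeDeg: number; headDeg: number } {
  const eyeT = clamp01(elapsedMs / eyeDurationMs);
  const headT = clamp01((elapsedMs - headDelayMs) / headDurationMs);
  return {
    eyeDeg: fromDeg + (toDeg - fromDeg) * easeInOutCubic(eyeT),
    headDeg: fromDeg + (toDeg - fromDeg) * easeOutBack(headT),
  };
}
```

Setting `overshoot` to 0 removes the overshoot entirely, which is closer to the controlled pans a video-calling skill might want.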

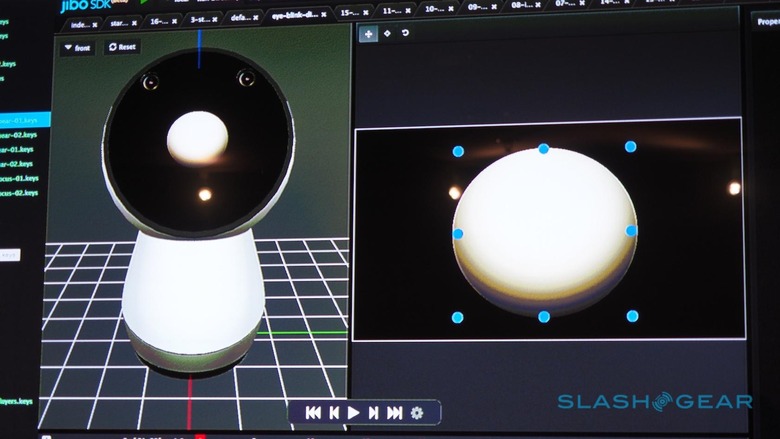

Given how instrumental kinesthetic movements are to the companion robot's overall experience, it'd be a lot to expect each skill developer to build them from scratch, so the SDK does the hard work. An animation tool shows a 3D representation of Jibo, which can be dragged from position to position, with a keyframe set at each. The SDK automatically interpolates between those points, working out the right kinesthetics for each transition.
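
The article doesn't say exactly how the SDK interpolates between keyframes. One simple possibility, sketched here under that assumption, is to find the pair of keyframes surrounding the current time and blend each rotating section with a smoothstep curve, so the motion eases in and out of every pose. The `PoseKeyframe` shape and the `chestDeg`/`headDeg` names are hypothetical.

```typescript
// Hypothetical keyframe format: a time plus an angle per rotating section.
interface PoseKeyframe {
  timeMs: number;
  chestDeg: number;
  headDeg: number;
}

interface Pose {
  chestDeg: number;
  headDeg: number;
}

// Smoothstep (3t^2 - 2t^3): zero velocity at both ends of each segment.
function smoothstep(t: number): number {
  return t * t * (3 - 2 * t);
}

function lerp(a: number, b: number, t: number): number {
  return a + (b - a) * t;
}

// Pose at timeMs for keyframes sorted by time; the first and last poses
// are held before and after the animation.
function poseAt(keys: PoseKeyframe[], timeMs: number): Pose {
  if (keys.length === 0) throw new Error("need at least one keyframe");
  const first = keys[0];
  if (timeMs <= first.timeMs) return { chestDeg: first.chestDeg, headDeg: first.headDeg };
  for (let i = 1; i < keys.length; i++) {
    const prev = keys[i - 1];
    const next = keys[i];
    if (timeMs <= next.timeMs) {
      const t = smoothstep((timeMs - prev.timeMs) / (next.timeMs - prev.timeMs));
      return {
        chestDeg: lerp(prev.chestDeg, next.chestDeg, t),
        headDeg: lerp(prev.headDeg, next.headDeg, t),
      };
    }
  }
  const last = keys[keys.length - 1];
  return { chestDeg: last.chestDeg, headDeg: last.headDeg };
}
```

Smoothstep brings the robot to a stop at every keyframe; a spline such as Catmull-Rom would instead carry velocity through intermediate poses, at the cost of possible overshoot between keys.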

A second interface controls the morphable eye, allowing it to be bounced, squeezed into different shapes, and generally positioned. Finally, there's control over the multicolor LED ring that runs around the lower body.

In fact, Ross was able to create a whole new animation for Jibo – turning, glancing down, and blinking, while the lights changed to red – in just a couple of minutes. That could then be pushed over to the physical robot in demonstration or, much more rapidly, played in the simulator that's included in the SDK.

The behavior system is built on the same technology used to code the non-playable characters in Halo, and effectively serves up APIs for skills. Developers will be able to easily tell Jibo to look at a certain point, go into an idle mode with basic movements, track someone around the room, look around itself, or play other animations, all without needing to know the precise servo controls each one requires.
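
Halo's character AI is widely associated with behavior trees, so, assuming that's the technology Ross means, here is a minimal behavior tree. A selector tries its children until one doesn't fail, a sequence runs its children until one doesn't succeed, and leaves wrap conditions or actions. The node classes, the world state, and the `lookAtPerson`/`idle` actions are hypothetical stand-ins for the high-level calls the article describes, not Jibo's actual API.

```typescript
// Minimal behavior tree sketch; node types and actions are hypothetical.
type Status = "success" | "failure" | "running";

interface BtNode {
  tick(): Status;
}

// Runs children in order, stopping at the first one that doesn't succeed.
class Sequence implements BtNode {
  readonly children: BtNode[];
  constructor(children: BtNode[]) {
    this.children = children;
  }
  tick(): Status {
    for (const child of this.children) {
      const status = child.tick();
      if (status !== "success") return status;
    }
    return "success";
  }
}

// Tries children in order, stopping at the first one that doesn't fail.
class Selector implements BtNode {
  readonly children: BtNode[];
  constructor(children: BtNode[]) {
    this.children = children;
  }
  tick(): Status {
    for (const child of this.children) {
      const status = child.tick();
      if (status !== "failure") return status;
    }
    return "failure";
  }
}

// Leaf node wrapping a condition check or an action.
class Leaf implements BtNode {
  readonly fn: () => Status;
  constructor(fn: () => Status) {
    this.fn = fn;
  }
  tick(): Status {
    return this.fn();
  }
}

// Example: look at a visible person; otherwise fall back to idling.
const world = { personVisible: false };
const log: string[] = [];
const tree = new Selector([
  new Sequence([
    new Leaf(() => (world.personVisible ? "success" : "failure")),
    new Leaf(() => {
      log.push("lookAtPerson"); // hypothetical high-level action
      return "success";
    }),
  ]),
  new Leaf(() => {
    log.push("idle"); // hypothetical idle behavior that keeps running
    return "running";
  }),
]);
```

The appeal for skill authors is that each leaf can call a high-level action while the tree decides which one applies on every tick.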

Jibo doesn't just offer a pantomime of interaction, however. Just as important as its lifelike movements are its abilities to recognize speech and faces, and then speak up in response. On the vision side, the two cameras in its face give the robot a sense of the space around it, along with a memory of where people were and how likely they are to still be in the same place.
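
Ross didn't explain how Jibo models the likelihood of someone still being where they were last seen. A simple assumption is exponential decay: confidence halves for every fixed interval since the last sighting, and anyone below a threshold is dropped. The `Sighting` type, half-life, and threshold below are illustrative, not taken from Jibo.

```typescript
// Hypothetical record of where a person was last seen.
interface Sighting {
  personId: string;
  bearingDeg: number;
  lastSeenMs: number;
}

// Confidence halves for every halfLifeMs elapsed since the last sighting.
function presenceConfidence(s: Sighting, nowMs: number, halfLifeMs: number): number {
  const ageMs = Math.max(0, nowMs - s.lastSeenMs);
  return Math.pow(0.5, ageMs / halfLifeMs);
}

// People probably still where they were last seen, most confident first.
function likelyPresent(
  sightings: Sighting[],
  nowMs: number,
  halfLifeMs: number,
  threshold: number,
): Sighting[] {
  return sightings
    .map((s) => ({ s, c: presenceConfidence(s, nowMs, halfLifeMs) }))
    .filter((x) => x.c >= threshold)
    .sort((x, y) => y.c - x.c)
    .map((x) => x.s);
}
```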

Importantly, developers don't get access to the cameras: they can't turn Jibo into a secret spy. Instead, they get Jibo's interpretation of the environment around him.

That includes recognizing multiple targets in the same space, whether by sound, movement, or face. Jibo's head also has touch sensors – Ross himself has a habit of patting the robot while talking about it, as if it were a child or a puppy.

How Jibo reacts depends on where in the room it spots a potential playmate. If you walk in through the door, it might merely acknowledge you by turning to look and nodding. Fuller interactions are expected to take place at a typical conversational distance, which will be tuned to the social norms of whatever region it's operating in.
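
Reacting differently by distance (the subject of the field known as proxemics) can be expressed as a few thresholds held in a swappable per-region profile. This sketch assumes a distance estimate is already available; the profile fields and threshold values are placeholders for illustration, not figures from Jibo or from any particular region's norms.

```typescript
// Hypothetical region-tunable distance profile, in meters.
interface ProxemicProfile {
  conversationalMaxM: number; // at or inside this: full interaction
  noticeMaxM: number; // at or inside this: turn and nod
}

// Placeholder values for illustration only.
const DEFAULT_PROFILE: ProxemicProfile = { conversationalMaxM: 1.5, noticeMaxM: 6 };

type Reaction = "converse" | "acknowledge" | "none";

function reactionFor(distanceM: number, profile: ProxemicProfile = DEFAULT_PROFILE): Reaction {
  if (distanceM <= profile.conversationalMaxM) return "converse";
  if (distanceM <= profile.noticeMaxM) return "acknowledge";
  return "none";
}
```

Keeping the numbers in data rather than code means a regional tweak is a different profile object, not a different behavior.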

Behaviors will also change depending on who is around. Jibo remembers his "loop" or "crew" – the people he interacts with most frequently – and responds accordingly. Individuals are recognized by their voice prints, and that same system can be used to control access to skills so that, for instance, your kids couldn't use Jibo to adjust your connected thermostat.
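
Gating a skill on who is speaking could look something like the sketch below, which assumes a hypothetical voice-print matcher that returns the best-matching member of Jibo's "loop" plus a confidence score. The roles, policy shape, and thresholds are all illustrative assumptions.

```typescript
type Role = "adult" | "child" | "guest";

// Output of a hypothetical voice-print matcher.
interface SpeakerMatch {
  memberId: string | null; // best-matching loop member, or null if unknown
  confidence: number; // 0..1
}

interface LoopMember {
  id: string;
  role: Role;
}

interface SkillPolicy {
  skillId: string;
  allowedRoles: Role[];
  minConfidence: number;
}

function canUseSkill(match: SpeakerMatch, loop: LoopMember[], policy: SkillPolicy): boolean {
  // Unrecognized or low-confidence voices are treated as guests.
  let role: Role = "guest";
  if (match.memberId !== null && match.confidence >= policy.minConfidence) {
    const member = loop.find((m) => m.id === match.memberId);
    if (member) role = member.role;
  }
  return policy.allowedRoles.includes(role);
}
```

Falling back to the least-privileged role means the check fails closed: a misheard parent gets asked to try again, rather than a child getting access.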

Given the attention Amazon has received in recent months with its rapidly improving Echo speaker, I was curious whether the Jibo team had considered integrating with the Alexa SDK rather than continuing in-house development. According to Ross, however, there's more difference between his robot and Amazon's chatty speaker than you might think.

"There's a lot of technology we're building that Alexa doesn't provide," he explained, particularly when it comes to the speed of interaction. Whereas Amazon relies on the cloud for the bulk of its voice recognition, Jibo has a combination of local and cloud abilities, both off-the-shelf and proprietary.

The "Hey Jibo" wake command, for instance, is handled locally so that there's never a lag or delay while you wait for a response; similarly, there are other basics that can be done without an internet connection at all. The team's focus is on precise and swift social interaction, Ross says, and frankly the cloud is just too slow.

For developers, speech has several layers, depending on the complexity of what each skill hopes to achieve. Typical interactions with Jibo are coded through a window-based chat interface, much like iMessage; actually speaking out loud is more cumbersome, Ross points out, when you're trying to develop and debug.

However, there's more flexibility if it's specifically required. The SDK supports text-to-speech markup, effectively indicating within a body of text which parts should be given emphasis, how unusual words or names should be pronounced, or even if Jibo should do impressions of other characters.
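
The article doesn't give the syntax of Jibo's own speech markup, so as a stand-in this sketch targets the W3C Speech Synthesis Markup Language (SSML), a standard that covers the same three ideas: `<emphasis>` for stress, `<phoneme>` for pronunciation, and `<prosody>` pitch and rate changes for a character "voice". The `SpeechSegment` type and `toSsml` helper are hypothetical, and how faithfully a given speech engine honors each element varies.

```typescript
// Escape text so it is safe inside SSML, which is XML.
function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical building blocks for a line of dialogue.
type SpeechSegment =
  | { kind: "text"; text: string }
  | { kind: "emphasis"; text: string }
  | { kind: "phoneme"; text: string; ipa: string }
  | { kind: "voice"; text: string; pitch: string; rate: string };

function toSsml(segments: SpeechSegment[]): string {
  const body = segments
    .map((seg) => {
      const text = escapeXml(seg.text);
      if (seg.kind === "emphasis") return `<emphasis level="strong">${text}</emphasis>`;
      if (seg.kind === "phoneme") {
        return `<phoneme alphabet="ipa" ph="${escapeXml(seg.ipa)}">${text}</phoneme>`;
      }
      if (seg.kind === "voice") {
        // A pitch/rate shift keeps the robot's own voice while "doing" a character.
        return `<prosody pitch="${escapeXml(seg.pitch)}" rate="${escapeXml(seg.rate)}">${text}</prosody>`;
      }
      return text; // plain text
    })
    .join(" ");
  return `<speak>${body}</speak>`;
}
```

Shifting pitch and rate, rather than swapping in recorded audio, is the markup equivalent of the bedtime-story approach: one voice, performed differently.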

The latter is particularly important given the emphasis on the robot having its own distinct personality. Though skills will support loading individual audio files, effectively allowing Jibo to sound like anything the developers want, best practice will be to keep the robot's own voice. Think of the difference between a father reading a bedtime story and "performing" the voices of different characters, versus an audiobook where each character gets a unique voice actor.

Similarly, though the display can show anything a skill might demand – a map showing the location of the Uber driver Jibo has summoned for you, perhaps, or a to-do list you've dictated from across the room – developers will be encouraged to maintain consistency with the core interface. That means showing the bouncing eye as much as possible, or integrating it into the custom UI of each skill: the way it morphs into weather symbols is a good example.

Although the Jibo SDK won't be publicly released until early April, the company has already held a couple of hackathons, offered as perks to backers of the original crowdfunding campaign. There's been a surprising range of skills developed at those events, too, Ross says, and though games and storytelling were common applications, the way they've been implemented has helped highlight how Jibo differs from a games console or an e-reader app.

One developer, for instance, turned Jibo into a poker dealer, with players sitting around the robot in a circle. Jibo would look to each in turn, dealing out cards to their smartphones, something which "takes advantage of his unique form factor," Ross says.

Another coder brought along a hardware accessory: an Arduino-powered tail, which could wag when Jibo spotted someone he knew. Although the focus for the SDK is initially on software skills, the team is open to hardware mods and customization, and Jibo can communicate with other connected devices nearby using WiFi.

Interest from larger players in the smart home and entertainment fields has been growing since Jibo's 2014 reveal, Ross told me, though he declined to mention any specific names. The way is being paved for a huge range of interactions, though, including a notification system built into Jibo's core personality. Owners will be able to tell the robot to silence all notifications, or dig into them more granularly on an app-by-app basis via the companion phone software. Eventually, Ross suggests, Jibo could be the hub of the connected home.
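
The two levels of notification control Ross describes, a global mute plus per-app choices, amount to a simple precedence rule. This sketch assumes the companion app stores a mute-all flag, per-skill overrides, and a default; all of the names are hypothetical.

```typescript
// Hypothetical notification preferences set from the companion app.
interface NotificationPrefs {
  muteAll: boolean;
  perSkill: Map<string, boolean>; // skillId -> allowed?
  defaultAllowed: boolean; // used for skills without an override
}

interface SkillNotification {
  skillId: string;
  message: string;
}

// Global mute wins; otherwise a per-skill choice beats the default.
function shouldAnnounce(n: SkillNotification, prefs: NotificationPrefs): boolean {
  if (prefs.muteAll) return false;
  return prefs.perSkill.get(n.skillId) ?? prefs.defaultAllowed;
}
```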

Skills will be distributed via an app store, and the company is taking a proactive, Apple-like stance on ensuring quality. Each title will go through an approval process before it's included, covering not only coding best practice but also whether it's in harmony with Jibo's personality, which websites it connects to, and what personal data is gathered and shared.

Paid and free skills will be allowed, but nothing ad-supported. Jibo, the team reasons, is meant to be a friend, not a part-time salesman.

The first Jibo hardware will ship midway through this year, initially to the early adopters who bought during the two rounds of crowdfunding. A date for general sales hasn't been announced yet, nor has retail pricing. Of the more than 6,500 preorders, Ross tells me, around a third come from developers who have expressed interest in creating skills themselves; given the SDK doesn't require the hardware, the potential pool of apps for the robot could be much larger.

It'd be easy to prematurely discount Jibo as just a toy – a geekier, shaved Furby, perhaps – or to underestimate the value that the physicality of its form factor brings to the overall experience. Company founder Dr. Cynthia Breazeal, a huge Star Wars fan, once described Jibo as the result of R2-D2 and Siri having a baby, though I think that still underestimates its enduring appeal.

When I talk to Siri, I know I'm really talking to the cloud. When I ask something of Alexa, though, even though Echo is nothing more than a flashing totem, I feel its presence in the space we share. I'm not just shouting out into the void.


Physical embodiment has a real contribution to make in how comfortable we are with our digital companions and, as a result, how much we will make use of them. When Jibo wiggles his belly and giggles, shrugs self-consciously when it gets something wrong, or simply glances up at you when you walk past, it blurs the line between "tool" and "friend". You don't forget it's a digital being, no, but those anthropomorphic cues make it far more approachable.

Siri you might prize for its usefulness in managing your schedule. Alexa may impress you with her knowledge and accuracy. Jibo, though, has the potential to win your heart.
